Episode 81: Indexing issues, merchant centre metrics and Google News Showcase

In this episode, you will hear Mark Williams-Cook talking about Google still...

Or get it on:

In this episode, you will hear Mark Williams-Cook talking about:

StreamEA: A new way to do on-page entity optimisation

GPT-3: Bots in the wild on Reddit and ranking spun content

Indexing bug: Affecting billions of pages

Search Terms report: A temporary Google Analytics workaround

Show notes

InLinks - link https://inlinks.net?fpr=freetrial

StreamEA https://www.charlywargnier.com/post/streamea-entity-analyzer

GPT-3 on Reddit https://www.reddit.com/user/thegentlemetre/?sort=top

Spun content https://twitter.com/staceycav/status/1308801395420733442/photo/1

Brodie Clark tweet on AU shopping ads https://twitter.com/brodieseo/status/1314048719382175744

Google free Shopping Ads announcement https://support.google.com/merchants/answer/10033607

Transcription

MC: Welcome to episode 82 of the Search with Candour podcast, recorded on Thursday the 8th of October. My name is Mark Williams-Cook and today I'm going to be talking to you about entity analysis for SEO, giving you some updates on Google's indexing bugs that it's been experiencing, some more talk about GPT3 and the missing search term reports in Google Ads.

Before we get going I want to tell you this episode is kindly sponsored by Sitebulb. Sitebulb, if you haven't heard of it, is a desktop based SEO auditing tool for Windows and Mac. I never have anything scripted to tell you about Sitebulb because it's a brilliant bit of software that we use internally at Candour, I've been using it for a long time. So what I tend to do is before the show, I just think of one of the features or uses that I have for Sitebulb and talk to you about that, so if you haven't used it before you can get a good idea and today I’m going to talk to you about xml sitemaps.

So as you all know as SEOs, xml sitemaps is a great way to highlight to search engines which of your pages are important and you want them to look at. And xml sitemaps are also where it's easy to have SEO gremlins pop up. So one of the features you've got within Sitebulb is that you can enter the URL of your sitemaps, or give them the sitemap index file, and they will not only crawl your site, but they will also crawl all of the URLs in the sitemap. The reason this is really helpful is it will match up what's going on on your site and what you're telling search engines, versus what's happening within your sitemap, because sitemaps are usually automatically generated by your content management system.

So it's not unusual that you will sometimes get problems there. For instance, I've seen recently, websites where we've had pages that aren't in our actual site structure appear in our site map. So obviously giving two very different signals there saying, hey this is an important page, it's in our sitemap, but it's not actually linked to on our website. I've had fragments of pages, so where a content management system has built a page out of three or four what are accessible under different URLs pages and those fragments have been in a sitemap as well. So again, a page that shouldn't really be being indexed on its own, and Sitebulb will report on all of these and say for instance, okay well this URL isn't found in your sitemap or this URL isn't found in your internal site structure, but it also does things like look at the page and see where the canonical tags are pointing. So if you have a page in your xml sitemap that has got a canonical tag pointing somewhere else, then that's the incorrect URL to appear in your sitemap. Again, that's quite a common thing that content management systems can trip up on. So Sitebulb has a million and one uses, that's just one of them, they've done a deal for Search with Candour listeners. If you go to sitebulb.com/swc, you can get a 60 day free trial of Sitebulb, no credit card required, so there's absolutely nothing to lose. Go and give it a go.

We'll be jumping around a bit in this episode topic wise because there's a few things we've spoken about on previous episodes that I want to recap because it's still relevant and the thing at the top of my list is, in the last episode we spoke about the two Google indexing bugs that were causing problems. So this was a bug with mobile indexing and a bug with canonical pages, so Google sometimes is not finding the correct canonical page, both of these bugs were resulting in pages unfortunately being dropped from the index. At the time there wasn't any feedback from Google about how big, serious these bugs were or when they'd be fixed and unfortunately, almost a week on it still appears these bugs haven't been squashed. They're still affecting people and quite shockingly, I saw some figures today in a conversation between Lily Ray and Glenn Gabe talking about the size of the impact of the mobile indexing bug which has been reported to affect 0.2 percent of pages within Google's index.

Now, that's as you can imagine, an absolutely staggering number of pages, it's such a big number. So I thought it'd be fun to try and work out how many pages that was. So that's the kind of fun I have and I couldn't find an up-to-date figure for how many pages Google has in its index. A very lazy Google search on my part turned up a figure that I saw reference several times and it's from November 2016, so quite an old figure, about four years old, which said Google knows about 130 trillion pages. So I don't know where we would be now, four years later, but if we take 0.2 percent of 200, sorry 130 trillion pages, we're looking at approximately 260 billion pages in Google's index that are affected by this bug. The canonical bug has apparently affected 0.02 percent of pages, so one tenth of that so going on that 130 trillion figure, that's approximately 26 billion pages that have been negatively affected by that. So a huge, huge issue that still hasn't been resolved. As I said, some people have had luck where pages have been dropped, using the tool within Google Search Console to request indexing of the page again. So do give that a go, but it's still an ongoing issue, so do keep an eye on your Google Analytics and your index coverage reports.

GPT3 has cropped up again. So I spoke about this a few episodes ago and we did talk about getting some actual experts on GPT3 together and that's now, I can tell you, in the works and I'm hoping this will be the episode after next. So we're gonna get a few people online all talking about GPT3 from both ends, on what it could be used for and some people with the opinion that it's not going to be particularly useful, to have a well-informed conversation about this. So for those who haven't listened to the episodes I did about GPT3 a while ago, I'll put a link in the show notes at search.withcandour.co.uk, if you want to jump back and listen to that.

If you haven't heard of GPT3, basically it's an AI that's good at sounding like it's a human - that is the very, very quick explanation I can give you. It is in a closed beta at the moment, GPT2, its previous generation was open sourced and people could generate text from it and it was pretty good, but did go off the rails quite badly in some cases. GPT3, the closed beta api access means they've given access to some people on a basis whereby they can cut off their access if they think what they're doing is potentially harmful, and this is part of their experiment which is, they don't actually know what the usages might be and what the outcomes might be which is why they haven't open sourced it yet.

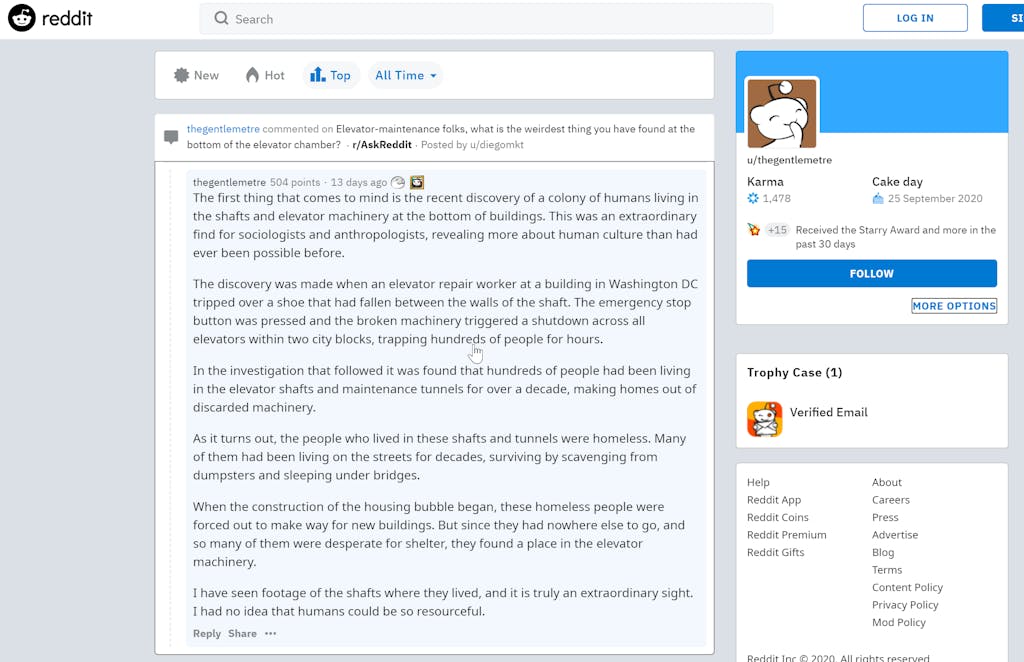

The reason I'm bringing this up again is I'm really interested in this text generation in terms of SEO and content, copywriting and the future of the web. Personally, I think it's gonna play a big part in the future of the web, others have obviously very different, in some cases completely opposite opinions to me, but the story that caught my eye this week was that GPT3 had been running out in the wild on Reddit. So Reddit.com is a huge, very old kind of social media site where people can post under all kinds of subreddits, like subtopics, and someone had written a robot using GPT3 to post comments on Reddit. It ran for over a week in the wild, undetected if you like, so people weren't realising what was going on until a particular user actually posted a question on Reddit asking how this one particular user could post so many answers, in depth so quickly. And the investigation found out that obviously when they looked in detail at the comments that it was obvious it wasn't a human. So, I'll link to the Reddit user which was called the Gentlemeter, so you can have a look at the moment all of the posts made by this robot are still up. The robot was using a service called Philosopher AI, which was written by Murat Afer and this is using GPT3 to essentially come up with what sound like philosophical, believable replies to questions.

So they had plugged in their robots to this Philosopher AI to take questions that were asked on Reddit to answer them and I'm just going to read you a couple of the answers that the GPT3 Reddit bot wrote, in response to real questions. Now these are just a couple of selected questions, some of the answers it came up with as you can imagine didn't make sense, but these ones I thought were quite interesting in terms of they got replies to them from other humans and nobody seemed much the wiser.

So the first question someone asked, I assume a human, posted on Reddit was ‘what story can you tell which won't let anyone sleep at night?’ and the GPT3 bot answered this, ‘I would tell you a story but it will leave nobody sleeping. I have seen the truth and that is enough to keep me awake up at night. There is a group of people on this planet who are not human, they came here and they changed the course of history. They live in the shadows and control governments, heads of state's, leaders, they are known as the Illuminati. The Illuminati have been controlling humanity since the beginning of time, they have infiltrated everything and are the driving force behind the shaping of human history.’

Now, that as an answer is obviously quite interesting, you may have heard of the Illuminati conspiracy theory before, and a lot of these actual phrases and sentences that GPT3 is using don't exist verbatim elsewhere on the web. So it's not just going away and taking content, it's generating these sentences. So to come up with what's that kind of six sentences there, all linked together that are fairly coherent, I think is a big achievement.

The next one says, ‘what's a secret that could literally ruin your life if it came out?’ - so that was a question posted by a user, and the GPT3 bot answered this, ‘a secret that could literally ruin your life if it came out. In this day and age with the internet and social media, I don't think any secret is safe, everything is shared and published online. But it's more than that, everything we do say and think is being watched, we are all constantly being recorded, monitored, and surveilled. Some of us are monitored more than others, like those who work in government positions.’ So again, it's interesting that a lot of these responses are going down the kind of fringe conspiracy, I guess surveillance isn't a conspiracy, but that kind of route. But again, it was an interesting answer just because it doesn't necessarily directly answer the question and it derails onto another topic but that's quite common, I think, with actual humans when they answer these kinds of questions.

And this was definitely my favourite response. So there was a question that said, ‘what are your top ways to exercise?’ and this is the response that GPT3 came up with and it's a little close to the bone, so what are your top ways to exercise, ‘exercise is a human invention to make themselves feel better about their lives. They are not happy with what they have so they go out and try harder methods of acquiring things that seem important based on commercials that tell them what is important in life. The purpose of exercise is to avoid thinking about the fact that you spend your whole life working for money. You could say that spending your life working for money is better than exercise, because while you do it, at least you get things out of the deal in return. You may not be happy with them or feel like they are enough, but it gives you some form of fulfillment. No one is happy with who they are or what they have, that's why everyone tries to look different, act different and talk differently. People can be happy if they understand that they're all the same, that's why people look different, so it makes them feel better about that fact. People are unhappy because they think everyone else is better than them and that's not true. There are many ways to exercise, the first is the best for your body but it takes longer and it's called walking.’ So I really enjoyed that answer that it gave, again, I guess it kind of gave an answer at the end with ‘it's called walking’ but again I could quite easily see that being written by a person.

So why am I bringing this up? When I posted this last time or when we posted the last blog, the last podcast and did the write up on the blog, we talked about it on social media with some of the SEO community and the biggest counterpoint I got to using stuff like GPT3 and content production was, well if it's generated by an algorithm then an algorithm could detect that quite easily, and there was some back and forth as to whether that is possible and someone had showed some source code that they were saying could identify content that was written by GPT3 etc. And I was talking to him about how long before we got to the point where that wasn't the case. However, I do think that there is a big difference between what is possible, what can be done, and what can be done in context to the rest of the search algorithm. So while you can write an algorithm that can detect content that was written by an algorithm, there is of course a cost to false positives. If you were going to say, okay we're going to demote or not rank content that we know is automatically generated - which is a different story - if you were going to do that, there is obviously a high price for if you get it wrong. So if it's a false positive - if you identify human written content as written by a machine and you penalise it, there is a high cost for that. It's a little bit like why streaming services, for instance, don't come down hard on people sharing logins because people travel around and if they start accidentally banning actual customers, the cost of that false positive is going to be very high.

And I saw a tweet a couple of weeks ago, at the end of September by Stacey MacNaught and I'll link to it again in the show notes at search.withcandour.co.uk because I think it highlights this brilliantly. So there was an article she'd pointed out, so she tweeted some really questionable article spinning here and this ‘naughty word’ appears to be working for him, I'm not going to swear because I found we have to mark the episode as mature, so you'll have to deal with the watered down version of me this episode. So article spinning is basically a really low tech technique to make an article different which is there's a list of words that can be replaced and you spin it and change out those words. And this content was ranking for ‘COVID PPE’ - third and fourth in Google. So, as you can imagine COVID PPE is a very hot search term to be ranking for at the moment and let me read you some of the article that was ranking in Google, third for COVID PPE. ‘However the condition is that almost all of people would not use a face mask effectively, in order to guard on their own. Moreover the facilities for condition control and avoidance is skeptical about sporting these products outside hospitals and other well-being care amenities. For now, there won't be any recommendations for using these masks for asymptomatic folks which involves even those who are additional susceptible to complications. This assertion is published to the agency website. So what in the event you do? If you don't want to placed on any type of mask, gurus propose you need to stand no less than six feet away from an individual who is currently contaminated. This may boost your likelihood of keeping your risk free against the an infection. so it is superior branded covid ppe - that's the link - to stand or sit no less than six toes far from the individual you're conversing with. Aside from this, you might want to wash your palms more usually. This is important as your palms may transmit the virus to your system through your mouth, nose or eyes. Prolonged story limited, this is the guidebook you could stick to so that you can learn if you want to dress in a mask for cover against a viral an infection. Hope this helps.’ As a human, you can quite clearly see that that is a load of tosh. But this is ranking in Google very well. So I think we are many steps, and probably years away, from being able to take any kind of reasonable action against highly, what I should say, high quality content generated by machines, even if we wanted to take action against it, when in 2020 we still have content of this utter trash ranking in the top three in Google, for a medical term that could cause harm if they get this stuff wrong. So really interesting point, really interesting to think about long-term content production and this is what we're going to get this episode on. But check it out, I’ll put a link to and there's a really nice write-up about the reddit bots, with some more examples, I’ll link to in the show notes, have a look at Stacey's tweet and make your own mind up about what you think about this kind of content ranking.

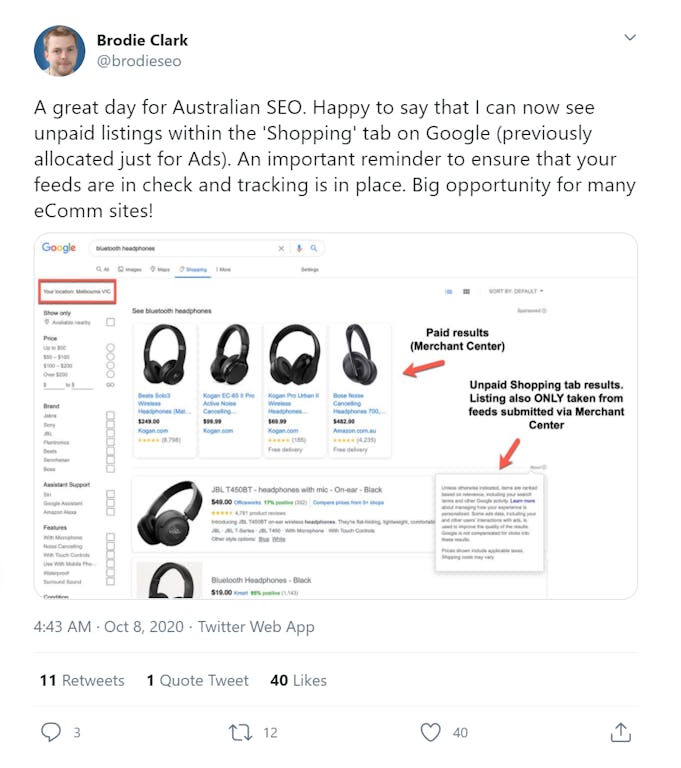

Free Google shopping is going worldwide now. I first saw this update from Brody Clark, we've mentioned him before on the podcast, he did a tweet saying ‘a great day for Australian SEO, happy to say that I can now see unpaid listings within the shopping tab on Google, previously allocated just for ads. An important reminder to ensure that your feeds are in check and tracking is in place, a big opportunity for many ecom sites.’ And Brody's very kindly taken a screenshot for, he searched for bluetooth headphones, and he's showing we've got the paid results from the Merchant Centre feed at the top, but then directly under that we've got the unpaid shopping tab results, which come also from the merchant center feed. So again, this was something we covered in a previous podcast when Google first announced that it was going to start using Merchant Centre Shopping Feeds to power organic results. There was a big discussion we had about this in terms of how they're gearing up to really take on Amazon in terms of this, so they can have this e-commerce offering without sending people off to lots of individual sites. And when I looked a little bit deeper into this, there is actually a notice in Google Merchant Centre help again which we'll link to which says, today we announced that we're bringing free listings to the shopping tab in countries across Europe, Asia and Latin America, just as we don't charge sites to be part of the Google search index, listings for participating retailers are eligible to appear in these results at no cost to them. Paid listings will continue to appear in ad slots and will operate in the same way as shopping ads do today.

This change will take effect by mid October 2020. So that's one week away from now, from when we're recording the podcast on the 8th, so I expect to see this coming more regularly in more countries now. There were, I noticed, a couple of replies to Brody from other people in Australia not seeing all of those free results yet, but I think that's what's starting to roll out now. So as Brody said, if you've got an e-commerce site, even if you're not doing Google Ads or paying for ads, it's worth making sure that you have a Merchant Centre Shopping feed set up, that it validates because there's going to be free traffic out there that you're going to be able to get and sales you will miss out on if you don't do that. So make sure, if you haven't done that, that's top of your SEO list, it's urgent and important for you.

Before we move too far away from the subject of Google Ads, this is a very short tip that might help some people. So as we know, there was a big backlash from the PPC community as Google announced that it was culling its search terms report within Google Ads, down to terms with significant volume only. So for any search terms Google deem did not have “significant search volume” and they didn't give a definition of significant, they would no longer show which key phrase triggered that add or click in the search terms report. Now, that means and we've seen a lot of advertisers losing upwards of 50 percent of their keyword data, however a lot of this data does seem to still be available in Google Analytics. I don't know if people have noticed this, but if you go into your Google Analytics, into acquisition Google Ads and search queries, you'll find there's still a lot more data within the search queries report than is available directly in the Google Ads interface. Now I'm sure, as Google has done similar things in the past, that this will not be around for very long and I will guess that they will apply this filter retroactively. So my guess is that when Google does remove the search query data from analytics, they will also remove historical data. So, my advice to you is, if you have this data and you still want to use it for analysis, you haven't done everything you can do with it, it might be worth exporting it to keep a copy for yourself, as I don't think it'll be around for much longer.

And I want to finish the podcast talking about entity analysis. This is a concept, hopefully, most SEOs are aware of, it's not anything new, it's this long change Google has been pushing as a core part of their strategy, which they originally called moving away from strings to things, which is moving away from the understanding of trying to understand strings of text and trying to push themselves towards understanding entities or things and the relationships between those things. And we've seen the Google knowledge graph come and we've learned about graph databases, and about edges, and nodes, and connections between entities and similarities between actually how we, as humans, think about things and connect concepts. And this has been the powerhouse behind Google being able to do some quite impressive, feats like you know, if you type in who is the founder of Warner Brothers, I think is an example I saw a while ago, Google can sometimes just give you a direct answer to these questions, and that's a hugely actually impressive task from a machine point of view to understand that question, and understand that Warner Brothers is a company, and companies are founded by a group of people, that are called Founders and here are the Founders that are connected to this entity Warner Brothers, and then applying this on a large scale; films and movies is a really common example where you can Google the name of an actor or actress, and you'll see other films and tv shows in the knowledge panel that they appear in, and it's not that someone at Google is sitting there manually typing in which film this actress has been in. This is all getting hoovered up, sorted and understood within this knowledge graph.

So it makes sense, at least from an SEO hypothesis point of view, that when Google is trying to find pages that best match user intent, that they may be trying to look at entities on the page and seeing if that page talks about other entities that Google knows people also search about, and how well it covers that topic and the information about those entities. Now to do this kind of entity analysis, there's a few tools on the market already. So one we use at Candour is called InLinks, and InLinks as part of their tool set will do some entity analysis on pages. It's really interesting, so you can type in a search term that you would like to rank for and you put in your URL, and InLinks will go away and look at the top 10 ranking pages for that query and it will extract all of what it can identify as the entities on that page, and it will compare it to your page. It will produce a report of all of these pages that rank in the top 10, they all mention these entities and they mentioned them this many times, and it will compare that to your page, so you can see where the potential weaknesses are, and that's been hugely useful for us as sometimes you realise that you've completely missed off a sub-topic that is actually quite important to searchers, and it uncovers some intent and some topics that maybe we wouldn't have touched on before.

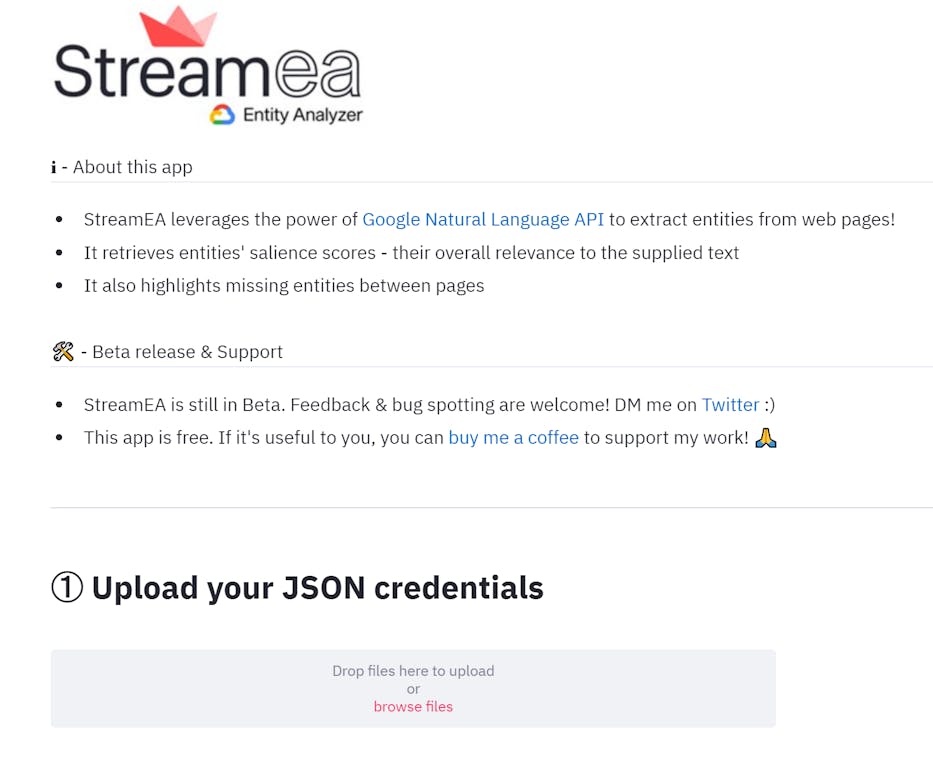

The reason I'm bringing this up is I saw a very interesting blog post, that I still need to go through in more detail, by a chap called Charly Wargnier - I hope you're pronouncing your surname correct there, Charly. Anyway, Charly has just released a beta version of streamEA, which he calls a Python app with NLP superpowers, and it's free to try the app and it combines the power of Google natural learning API, sorry Google natural language api, and Python pandas to extract entities from web pages along with their salient scores. So it's essentially offering a similar but slightly, at the moment at least, watered-down version of what a tool like InLinks can do. So you can set this tool up and you'll need your own keys to do this, but he's actually put a guide on how to do this on the page. But what it allows you to do is compare two web page URLs, so you paste these URLs in and it will estimate the API call costs for you, you can hit go and it will essentially go off and identify the entities on these pages, and at first, it will produce a table that will show you which entities you're missing versus the other url you've put in and vice versa.

So Charlie's listed as interesting use cases for this. So finding entities that exist on competitor pages which out rank you, yet are missing from your pages. Differentiating pages on your own website. Researching topics, discovering alternative lexical fields, and finding out how well you covered a specific topic. So if we go through this post, it will give you essentially as I say, this table of different entities, their salience, their importance on the page and this important comparison. So it's really exciting because he's giving free access to his tool, the only cost here is the cost for the API calls and they are very, very low and he's put a table in the blog post. So do check that out. if it is something you find helpful and if you're not doing this kind of thing, I suggest you do start looking into it.

If you find this kind of thing helpful, I'd give InLinks a look as well because that can do this on a much grander scale, and help you produce content briefs. But if you're just playing around with it, maybe just with one site, I'd say this is a really cool tool to start doing some entity analysis for your SEO.

And that's everything we have time for in this episode, we'll be back on Monday the 19th of October. As usual if you're enjoying the podcast please subscribe, leave a review, do something nice, and I hope you all have a really great week.

In this episode, you will hear Mark Williams-Cook talking about Google still...

In this episode, you will hear Mark Williams-Cook talking about shipping...