12.05.2019

21 min listen

With Mark Williams-Cook

Season 1 Episode 9

Episode 9: Google I/O, Evergreen Googlebot and HowTo/FAQ structured data

New features coming to Google Search and Assistant, an updated Googlebot and more structured data support in the SERPs.

What's in this episode?

Mark Williams-Cook will be discussing:

Google I/O 2019: new features coming to Google Search, such as AR and Google Lens updates

Evergreen Googlebot: what Googlebot's version update means for JavaScript and SEO

HowTo and FAQ structured data: Google's support for HowTo and FAQ structured data in Search and Google Assistant

Links for this episode

Helpful new visual features in Search and Lens: /products/search/helpful-new-visual-features-search-lens-io/

The new evergreen Googlebot: /2019/05/the-new-evergreen-googlebot.html

New in structured data: FAQ and How-to: /2019/05/new-in-structured-data-faq-and-how-to.html

Transcript

MC: Welcome to episode 9 of the Search with Candour podcast, recorded on Friday the 10th of May 2019. My name is Mark Williams-Cook and I'm going to try to make your life a bit easier by helping you catch up with this week's search news. This week we had the Google I/O developer conference and some other announcements from Google, so I'm going to talk about some new features coming to Google Search, what you should know about them and what they mean. Google has also announced some technical changes to Googlebot that affect how it handles JavaScript, and I'll go through both the technical and non-technical explanations of what's going on there. It wouldn't be a Search with Candour podcast if I didn't somehow mention schema! Google has added support for some new types of schema which look like they are going to be really helpful.

Kicking off with some changes to Google Search: there's a really interesting and quite exciting two-fold announcement. Google has announced AR in Search (that's augmented reality in Search) and some new features in Google Lens. Starting with augmented reality: even if you don't know the term, you've probably seen it before. It's not VR. VR is when you're in a totally virtual environment, whereas augmented reality blends reality with virtual content, so you get virtual objects overlaid on the real world.

So Google's announcement reads like this:

There's a link in the show notes to an image of what Google is describing here, which is basically a great white shark in someone's room. I think it's another example of SEO being about the best type of content to answer a query. I've mentioned this before: when content writers, marketers or SEOs talk about content creation, some people immediately assume content means text, whereas we know there are lots of different ways to deliver content.

One example I like to use is one I saw that tried to explain how tall The Shard in London is. One of the best ways to answer that question isn't to say it's so many meters or so many feet high; it's to use an image. If you look at the search data, you'll see people searching for ‘how tall is The Shard compared to the Eiffel Tower’, which says to me that the best way to answer that question may be an image comparing the height of The Shard with the Eiffel Tower, the Empire State Building, the Berlin TV Tower or something else the user is familiar with. That instantly answers the question in a way they can understand.

Whereas if you say it's X meters tall, that might be quite hard for someone to picture. So I think this AR is another really rich way for Google to answer a question in the richest possible medium. There are lots of potential uses for this. Google has given another example in their blog post, which I'll link to in the show notes: someone researching human anatomy can view a 3D model of an arm, showing how the joints work, over their own desk, straight from a search result.

So there are lots of commercial applications for this. We've seen it in dedicated apps for fashion and hairstyling, where you can look at yourself and see what you'd look like wearing a different pair of trainers or with a different haircut. I think those are really interesting examples, and we might start to see advertisers and organisations finding ways to use this commercially, whereas at the moment Google is presenting it as an informational feature. It will be really interesting to see how that develops.

The other announcement is around Google Lens. If you don't know Google Lens or haven't tried it, I'd recommend it. You can get it through the Google app: if you've got an Android phone it will be baked in, and if you've got something like an iPhone you can download the Google app, which includes Google Lens. Google Lens was originally announced in 2017, I think. Google describes it like this:

“Google Lens is an AI powered technology that uses your smartphone camera and deep machine learning not only to detect an object but understand what it detects and offer actions based on what it sees”

If you open the Google app and go to the search bar, you'll see a Google Lens icon. Press it and your phone switches to camera mode; point it around and you can tap on different objects. If it's a product, Google does a scarily good job; you'll probably find it quite unnerving the first time you use it, just how good the image recognition is. It can tell you what products are and link straight to where you can buy them on the web. You can even scan random objects, such as animals or your hand, and Google will identify what they are. So it's kind of fun; if you haven't used it before, it's a really interesting tool. Google's announcement about Lens takes this almost to the next level. I'll just read it to you and then we'll talk about it. Google says:

They say:

That’s quite a few steps Google Lens is taking there to provide you with that information, and I think this is another really good example of Google taking its crawling and indexing into the real world and meshing offline things with online data. I think this opens up a world with an even greater flow of, and access to, information than we have now, so your digital footprint as an organisation is going to be more important than ever. People want to find out what's on your menu and what others think of it. No longer do they have to look at PDF menus online and then try to find a review site where someone has eaten at your restaurant and left a review; they can tap into that information directly from Google's databases. So this changes the paradigm of doing a search, getting a list of pages and then finding the right webpage yourself: the information is stored by Google and delivered directly, essentially leapfrogging the website altogether. That's going to force people to start caretaking their online presence outside of their website a bit more.

We also had an announcement this week: on Tuesday the 7th of May, Google published a post called ‘The new evergreen Googlebot’, in which they said:

So what this means, as the post says: Googlebot is the agent that goes around and looks at pages on the web for Google, and until recently it was as if Google was looking at the web through a really old version of Chrome. What they mean by ‘evergreen’ is that they will now keep updating Googlebot in parallel with Chrome, so however you experience the web through an up-to-date Chrome, you can assume Googlebot is having essentially the same experience. Google has also written:

So what does this mean? If you’re a developer, hopefully everything I just read makes sense. If you're not a developer, you're probably wondering what ES6, IntersectionObserver, lazy-loading, transpiling, polyfills and so on are, so I'll try to give you a little background and context. It is good news, but it doesn't actually fix a lot of the main problems we encounter with JavaScript and SEO. To take some examples from this: a lot of it is about web browsers. When you're viewing web pages, the browser has certain functionality built in that can be used with JavaScript.

There are cases where a web browser might not support that functionality: a particular browser or, historically, and this is what we're referring to here, Googlebot wouldn't support a function that's built into newer versions of Chrome. If everyone was running the most up-to-date version of the same browser, we would know exactly what that browser is capable of. But there are cases, such as Googlebot running an old version of Chrome, or people using Internet Explorer, where the browser doesn't support the function the developer wants to use. So the developer uses a ‘polyfill’, which is essentially a chunk of code that provides that functionality because it isn't built into the browser.

What this means is that Googlebot will essentially have the same capability to render JavaScript as a user's browser, which is great. But it doesn't mean we're in a world where we don't have to worry about JavaScript in SEO; unfortunately, not at all. To give you a little background, Google does its crawling, indexing and rendering in a phased process. That means that when your site is first crawled and its pages first get indexed, Google won't execute any JavaScript.
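A toy model can make this phased process easier to picture. The Python sketch below is a deliberate simplification, not a description of Google's systems: the class names and the per-pass `budget` are assumptions. A crawled page is indexed straight away with the title from its raw HTML, and the JavaScript-rendered title only replaces it once the page comes off a render backlog.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Page:
    url: str
    raw_title: str       # <title> as served in the HTML
    rendered_title: str  # title after the page's JavaScript has run


@dataclass
class ToyIndex:
    """Toy two-phase indexer: a simplification, not Google's actual pipeline."""
    titles: dict = field(default_factory=dict)
    render_queue: deque = field(default_factory=deque)

    def crawl(self, page: Page) -> None:
        # Phase 1: index immediately from raw HTML; no JavaScript is executed.
        self.titles[page.url] = page.raw_title
        # Rendering is expensive, so the page is deferred to a backlog.
        self.render_queue.append(page)

    def render_backlog(self, budget: int) -> None:
        # Phase 2: later, render up to `budget` queued pages, oldest first,
        # and replace their indexed titles with the JavaScript-rendered ones.
        for _ in range(min(budget, len(self.render_queue))):
            page = self.render_queue.popleft()
            self.titles[page.url] = page.rendered_title


index = ToyIndex()
index.crawl(Page("/new-page", "Server title", "Title set by JavaScript"))
print(index.titles["/new-page"])  # Server title
index.render_backlog(budget=1)
print(index.titles["/new-page"])  # Title set by JavaScript
```

The gap between the two `print` calls is the part that matters for SEO: in reality it is set by how long the page waits in the backlog, not by anything in your code.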

I did a test on this recently. We put up some new pages and requested indexing through Google Search Console, and Google indexed them within minutes. On those pages, we used JavaScript to change the page title, the idea being that the title shown in the search results would tell us whether Google had executed the JavaScript. In this particular case, the pages were indexed within a few minutes, but it took Google 24 days to get around to executing the JavaScript on them. The reason is that executing JavaScript is very expensive and resource-intensive from a processing point of view, so Google tends to gobble up pages as raw HTML without executing the JavaScript, put those in its index, and then work through a backlog, rendering those pages and updating the index with the JavaScript version.
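If you want to set up a test like this, the first thing to pin down is what the raw HTML says before any JavaScript runs, since that is the version described here as being indexed first. Below is a minimal Python sketch using the standard library's `html.parser`, which reads markup without executing scripts. The helper `raw_title` and the sample page are hypothetical, and the sketch only inspects the served HTML; it cannot tell you what Google's index currently shows.

```python
from html.parser import HTMLParser


class RawTitleParser(HTMLParser):
    """Collects the text of the first <title> element in raw HTML.

    HTMLParser never executes <script> contents, so this reports the title
    as served, before any JavaScript could change it.
    """

    def __init__(self) -> None:
        super().__init__()
        self._in_title = False
        self._done = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title" and not self._done:
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title" and self._in_title:
            self._in_title = False
            self._done = True

    def handle_data(self, data):
        # Text may arrive in several chunks, so accumulate it.
        if self._in_title:
            self.title += data


def raw_title(html: str) -> str:
    parser = RawTitleParser()
    parser.feed(html)
    parser.close()
    return parser.title.strip()


# Hypothetical test page: the served title differs from the one JavaScript sets.
SAMPLE = """<html><head><title>Original title</title>
<script>document.title = "Title set by JavaScript";</script>
</head><body></body></html>"""

print(raw_title(SAMPLE))  # Original title
```

Comparing this output with the title Google shows in the search results tells you which version of the page it is currently using.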

So while this Googlebot update improves the fidelity of JavaScript rendering, it isn't going to speed things up. If you still have a site that relies on JavaScript (Wix websites are a good example: you need JavaScript for them to show you anything), you're still going to hamstring yourself massively. If JavaScript is required for Googlebot to access the navigation, it could be days or weeks between discovering separate pages on the site. So unfortunately we're not quite there. I don't know if Google will ever have the processing power to execute JavaScript on the fly; I guess they probably will one day, but we're certainly not there yet, and it still needs to be near the top of your list of things to fix.
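To get a feel for how much of a site's navigation depends on JavaScript, you can list the links that are present in the served HTML before anything is rendered. This Python sketch does that with `html.parser`, assuming that before rendering a crawler can only follow plain `<a href>` links; the function `links_without_js` and the sample markup are hypothetical. A link that exists only through an `onclick` handler doesn't show up.

```python
from html.parser import HTMLParser


class RawLinkParser(HTMLParser):
    """Collects href values from <a> tags in raw (unrendered) HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def links_without_js(html: str) -> list[str]:
    parser = RawLinkParser()
    parser.feed(html)
    parser.close()
    return parser.hrefs


# Hypothetical navigation: one plain link, one that only works via JavaScript.
NAV = """<nav>
  <a href="/services/">Services</a>
  <span onclick="location.href='/contact/'">Contact</span>
</nav>"""

print(links_without_js(NAV))  # ['/services/']
```

Any page reachable only through links missing from this list has to wait for rendering before it can be discovered that way.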

Okay, we're back onto schema, structured data apparently being one of my favorite subjects!

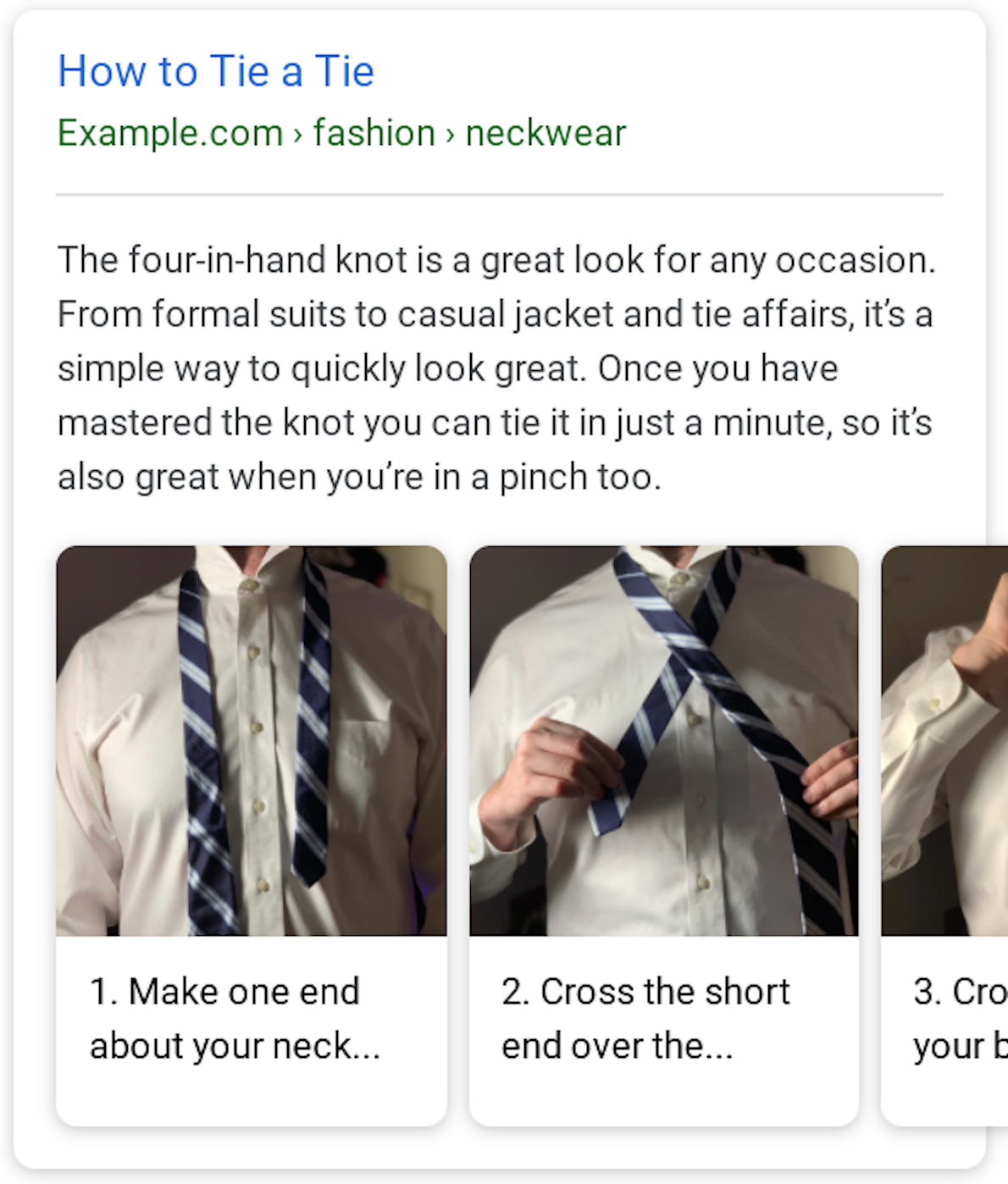

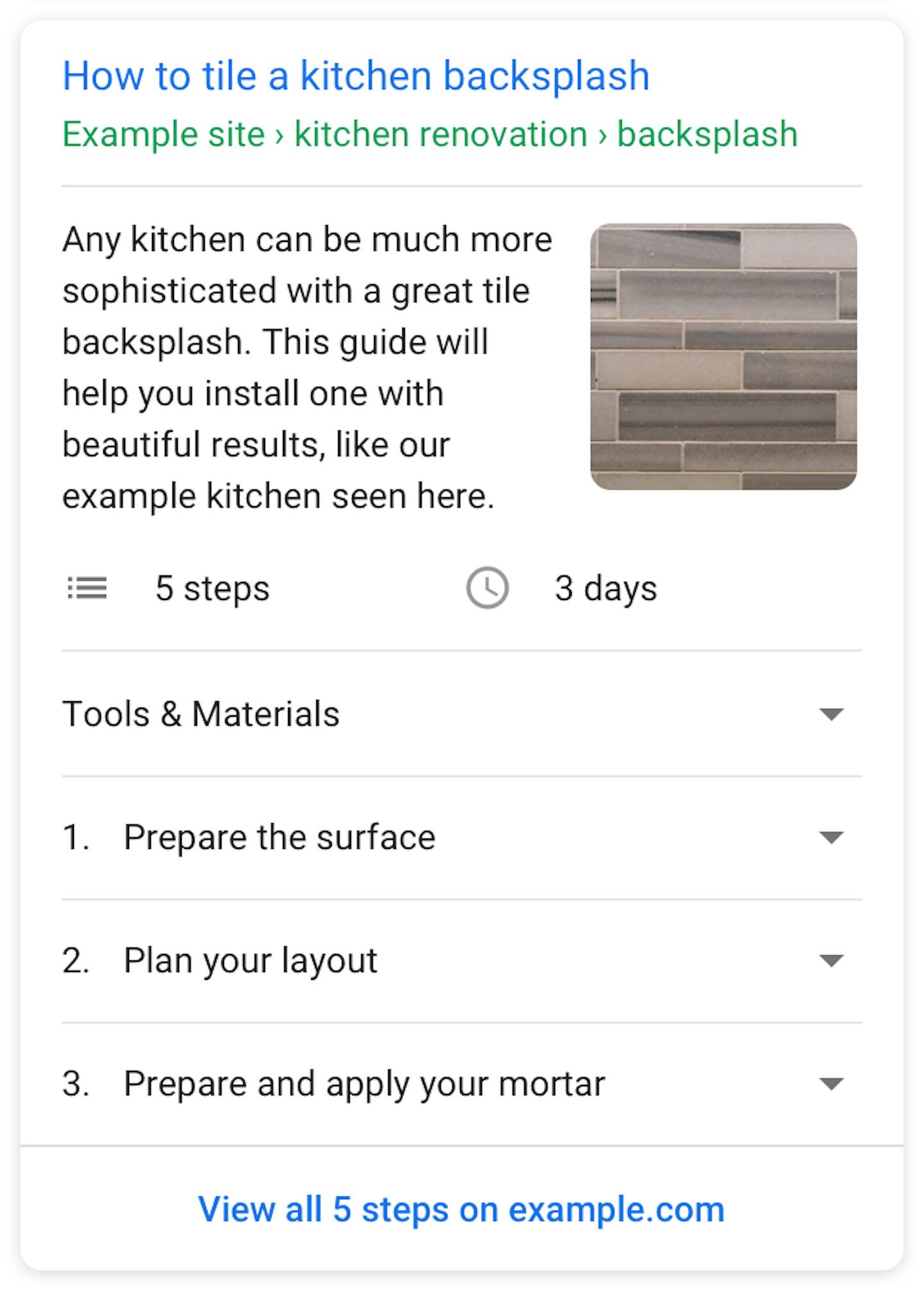

This Wednesday, the 8th of May, Google announced support for two new types of structured data, FAQ and HowTo, in Google Search and Google Assistant, including new reports in Search Console to monitor how your site is performing with them. They've given a really nice example of how to tie a tie, showing how to structure the data and what it will look like in the search results; you can see an image of it in the show notes.

That consists of a few individual cards with a numbered image for each step, and you can swipe between them. There's another example, how to tile a kitchen backsplash, which has the regular rich snippet starting with the instructions, but also extra details: the number of steps (five), the duration (three days), and concertinas you can click on to see the tools and materials you need. Then come the steps: 1. prepare the surface, 2. plan your layout, 3. prepare and apply your mortar.
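For anyone wondering what the markup behind the backsplash example might look like, here is a minimal Python sketch that generates schema.org `HowTo` JSON-LD for embedding in a `<script type="application/ld+json">` tag. It includes only the three steps read out above (the example has five) and leaves out the `tool` and `supply` properties that would feed the tools and materials concertinas, because the episode doesn't name them. The function name `howto_jsonld` is hypothetical; Google's developer documentation is the authority on which properties it requires.

```python
import json

# Steps named in the episode; the example has five steps, but only the
# first three are read out, so only those are included here.
STEPS = [
    "Prepare the surface",
    "Plan your layout",
    "Prepare and apply your mortar",
]


def howto_jsonld(name: str, total_time: str, steps: list[str]) -> str:
    """Build a schema.org HowTo object as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "HowTo",
        "name": name,
        "totalTime": total_time,  # ISO 8601 duration, e.g. "P3D" = 3 days
        "step": [
            {"@type": "HowToStep", "position": i, "name": text, "text": text}
            for i, text in enumerate(steps, start=1)
        ],
    }
    return json.dumps(data, indent=2)


markup = howto_jsonld("How to tile a kitchen backsplash", "P3D", STEPS)
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Generating the markup from the same data that renders the visible steps helps keep the two in sync.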

Again, you can click these concertina boxes to get more detail. What's really interesting here is that Google goes on to say:

So using this schema essentially gives Google the granularity and quality of detail it needs to be confident that it understands the question being answered and how the steps break down, so the answer can be delivered through the Google Assistant one step at a time. Again, it's opening up your data to be accessed through another medium instead of just your web page. Google also has details on FAQ in Search and Google Assistant, saying:

and then they link you off to the developer documentation.
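A matching sketch for FAQ markup: this Python function builds schema.org `FAQPage` JSON-LD, with each `Question` carrying an `acceptedAnswer`. The question-and-answer pairs are illustrative only, drawn from this episode's own content, and the function name `faq_jsonld` is hypothetical; check Google's developer documentation for the properties it requires.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a schema.org FAQPage object as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


# Illustrative Q&A drawn from this episode's own content.
QA = [
    ("What is Google Lens?",
     "Google Lens is an AI powered technology that uses your smartphone camera "
     "and deep machine learning not only to detect an object but understand "
     "what it detects and offer actions based on what it sees."),
    ("What does 'evergreen Googlebot' mean?",
     "Google will keep Googlebot up to date alongside Chrome, so it renders "
     "pages much as a current Chrome browser would."),
]

print(faq_jsonld(QA))
```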

This is another step for webmasters and business owners: more effort, another thing to add. And it's another step Google is taking away from the search-and-click model, where you do a search, look through the results and then try to find a page that's useful to you. You've probably heard the phrase ‘SEO is now AEO’, meaning search engine optimisation is becoming answer engine optimisation. Some people are saying search engine optimisation is almost over, and that what we're really doing now is answer engine optimisation: trying to help Google answer people directly. There's a whole other debate to be had about how this affects publishers and businesses, with Google scraping their information, content and data, circumventing their websites and profiting from it. But the fact is that this will be the path of least resistance for users, and in my experience the path of least resistance tends to become the dominant, default behavior. Users will do what's easiest for them; they'll do the least expensive thing to get the information they need.

Something we tell a lot of our clients is that they have to consider their website never finished, because technology moves on very quickly, and so do user expectations. There can be a real early-adopter advantage in implementing things like this structured data: being the first to provide answers to those questions, and so becoming the default answer on personal assistants, can be quite a powerful position to get yourself into.

So it's definitely something we're going to be discussing with our clients: whether we can add this to their sites.

I hope you've enjoyed this episode. I'll be back in a week, on May the 20th, with the next one, and we've got the Google Marketing Live conference on the 14th of May, so I'm sure there'll be absolutely loads to talk about.

You can find this episode's show notes at search.withcandour.co.uk, I'm Mark Williams-Cook and I hope you have a great week!