20.09.2021

46 min listen

With Mark Williams-Cook & Will Critchlow

Season 1 Episode 129

Episode 129: SEO A/B testing and how companies should think about SEO investments with Will Critchlow

01

What's in this episode?

In this episode, you will hear Mark Williams-Cook talking to Will Critchlow about:

SEO A/B testing: How A/B testing can work for big websites

Google's algorithm shift: Is a more machine-led approach making SEO harder and testing more important than ever?

Enterprise vs SMEs: Can SMEs actually do A/B testing and is it worth it?

Are we actually bad at SEO?: Why do so many experienced SEOs disagree on fundamentals

02

Links for this episode

Will Critchlow - /about/team/will-critchlow/

How to make sense of SEO A/B test results - /resources/blog/business-not-science/

03

Transcript

Mark Williams-Cook: Welcome to episode 129 of the Search with Candour podcast, recorded on Friday the 17th of September. My name is Mark Williams-Cook and today I'm going to be joined by Will Critchlow, CEO at SearchPilot, and we're going to talk all about A/B testing for SEO and how companies and senior marketing leaders should think about SEO investments.

Before we get going, I'm delighted to tell you this podcast is very kindly sponsored by the people at Sitebulb. Sitebulb is a desktop-based SEO auditing tool for Windows and Mac. We've used it for many years at Candour. I've used it myself for a long time, so I'm really glad to have them as a sponsor, because I love telling people about this software. If you've heard previous episodes, you'll know I normally go through one feature that I've recently used or that I like about Sitebulb. This week, I was running some training with some internal teams, some in-house SEO teams, and we were talking about internal linking, thinking about users, and trying to work out how best to use our internal linking structure to get content to rank.

And one of the really cool things Sitebulb has is a nice, detailed internal linking and internal anchor text section, which highlights things such as pages that have, for instance, only one link, or only one nofollowed link, across the whole site. It gives you a really quick report on the type of content that is probably not going to rank, because by burying it in your site you're telling search engines that it's not that important. It's also a good flag that your users are unlikely to find that content, because you've only given them one route to get to it.
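As a rough illustration of the kind of check described above (this is not Sitebulb's implementation), the sketch below counts inbound internal links per page from a hypothetical list of crawled `(source, target, is_nofollow)` links, and flags pages that have only one inbound link, only nofollowed links, or none at all. The `weakly_linked_pages` function and the link-tuple format are assumptions made for illustration.

```python
from collections import defaultdict

# A crawled internal link: (source_url, target_url, is_nofollow). Hypothetical format.
Link = tuple[str, str, bool]


def weakly_linked_pages(links: list[Link], pages: list[str], max_links: int = 1) -> dict[str, str]:
    """Flag pages with at most `max_links` inbound internal links, or only nofollowed ones."""
    followed: dict[str, int] = defaultdict(int)
    nofollowed: dict[str, int] = defaultdict(int)
    for source, target, is_nofollow in links:
        if source == target:
            continue  # ignore self-links; they give users no new route to the page
        if is_nofollow:
            nofollowed[target] += 1
        else:
            followed[target] += 1

    flagged: dict[str, str] = {}
    for page in pages:
        f, n = followed[page], nofollowed[page]
        if f == 0 and n == 0:
            flagged[page] = "no inbound internal links"
        elif f == 0:
            flagged[page] = "only nofollowed inbound links"
        elif f + n <= max_links:
            flagged[page] = f"only {f + n} inbound internal link(s)"
    return flagged


if __name__ == "__main__":
    pages = ["/", "/a", "/b", "/c", "/d"]
    links = [
        ("/a", "/", False), ("/b", "/", False),
        ("/", "/a", False), ("/", "/b", False),
        ("/a", "/b", False), ("/b", "/c", True),
    ]
    for page, reason in weakly_linked_pages(links, pages).items():
        print(page, "->", reason)
```

In this toy site, `/a` has a single route in, `/c` is reachable only through a nofollowed link, and `/d` is orphaned.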

So it's a really, really insightful report you can pull from Sitebulb, along with a whole bunch of other stuff. They've got a special offer for Search with Candour listeners: if you go to Sitebulb.com/SWC, you can get a 60-day trial of their software, with no credit card required. Give it a full test drive; if you don't like it, no problem. If you do, and I'd say you very probably will once you've tried it, you can sign up. So, Sitebulb.com/SWC to give it a go.

Today we are very lucky to be joined by Will Critchlow who is CEO at SearchPilot and SEO partner at Brainlabs. Welcome, Will.

Will Critchlow: Good to be here. Thanks, Mark.

MWC: So, I've known Will now ... and we were actually just talking about this, I'd say it's more accurate to say I've known of Will, and he's known of me, for quite a few years. We've talked online quite a few times, but not so much in person. If you listen to this podcast, you'll know we've featured SearchPilot, I think three times at the last count, for the A/B testing they're doing. Will, I think a really good place to start would be if you could give us a little bit of history about yourself and SearchPilot, for those to whom it's all new.

WC: Yeah, absolutely. So I guess, right from the beginning: I got into the industry and founded a company called Distilled back in 2005, along with my co-founder Duncan Morris. Distilled became an SEO agency; we started in web dev and web design, but ended up an SEO agency. We ran conferences, SearchLove, which people might have heard of, and we ended up with offices in the UK and the US. Then in the last few years we'd built up an R&D team, just looking for new innovations, exciting new things that we could do. And the biggest, most exciting thing that came out of that was the platform we originally called the ODN, the Optimisation Delivery Network, which is what's now called SearchPilot.

And just before the pandemic, in January 2020, we went through a deal where we spun out the technology and software platform, which is the platform designed for SEO A/B testing. We rebranded that SearchPilot and spun it out into its own independent company, and sold the rest of the company, the agency and the conference business, to a company called Brainlabs, which is based on the PPC side of things. That group is now doing most digital marketing channels, after having acquired Distilled and a couple of other folks. So we're now running SearchPilot as an independent business owned by the old Distilled shareholders. The majority of my time is spent running that, and I have some ongoing responsibilities on the Brainlabs side as well.

MWC: Brilliant. So, you mentioned you made this R&D group at Distilled where you came up, amongst other things I guess, with this ODN. Why did you feel it was necessary at the time to go down this route and make an A/B testing platform for SEO? Because, as you know, there are lots of opinions in the industry about how things work, and you can see even very experienced SEOs disagreeing on pretty basic things. So at what stage did you think, "Okay, this is going to be a good idea, this is going to be really helpful for us," or was it more just a punt, where you thought, "Let's see what we get back from it?"

WC: Well, I think what you described there is exactly why we need testing. The depth and breadth of opinions is one of the most delightful things about SEO, but there are times, in my opinion, where we would also really like to know what's actually true. And so it was a convergence of a few different things. Partly, we'd seen the direction of Google, and of the industry in general, towards more complexity, more black-box thinking on Google's side, more machine learning in the algorithm. Just less predictability, I think. Actually, just less understandable for us on the outside, and I suspect also for search engineers. So there was that trend, which we'd been talking about for a few years at conferences, writing about, and so forth.

There was also the fact that this was an emerging capability that some folks were able to do. We heard that people at places like TripAdvisor had built an internal platform for doing some of this stuff. Pinterest had blogged about it. There were a few places, mainly big tech companies, that had managed to build out the capability to do what was a totally novel kind of SEO testing. Not just testing in the laboratory, or testing on a made-up domain, or trying to look at before and after, but actually trying to apply some kind of statistical significance to this kind of stuff.

And so, I guess there was a need because of Google's shifts and so forth. There was an opportunity because of the discovery of this way of doing things. And we thought there was a chance we could build it out in a way that would be accessible to far more sites and organisations than just the tech companies who could build their own product. Tom Anthony, my colleague now at SearchPilot, was then the head of R&D at Distilled, and my co-founder Duncan joined that team and came in on the engineering side of things. I guess the final piece of the puzzle was: was it possible for us to build something like this as a product, rather than integrating it into the platform the way somebody like TripAdvisor had done?

And so we set off. This is what R&D is all about; it was literally risky research and development. Can we build something that's performant enough, that can deploy the way we want it to? We had a hack week where the team built the very first version of a thing that could just make modifications. It wasn't even split testing at that point; it was basically just fixing stuff, in a CDN-like layer deploying into the server side of the website. And obviously, given where we are now, the answer was yes, we can build it, yes, it can be performant, yes, it can deploy that way. From there, it was a question of bringing all of those pieces together and building it out.

MWC: So you touched on a few really interesting points that I think really impact the way SEO professionals have to consider, both strategically and tactically, what they're doing and what they're advising clients. We're going to be talking today about how senior leadership should think about SEO investments. And one of the things that really interested me was a tweet of yours saying that "The future of SEO looks more like CRO," so conversion rate optimisation. I wanted to explore that with you. How close do you think we're going to get, and do you see A/B testing playing more of a role as we head towards that future?

WC: I think, spoiler alert, yes. Obviously I'm biased; I run a testing company. But I think the causality runs the other way: I run a testing company because I think it's going to be important. There are two big strands to what I mean by this "future of SEO looks more like CRO" line of thinking. One is the methodology side. So the methodologies of CRO: the idea of going broad to develop a hypothesis, then testing your hypothesis. On the CRO side, that might come from user research, it might come from surveys, it might come from looking at what your competitors are doing, any of those kinds of areas.

On the SEO side, again, it can come from looking at what your competitors are doing, it can come from first principles on the information retrieval side of things, it can come from Google announcements, it can come from, again, user research: what do you think users enjoy or like? And then you build that out as a specific hypothesis, test it, and iterate. So that's part of it. I think the methodology of SEO at high-performing teams, especially on large websites and in big organisations, looks more like the methodologies of CRO.

The other piece is that I actually think the kinds of hypotheses we have, and the kinds of changes we make to websites to help them perform better in search, will be more and more user-centric over time. And that's not exactly the same thing as saying, "All we have to do is optimise for the user." Google would love us to think that was what we had to do. I don't think that's the case now, I don't think we're anywhere near that, and possibly we're not even converging towards it. Because the needs of search engine spiders, the needs of a web-scale index crawler, et cetera, are not the same as a user's, and I think there are always going to be things we have to do just for the robots.

And arguably there's more that we have to do just for the robots than there ever has been. If I think back over the 15 years I've now been in SEO, we do more and more of that than we used to: robots.txt, XML sitemaps, hreflang, all these kinds of things that are not designed for humans at all. So I'm definitely not saying all we're going to need to do is user-based stuff; I just think that a lot of our best hypotheses will come from, "This is going to be better for our users."

MWC: I think it's really interesting as well from the point of view of training people to do SEO. Something I've certainly noticed when we've run training courses is that when we're talking to people who are involved in content and on-page SEO, and not so much technical SEO, a lot of what I teach them, rightly or wrongly, is that sometimes they don't need to know the specifics. They shouldn't be thinking about the rules of what they're doing so much as Google's longer-term goal: just focus on making the content you're writing helpful for the user. But from the technical point of view, as you say, things like schema aren't seen by the user, and we still have to do them for the search engine. I still spend a lot of time with clients on canonical tags, because Google's getting stuff horribly wrong, and on hreflang as well.

So yeah, we're doing more technical SEO than ever. But it does seem, at least from the content point of view, that I'm talking to people less about specific rules they should be trying to optimise for, and more about thinking generally about what's good. So I think that's really interesting. The question I've got for you out of this is: for people coming into SEO, learning about SEO, and wanting to go into any of those fields, whether that's technical, content, or whatever, should they be looking at experts such as Dawn Anderson, who I know has been specialising a lot recently in information retrieval? We've got people like Bill Slawski, who over many years has taken the approach of looking very heavily at patents. So do you think we should think more about the technology and Google's aims, and try to work out where we fit in there, rather than, "We should do these things because these help ranking?"

WC: Yeah, so my view on this is that at the very entry level, you're probably better off understanding some of the fundamentals of how users search, how search engines are used, and how search engines crawl your website. The foundations, I guess, of appearing in search rather than optimising for search, in many ways. And I think everything you're describing there is what I would encourage somebody to do when they are at the intermediate level. So the short answer is yes: once you've got the basics down, the next level down is understanding information retrieval theory.

I read as much as I can of what the people you mentioned, Dawn and Bill and so forth, put out. Certainly, with my style of learning and my approach to these things, I find that the better I understand the fundamentals, the better I can reason about it from first principles, and the better my recommendations and ideas are. But I can imagine a different approach. I don't think there's only one way of doing this; I know some very successful SEOs and digital marketers who don't really think about it from the algorithmic side at all, right? So that's my approach, but I'm not saying it has to be for everyone.

I think there are alternative approaches. I know some phenomenal content-driven folks who really just think about it through the editorial lens, through the user behavior lens, that kind of stuff. It's probably partly about building teams with diversity across these different approaches. But that's my view, especially on the consulting side, which I guess is the side I know best, where you're working with a wide range of different websites and seeing all kinds of different problems: the kinds of technical issues that come up, the kinds of things that block rankings, the kinds of things that result in underperformance. To get to an advanced level, I think yes, you do need to understand the basics, the fundamentals, and the information retrieval side.

MWC: That's something I want to pick up on a little later: the difference in context between A/B testing when you're working on one site and working across multiple sites. But while we're talking about technical knowledge, I'm going to read out some stats I saw Will tweet that made me feel incredibly uncomfortable being an SEO professional. There are a few things in SEO that have genuinely right and wrong answers, and Will ran a series of polls on Twitter asking SEOs, or at least people who follow him, which I guess is mainly SEOs, to answer them.

And these are the results: apparently 80%+ of answers to robots.txt or crawling questions were incorrect. 70%+ of answers to questions about frequently cited keyword algorithms like TF-IDF were incorrect. 70% of answers to questions about link flow algorithms like PageRank were incorrect. And 70%+ of answers about JavaScript and cookie handling were incorrect. Even experienced people, in various polls I saw Will run about deciding which page might rank better, were pretty much no better than a coin flip. Why is this? Are we just all terrible at SEO?

WC: I mean, some of us are, some of the time. I think there's a lot to unpack here, so let's work through what I think is going on. For a start, you mentioned these are Twitter polls, right? So who knows who's responding to them, how much experience they have, or how much thought they put into them. I've seen baseline estimates that in anything from 15 to 20% of responses to these kinds of polls, people are just clicking any old thing. You can see it with other polls: on the less serious side, "Have you been abducted by aliens?" consistently gets around a 10 or 15% yes response rate. On the more serious side, we're obviously still recording this in COVID times, and I noticed a survey about which COVID restrictions UK folks wanted to keep in place after the pandemic, where something like 20% of respondents wanted a forever curfew, right? A 10:00 pm curfew, forever.

So I think the baseline is that for at least 10, 15, 20% of respondents, who knows what they're clicking in these polls? However, there is a serious problem, so let's break it down. Some of it is just technically wrong, but it doesn't matter to your performance, right? We're talking mainly, as you said, about things that are factual, things that do have right and wrong answers. Suppose you get the definition of a particular word wrong, or those kinds of things. That may not make your recommendations worse. You may be just as good at SEO. You may be a little less convincing when describing your recommendations to some people, who take you less seriously because you used a word incorrectly or whatever. I'm not saying they should, but that can happen.

Some of it is quite niche. With some of the robots.txt stuff, for example, I went pretty far down the rabbit hole, so some of that was really quite technical, quite esoteric, and often quite testable. That's the equivalent of being told at school, "You won't always have a calculator in your pocket." Little did they know that these days we not only have a calculator in our pocket, we have access to the entire world's information. So a lot of this stuff, I would just look up.

If I was going to make a recommendation around some of this weird, edge-case robots.txt stuff, I wouldn't be relying on knowing exactly what the precedence of those rules is; I'd go and look it up. So some of it doesn't matter if somebody doesn't know it off the top of their head. The ones that bother me most are the fundamental misunderstandings of things like the link algorithms and those kinds of areas. I can't figure out whether it makes people's recommendations worse, but it kind of bothers me, because this is foundational to what made Google different in the first place.
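For readers who do want to look it up: Google documents that, within a user-agent group, the most specific (longest) matching rule wins, and that when an Allow and a Disallow rule are equally specific, the less restrictive Allow wins; RFC 9309 describes the same longest-match idea. The sketch below applies just those precedence rules, with `*` wildcards and a trailing `$` anchor, to one group's rules. It is a simplified illustration under those assumptions, not a full robots.txt parser (no user-agent grouping, percent-encoding, or file parsing), and the function names are hypothetical.

```python
import re


def _pattern_to_regex(path_pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern ('*' wildcard, optional trailing '$') to a regex."""
    anchored = path_pattern.endswith("$")
    if anchored:
        path_pattern = path_pattern[:-1]
    body = ".*".join(re.escape(part) for part in path_pattern.split("*"))
    return re.compile(body + (r"\Z" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Decide one path against one user-agent group's (directive, pattern) rules.

    The longest matching pattern wins; on a tie between Allow and Disallow,
    Allow (the less restrictive rule) wins. No matching rule means allowed.
    """
    best_len = -1
    allowed = True
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty "Disallow:" matches nothing
        if _pattern_to_regex(pattern).match(path):  # match() anchors at the path start
            is_allow = directive.lower() == "allow"
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len = len(pattern)
                allowed = is_allow
    return allowed


if __name__ == "__main__":
    rules = [("disallow", "/shop/"), ("allow", "/shop/public/"), ("disallow", "/*.pdf$")]
    for path in ["/shop/cart", "/shop/public/guide.pdf", "/docs/guide.pdf"]:
        print(path, is_allowed(rules, path))
```

Note that `/shop/public/guide.pdf` comes out allowed: the 13-character `Allow: /shop/public/` outranks the shorter `Disallow: /*.pdf$`. That is exactly the kind of edge case worth checking rather than recalling.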

And I'm probably the least gatekeepery person you can imagine. I love people learning for themselves and discovering their own stuff, and I wouldn't stop anybody from getting into the industry. But I would strongly recommend that anybody getting into the industry goes back and reads some of those foundational papers, right? The hypertext protocol, the original PageRank papers, that kind of stuff. I personally find it very hard to reason about things like whether an internal linking change is going to be a good idea, and I certainly find it hard to do that without thinking deeply about how link algorithms might work.
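As an illustration of that kind of link-algorithm reasoning, here is a sketch of the simplified PageRank formulation from the original papers, computed by power iteration with a damping factor of 0.85, applied to a hypothetical three-page site. It is not Google's current ranking system; it only shows how a single internal linking change redistributes score across pages. The graph and page names are made up.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85, iterations: int = 50) -> dict[str, float]:
    """Simplified PageRank by power iteration over an internal link graph."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # every page receives the "random jump" share
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # split this page's rank evenly across its outlinks
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # dangling page (no outlinks): spread its rank across all pages
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank


if __name__ == "__main__":
    before = {"/": ["/cat"], "/cat": ["/", "/product"], "/product": ["/"]}
    # hypothetical change: also link to the product page from the homepage
    after = {"/": ["/cat", "/product"], "/cat": ["/", "/product"], "/product": ["/"]}
    rb, ra = pagerank(before), pagerank(after)
    for page in sorted(rb):
        print(f"{page:10} before={rb[page]:.3f} after={ra[page]:.3f}")
```

In this toy graph the product page gains score while the category page loses some, which is the kind of trade-off that makes internal linking changes hard to call by intuition alone.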

And then the final piece, I think, is some of our more crowd-pleaser type content, or crowd-disappointing content, such as asking people which page will rank better, which I think are essentially core SEO tasks: is it a good idea to change this page in this way? That kind of stuff. And I think those are hard problems, genuinely hard problems. I think we may be slightly better than these surveys suggest, because I doubt that people who see a survey in a tweet go and crack out their full toolkit, do the full research, and dive into all the data. They don't even have access to the analytics and those kinds of things.

So there are a lot of limitations; this is really first impressions, and first impressions are often a coin flip. I hope we could do better than that with focus, attention, and practice. But I do think there's a ceiling. From all that we've seen, I think that ceiling is something like 67% accuracy, which means we're getting 30-40% of those calls wrong. And that's why I think testing is so important, because the calls you get wrong can really hurt you.

MWC: So let's talk about that, and about making changes on specific sites: A/B testing on specific sites versus making decisions across a range of different sites. I know you've spoken before, and we've spoken in this episode, about Google slowly moving towards machine learning-based algorithms, which make things a bit more opaque from the outside and, like you said, probably from the inside as well. For one website, the factors, if you want to call them that, or variables, might be weighted differently than for another industry or another site. It's not a static set of rules that's just copy-pasted across so that it applies to any site, and it probably never has been.

And I've seen some fairly prominent people in the SEO industry, no names mentioned, very much pooh-pooh A/B testing for SEO. They're just like, "You can't do it, Google's way too big, too complex, things are moving too fast." I can certainly see, like you mentioned at the beginning with TripAdvisor, the value of the testing if you're sitting in your own box on your own platform. So my question is: the findings you discover, even when they're significant, do you think they're applicable as a soundbite, like "We found internal anchor text did this on this site"? Is that something SEOs should then take and think about on their side? Should we be modifying our thinking based on tests done on other sites?

WC: Excellent question. Firstly, just to go back to the premise of the folks who don't think it's possible or worthwhile, my question to them is: what's your suggestion? What do you think is better than what we're doing? I guess you could just throw your hands up and say, "SEO is impossible." That's a coherent position. I would argue against it, but it's coherent.

If you don't think SEO is impossible, then I believe scientific data helps you, and testing is the path to that. So that's just covering off that part. We have gone through a few evolutions of thinking on how generalisable these results are. And although it didn't happen exactly in this order, I think it's probably related to the separation of SearchPilot from the consulting business. In the early days, we imagined that by running all these tests we could get better at knowing the answers. We, as Distilled at the time with this testing capability, could be the best agency because we had access to the database of all the tests we'd run, and from that we'd know more answers than anyone else.

We discovered that it didn't pan out exactly like that, and there are a few subtleties to it. Broadly speaking, the biggest benefit of this is running tests on your own site. That's definitely true. Not every site is suitable for testing, which is one of the problems, and not every page on a website is either. You can't run an SEO A/B test on a homepage, for example; it needs to be on large site sections.

My current position is that there is a benefit to reading about winning and losing tests. We publish a lot on our website, and we have an email list where we publish a case study every couple of weeks. I find that valuable, and I hear from around the industry that other people find it valuable. If I'm working on a small website, for example our own B2B SaaS website, I am going to take lessons learned from scalable tests on very large websites and use them to inform my thinking. So, to answer your final question, yes, I do think tests run on other websites can inform your thinking about what you should do. However, we have very much not discovered generalisable rules that hold true across the board. For almost every kind of test we've run, we've seen a positive version and a negative version, depending on implementation details, the kind of website it's running on, and various competitive situations.

Schema is a classic example of that, right? Schema is a classic prisoner's dilemma. It's good for you if your competitors don't have it; if your competitors have it and you don't, you kind of need it; and if neither of you has it, that might be okay too, and might actually be better, because you're giving Google less information. But if neither of you has it, both of you have an incentive to defect and put it on your website, so we end up with schema everywhere, possibly benefiting no one but Google. So I think it should inform your thinking. But you should not take a test run on a different website and say, "This test means this always works, therefore I'm definitely doing it, and I'm not even going to pay attention to whether it's a good idea on my website." You have to do some form of your own thinking around that.
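Will's schema example can be written down as a two-player game. The payoffs below are made-up illustrative numbers (higher is better for that site), chosen only to match his description: neither site adding schema beats both adding it, yet adding schema is the better response whatever the competitor does. The sketch finds each side's best response and the resulting equilibrium.

```python
# Illustrative payoffs only: (our_payoff, competitor_payoff) for (our_choice, their_choice).
PAYOFFS = {
    ("schema", "schema"): (2, 2),  # both mark up: no edge for either
    ("schema", "none"): (4, 0),    # only we mark up: we stand out
    ("none", "schema"): (0, 4),    # only they mark up: we fall behind
    ("none", "none"): (3, 3),      # neither marks up: less handed to Google
}
CHOICES = ("schema", "none")


def best_response(their_choice: str) -> str:
    """Our payoff-maximising choice given the competitor's choice."""
    return max(CHOICES, key=lambda ours: PAYOFFS[(ours, their_choice)][0])


def nash_equilibria() -> list[tuple[str, str]]:
    """Outcomes where neither side can gain by unilaterally switching."""
    equilibria = []
    for ours in CHOICES:
        for theirs in CHOICES:
            ours_ok = PAYOFFS[(ours, theirs)][0] >= max(PAYOFFS[(alt, theirs)][0] for alt in CHOICES)
            theirs_ok = PAYOFFS[(ours, theirs)][1] >= max(PAYOFFS[(ours, alt)][1] for alt in CHOICES)
            if ours_ok and theirs_ok:
                equilibria.append((ours, theirs))
    return equilibria


if __name__ == "__main__":
    print(best_response("schema"), best_response("none"))
    print(nash_equilibria())
```

With these numbers, adding schema is the best response either way, so both sites settle at (2, 2) even though (3, 3) was available to both: the prisoner's dilemma Will describes.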

And that's difficult, but it's the way it is. I don't know if you remember, in the late 2000s, so 2008-2009, there was the Moz ranking factors survey. It was probably called the SEOmoz ranking factors survey at the time, and I contributed to it for a number of years. I found it really interesting in the first few years, and increasingly found it impossible to answer their questions, because of the combination of not knowing and the answer being "it depends". And I suspected that nobody knew. I think that's where we are now: we can have hypotheses and we can test those hypotheses, but they're very hard to turn into generalisable, "this always works"-type rules.

MWC: We're at the midpoint in the show, so I want to give you an update from our podcast sponsor Wix. You can now customise your structured data markup on Wix sites even more than before. Here are some of the new features brought to you by the Wix SEO team. Add multiple markups to pages. Create the perfect dynamic structured data markup and apply it to all pages of the same type, by adding custom markups from your favorite schema generator tools or by modifying templates, choosing from an extensive list of variables. Easily switch between article subtype presets in blog posts, and add a quick link for structured data validation in Google's Rich Results Test tool. Plus, all this is on top of the default settings Wix automatically adds to dynamic pages, like products, events, forum posts, and more.

There's just so much more you can do with Wix, from understanding how bots are crawling your site with built in bot log reports, to customised URL prefixes and flat URL structures on all product and blog pages. You can also get instant indexing of your homepage on Google, while a direct partnership with Google My Business lets you manage new and existing business listings, right from the Wix dashboard. Visit Wix.com/SEO to learn more.

I did actually see a new list of ranking factors compiled by survey the other day. I think that's an interesting insight you've given: as you got more experienced over time, you found those questions harder to answer. So maybe the people still answering them haven't yet realised how hard they are to answer, which is really screwing up the end results.

WC: Yeah, I mean put it this way. Nobody asks me to complete those surveys anymore. I think my opinions might be too robust for them.

MWC: I picked up on something you said earlier, and I think I know the answer, but can you expand on why you say you can't run an A/B test on the homepage?

WC: Yeah, sure. I mean, there are things you can do on a homepage. Obviously you can change something and look at the before and after: compare what happened before you made the change with what happened after. That can be confounded by many things. Seasonality, competitor changes, Google algorithm updates, all kinds of other things can confound that data. And there are other kinds of tests you can run on a homepage, right? You can run a conversion rate test on your homepage, where you show one version of your homepage to some of your users and a different version to others, and compare the resulting behavior.

The problem with SEO tests is that we're essentially talking about an audience of one in the first instance, which is Googlebot. So you can't be version A and version B, because you have to pick a version to show Googlebot. And although there could be millions of users on the other side of Googlebot, they all go through that Googlebot funnel, if you like. So they all get the experience that Googlebot got, as far as the search results are concerned. And so, yeah, there's essentially no way of doing a controlled, real-time A/B split test on a single page.

The way we run tests is we take a large group of pages that have the same layout, the same template, what we call a site section, and we change some of those pages and leave the other pages as the control. The statistics, the machine learning model behind that, is quite complex, but essentially we're using the behavior of the control pages to strip out confounding variables like seasonality, site-wide changes, Google algorithm updates, and competitor actions, to figure out whether the change is real. Is the change you see on the pages you modified real, or is it a statistical artifact?
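SearchPilot's actual model is, as Will says, considerably more complex than this. The sketch below is a deliberately simple stand-in for the core idea: split a site section's pages into control and variant, then use the control group as a counterfactual for what the variant pages would have done anyway. It assumes hypothetical per-page session counts, a stratified split that pairs pages with similar pre-test traffic, and a basic ratio (difference-in-differences style) estimate; the function names are illustrative.

```python
import random


def split_pages(pre_traffic: dict[str, float], seed: int = 0) -> tuple[list[str], list[str]]:
    """Stratified random split: pair pages with similar pre-test traffic, then
    randomly send one page of each pair to control and the other to variant."""
    rng = random.Random(seed)
    ordered = sorted(pre_traffic, key=pre_traffic.get, reverse=True)
    control: list[str] = []
    variant: list[str] = []
    for i in range(0, len(ordered) - 1, 2):
        pair = [ordered[i], ordered[i + 1]]
        rng.shuffle(pair)
        control.append(pair[0])
        variant.append(pair[1])
    if len(ordered) % 2:
        control.append(ordered[-1])  # odd page out stays unchanged (control)
    return control, variant


def estimated_uplift(control_pre: float, control_post: float,
                     variant_pre: float, variant_post: float) -> float:
    """Relative uplift of the variant group over a counterfactual built from control.

    Whatever moved the control group (seasonality, algorithm updates, competitors)
    is assumed to have moved the variant group by the same proportion.
    """
    expected_variant_post = control_post * (variant_pre / control_pre)
    return variant_post / expected_variant_post - 1.0


if __name__ == "__main__":
    traffic = {"/p1": 500, "/p2": 480, "/p3": 300, "/p4": 290, "/p5": 100}
    print(split_pages(traffic, seed=42))
    # both groups dip after launch (e.g. seasonality), but the variant dips less
    print(round(estimated_uplift(1000, 800, 1000, 880), 3))
```

Here a naive before/after on the variant pages would show a 12% drop, while the control-based comparison attributes roughly a 10% uplift to the change. Deciding whether that uplift is real rather than noise still needs a significance test on top.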

MWC: So I'm now going to start wading knee-deep into statistics, an area I'm incredibly uncomfortable going into, so I'm going to stop at the edge of the pool and ask two very careful questions. We deal with a lot of medium-sized businesses that I don't think would be able to do this kind of testing on sections of the site, as we said; there's perhaps just not enough traffic. So I have two questions, and I think they're related. Firstly, do you think it's worthwhile for smaller sites to try their own kind of testing without this platform, knowing that they couldn't do this? And secondly, because I've seen you write about it, can you talk about what happens when you run tests and get a null result, and whether you still deploy stuff or not based on that?

WC: Yeah, they are quite closely related in many ways, I think. So to take the first question, what do I think folks should do if their site is too small or their traffic is too low to run statistical tests? I do think they should run ... I guess what I'd call experiments, rather than tests. So you're not trying to get statistical significance, you're not trying to do science, but you do want to go back and say, "Was that thing I did a good idea?" And sometimes the answer will be, "I don't know," and you just have to do your best with that. It's not for literally every change, but for anything significant, anything with a big hypothesis behind it, yeah, annotate it in your analytics and look at before and after. I probably wouldn't get into trying to do it too statistically.

I think ... And this is the same on the conversion rate side, right? If you've got a very small site without a ton of traffic, you can absolutely apply conversion rate optimisation methodologies. You can interview your users, you can come up with hypotheses about what's going to improve things, but you're not going to be saying, "I'm going to be able to measure a 0.2% uplift," in the same way that Amazon might be able to. You're looking to double your conversion rate.

And so I think that's the other part: go bold. If your site is small and low traffic, try the big changes. Because if you improve your conversion rate by 0.2%, not only can you not measure it, but it doesn't matter. That's an extra conversion every couple of decades or something. Look for the big changes, and look for the things that will show up in your analytics if they work. And if a risky change goes wrong, it'll show up negatively and you can undo it. So yeah, that's my answer to that side of things.
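The "go bold" advice can be made concrete with the standard sample-size approximation for comparing two conversion rates. This sketch assumes a hypothetical 2% baseline conversion rate, reads "0.2%" as a relative uplift, and uses conventional 5% significance and 80% power; none of these figures come from the episode.

```python
import math
from statistics import NormalDist


def visitors_per_variant(base_rate, relative_uplift, alpha=0.05, power=0.8):
    """Approximate visitors needed per version for a two-sided two-proportion z-test."""
    p1 = base_rate
    p2 = base_rate * (1 + relative_uplift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)  # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical site converting 2% of its visitors.
doubling = visitors_per_variant(0.02, 1.0)  # conversion rate goes from 2% to 4%
tiny = visitors_per_variant(0.02, 0.002)  # a 0.2% relative uplift
print(doubling, tiny)
```

Under these assumptions, detecting a doubling needs roughly 1,100 visitors per version, while detecting a 0.2% relative uplift needs on the order of 190 million, which is why small sites are better off testing big changes.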

And it relates to the second part, which is: what do you do when you run either an experiment or a test, and the answer is, "Don't know"? Which is common. One bit of language that we've tried to really implement at SearchPilot is not to call that a null result. Because "null" is terminology that comes from statistics, and anybody who ... Would it be GCSE statistics? Probably would be. You have the null hypothesis, right? And the null hypothesis is the default case. In most of the cases we're talking about, the null hypothesis is, "This change made no difference." And the hypothesis you're testing is, "The change did make a difference."

And most statistical tests are not designed to tell you that the null hypothesis was true. All they're capable of saying is either that the alternative hypothesis is probably true, statistically likely to be true, or that we can't rule out the null hypothesis. And that's not the same as saying that the null hypothesis is correct. That's quite important, because of what you're saying in that situation. Suppose you get a test that is what we call inconclusive. You're saying, "Basically, we don't know." You're not saying, "We think the original version is better." You're not even saying, "We think they're equivalent." We're saying, "We don't really have enough information to judge." And normally the error bands are so big that they include, "There was an uplift," and they include, "There was a downturn," and that's why we call them inconclusive.
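The difference between "inconclusive" and "no difference" can be shown with a minimal sketch, assuming the test produces an uplift estimate with a standard error and that a normal approximation is reasonable. The function name and the 95% level are illustrative choices, not SearchPilot's method.

```python
from statistics import NormalDist


def classify_result(uplift, std_error, confidence=0.95):
    """Label a test result from its estimated uplift and standard error."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    low, high = uplift - z * std_error, uplift + z * std_error
    if low > 0:
        return "positive", (low, high)
    if high < 0:
        return "negative", (low, high)
    # The interval includes both an uplift and a downturn: we can't rule out
    # the null hypothesis, but that does not show the change made no difference.
    return "inconclusive", (low, high)


print(classify_result(0.03, 0.02))  # interval roughly -0.9% to +6.9%
print(classify_result(0.08, 0.02))  # interval entirely above zero
```

A 3% estimated uplift with a 2% standard error is inconclusive because its error band spans zero, not because the change did nothing.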

So the question is: what do you do with an inconclusive result? That can be an inconclusive result from a scientific test like we're talking about here, or from an experiment like I was talking about before, where you just do something bold, then go and look in your analytics and you're like, "I don't know, can't really tell." So you do something, and the results are inconclusive. What should you do next?

And there's a lot of information out there, a lot of people with very strong opinions that you should absolutely not deploy the change, right? You ran a test, you were looking for statistical significance, you did not find it, so it's just wrong, scientifically, mathematically, statistically wrong, to roll out the change. I disagree. The phrase I've used for this is, "We're doing business, not science." In true science, what you're trying to do is push forward the boundaries of human knowledge, and you want people to build on the work that other scientists have done. So you want to say with confidence that a result is correct, and then future scientists can take that result and extend it, and expand it, and go further and deeper.

And in some areas, it's actually really important that the first result is correct as well. Think medical trials or something like that. If you're in that kind of situation, where you need to know the answer, then you absolutely shouldn't draw an inference from a test that is inconclusive. However, we're doing business, not science. So what we want to do is not discover with statistical confidence, "Is version B better than version A?" What we want to do is roll out the one that is most likely to make us the most money. And if the statistics come back and say ... Well, let's do the Brexit ratio: 52% chance of version B being better, 48% chance of version A being better. You can't build a medical trial on that. You can't publish that in a scientific journal and expect future scientists to go, "Well, this is definitely true. We can build future endeavors off this."

But if you're going to say, "Which one do you want to roll out?" I'm going to go, "Well, B." Right? Because the odds are only slightly in my favor, but they're in my favor by the same amount that casinos' odds are better than mine, and they make a lot of money. So there's a whole bunch of mathematical and statistical research underpinning this; it's not just me talking nonsense. If people want to delve more into it, it's all regret minimisation type stuff, multi-armed bandits. There is a load of research in this area.
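The "Brexit ratio" decision can be sketched as follows, assuming the test yields an uplift estimate and a standard error, and treating the uncertainty about the true uplift as normal with a flat prior. This is a simplification for illustration; the regret-minimisation and multi-armed bandit methods Will mentions are more involved. All names and numbers are hypothetical.

```python
from statistics import NormalDist


def prob_b_better(uplift_estimate, std_error):
    """Probability that version B's true uplift over A is above zero.

    Assumes a flat prior, so the uncertainty about the true uplift is a
    normal distribution centred on the estimate.
    """
    return 1 - NormalDist(mu=uplift_estimate, sigma=std_error).cdf(0)


def choose_version(uplift_estimate, std_error):
    # Business, not science: ship whichever version is more likely to be
    # better, even if the result would never pass a significance test.
    return "B" if prob_b_better(uplift_estimate, std_error) > 0.5 else "A"


p = prob_b_better(0.001, 0.02)  # a tiny estimated uplift with wide error bands
print(f"P(B better) = {p:.0%}, roll out {choose_version(0.001, 0.02)}")
```

This prints `P(B better) = 52%, roll out B`: nowhere near significant, but still the better bet.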

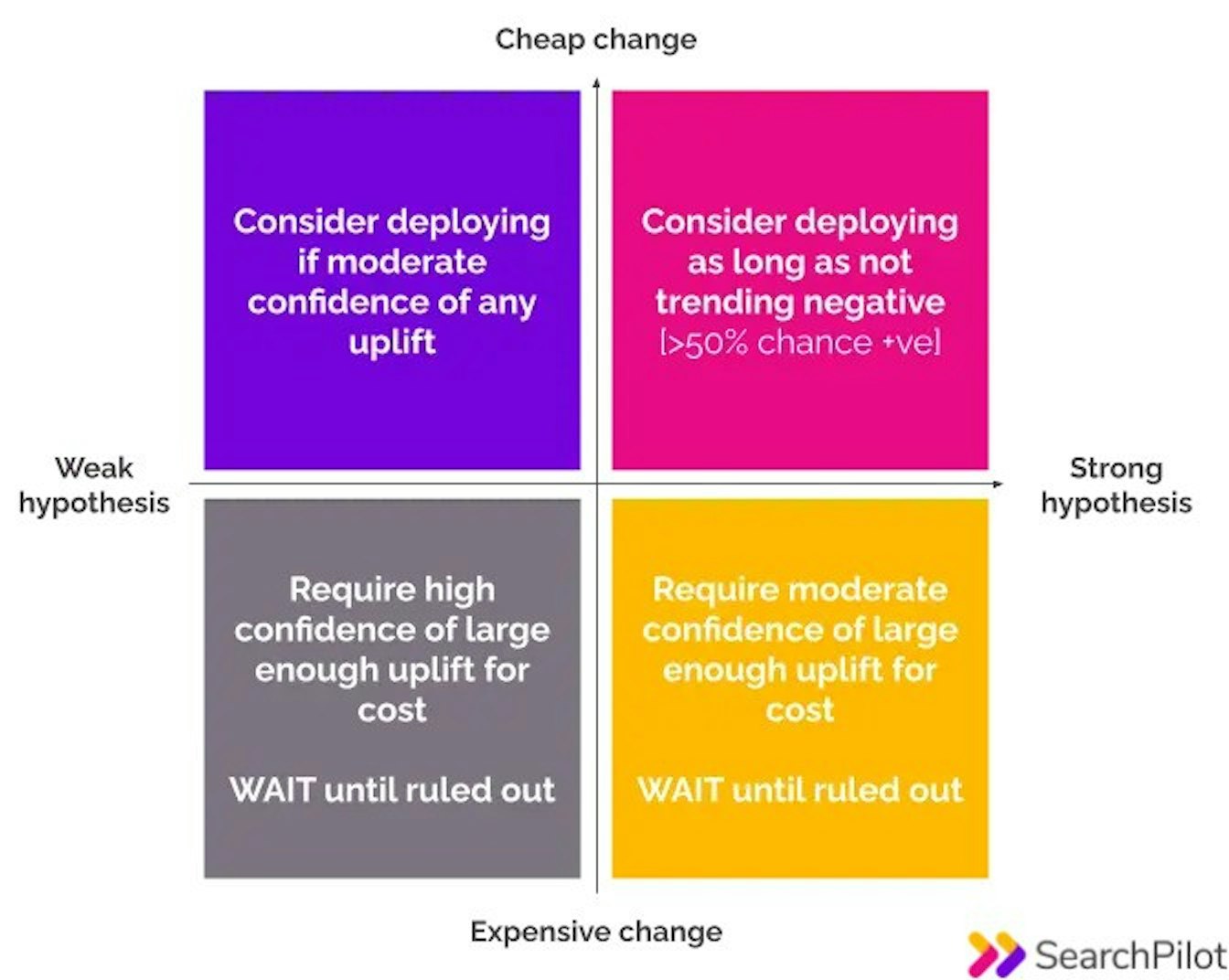

But we simplify it to, essentially, a decision quadrant. So: how strong is the result, how likely do we think the result is, and how strong was the hypothesis before we started, right? If we're just throwing stuff at the wall and seeing what sticks, we need a fairly strong result to believe it. If we had a very strong reason for thinking that this was a good idea from first principles, information retrieval theory, et cetera, then a weaker result will do. And the final variable is how hard is it to build?

So if this is a very simple change, we're literally just going, "Should we have A or B?" and they're both built, then we'll just go with the one that got the better results, even if it's only very marginal. Whereas if there's a big cost to building that out, then we'll look for a high hurdle, essentially, on that confidence interval. But we're definitely not dogmatic about saying, "This must be p equals 0.05, 95% confidence, whatever." There are things you can learn from other tests, in my opinion.
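The decision quadrant can be sketched as a lookup of rollout hurdles that rise when the prior hypothesis is weak or the change is expensive to build. The threshold values below are invented for illustration and are not SearchPilot's; the point is only that the bar moves with hypothesis strength and build cost instead of being fixed at 95% confidence.

```python
# Hypothetical hurdles: minimum probability that the change is an improvement,
# keyed by (strong prior hypothesis?, costly to build?). Illustrative values only.
HURDLES = {
    (True, False): 0.50,   # strong hypothesis, both versions already built
    (False, False): 0.60,  # "throwing stuff at the wall", but cheap to ship
    (True, True): 0.75,    # strong hypothesis, big cost to build out
    (False, True): 0.90,   # weak hypothesis and expensive: demand a lot
}


def should_roll_out(prob_better, strong_prior, costly_to_build):
    """Apply a decision-quadrant style rule instead of a fixed p < 0.05 cut-off."""
    return prob_better > HURDLES[(strong_prior, costly_to_build)]


print(should_roll_out(0.52, strong_prior=True, costly_to_build=False))  # True
print(should_roll_out(0.52, strong_prior=False, costly_to_build=True))  # False
```

The same 52% result clears the bar for a cheap change backed by a strong hypothesis, but not for an expensive change that started as a guess.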

MWC: Wow, that was ... I don't think I've ever heard that so expertly explained, so thank you for that. Trying to pull my mind back to summarise some of that: small sites doing conversion testing, go big. I think I actually first saw that advice from Conversion.com, and it was really valuable when I learned that, as well as how much data you then need to prove that something does have an impact. So, again, great advice. I love the comparison to casinos, with those small percentages making a difference, and "you're doing business, not science." Also, in the show notes at Search.WithCandour.co.uk, we'll link to that quadrant you spoke about, so people can visualize how it helps with making decisions. We're coming to the end now, so do you have any final advice for senior leaders in general when they're thinking about SEO investments?

WC: Yes, so I've been doing a lot of thinking about this. And I think we're in a fantastic situation now, actually. For as long as I've been in the industry, so 15-plus years, we've been talking about how the skills that you get as an SEO practitioner could set you up really well for marketing leadership. Because you have to understand people, data, channels, creative, analytics; it's really great training. And so back in 2005, we were all talking about how the folks we were hanging out with would one day be marketing leaders. And we're the dog that caught the car, we are, right? There are VPs of Marketing, Marketing Directors, CMOs across a whole bunch of industries whose backgrounds are in SEO, which is great.

The downside is most of them were practitioners in 2012. And what I've been trying to encourage them to learn a bit more about since then is the growth of machine learning on Google's side, so less predictability, and more need for all the stuff we just talked about, all the testing and so forth. SEO is hard, and getting harder, but just as necessary as ever, right? The organic channel is massive for a huge range of businesses. So they need to structure their investment. And I think this is probably the key thing: they need to structure their investment and their team in such a way that they can sustain it for long enough to win.

And probably the biggest mistake I see is a lot of chopping and changing of strategy: doing a bit, not doing it for long enough for it to work, stopping and doing something else. And none of those things stick. So my advice, therefore, is to build the reporting, build the expectations, build the communication to senior management and senior leadership about how long it takes and about what data is available. I talked about bringing opinions to a data fight. What SearchPilot is trying to do is help those folks bring data to those conversations.

And to be able to say, "Look, here are the hypotheses, here are the test results, here's the data that we're getting." And then the final piece, I think, loops all the way back around to where we started: the user-centric stuff, and the future of SEO being more CRO-like. So not only invest in CRO, and not only structure your initiatives and your hypotheses in similar ways, but make sure you access that data, and make sure you are being user-centric. And while I definitely believe in following the test results and the data, remember that the actual point is still impressing humans; the robots aren't buying anything. I want to be part of building better web experiences for as many people as possible, and I feel like that is what the industry has pushed towards over the years. So I guess, putting those things together, that's where I hope marketing leadership gets to: sustainable, sustained SEO investment, directed at creating great user experiences.

MWC: Will, thank you so much for joining us. What's the best way for people to connect with you, find out more about SearchPilot, have conversations with you about this kind of thing?

WC: I'm probably most accessible on Twitter, @WillCritchlow. My DMs are open and I'm pretty active there. You can also check out SearchPilot.com. That's where all of the official stuff is, in particular the case studies I mentioned that we publish every couple of weeks, and the email list that goes with them. So some combination of those things, and my email address is pretty easy to find if people want to drop me an actual email as well.

MWC: Brilliant. Will, thank you again. Really appreciate you taking the time to join us and talk to us about A/B testing, marketing leadership and SearchPilot.

WC: It's been great, thanks for having me on.

MWC: Hope you enjoyed this episode of Search with Candour with Will Critchlow from SearchPilot. We'll be back, of course, as usual in one week on Monday the 27th of September. If you aren't already, please think about subscribing to the podcast. I try my best to keep it interesting for everyone working in and around search. If you've got any suggestions of course, please let me know. Otherwise, I hope you have a wonderful week.