Episode 13: Google core update and a silent Google Ads location update

Mark Williams-Cook and Rob Lewis will be discussing the Google core update...


We give some much-demanded advice regarding the Google core algorithm update which hit recently, and further discuss some of Google's own best practices and whether they are best practices for you or for them. Finally we jump into some questions sent in from all you incredible listeners!

Show note links:

John Mueller Google webmaster hangout: https://www.youtube.com/watch?v=x0qLJRivdmY&feature=youtu.be&t=1572

Webmaster blog - 23 questions to ask about your site: https://webmasters.googleblog.com/2011/05/more-guidance-on-building-high-quality.html

Article about Genius accusing Google of stealing content: https://www.wsj.com/articles/lyrics-site-genius-com-accuses-google-of-lifting-its-content

MC: Welcome to episode 14 of the Search with Candour podcast, recorded very late on Monday the 17th of June 2019. My name is Mark Williams-Cook and I'm flying solo today. I'll be talking about the Google core algorithm update and some of the latest advice from Google on this, plus a thought piece on whether we should actually be doing exactly what Google tell us all of the time. We've also been asking you to submit your own questions to the podcast, and I’ve got a couple of your questions about SEO that I'm going to answer. Hopefully a great episode for you!

The weeks are rolling on now since Google released their June core algorithm update, and sadly there's still no hope in sight, it seems, for the Daily Mail. It was confirmed that it was their director of SEO who posted on the Google Help forums looking for some kind of feedback as to why they had lost 50% of their organic search traffic overnight and 90% of their Google Discover traffic.

I've been having a read through the feedback other experts and webmasters have been giving to the Daily Mail and, to be honest, a lot of it is not great advice. There's some really odd stuff suggesting that the Daily Mail site isn't mobile-friendly, or that they haven't included some information about the organisation in their schema, or other really specific pieces of advice. I think it's a hard stretch to say that one very small thing has caused a 50% drop in traffic in 24 hours, and it actually goes against what Google have been telling us. The definition of a broad core algorithm update is that it’s very unlikely there's going to be any one single thing you can just fix and pop back if you have had a loss in rankings. They're saying that if this has happened to you, it may just be because you weren't meant to rank there before, and other sites are now getting the credit that they deserve.

Now if you watch the Google Webmaster Hangouts you'll no doubt be aware of John Mueller, who is probably the main conduit between webmasters and Google. He is very active on Twitter, he's @JohnMu, so I highly recommend you follow him if you're not already. He’s a really nice, helpful chap who's helped a lot of people, and he also takes part in webmaster hangouts, which are basically video calls that normally last around an hour or so, where webmasters can shoot in questions and he'll do his best to give the answers he can or is allowed to. Unsurprisingly, he had a question in the last webmaster hangout about the Google core algorithm update, just asking if, as webmasters, we can have any kind of guidance on what we should be looking at - maybe which verticals are affected and where we can go if we have lost traffic. John gave an answer again explaining that a broad core algorithm update is just that: it's broad, and they're not looking at any one particular area necessarily, such as links or certain technical fixes. The advice he did give, though, was quite interesting, as he said “we can't give any specific advice” and then he gave some fairly specific advice.

He said perhaps get people who are not associated with your website to look at it and compare it to other websites. Ask them if they feel they can trust it, and specifically he said things like “does the design look outdated, what's the general feel of the site, what's the overall picture of it”, so Google is obviously measuring these kinds of trust and authority signals in many different ways. That’s why it's so difficult to translate them down into individual ranking factors, and John's absolutely right - it's an approach we take even when we're building sites. If you're currently running a website, whether you like it or not, you will be biased in your view of that site. Perhaps you don't think it's the best, but a lot of the time you're not seeing how your end users see it, which is again what Google's algorithm is trying to do. So he said to look at things like whether we can trust the site and whether it looks outdated, and one thing I got quite excited about was that John specifically mentioned: does it use stock photos, or does it have its own imagery?

It's a discussion I've had previously with other SEOs, when we've been looking at just how good Google's image recognition is. You can upload a picture of an animal and it will tell you not only that it's a dog, but it will say ‘okay, that's a black Labrador’ specifically. One of the interesting things that came up in conversation was that, as humans, it is quite easy for us to spot stock imagery. You know the ones: a really well-lit, cheesy office photo where people are gathered around the computer pointing, with cheesy smiles. We've all seen them! I was raising the point that it's probably possible for Google to identify what is or is not a stock image, not just through how often the image appears across a range of different sites but by how the image looks. If you're selling a product or explaining a service, I can't help but lean towards this: I would bet that websites probably perform better if they use their own photography rather than cheap stock photography that's not quite on point.

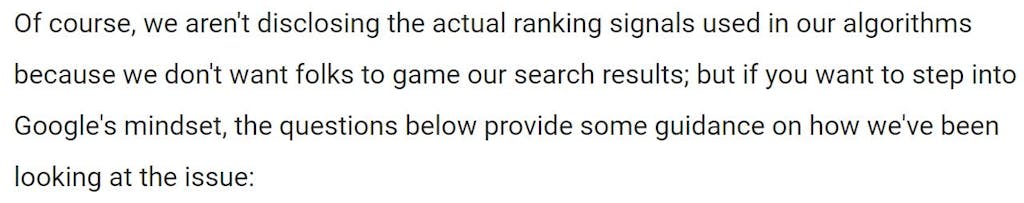

That was really interesting what John said about getting someone else to have a look at your site - can they trust it, does it look up-to-date, would they use that site over your competitors'? He also pointed us towards an older blog post by Amit Singhal, which I've linked to in the show notes, which you can get at search.withcandour.co.uk. This blog post from the Google Webmaster blog outlines 23 questions you should be asking about your website, and the blog post starts off saying:

I think it's worth just whizzing through these questions so, as you listen to this, I would be thinking about your own websites, all the websites you work on, and what the answers to these questions might be. So, the questions are:

Does the site have duplicate, overlapping, or redundant articles on the same or similar topics with slightly different keyword variations? That's quite a common one, especially as sites get older and there maybe isn't a particular content plan people are following so you get the same content written repeatedly in different ways cannibalising itself. So rather than one good article you have a dozen not so good ones.

“Would you be comfortable giving your credit card information to this site?” I think that's a really fascinating question because there are so many nuances to it. As John's pointed out, things like how professional your logo looks are going to help people make their decision on whether they trust your site enough to give it their credit card information, apart from the technical things like whether it is a secure site on HTTPS. “Does this article have spelling, stylistic, or factual errors? Are the topics driven by genuine interests of readers of the site, or does the site generate content by attempting to guess what might rank well in search engines?” So, I think this is going to be about when sites are procedurally generating questions, for instance, or, back in the day when we used to get the exact search term people used, sometimes they'd generate a page about that.

“Does the article provide original content or information, original reporting, original research or original analysis?” That's a really interesting one again. Are we just taking other people's data and reshuffling what we've already heard, or are you the actual source of that information, that analysis, that thought? “Does the page provide substantial value when compared to other pages in search results?” That's my favourite question on this whole list.

When we talk to clients about SEO, when we talk to them about content strategy, I always ask clients what it is they would like to rank for. They'll give me a search term and then we'll have a look at which page on their site should rank for that search term, and I ask them “do you genuinely think that this page is the best page on the web for this search term?” If they're honest the answer's no, and in reality you're not normally in the top 10 or top 100, so it's worth having a look at whether you actually deserve to rank there.

“How much quality control is done on the content? Does the article describe both sides of the story? Is the site a recognised authority on its topic? Is the content mass-produced by, or outsourced to, a large number of creators, or spread across a large network of sites, so that individual pages or sites don't get as much attention or care? Was the article edited well, or does it appear sloppily or hastily produced? For a health-related query, would you trust the information on this site?” So that's specifically dealing with the ‘your money or your life’ websites, where if the information is not correct it can cause actual harm.

“Would you recognize this site as an authoritative source when mentioned by name?” It's about entity recognition.

“Does this article provide a complete or comprehensive description of the topic? Does this article contain insightful analysis or interesting information that is beyond obvious? Is this the sort of page you'd bookmark, share with a friend, or recommend? Does this article have an excessive amount of ads that distract you from or interfere with the main content?” I know lots of sites that are guilty of that.

“Would you expect to see this article in a printed magazine, encyclopedia or book, are the articles short, unsubstantial, otherwise lacking in helpful specifics, are the pages produced with great care and attention to detail vs. less attention to detail?” and finally “would users complain when they see pages from this site?” So, that's quite a big list for you to go through and I'd be surprised if anyone listening to this could hand on heart answer ‘yes’ to all of them. The post ends off by saying:

John Mueller did say they will look at maybe doing an updated version of this in context to the latest updates but I think again it highlights the futility in 2019 of trying to chase individual ranking factors, because to cover those questions there's hundreds of elements that are going to be related to each one of these questions and how they interact together.

So the Daily Mail had 50% of its traffic gone overnight, other sites have gained, other sites have lost. There may have been a business case at some time to contravene some of these guidelines, and the one that sticks out is the one about adverts. Are there excessive ads that distract you from the content? Yes there are, but these are what pay the bills. This is what's driving revenue, this is how we’re hiring people, this is how we're producing more content, and look, we're ranking really well. So it's really easy to see how you can get caught in this trap of looking at the metrics of what's working for your business and just saying “well okay, Google's telling us to do this and you're telling us to do this as an SEO, but actually we're ranking really well and we're making lots of money, so we're fine how we are, thank you very much.”

The point here is it's not always going to be that way. The only safe long-term strategy is to align yourself with Google, and this may be what's happening with this broad core algorithm update: people who have previously ranked well haven't been doing a bad job, and there's nothing awfully wrong, but they're not going with the spirit of these 23 questions.

Google's now pushed a broader algorithm update because they found with a combination of factors across the board, they can better match these questions, that's what's happened which means it isn't necessarily going to be a quick fix for everyone.

While we're talking about Google's advice, I thought it might be interesting, just from an SEO point of view, to ask the question “should we be doing what Google says all of the time?” It's a line of thought which always does cross my mind. Like many SEOs who have been doing SEO for a long time, I've done black-hat SEO, I've done white-hat SEO, and there is a realisation that Google's rules are just Google's rules at the end of the day. You don't have to follow them; they're not the law and you can do as you wish, but you have to be aware that if you don't follow Google's game-plan they also have the right to kick you into touch. The thing that got me thinking about this again recently was a case from Genius, which is one of the websites that hosts a large database of song lyrics, so you google a song and they'll provide you with the lyrics. They've had a case going back and forth with Google for a few years now where they've been claiming that Google are just copying their lyrics straight out into the search results.

Ben Gross, who is Genius’ Chief Strategy Officer, said “over the last two years we've shown Google irrefutable evidence again and again that they are displaying lyrics copied from Genius”. I had a little look at this story and it was really quite clever what they did. Genius are hosting these song lyrics, and what they did was switch between the two different types of apostrophe in their song lyrics. That gives them a watermark, a footprint, so they can tell if the lyrics appearing directly in Google have come from their site, because they'll have this matching pattern of different types of apostrophe. To take it one step further, Genius arranged these different types of apostrophe so that if you convert them to dots and dashes, in Morse code they read as ‘red-handed’, to prove that Google had actually taken this data directly from their site. Genius said it can provide more than 100 examples of Google doing this. I saw a thread about this on Twitter, and another company that I wasn't aware of at the time jumped in on the thread: Celebrity Net Worth, which was founded by a chap named Brian Warner. He explains he started the Celebrity Net Worth website after trying to Google how much some celebrities are worth and realising there wasn't actually a good source of information for that. So, he did what any good person would do - he went and made a website about it. It became one of the biggest databases of celebrity net worth information on the web, and he hired people to do good research to make sure his data was solid.
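To make the apostrophe watermark that Genius used a bit more concrete, here is a minimal Python sketch of the idea. It is not Genius's actual implementation: which apostrophe stands for a dot and which for a dash is an assumption made here, and the function names are invented for illustration. It simply checks whether the sequence of apostrophes in a text starts with the Morse pattern for a chosen word.

```python
# Hypothetical sketch of an apostrophe watermark (not Genius's real code).
# Assumption: straight apostrophe = dot, curly apostrophe = dash.
STRAIGHT = "'"
CURLY = "\u2019"

# Morse code for just the letters needed to spell REDHANDED.
MORSE = {"A": ".-", "D": "-..", "E": ".", "H": "....", "N": "-.", "R": ".-."}


def watermark_pattern(word):
    """Return the dots and dashes for `word`, run together without gaps."""
    return "".join(MORSE[letter] for letter in word.upper())


def apply_watermark(lyrics, pattern):
    """Swap each apostrophe in turn so the sequence spells out `pattern`."""
    out = []
    position = 0
    for char in lyrics:
        if char in (STRAIGHT, CURLY) and position < len(pattern):
            out.append(STRAIGHT if pattern[position] == "." else CURLY)
            position += 1
        else:
            out.append(char)
    return "".join(out)


def extract_pattern(text):
    """Read the apostrophes in `text` back as dots and dashes."""
    symbols = {STRAIGHT: ".", CURLY: "-"}
    return "".join(symbols[char] for char in text if char in symbols)


def carries_watermark(text, word):
    """True if the apostrophes in `text` begin with the pattern for `word`."""
    return extract_pattern(text).startswith(watermark_pattern(word))


if __name__ == "__main__":
    lyrics = "don't stop " * 22  # made-up text with enough apostrophes
    marked = apply_watermark(lyrics, watermark_pattern("REDHANDED"))
    print(carries_watermark(marked, "REDHANDED"))
    print(carries_watermark(lyrics, "REDHANDED"))
```

The point of the design is that the two apostrophes look almost identical to a reader, so the watermark survives a copy and paste without anyone noticing, but it is trivial to check for programmatically.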

His tweet says that in 2014 Google asked Celebrity Net Worth for free access to their API. An API is an application programming interface, basically a programmatic way for Google to access your data, and Brian said that they declined to do that. They didn't want to give Google direct access; however, it did appear that after that Google started to take the information from their site anyway. So what Brian and his team did was publish ten net worth pages for conjured-up fake celebrities, with fake names that wouldn't appear anywhere else on the web, and he found that by early to mid-2015 all of their net worth information, including the fictional pages, had been scraped from their site and was appearing in Google with no attribution. There's a link to this story in the show notes, along with the Wall Street Journal story on Genius as well, so you can read more about that. These just prompted my thoughts again on “should we be just following Google's advice blindly?” I've also embedded in the show notes a really nice chart by a fantastic SEO called Lily Ray. She published this in a tweet and it's a screenshot of the Google Search Console graph that shows total clicks and total impressions, and she says:

The unintended consequence of adding FAQ schema. It looks so pretty in the SERP... but where did all our traffic go? 🙄#seo #structureddata #schema #google pic.twitter.com/yy93nGo6m8

— Lily Ray (@lilyraynyc) 13 June 2019

She showed that essentially as soon as they added the FAQ schema and the FAQ results came up, impressions went up, meaning that her data's being seen more and more in Google; however, the total clicks have died off, and over the period of the graph she's published we're talking almost 16,000 clicks. So, it's a fair amount. Now there are two sides to this story. Someone else has posted a really good example of comparethemarket on the search term ‘car insurance’, where they rank second and have these FAQ boxes as well as their organic listing. So, the FAQ boxes just add to their listing, and what that's done is push their competitor further down the page. There are great examples as well of having FAQ schema on conversion-type pages, where the user has to click through anyway.
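For anyone who hasn't seen what FAQ schema actually looks like, here is a rough Python sketch that builds the JSON-LD for a page's questions and answers using the schema.org `FAQPage`, `Question` and `Answer` types. The function names and the sample question are made up for illustration, and this only covers the basic shape of the mark-up, not every property Google may look at.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage structure from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def faq_script_tag(pairs):
    """Wrap the JSON-LD in the script tag that would go in the page's HTML."""
    payload = json.dumps(faq_jsonld(pairs), indent=2)
    # Escape "</" so answer text can never close the script tag early.
    payload = payload.replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


if __name__ == "__main__":
    # Hypothetical example content.
    print(faq_script_tag([
        ("Do you deliver outside the UK?", "Yes, we deliver across Europe."),
    ]))
```

Because the mark-up is generated from a plain list of questions and answers, it is easy to switch on or off per page template, which is handy if you want to test its effect on clicks before rolling it out everywhere.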

But it did raise this conversation again: Google are asking us to do all of these things, asking us to add schema in all situations so that they can push forward, give us access to things like Google Home and give users the correct answers quickly, but we have to realise that everything Google is doing, they are doing for their own interest, for their own bottom line. Some of the replies to this thread from other SEOs were along the lines of: well of course, what do you think is going to happen if you follow Google's advice blindly? Why would you even bother hiring an SEO if that's all you're going to do?

It does raise the point that this is, in some ways, the job of an SEO: while they're aware of best practice, it's knowing the very fine line between what you can do to hold on to your profit margins and when you will actually need to fall in line. As I said earlier, I believe the only long-term strategy, if you want to do well in Google at least, is to fall in line with Google and their plan, but there are ways you can diverge from that as you go along and protect your business, as Lily's pointed out here.

She's going to be removing the FAQ schema in this instance on these pages because it's damaging their business, but there may come a point in the future where she wishes to re-add it, so I think it's a really interesting conversation to have with your marketing and SEO team.

Time is flying on and we have some Q&A from our very lovely listeners. I asked you if you had any questions about SEO to message them to me on LinkedIn, or another easy way is where we are @candouragency on Twitter feel free to message us, DM us with questions for the podcast and I will try and answer them for you.

So here are the first two questions I'm going to answer for you. The first one is from Orion Mendes and she asks:

“Does the IP address (location) affect searching ranking? Hypothetically a US-based website vs. UK website, same everything else… Which would perform better?”

So, Orion’s basically asking whether the geographic location of where your website is hosted affects its performance in or outside that country, and that's a really interesting question, basically because I haven't had to think about it for quite a while. We live in a world now where we have services like Cloudflare and content distribution networks that allow us, in many cases for free, to deliver our website from the nearest geographical place to our user, so it's quite rare now. Well, I wouldn't say it's rare, but it's a lot less likely that you're going to be dealing with these kinds of issues. I put it out on Twitter to some of my other SEO friends for their opinion, and the poll came back really mixed - almost 50/50 between yes, it does matter, and no, it doesn't matter.

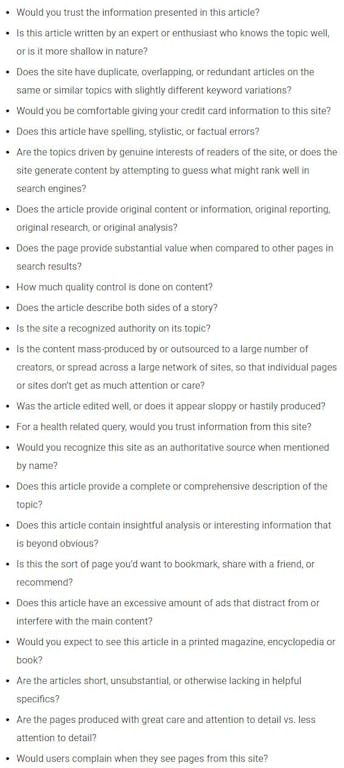

So, I thought it was worth doing a little bit more research and seeing what the latest, most up-to-date information was. Now, many people will refer back to the very friendly Matt Cutts, who helped us with SEO back in 2013 when he posted a YouTube video saying that in an ideal world it would be great if you could have a separate country-specific IP address for each of your different top-level domains. So a UK server if you’re on .co.uk, a German server on .de etc. John Mueller answered a similar question saying (below):

So, this is one issue I just want to set aside for now, basically because that's a performance question. We know Google likes fast, well-performing websites, so for the hypothetical of this situation we're going to say that whether our website is hosted in the US or the UK, it performs the same. The speed is the same, so that's not a deciding factor here. John goes on to say:

In case you haven't heard of those terms, ccTLD and gTLD: a ccTLD is a country-code top-level domain, for instance .fr or .co.uk. A gTLD is a generic top-level domain, one that doesn't specify a particular country, like .com for example. From this I would say the answer we've got is that performance is the most important thing. If you're not using a content distribution network and you've only got servers in a different country, then there's going to be an inescapable performance difference there, and performance does matter, so you need to have your website being served by a server that's close to your user.
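As a quick illustration of the ccTLD and gTLD distinction, here is a small Python sketch that classifies a hostname by its last label. It leans on the convention that two-letter top-level domains are country codes; the function name is made up, and it deliberately ignores internationalised country-code domains and the fact that search engines may choose to treat some country codes as generic.

```python
def tld_kind(hostname):
    """Classify a hostname as 'ccTLD' or 'gTLD' from its top-level domain.

    Simplification: any two-letter ASCII TLD is treated as a country code,
    and everything else as generic.
    """
    tld = hostname.rstrip(".").rsplit(".", 1)[-1].lower()
    if len(tld) == 2 and tld.isascii() and tld.isalpha():
        return "ccTLD"
    return "gTLD"


if __name__ == "__main__":
    # Hypothetical hostnames, purely for illustration.
    for host in ("www.example.co.uk", "example.fr", "example.de", "example.com"):
        print(host, tld_kind(host))
```

A check like this is only a first pass for auditing a list of domains; the location signals that matter to Google are the ones discussed in this episode, such as the domain itself and the country setting in Search Console.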

The easiest way to do that is normally with a content distribution network, or CDN, such as Cloudflare - that's a really cheap, fast, easy way to achieve it. Aside from that, it basically isn't that important, because there are so many ways now for you to tell Google, whether it's through a country-code top-level domain, through location mark-up, or through Google Search Console, where you can specify the country, and everyone should have Google Search Console if they're doing SEO. So there are lots of ways you can tell Google if there's location intent to a site.

What John's saying here is if you're doing that then you don't need to host your website in any specific geographic location. If there was the very odd scenario where the performance was equal but you had a generic top-level domain, you had no mark-up location, no search console location, no other way for Google to tell then yes it might be a very small ranking factor, but for all intents and purposes I'd say no.

The second and last question we can answer on this episode is from Caleb Rule and he says:

“I am curious, off the top of my head if there is any discernible SEO benefit to UX improvements like using anchor text to allow people to jump to a section in a page?”

That's quite a broad question there, if we look at it in two parts I'll start with “is there any discernible SEO benefit to UX improvements?” I think I've probably covered that really well earlier in the podcast where we've talked about people trusting a website, comparing it to another website - does it feel right, there are so many ways Google is trying to measure this user experience. How they feel about a site, that I would say all of these combined is what's going to help you with your SEO.

I mean, UX improvements should be done anyway because they improve user experience; the SEO benefit is going to be like a side-win for you if you get it. You should be doing them anyway, and it's always going to be quite hard to measure and to quantify. I would say that we've been told by Google that if you make improvements to your site, whether it's significant speed improvements or whatever, it's usually going to take months before Google comes around and rewards you for those changes. It's not like you speed your site up and two days later you're rewarded; they want to see these changes consistently over time, and various ones have to bed in with the link graph as well. So yes, they're worth doing, and yes, they'll help you with SEO. Whether they're going to be discernible, and whether you're going to be able to say “I did X and Y came out”, is very unlikely, no.

In the specific case you've asked about here, using anchor text to allow people to jump to a section in a page: for the listeners, if you don't know, that's when you click on a link on a page and it jumps you down to a specific part of the page. What I would say about this is that those links work by having the hash symbol (#) in the URL, and Google will basically ignore everything after a hash in a URL. So from a technical point of view it's essentially like you're just linking back to your own page. We're going to fall back on PageRank here and how internal links work, in that anchor text is great. It helps users understand the content of the page being linked to and it'll help search engines do the same, so if it's helpful to the user I would say do it.
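To show what “everything after the hash” means in practice, here is a short Python sketch using the standard library's `urllib.parse.urldefrag`, which splits a URL into the page address and the fragment. The example URLs and the `same_page` helper are made up; the sketch only demonstrates that two jump links resolve to the same underlying page URL, and says nothing about how Google processes them internally.

```python
from urllib.parse import urldefrag


def same_page(url_a, url_b):
    """True if two URLs point at the same page once fragments are dropped."""
    return urldefrag(url_a).url == urldefrag(url_b).url


if __name__ == "__main__":
    # Hypothetical jump links to two sections of one guide.
    pricing = "https://example.com/guide#pricing"
    faq = "https://example.com/guide#faq"

    page, fragment = urldefrag(pricing)
    print(page)      # the part that identifies the page
    print(fragment)  # the part the browser uses to scroll to a section
    print(same_page(pricing, faq))
```

The fragment is handled by the browser rather than sent to the server, which is why links that differ only after the hash are effectively links to one and the same page.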

Yes, there's certainly going to be some fringe benefit to linking to the same URL with varied anchor text, maybe to give search engines a better understanding of the questions that are answered on that page. As we've talked about earlier in this episode, it might definitely be worth investigating whether you can use schema to mark up the information as well, but yes, it will help.

Okay, that's all we've got time for in this episode. You will hear from me again on Monday the 24th of June 2019. If you do have any questions that you'd like to submit to the podcast yourself, please do so; you can tweet them to me @candouragency. I hope you have a great week!
