11.08.2020

32 min listen

With Mark Williams-Cook

Season 1 Episode 73

Episode 73: GSC API changes, quality rater update and what is not a ranking factor

In this episode, you will hear Mark Williams-Cook talking about GSC API updates: if you're using the Search Console API, Google is making changes you need to be aware of; quality rater information: Google has published an up-to-date guide on their search quality raters; and what is not a ranking factor: Barry Schwartz has published a cited list of ranking factors Google has said they do not use.

01

Show notes

Infrastructure changes to the Search Console API https://webmasters.googleblog.com/2020/08/search-console-api-announcements.html

New search quality rater information from Google https://blog.google/products/search/raters-experiments-improve-google-search/

Myth busting SEO ranking factors https://searchengineland.com/seo-myth-busting-what-is-not-a-google-search-ranking-factor-338741

02

Transcription

MC: Welcome to episode 73 of the Search with Candour podcast, recorded on Friday the 7th of August 2020. My name is Mark Williams-Cook and today we're going to be talking about the infrastructure changes to the Search Console API and who might be affected by those, we'll talk a little bit about the new search quality rater information that we've seen published from Google and what that means, and in a related theme, we're going to be talking about myth busting SEO ranking factors.

This piece of news probably only affects a small percentage of our listeners, but for those that are using the Search Console API, hopefully you already know about this announcement. It was only made yesterday at the time of recording, on the 6th of August, but it's pretty important to be aware of. So again, as usual, I will link to the Google announcement in our show notes, which you can find at search.withcandour.co.uk. This blog post talks through three things that are going to be changing with the Search Console API - two of them it looks like you might need to take action for, one it seems unlikely.

So if you're not someone that's querying the Search Console API yourself, or you don't look after a tool that does that, then you don't need to worry about this bit; it can be purely of interest for you. If you are querying the API or doing stuff with your own data with it, maybe for something like a WordPress plugin, there are three changes you need to be aware of. So this is what the blog post says about the three changes. Number one is changes on the Google Cloud Platform dashboard, and on this it says we're going to see a drop in the old API usage report and an increase in the new one - that doesn't actually seem to directly impact anyone in terms of needing to do anything, but for the next two changes you may well need to change something at your end. The second one is API key restriction changes, so if you've previously set up API key restrictions, you might need to actually change them to make them continue working. I’ll talk a little bit more about that in a second. And their third point is discovery document changes, where they say, if you're querying the API using a third-party API library, or querying the Webmasters discovery document directly, you will need to update what you're doing by the end of the year. There is a note that, other than these changes, the API is backwards compatible, so this means as long as your API key stuff is sorted and the third-party API querying is sorted, nothing, fingers crossed, should break.

So I'm going to skip over the first one, as it just seems to be a reporting thing, but the API key restriction and discovery document changes I'll quickly go over now and tell you what they have to say. On the API key restriction changes, the blog post says: as mentioned in the introduction, these instructions are important only if you query the data yourself or provide a tool that does this for your users. To check if you have an API restriction active on your API key - they give you a link to follow some steps - but basically you need to make sure that your Search Console API key is not restricted. If you have a restriction on your API key, you need to undo that and make it unrestricted by August the 31st - so that's only actually three weeks' time, so not very long to change that. It also says, in order to allow your API calls to be migrated automatically to the new API infrastructure, you need to make sure that the API is not restricted - so that's the reason essentially for this. So, previously you could lock down your API calls to be restricted to certain processors that you define and, I guess, Google wants to run this update to upgrade everything for you, but they can't do that if you've set your key as restricted. So they give you a guide on how to do that, which we'll link to. The second is the discovery document changes; I've read through this and it's not crystal clear to me what's happening here. They say, if you're querying the Search Console API using an external API library, or querying the Webmasters API discovery document directly, you will need to take action, as we'll drop support for the Webmasters discovery document. Our current plan is to support it until the 31st of December 2020, but we'll provide more details and guidance in the coming months. So it looks like they are dropping support on some external API libraries, although that's not a hundred percent clear.
So very much worth keeping an eye on that and any future announcements and guidance they do give as to how you need to now access the Search Console API.

These next two segments are kind of related and they're quite interesting. This week, on the 4th of August, which was Tuesday, Danny Sullivan made a post on blog.google called ‘How insights from people around the world make Google search better’, and of particular interest in this post was what he said about search quality raters. There's nothing particularly new in here, but I strongly suspect this clarification is coming up now because, as in our last episode even, we were talking about Google search ranking factors and how they use data from users and click data, especially with the commission hearing that's been happening about online marketplace dominance. I think Google's just trying to solidify their position about what they actually do and what data they use, and it's good to have an up-to-date source stating this, I think, fairly clearly. So I'm just going to read this verbatim, as I think it's important: as with anything Google publishes, there are several ways you could interpret this, and I'll give you my interpretation along with the next segment that's related to this.

So the post says: every Google search you do is one of billions we receive that day. In less than half a second, our systems sort through hundreds of billions of web pages to try and find the most relevant and helpful results available. Because the web and people's information needs keep changing, we make a lot of improvements to our search algorithms to keep up. Thousands per year, in fact, and we're always working on new ways to make our results more helpful, whether it's a new feature or bringing new language understanding capabilities to search - and that's linked to their upgrade using BERT that we covered before. The improvements we make go through an evaluation process designed so that people around the world continue to find Google useful for whatever they're looking for. Here are some ways that insights and feedback from people around the world help make search better. So there's a couple of sections here; I'll go through the three sections.

So the first section is ‘Our research team at work’: changes that we make to search are aimed at making it easier for people to find useful information, but depending on their interests, what language they speak, and where they are in the world, different people have different information needs. It's our mission to make information universally accessible and useful and we're committed to serving all of our users in pursuit of that goal. This is why we have a research team whose job is to talk to people around the world to understand how search can be more useful. We invite people to give us feedback on different iterations of our projects, and we do field research to understand how people in different communities access information online. For example, we learned over the years about the unique needs and technical limitations that people in emerging markets have when accessing information online. So we developed Google Go, a lightweight search app that works well with less powerful phones and less reliable connections. On Google Go we've also introduced uniquely helpful features, including one that lets you listen to web pages out loud, which is particularly useful for people learning a new language or who may be less comfortable with reading long text. Features like these would not be possible without insights from the people who ultimately use them.

So I think that's fairly outside of SEO in a way, and I don't think it should be unexpected to anyone that Google obviously does this qualitative research, kind of on the ground, with different users. It's certainly something we've seen: they've linked to the work they did with BERT and understanding queries, and it's always interesting that English is still the primary language they work with, so a lot of these new search features happen in English first, you get better results in English, the systems are trained on English, and then it slowly rolls out to other languages.

So it's this second part of the document I'm particularly interested in, because this is talking specifically about search quality raters. What they say about search quality raters is: a key part of our evaluation process is getting feedback from everyday users about whether our ranking systems and proposed improvements are working well, but what do we mean by working well? We publish publicly available rater guidelines that describe, in great detail, how our systems intend to surface great content. These guidelines are more than 160 pages long, but if we have to boil it down to just a phrase, we like to say that search is designed to return relevant results from the most reliable sources available. Our systems use signals from the web itself, like where words in your search appear on web pages or how pages link to one another on the web - so they're talking just about their kind of text analysis there, and PageRank and the other systems they've got for analysing how sites link together - to understand what information is related to your query and whether it's information that people tend to trust, but notions of relevance and trustworthiness are ultimately human judgments.

So to measure whether our systems are in fact understanding these correctly, we need to gather insights from people. And I think that's a really interesting point to make, and for marketers, for web content writers, for SEOs to think about, which is that this notion of what is relevant, what is trustworthy, and what is a good result is subjective; it will change from person to person. So you could ask 100 people if they think a particular search result is good and you may get different answers from all of them, so it's very difficult for them to train or build a search system that has the “correct ranking” when, you know, humans themselves can't even agree. And this is what I spoke about in the last episode: it didn't particularly surprise me to hear people talking about this search quality rater data being used to train this system, in that, if lots of people say this set of pages is good for a particular reason, you've got models that can then work out what the commonalities are between these pages that weren't being considered before.

So they go on and say: to do this, we have a group of more than 10,000 people all over the world we call search quality raters. Raters help us measure how people are likely to experience our results. They provide ratings based on our guidelines and represent real users and their likely information needs, using their best judgment to represent their locale. These people study and are tested on our rater guidelines before they can begin providing ratings. And then they go on to how ratings work. Here's how a rater task works: we generate a sample of queries, say a few hundred. A group of raters will be assigned this set of queries and they're shown two versions of results pages for those searches. One set of results is from the current version of Google, the other set is from an improvement we're considering. Raters review every page listed in the result set and evaluate that page against the query, based on our rater guidelines. They evaluate whether those pages meet the information needs based on their understanding of what the query was seeking, and they consider things like how authoritative and how trustworthy that source seems to be on the topic in the query. To evaluate things like expertise, authoritativeness, and trustworthiness, sometimes referred to as E-A-T - ‘eat’ - raters are asked to do reputational research on the sources. Here's what that looks like in practice: imagine the sample query is carrot cake recipe. The results set may include articles from recipe sites, food magazines, food brands and perhaps blogs. To determine if a web page meets their informational needs, a rater might consider how easy the cooking instructions are to understand, how helpful the recipe is in terms of visual instructions and imagery, and whether there are other useful features on the site like a shopping list creator or calculator for recipe doubling.
To understand if the author has subject matter expertise, a rater would do some online research to see if the author has cooking credentials, has been profiled or referenced on food websites, or has produced other great content that has garnered positive reviews or ratings on recipe sites. Basically they do some digging to answer questions like, is this page trustworthy? And does it come from a site or author with a good reputation?

And here's the key thing that I think gets misunderstood, so they've titled the section ‘Ratings are not used directly for search ranking’. Once raters have done this research, they then provide a quality rating for each page. It's important to know that this rating does not directly impact how this page or site ranks in search. Nobody is deciding that any given source is “authoritative or trustworthy”. In particular, pages are not assigned ratings as a way to determine how well to rank them. Indeed, that would be an impossible task and a poor signal for us to use. With hundreds of billions of pages that are constantly changing, there's no way humans could evaluate every page on a recurring basis. Instead, ratings are a data point that, when taken in aggregate, helps us measure how well our systems are working to deliver great content that's aligned to how people, across the country and across the world, evaluate information. And they go on to say, last year alone we did more than 383,605 search quality tests and 62,930 side-by-side experiments with our search quality raters to measure the quality of our results, and help us make more than 3,600 improvements to our search algorithms. So I think that's obviously always interesting as well. People talk about, oh, has this Google update happened, has that Google update happened. You know, as an industry we're referring to what we call core ranking updates, which are essentially noticeable big shifts in search that maybe target specific pain points, but actually in the background this is averaging out at around 10 changes to the algorithm per day, and all of those incremental changes, over the period of the year, are likely to slowly change search quite drastically.
So it does become harder as well to get a grip on whether something is changing: if your competitors start outranking you, or you start ranking better, was that actually something you did, or is it just that the algorithms have adapted and now you're getting credit where it's finally due?
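As a rough check on the "10 changes per day" figure mentioned above, here is a minimal sketch of the arithmetic. The variable names are illustrative, and the input is the rounded "more than 3,600 improvements" number as quoted in the episode, so treat the result as approximate.

```python
# Rounded figure as quoted in the episode ("more than 3,600 improvements").
improvements_per_year = 3600
days_per_year = 365

# Average number of algorithm changes per calendar day.
changes_per_day = improvements_per_year / days_per_year

print(f"{changes_per_day:.1f} changes per day on average")
```

So the quoted yearly total does work out at just under 10 changes a day, assuming the changes were spread evenly across the year, which they almost certainly were not.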

Lastly they talk about in-product experiments, and they say: our research and rater feedback isn't the only feedback we use when making improvements. We also need to understand how a new feature will work when it's actually available in search and people are using it as they would in real life. To make sure we're able to get these insights, we test how people interact with new features through live experiments. They're called live experiments because they're actually available to a small proportion of randomly selected people, using the current version of search. So just last month we were seeing some live experiments that people were reporting on, where the first-place Google Ad and the first-place organic ranking were both using a much larger font size. They go on to say: did people click or tap on the new feature? Did most people just scroll past it? Did it make the page slower? These insights are helpful to us to understand quite a bit about whether a new feature or change is helpful and if people will actually use it.

So this is again another way that Google is saying, and has always said, look, we are using user data, click data, for search quality. They're talking about this in live experiments; they're saying, look, we use how people interact with these different features and layouts and ratings and whatever to see if it's working. And they go on to say, in 2019 we ran more than 17,000 live experiments to test out new features and improvements to search. If you compare that to how many launches actually happened, around 3,600, you can see that only the best and most useful improvements make it into search.
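To put those two numbers side by side, here is a minimal sketch of the comparison the post invites. The variable names are illustrative, both inputs are the rounded figures quoted above, and it assumes launches and live experiments can be compared directly, which the post implies but does not spell out.

```python
# Rounded figures as quoted in the episode.
live_experiments = 17000   # "more than 17,000 live experiments"
launches = 3600            # "around 3,600" launches

# Launches as a share of live experiments; this is only a rough ratio,
# since not every launch necessarily came from a live experiment.
launch_share = launches / live_experiments

print(f"Launches are roughly {launch_share:.0%} of live experiments")
```

On those rounded numbers, launches come to roughly a fifth of the live experiments run.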

So again, hopefully this shouldn't be anything new, or kind of groundbreaking, for anyone, but the key points I think to take away from this are that, yes, Google is still obviously using, as they have done now for years and years and years, these search quality raters. It does seem like that is now a very tried and tested way for them to improve their search quality and maybe look at ways to use their internal tooling to make their algorithm better, based on that labeled data. I think it's particularly interesting how they've broken it down in this post, saying that with their rater guidelines they do things like rate how good the page information is, and then they look at whether the author is trustworthy. So they seem to have almost broken it down - maybe there's this part of the algorithm that does this, and this part that does this, and they're getting humans to label data within those categories, so maybe they could feed that back to those parts of the system to see how they're performing and where the gaps are emerging or aren't.

You know, not surprised that user or internal data is being used to improve search quality. Same with the live experiments, you know they're saying yeah we're using click data, we're using things like load times to judge if these are working and the takeaway is that these changes are small and happening all the time. It's not like we're just getting one or two Google updates per year and everything else is just changing because of SEO and website activity.

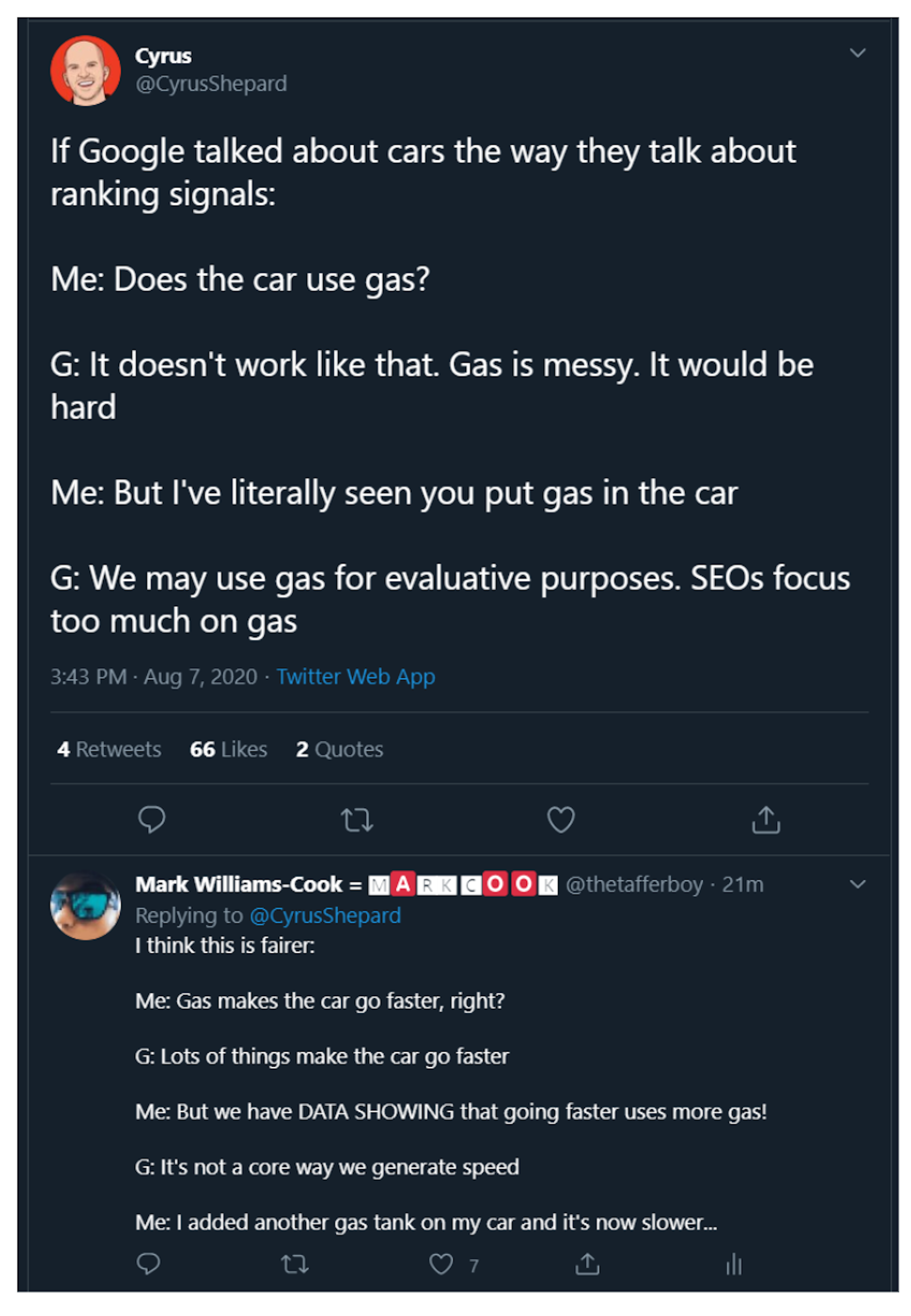

So off the back of all this, I want to finish on a segment that's basically kind of related, and I want to start with a tweet I saw from Cyrus Shepard that said, ‘if Google talked about cars the way they talk about ranking signals,’ and then he's put a conversation between ‘me’, as in him, and Google. So he says, me: does the car use gas? Google: it doesn't work like that, gas is messy - it would be hard. Me: but I've literally seen you put gas in the car. Google: we may use gas for evaluative purposes, SEOs focus too much on gas. And, you know, I understand this is a joke and this is Cyrus's way of saying that Google can talk in a very roundabout way about how they are using certain things within search. I probably am defending Google more recently than I ever have before, and not because I think Google's super straightforward about everything. They're certainly not, and as I said before, it's definitely in their interest for the wider population not to know how certain things are working.

MC: I've put together my own version of this, which I think is fairer. So my conversation, if Google talked about cars the way they talk about ranking signals, would be… me: gas makes the car go faster, right? Google: lots of things make a car go faster. Me: but we have data showing that going faster uses more gas. Google: it's not a core way we generate speed. Me: well, I've added another gas tank on my car and now it's slower. And I think, hopefully, this example highlights the point, because maybe we understand cars a bit better, or some of us do. Maybe not me, I probably know more about Google than I do about cars, but hopefully in general people will know more about the workings of cars than Google's algorithm. And I think it's interesting, maybe as a thought experiment, to sometimes see how we get the wrong end of the stick. So obviously if you whack your foot down on the accelerator, your car's going to guzzle more petrol, or gas, depending on whether you’re in the States or in the UK. As SEOs we kind of see those factors from the outside: there are certain things we can observe, and someone might do one of their correlation reports and say, hey look, we've worked out that all these cars that are traveling fast are using more gas. Because we don't know anything else about the workings of the car, we can then come to the conclusion that we just need to put more gas in and it will go faster. But obviously, knowing about cars, we can think it's got to do with engine size, and it's got to do with things like the aerodynamics of the car, whether we're driving uphill or downhill or off-road, and the weight of the car - so there's lots of things that do contribute to the speed of the car.
And I think, sometimes, this is why Google is trying to carefully word what they say, because it can be very easy for a majority of SEOs - and it is a majority, because it's still a fairly young discipline compared to other types of marketing - to end up doing things that are really not helpful for them.

So if Google, in this example, said, well yes, you know, faster cars use more gas, then you would have people doing the equivalent of sellotaping a second and third gas tank onto their car, thinking it's going to make them go faster, and obviously this is a complete waste of time for everyone.

So I just wanted to share that with you, because it did kind of tickle me, and it brings me on to a Search Engine Land post that Barry Schwartz put together, which is called ‘SEO myth busting - what is not a Google search ranking factor’. I'll link to this again in the show notes at search.withcandour.co.uk. I wanted to bring it up because I think it's a nice compilation of all of the things that Google says it doesn't use as signals when it's trying to work out where to rank your site in organic search. So I'm just going to read through this list, and then I'll talk a little bit more about it.

So here is the list that Barry's put together of signals that Google have said at some point are not direct ranking signals in its search algorithms. We start with search quality rater guidelines ratings, as we just spoke about, then: Google Ads, social media mentions or likes, click-through rates from search results, pogo-sticking back to the search results page, dwell time on a page, bounce rates, user engagement data on your web pages, user behavior, Chrome data outside of Core Web Vitals, Google Analytics data, toolbar data, traffic on a website, shopping cart abandonment, E-A-T (that's expertise, authoritativeness and trustworthiness, which we just spoke about), responsive design, AMP, content accuracy, author bios, structured data markup, word count, outbound links, product prices, URL length, accessibility, star ratings and reviews, Better Business Bureau, trust organizations and badges, domain age, 3D and augmented reality images, email newsletter sign-ups, Google +1s, real-life user signals, higher page counts, content frequency, Moz Domain Authority.

So that's the big list. Now, I think most of these should be fairly obvious and straightforward; some - especially when it comes to things like click-through rates and dwell times - are debated a lot more hotly than others. The only thing I think is helpful to frame this whole conversation is that I have always noticed when Google talks about these things, and I may be wrong on this but it's just something I've noticed, they've always talked about their core search algorithm. I did speak about this many episodes ago now, when there was that dump of internal Google ranking documents, and it mentioned things like Google's twiddler framework, as they called it. If you haven't heard about that, essentially this twiddler framework was a way for you to add extra bits of code, if you like - I'm sure the Google engineers, if they did listen to this, would be cringing - but it looked like this twiddler framework was a way for you essentially to add code on top of the core algorithm to fine-tune results.

So certainly, when certain results have achieved a much higher click-through rate than usual, or lots of clicks, we've seen temporary ranking shifts. This is an example I use because it does make logical sense: if a particular subject or company is mentioned in the news, for instance, and lots of people search around this, it does change the search intent, and it's a good idea that the search engine should change the results it's showing to match this. All of the studies I've seen with click-through rate seem to show temporary effects, in that yes, I've seen websites change ranking, sometimes it looks like because they've received a lot more clicks than they normally would have, but that never seems to last from the experiments I've seen, if anything happens at all.

So this, in my opinion, could be another way that Google are adding things on top of what they consider to be their core search algorithm. And certainly, we know for a fact, because of brilliant talks that Paul Haahr has done, that things like the features we see in search are added or generated after all the core ranking stuff is done. It's arguable then, from a user or SEO point of view, whether we consider that to be a ranking factor or a core ranking factor, because at the end of the day, it's about what people type in and what they see first in Google. So whether or not you would consider that a core ranking factor, if you've got that big carousel at the top that everyone's clicking on, that's what we're interested in. But anyway, I'll link to this post - I just thought it was a nice list, and every single one of the things listed on here has a link to a source, which has normally got a Googler making that statement fairly clearly. So it's a good thing to ground yourself with, and it may save you some time if you are going too far down a path just in the name of SEO.

And that's all I've got time for this week. I will be back on Monday the 17th of August. If you're enjoying the podcast, please leave a review, please subscribe, share it with a friend, share it with your mum, and I hope to hear from you, or hope you're listening and hear me next week.