26.10.2020

31 min listen

With Mark Williams-Cook

Season 1 Episode 84

Episode 84: Mobile-only indexing, AI for alt tags and QRG updates

In this episode, you will hear Mark Williams-Cook talking about the move from mobile-first to mobile-only indexing, new advice on ranking in Google Discover, Microsoft's AI for automatically describing images, and October's updates to the Quality Rater Guidelines.


01

What's in this episode?

In this episode, you will hear Mark Williams-Cook talking about:

Mobile-only indexing: The difference between mobile-first and mobile-only indexing, and Google's newly announced deadline

Google Discover tips: Some new advice on ranking in Google Discover

AI for alt tags: Microsoft's new AI model for automatically describing images, now available to developers

QRG updates: Google updated its Quality Rater Guidelines in October

02

Links for this episode

Cindy Krum & Patrick Hathaway tweet /HathawayP/status/1319578131792056320

Adam Riemer tweet /rollerblader/status/1316383206653014018

Lily Ray tweet /lilyraynyc/status/1316386331438837760

Portent study on meta descriptions /blog/seo/how-often-google-ignores-our-meta-descriptions.htm

03

Transcript

MC: Welcome to episode 84 of the Search with Candour podcast, recorded on Friday the 23rd of October 2020. My name is Mark Williams-Cook, and today I'm going to talk about the move from mobile-first to mobile-only indexing, some new information on Google Discover, auto-generating alt tags, and some updates to the Quality Rater Guidelines.

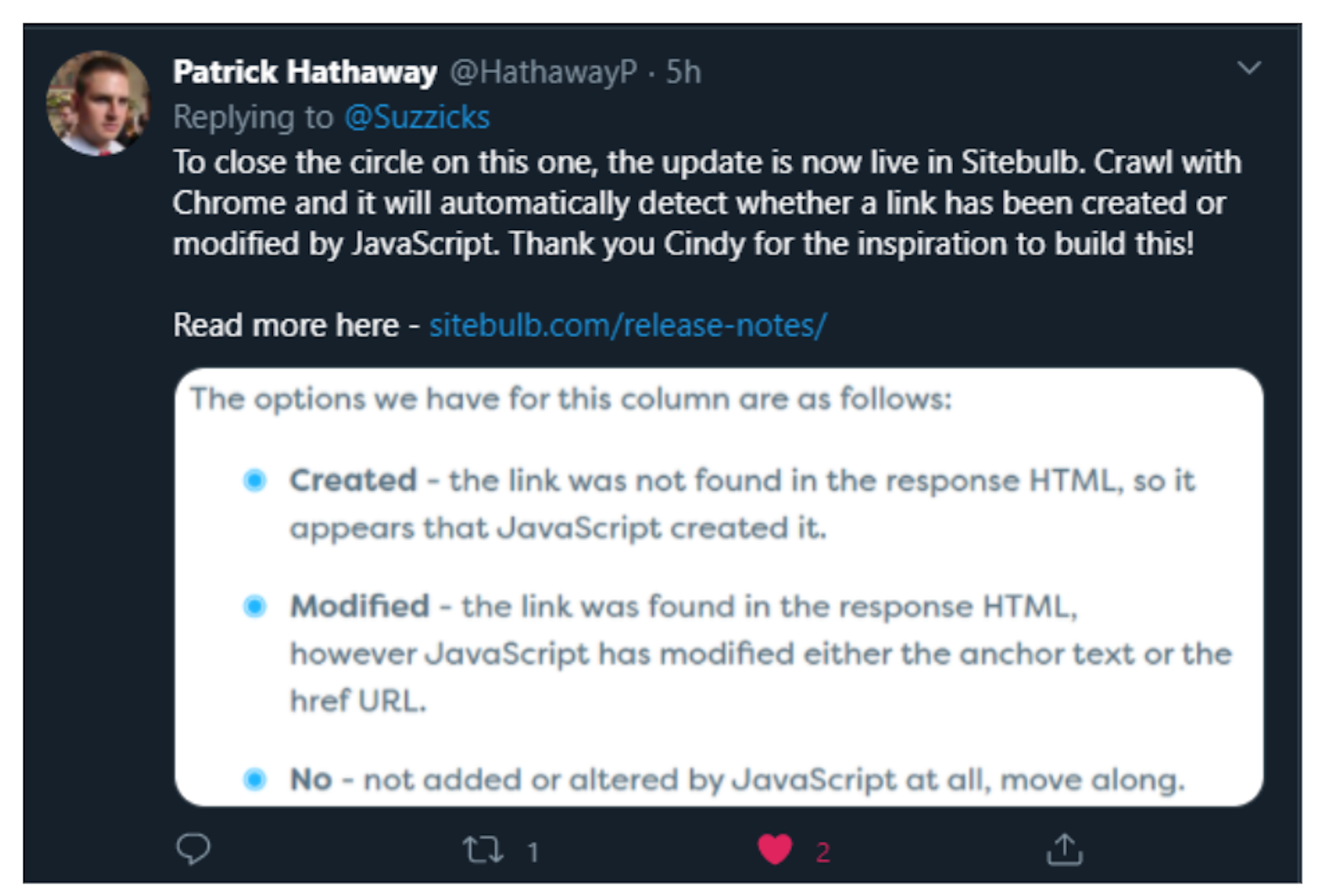

Before we get going, I want to tell you all that this podcast is very kindly sponsored by Sitebulb, which is desktop-based SEO auditing software for Windows and Mac. It's a great bit of software; I've used it for quite a long time now, and we use it at Candour. As regular listeners will know, I always go through one of my favourite Sitebulb features, one that I've been using and found particularly helpful, and they've made it really easy for me this week. I'll tell you why.

At the beginning of the month, Cindy Krum, a well-known SEO, tweeted this: “SEO and JavaScript experts, what's the fastest way to find non-HTML internal links on a site at scale without searching page code one at a time? I can't find this function in the crawlers I normally use. Normally I just look at page templates, but that won't work here. Any good tips?”

People came back with various responses on how to do this. Most of them were essentially saying you need to do a JavaScript crawl and a non-JavaScript crawl, export all the URLs and then look at the differences. Sitebulb got in on this conversation, and on the 23rd, so three weeks later, Patrick from Sitebulb tweeted, “to close the circle on this one,” (so obviously there'd been a bit of discussion in between) “the update is now live in Sitebulb: crawl with Chrome and it will automatically detect whether a link has been created or modified by JavaScript.” So now, when you complete a crawl with their Chrome crawler, the links report has a column with three possible values. Created: the link was not found in the response HTML, so it appears that JavaScript created it. Modified: the link was found in the response HTML, but JavaScript has modified either the anchor text or the href URL. No: the link wasn't added or altered by JavaScript at all.
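The created/modified/no comparison can be sketched in a few lines of Python. This is a minimal illustration, not Sitebulb's implementation: it assumes you already have a page's raw response HTML and its rendered (post-JavaScript) HTML as strings, keys links by href (so duplicate hrefs collapse into one), and guesses that an href was rewritten when a rendered link reuses the anchor text of a response link whose href disappeared. The function names are hypothetical.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects {href: anchor text} for every <a href> element in a document."""

    def __init__(self):
        super().__init__()
        self.links = {}
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href is not None:
            self._href, self._text = href, []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Normalise whitespace so formatting differences don't count as edits.
            self.links[self._href] = " ".join("".join(self._text).split())
            self._href = None


def extract_links(html):
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links


def classify_links(response_html, rendered_html):
    """Label each rendered link 'created', 'modified' or 'no' (untouched by JS)."""
    before = extract_links(response_html)
    after = extract_links(rendered_html)
    # Anchor text of response links whose href vanished after rendering;
    # a rendered link reusing that text is treated as an href rewrite (heuristic).
    orphaned_text = {text for href, text in before.items() if href not in after}
    labels = {}
    for href, text in after.items():
        if href in before:
            labels[href] = "no" if before[href] == text else "modified"
        elif text in orphaned_text:
            labels[href] = "modified"
        else:
            labels[href] = "created"
    return labels


if __name__ == "__main__":
    raw = '<a href="/a">Home</a><a href="/b">Shop</a><a href="/old">Sale</a>'
    rendered = ('<a href="/a">Home</a><a href="/b">Shop now</a>'
                '<a href="/new">Sale</a><a href="/c">Blog</a>')
    print(classify_links(raw, rendered))
```

Running it prints {'/a': 'no', '/b': 'modified', '/new': 'modified', '/c': 'created'}. Matching on anchor text is crude; a crawler that tracks DOM elements through rendering can tell an href rewrite from a genuinely new link far more reliably.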

This is really helpful because, as I'm sure you all know, there are various content management systems and single-page applications that create links with JavaScript, which can be an absolute nightmare when it comes to getting pages, especially new pages, indexed quickly and getting sites fully crawled. The modified value is really interesting as well. Certainly with the “legacy platforms” we've had to deal with, I've used Google Tag Manager to rewrite the href of a link when I want to change a template URL and, for whatever reason, can't get to the source to do it, or when I want to add attributes like nofollow to a link; you can do all of that through JavaScript. All of that is now available out of the box, so you don't need to mess around in Excel to get it done. It's all built into Sitebulb. They've got a special offer for Search with Candour listeners: if you download Sitebulb from sitebulb.com/swc (that's sitebulb.com/swc), you'll get an extended 60-day trial with no credit card required, so no excuse. Go and give it a go.

This one was a bit of a head-scratcher, certainly for some SEOs, so I thought it would be worth talking about on the podcast: mobile-first crawling becoming mobile-only crawling. As you know, I get all my information from Twitter, and the thread that started this conversation was by Adam Riemer. I'll link to his tweet; you can get all of the links I mention during the show at search.withcandour.co.uk. He says, “wow @johnmueller just verified that if you have anything on desktop, not on mobile…” (the examples he gave were things like content, comments, reviews, etc.) “...it will not be indexable starting March 2021. If it's not on your mobile page, it will not count for indexing.” This was from a Pubcon 2020 talk.

Now, this split people into two camps. Whatever you say about SEO, there's always going to be the ‘yeah, we already knew that’ camp, and there's going to be the ‘oh wow, this is unexpected, this is a particularly big change’ camp. So to rewind a little: everyone knows, or hopefully knows, that for quite a while now (I don't even know the exact date) Google has had this mobile-first indexing approach. It crawls your site as if it's on a smartphone, and that's the first version it looks at. There's a separate Googlebot smartphone user agent that you can identify in your crawl logs, and if you look back through the messages in your Google Search Console, you'll likely see that over the last 12 months or so you've had a message saying, ‘we're now crawling your site on a mobile-first basis.’
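If you want to check this in your own logs, a rough Python sketch might look like the one below. It assumes access logs in the common "combined" format, where the user agent is the last quoted field, and uses a simple heuristic: Googlebot requests whose user agent also contains "Mobile" are counted as the smartphone crawler, the rest as desktop. User agents can be spoofed, so for anything important you'd also verify the requesting IPs (Google documents a reverse DNS check for this).

```python
import re
from collections import Counter

# Assumption: combined log format, with the user agent as the final quoted field.
USER_AGENT = re.compile(r'"([^"]*)"\s*$')


def googlebot_type(user_agent):
    """Return 'smartphone', 'desktop', or None if the UA doesn't claim to be Googlebot."""
    if "Googlebot" not in user_agent:
        return None
    # Heuristic: the smartphone crawler's UA includes "Mobile"; the desktop one doesn't.
    return "smartphone" if "Mobile" in user_agent else "desktop"


def count_googlebot_hits(lines):
    """Count Googlebot requests per crawler type across an iterable of log lines."""
    counts = Counter()
    for line in lines:
        match = USER_AGENT.search(line)
        kind = googlebot_type(match.group(1)) if match else None
        if kind:
            counts[kind] += 1
    return counts
```

Pass it any iterable of lines, such as an open log file. The "Mobile" check is only a heuristic, so it's worth comparing it against the crawler user-agent strings Google publishes.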

The plan was always for Google to switch everything over to mobile-first indexing. Looking back at the dates, it was in March 2020 that Google announced everything would switch over by September 2020, but, like a few other things Google was working on, this got postponed due to COVID. We covered that in a previous episode when we were going through various COVID updates, and it's now been announced that the deadline has been pushed back to March 2021, which is about four or five months away. So that's the hard deadline at the moment. If you look at your crawl logs, you'll likely see that the vast majority of Googlebot visits come from this mobile user agent, which means Google is already mostly looking at your site through mobile. So even now, if some of your content or comments are missing from your mobile pages, Google is unlikely to find them unless you're still getting some visits from the older, desktop-based Googlebot; it seems Google is currently using a mishmash of the two. From now on, the healthy way to think about your site is as if indexing is mobile-only, and hopefully you're already there. This is always where we were going; it was the point of going mobile-first. So everything that is on desktop should be accessible on mobile.
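One crude way to sanity-check that parity is to compare the text in your desktop and mobile HTML and list anything that only exists on desktop. The sketch below assumes you've saved both versions (ideally rendered) as strings; the function names are hypothetical, and it only compares text chunks, ignoring images, links and structured data. Content that is collapsed or tabbed but still present in the mobile HTML won't be flagged.

```python
from html.parser import HTMLParser


class TextBlocks(HTMLParser):
    """Collects whitespace-normalised text chunks, skipping <script> and <style>."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = " ".join(data.split())
        if text and not self._skip_depth:
            self.blocks.append(text)


def text_blocks(html):
    parser = TextBlocks()
    parser.feed(html)
    parser.close()
    return parser.blocks


def missing_on_mobile(desktop_html, mobile_html):
    """Text chunks present in the desktop HTML but absent from the mobile HTML."""
    mobile = set(text_blocks(mobile_html))
    return [block for block in text_blocks(desktop_html) if block not in mobile]
```

Each text node becomes its own chunk, so markup differences (a word wrapped in a bold tag on one version only) can produce false positives; treat the output as a list of things to look at, not a verdict.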

That has actually given us a bit more freedom with design. We've mentioned before that Google has reversed various recommendations for things such as tabbed content. Originally, when Google was still crawling as desktop, the advice was: ideally, don't put content in tabs, because we'll assume it's probably not that important, so we might not index it. That was reversed, and Google said: we understand that the real estate on mobile screens is smaller, so tabbed content shouldn't cause any issues, it should be fine. But this is what we, and you, should be considering.

I've only seen a few sites (thankfully none of the ones I actually work on) where a mobile site was bolted on top as a limited-content version of the full site. If that's your situation, you need to get on with fixing it, because five months isn't a long time and you're probably already suffering from it now. Hopefully that's cleared things up: if you thought this was big cliff-edge news, we're really already over that cliff edge. This is the way things have already been going, but I understand that “mobile-only” versus “mobile-first” may have confused some people.

I've got some Google Discover news as well, which also came out of the Pubcon presentations. This time it's from a tweet by Lily Ray, who I've mentioned on the podcast before; she's an excellent SEO and talks a lot about E-A-T.

For those of you who maybe haven't encountered Google Discover: it's been around for a few years now, but I still commonly talk to people, especially outside the SEO industry, who haven't come across it or don't use it on their phone. So if it's new to you, here's Google's definition: “Discover is a feature within Google Search that helps users stay up to date on all their favourite topics, without needing a query. Users can access Discover in the Google App, on the google.com mobile homepage, and by swiping right from the home screen on Pixel phones. It's grown significantly since launching in 2017 and now helps more than 800 million people every month get inspired and explore new information by surfacing articles, videos, and other content on topics they care most about. Users have the ability to follow topics directly, or let Google know if they'd like to see more or less of a specific topic. In addition, Google Discover isn't limited to what's new; it surfaces the best of the web, regardless of publication date, from recipes and human interest stories to fashion videos and more.”

There are already a few guides out there on how to optimise for Google Discover. We covered it ages ago; I think we first covered it in episode five, when we talked about the new report filter Google had just introduced in Search Console, so you could isolate traffic from Discover. I'd actually been working with Discover a bit before that, when a client, totally by accident, received a significant spike of traffic from it. Like many things in SEO, that sends you down the rabbit hole of: okay, how can we repeat this, how can we do it more, and how can we make maximising our chances of appearing in Discover part of our actual process? As Google says, it's essentially a way of guessing what a user is interested in without having them do a search, almost anticipating what their next search might be.

Lily tweeted, “Google's Discover is an amazing source of SEO traffic. Large images are required for Discover” (that's been in Google's recommendations for quite a while) “E-A-T is also crucial for appearing in Discover.” E-A-T isn't a single metric; it's Google's overall view of a site's Expertise, Authority and Trust. She also screenshotted a slide from the presentation that says Discover traffic can be really useful for newsy sites in particular, and lists the new information on Google Discover in the help centre. One: Discover is less dependable. You can certainly optimise for Google Discover, but there's no guarantee you'll appear there; it's not as dependable as regular SEO, where you do these things, your rankings improve and you get more traffic. Two: there are separate Discover content policies, which Google has also published. Three and four: be timely and unique, and use dates and author information. And the fifth, which I think is one of the most important, is to use high-quality images.

There are a couple of things you can do there: use images at least 1,200 pixels wide, and add a robots meta tag with the max-image-preview directive set to large, which seems to help get your content surfaced in Discover as well. Very mysteriously, John Mueller put here, “think about Expertise, Authority, Trust”, and Lily obviously noted that E-A-T is crucial for appearing in Discover. As I said, E-A-T isn't a single metric; it really wraps up everything you should be doing in SEO anyway. Everything should revolve around Expertise, Authority and Trust, and that's going to help you in all aspects, but I thought this was interesting new information that Google has been putting forward on Discover. If you've got the images, there's certainly no reason not to add this meta tag and make sure your images and content are optimised for Discover as well.
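As a quick illustration, here's a rough Python check for the two things mentioned: whether a page's robots meta tag includes max-image-preview:large, and whether it has at least one image 1,200 pixels wide or more. It's a sketch under one big assumption: it trusts the width attribute in the HTML, whereas what matters is the actual image file, so a real audit would fetch and measure the images. The names are hypothetical.

```python
from html.parser import HTMLParser

# Google's Discover guidance asks for large images at least 1,200 px wide.
MIN_WIDTH = 1200


class DiscoverCheck(HTMLParser):
    """Looks for max-image-preview:large and any <img> declared >= MIN_WIDTH wide."""

    def __init__(self):
        super().__init__()
        self.large_preview = False
        self.image_widths = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            directives = [d.strip().lower() for d in content.split(",")]
            if "max-image-preview:large" in directives:
                self.large_preview = True
        elif tag == "img":
            # Assumption: trusts the HTML width attribute, not the real file size.
            width = attributes.get("width") or ""
            if width.isdigit():
                self.image_widths.append(int(width))


def discover_report(html):
    parser = DiscoverCheck()
    parser.feed(html)
    parser.close()
    return {
        "max_image_preview_large": parser.large_preview,
        "has_large_image": any(w >= MIN_WIDTH for w in parser.image_widths),
    }
```

For example, a page containing `<meta name="robots" content="index, max-image-preview:large">` and an image with `width="1600"` passes both checks.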

My favourite piece of computing advice I ever received was, “if you're doing a repetitive task on your computer, you are using the computer wrong.” I was told that almost 20 years ago now, and it's stuck with me as a maxim I live by: okay, I'm doing this on the computer, this is boring, hang on a minute, is there a way I can automate it? Because that's what computers are really good at, right? Repetitive tasks.

So this story really interested me. I picked it up, once again, from the TLDR Marketing newsletter, so I'll put a link to their article, and it's about Microsoft's new AI (artificial intelligence) for image captioning. It interested me not only from an accessibility point of view, which is what it's designed for, but from an SEO point of view as well. Alt text is obviously one of the things search engines use to help decide whether to rank an image for a specific query, although Google especially has got very good at looking at images and working out what's in them anyway. So we're certainly not in the situation we used to be in, where search engines needed us to do this, and that's actually demonstrated by this AI existing in the first place.

The model is used in Seeing AI, a free app for people with visual impairments that uses a smartphone camera to read text, identify people, and describe objects and surroundings. The news is that it's now also available to app developers through the Computer Vision API in Azure Cognitive Services, and it's going to start rolling out in Microsoft Word, Outlook and PowerPoint later in the year. The model can generate alt-text image descriptions for web pages and documents, and as I said, Microsoft points out that this is an important feature for people with limited vision; alt text is something that's really commonly overlooked in web builds. It does pain me when people say, okay, we need the alt text for the SEO, right? And it's like, no, you need the alt text to meet accessibility standards and to describe what's in the images to people who can't see them.
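For developers, a request to the captioning model might look something like the Python below. Treat it as a sketch: it assumes the "describe" operation of the Computer Vision REST API at version v3.2 (a POST to {endpoint}/vision/v3.2/describe with an Ocp-Apim-Subscription-Key header and a JSON body holding the image URL), returning a description.captions list with text and confidence. Check the API version and response shape against Microsoft's current Azure documentation; the endpoint, key and image URL are placeholders, and the function names are hypothetical.

```python
import json
import urllib.request

# Assumption: API version and path; check Azure's Computer Vision docs for your resource.
API_VERSION = "v3.2"


def build_describe_request(endpoint, key, image_url):
    """Build (but don't send) a POST to the Computer Vision 'describe' operation."""
    url = f"{endpoint.rstrip('/')}/vision/{API_VERSION}/describe?maxCandidates=1"
    return urllib.request.Request(
        url,
        data=json.dumps({"url": image_url}).encode("utf-8"),
        headers={"Ocp-Apim-Subscription-Key": key, "Content-Type": "application/json"},
        method="POST",
    )


def best_caption(payload):
    """Return (text, confidence) for the highest-confidence caption, or None."""
    captions = payload.get("description", {}).get("captions", [])
    if not captions:
        return None
    best = max(captions, key=lambda c: c.get("confidence", 0.0))
    return best["text"], best.get("confidence", 0.0)


def describe_image(endpoint, key, image_url):
    """Send the request and return the best caption (needs a real endpoint and key)."""
    with urllib.request.urlopen(build_describe_request(endpoint, key, image_url)) as resp:
        return best_caption(json.load(resp))
```

Keeping request-building and response-parsing separate makes it easy to test the parsing without a live key, and to batch images however suits your build.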

From an SEO point of view, there are normally a couple of tasks that fall into this repetitive category. The other one that always crops up is meta descriptions. We've talked before about how, as most SEOs will tell you, meta descriptions don't directly impact rankings; they're normally there to help you get a better click-through rate, which is part of SEO. I think it was last month that I saw Portent publish a study of around 30,000 keywords, where they found that over 70% of the time Google was rewriting the meta descriptions humans had written anyway. I can see why, and it just makes me feel sorry for people when you see SEO teams with huge spreadsheets and some poor person, normally an intern or similar, has got the horrible job of writing meta descriptions for 3,000 pages. They sit there, diligently look at each page and try to write a meta description for it, and then Google overwrites and ignores their work 70% of the time.

There's a good reason for that from Google's point of view: if someone's doing a search and Google understands the intent of that search, it can sometimes be more helpful to pick a snippet of text from your page that more closely matches what that person is searching for. That way they know the content is on the page, which actually helps you, and it's dynamic and smarter than having a human write what's almost an advert for why people should click on the page. So both of these tasks have been automated in some way. For meta descriptions, the most common approach for products is a template, such as product name, order online, free delivery, which uses the information in the database to generate a fairly bland but accurate, on-intent meta description, and that's fine.
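The template approach is easy to sketch. In the Python below, the product name and price fields are hypothetical stand-ins for whatever your database holds, and the 155-character cap is a common rule of thumb for snippet length, not a limit Google publishes.

```python
MAX_LENGTH = 155  # rule of thumb for snippet length, not an official Google limit


def product_meta_description(name, price=None, max_length=MAX_LENGTH):
    """Build a bland-but-accurate meta description from (hypothetical) product fields."""
    parts = [f"{name}."]
    if price is not None:
        parts.append(f"From £{price:.2f}.")
    parts.append("Order online with free delivery.")
    text = " ".join(parts)
    if len(text) <= max_length:
        return text
    # Trim at the last word boundary that leaves room for an ellipsis.
    trimmed = text[: max_length - 1].rsplit(" ", 1)[0]
    return trimmed.rstrip(",.;") + "…"


if __name__ == "__main__":
    for name, price in [("Blue Widget", 9.5), ("Heavy-Duty Car Battery Charger", None)]:
        print(product_meta_description(name, price))
```

The first example produces "Blue Widget. From £9.50. Order online with free delivery." It's deliberately plain: given how often Google rewrites descriptions, accuracy and coverage matter more than polish here.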

Doing that with alt text has always been more challenging, because labelling what you see in an image is a much more human perception task. Now that these models are publicly accessible, I think it really makes sense, especially for large sites, to make using these APIs part of your marketing or build workflow to help with these repetitive tasks.

The benefit of things like meta descriptions is limited, so you have to ask yourself how much it's worth paying people to do them manually, especially if Google is replacing them much of the time, when you can get most of the benefit by automating them. Now, when I read through Microsoft's post about the model, it's quite upfront that it outperformed humans in some tests, but obviously not in any general sense, and the AI is very one-dimensional in how it labels an image. So if it gets it wrong, it's not going to try another angle and think about it differently; it's just, I think the image is this, sorry. So I think it would still need some supervision. But if you've got thousands of images on your site, this is definitely something I would look at to burn through, you know, 70 or 80 percent of that task with good accuracy. I think a lot of bigger sites need to be looking at this, if they're not already: rather than outsourcing repetitive jobs or giving them to the lowest-paid person, how can we do this smarter and use computers for what they're meant to be used for?
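That supervision could be as simple as a confidence threshold: captions the model is confident about go straight in as alt text, and everything else is queued for a person. A minimal sketch, where the 0.8 cut-off and the (image_id, caption, confidence) tuples are assumptions you'd tune against a sample of captions a human has checked:

```python
# Hypothetical cut-off: tune it against a sample of captions a person has checked.
REVIEW_THRESHOLD = 0.8


def triage_alt_text(candidates, threshold=REVIEW_THRESHOLD):
    """Split (image_id, caption, confidence) tuples into accepted alt text and a review queue."""
    accepted, needs_review = {}, []
    for image_id, caption, confidence in candidates:
        if caption and confidence >= threshold:
            # Capitalise the first letter, since generated captions often come back lower case.
            accepted[image_id] = caption[0].upper() + caption[1:]
        else:
            needs_review.append(image_id)
    return accepted, needs_review
```

The higher the threshold, the smaller the share of the task you automate, but the fewer wrong descriptions slip through to people relying on them.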

Lastly, there have been more updates to the Google Quality Rater Guidelines. As hopefully we all know, the Quality Rater Guidelines are the document that guides the remote, dispersed teams who help Google assess how well its algorithms are doing. Jennifer Slegg has written a really nice, very detailed write-up of what's changed. The cool thing about these guidelines now is that they've finally included a change log, so they're telling us what they've changed and we don't have to diff the old and new versions or have someone manually scour through to see what's different. I'm really pleased to see that they've put this text in the guidelines: “your ratings will not directly affect how a particular web page, website, or result appears in Google search, nor will they cause specific web pages, websites, or results to move up or down on the search results page. Instead, your ratings will be used to measure how well search engine algorithms are performing for a broad range of searches.” I don't know how many times I've had to explain this to people; it's a lot, and I'm sure a lot of other SEOs feel the same, because there's a common misunderstanding that the Quality Rater team is there voting websites up and down. That's not the case: they're used as a human assessment, a human check, on how the algorithms are performing against the end goal. The end goal is basically what the quality rater guidelines describe: this is the standard, this is what we're trying to achieve. The raters have no input into how Google achieves that. Obviously, if the guidelines are followed and the feedback is that pages the algorithms rank highly are being rated manually as poor, Google knows it needs to go back to the drawing board and look at what's happening there.
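For documents that don't come with a change log, the diff mentioned above is easy to automate. A small sketch using Python's standard difflib, assuming you've already extracted the old and new versions to plain text; the function name and labels are illustrative:

```python
import difflib


def changed_lines(old_text, new_text, old_label="old", new_label="new"):
    """Return only the removed ('-') and added ('+') lines between two text versions."""
    diff = difflib.unified_diff(
        old_text.splitlines(),
        new_text.splitlines(),
        fromfile=old_label,
        tofile=new_label,
        lineterm="",
    )
    # Drop the '---'/'+++' file headers and '@@' hunk markers; keep content changes.
    return [
        line for line in diff
        if line[:1] in ("+", "-") and not line.startswith(("+++", "---"))
    ]
```

A removed line that itself starts with "--" would be filtered out along with the headers; for a long PDF-derived document, a side-by-side tool such as difflib.HtmlDiff may be easier to read.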

Jennifer also adds her own thoughts, which I thought were quite interesting: “it's important to remember that just because quality raters can't directly impact how well your site is doing in the search results, if quality raters are consistently ranking your site poorly, or sites similar in quality to yours, then you need to be aware that Google is actively working on algorithms to make it so that your type of site won't be ranking as well in the search results.”

It sounds obvious, but it's a really good point, especially the part about sites similar to yours. This is what the algorithms are doing: trying to find the commonalities between good and bad sites. It's why Google says, when there's a large core update, that there's probably nothing in particular to fix on your site. If you lose rankings, it's normally not some technical tweak you need to make; it's that Google has decided your site just isn't up to scratch, because this is the standard it's aiming for and sites like yours are demonstrably worse, based on the feedback it's gathered and verified through this kind of testing. So you can't get away with having a bad site. Like lots of things in SEO, this is about taking a long-term view of what's going to make your site better and what's going to work well for your users.

If you were going to read the updates to the Quality Rater Guidelines yourself, hopefully this podcast will save you a lot of time, because in my opinion there was only one thing in there really worth talking about: Google is focusing more on the Needs Met part of the guidelines. Needs Met refers to how well your page satisfies the user's query, and a rating of Highly Meets from a quality rater is basically assigned to the best results, the perfect fit for the query. So someone has looked at your page and decided it couldn't be better: it's authoritative, it's high quality, it's entertaining, it's recent. That's the gold standard, and Google is really doubling down on it. I think most professional SEOs are already in that line of thinking; they've moved on from keyword-centric thinking to thinking about needs and intent. That's a big difference I see when I speak to clients who've done some background reading about SEO, or who already have an idea of what it is: the conversation immediately turns to keywords, what keywords we want to rank for, and what keywords we need in our copy to rank for them. When really the conversation now needs to be about the intent behind groups of searches; not “does our web page have these keywords?” but “does our web page answer the intent behind this query?” That leads on to more interesting topics. For instance, when people say content, a lot of them immediately think of text or copy, but content can be video, it can be podcasts like this, it can be images, and it's worth thinking about which format of content best answers the query.

A common example I give is something like how to recharge a car battery. A lot of the time (hopefully still now, if someone checks), if you search Google for instructions on how to do that, a video result will surface highly. That's because that type of content is normally best delivered by video: it's very helpful to have it there visually, and you might prop your phone up on your engine while you follow the guide. So it's not just about keywords; it's about intent and how you can meet that intent. I think that's always a good conversation to have, internally if you're in charge of SEO, or with clients if you're an agency: not just what content, articles or blog posts do we need, but what's the best format to deliver them?

The other change I found interesting, which I imagine is going to affect some people more than others, is one Jennifer wrote about too. The previous guidelines said that if the rater is unable to evaluate page quality because the page is in a foreign language they don't understand, they should just assign a Medium rating, an on-the-fence rating. That's gone now; raters are being told not to do that. Jennifer said she was quite surprised that a page would automatically get a Medium rating just because it couldn't be rated. It got me thinking back to some spammers I was talking to who were having lots of success with foreign-language spam. They had set up lots of copied and spun content in non-English languages, and they found they were having much, much greater success with those sites than in English-language search results. I'm sure there's a whole host of reasons for that, including that English search results tend to be very competitive, in most regions at least, and that Google features often arrive in English first; BERT, for instance, when we first covered it, rolled out in English before being extended to other languages. The same goes for Google Translate: we certainly saw better results to and from English than between other languages. So I do wonder whether this will now start to affect how foreign-language spam sites are handled and how the algorithms treat them, but that was just one other interesting point I saw.

The headline, though, is that you need to double down on thinking about Needs Met, thinking about intent, doing that research and, as we always try to get people to do in SEO, playing the long game.

That's everything we've got time for in this episode. I hope you enjoyed it. I'm going to be back in one week's time which is breaking us into November. So it's going to be Monday the 2nd of November, so we're approaching the end of 2020, finally. I hope you all have a great week. If you're enjoying the podcast, please do subscribe, tell a friend, like it, link to it, or anything that might help.