13.01.2020

39 min listen

With Mark Williams-Cook

Season 1 Episode 43

Episode 43: IndexWatch 2019, Medium’s organic fall and listener Q&A

In this episode, you'll hear Mark Williams-Cook talk about the 2019 SISTRIX IndexWatch, Medium's organic fall, and a listener Q&A on how to help Google trust canonical signals and whether to build multiple links from a single site.



What's in this episode?

In this episode you'll hear Mark Williams-Cook talk about the 2019 SISTRIX IndexWatch and who the SEO winners of 2019 were; Medium's organic fall, with an overview of the investigation into how Medium.com lost almost half of its search visibility; and a listener Q&A with questions on how to help Google trust canonical signals and on building multiple links from a single site.


Transcript

MC: Welcome to Episode 43 of the Search with Candour podcast, recorded on Sunday the 12th of January 2020. My name is Mark Williams-Cook and in this episode we will be covering the 2019 SISTRIX IndexWatch, talking about which sites saw the best improvements in 2019. We'll be covering why big content publisher Medium has lost almost half of its search traffic, and we've got some listener Q&A, talking about canonical tags and link building.

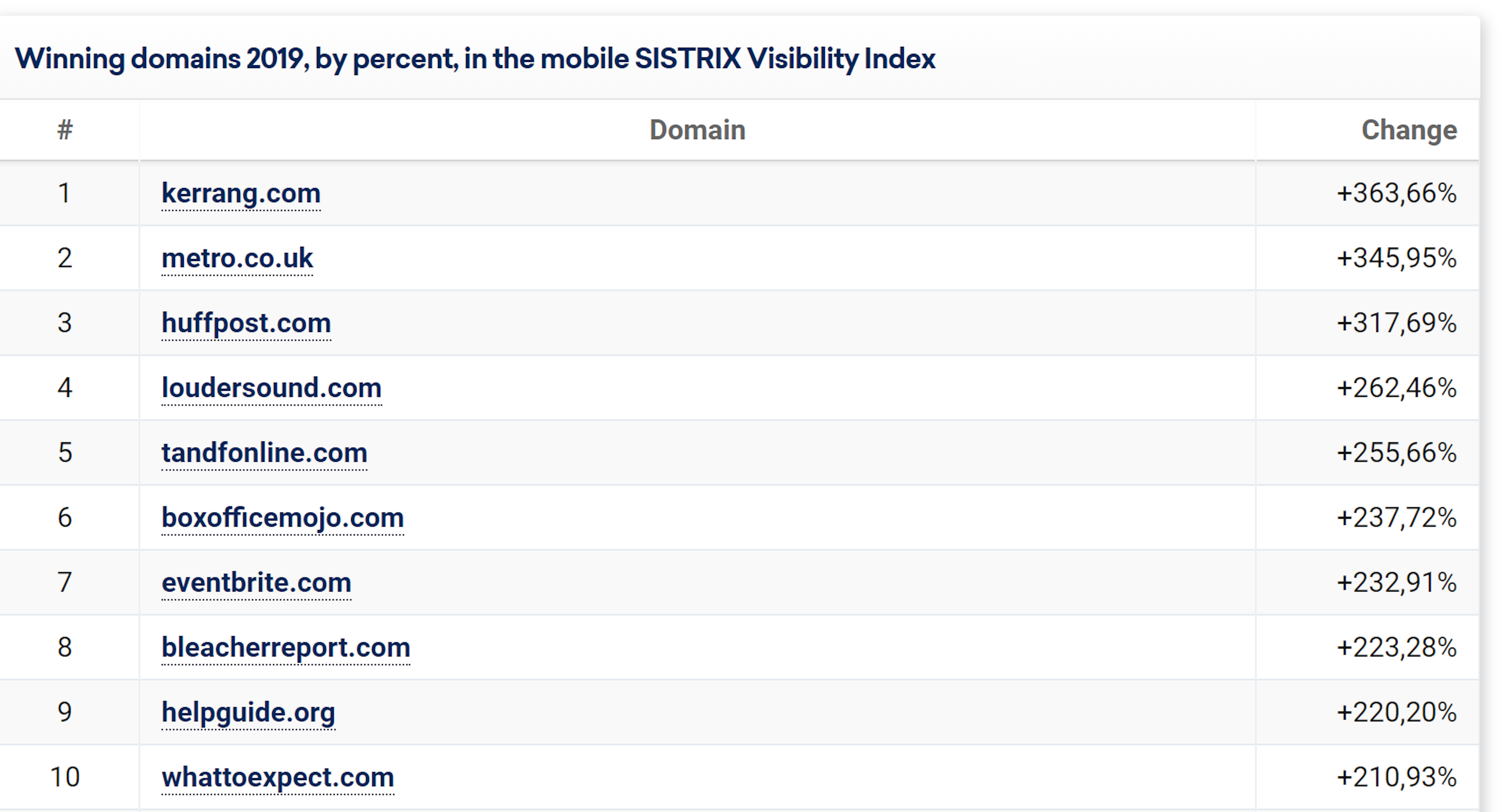

I thought it would be nice to start this episode by talking about the SISTRIX 2019 IndexWatch, as I feel 2020 is still only just starting for me, so I don't mind looking back at 2019 at the moment. This was published a few days ago by Steve Paine of SISTRIX, and I'll put a link in the show notes at search.withcandour.co.uk. Their yearly report, which they've done for, I think, the last four or five years at least, basically covers the winners of the last year, in terms of both actual growth and percentage growth in organic search visibility as SISTRIX score it. Their top 10 winners for 2019 were kerrang.com, metro.co.uk, huffpost.com, loudersound.com, tandfonline.com at number five, boxofficemojo.com at six, eventbrite.com at seven, bleacherreport.com at eight, helpguide.org at nine and whattoexpect.com at ten. The article also summarises the quite jarring fact that in 2018 Google made more than 3,500 algorithm changes.

Now obviously a lot of those changes are very, very minor; they're tweaks that you probably don't even notice happening, but it still really brings home that they're making, you know, around ten changes a day to this algorithm, and all of those changes stack up and can impact each other as well. The article gives a very quick run-through of some of the major events of 2019, because 2019 was quite busy from an SEO point of view. We had core updates, which are updates that affect the core search algorithm, meaning they can have an impact on lots and lots of sites; we had those in March, June and September. We had indexing issues, which we reported on quite a lot last year and which gave us lots of content for the podcast, with Google struggling to index pages. We had Google changing how they treat nofollow tags, which will change how the link graph of the web impacts search results. We had the BERT rollout for Google in English and other languages, so it was a really, really big year for search and for SEOs. We also saw some big sites take falls and then actually come back after updates as well.

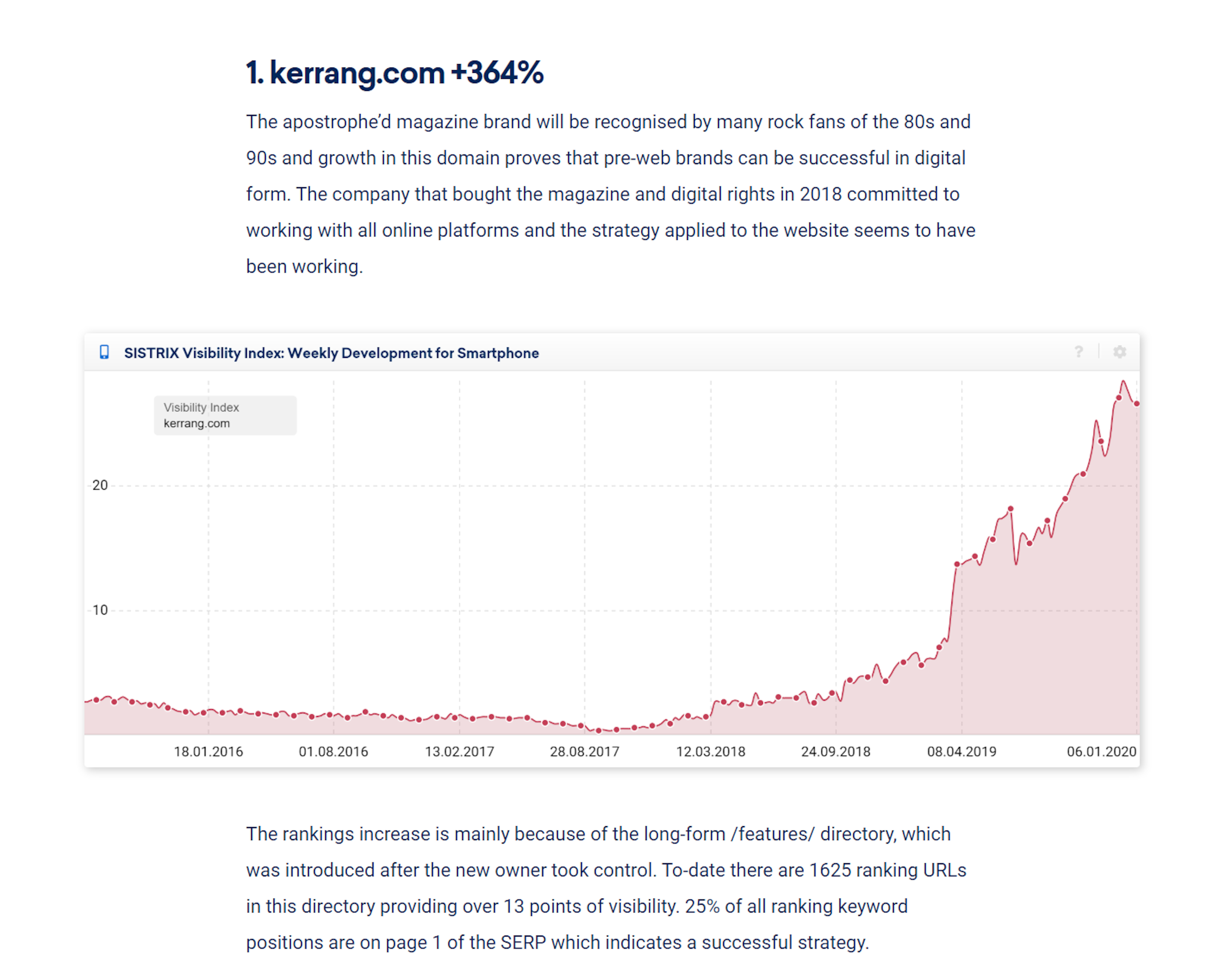

There are a couple of the short overviews or breakdowns that SISTRIX have written that I think are worth going through. The top site, Kerrang, was number one for percentage visibility increase. SISTRIX reported Kerrang having a 364% increase and wrote: ‘The rankings increase is mainly because of the long form features directory which was introduced after a new owner took control. To date there are 1,625 ranking URLs in this directory, providing over thirteen points of visibility. 25% of all ranking keyword positions are on page 1 of the SERP, which indicates a successful SEO strategy.’

I think it's quite interesting that Kerrang have taken this long-form view on content, where they're making these long, detailed posts, and not only are those URLs ranking, but a quarter of the ranking positions are on the first page. That matters because the metric visibility tools give you, saying this is how many keywords a site or a page ranks for, can be quite misleading: they normally count anything in the top 100 results. So you could say, oh, this page ranks for a thousand different keywords, but you could still get almost no traffic, because only two or three of those might be on the front page and the other 997 or so are on page 7 or 8, you know, in position 83 or 84, which means you're not going to get any traffic from them. So this is significant, and that's where most of Kerrang's increase comes from.
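To make the point about keyword counts concrete, here is a minimal Python sketch, assuming you have exported the ranking positions (1 to 100) of a page or directory from a visibility tool. The function names and sample positions are hypothetical, and ten organic results per SERP page is an assumption about layout.

```python
RESULTS_PER_PAGE = 10  # assumption: ten organic results per SERP page

def serp_page(position: int) -> int:
    """Return the 1-based SERP page that a ranking position falls on."""
    return (position - 1) // RESULTS_PER_PAGE + 1

def page_one_share(positions: list[int]) -> float:
    """Fraction of ranking keywords whose position is on page one."""
    if not positions:
        return 0.0
    on_page_one = sum(1 for p in positions if serp_page(p) == 1)
    return on_page_one / len(positions)

# Hypothetical page that "ranks for" 1,000 keywords in the top 100:
# three positions on page one, the other 997 spread over positions 11-100.
positions = [3, 7, 9] + [11 + (i % 90) for i in range(997)]
print(f"{len(positions)} ranking keywords, "
      f"{page_one_share(positions):.1%} of them on page one")
```

On those made-up numbers, a headline figure of 1,000 ranking keywords hides the fact that only 0.3% of them sit on page one, which is why a page-one share like the one SISTRIX quotes for Kerrang says more than a raw keyword count.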

I actually want to skip over positions two and three, which are news sites that grew in 2019 because they essentially made recoveries, so I'm not as interested in those. I think this was more a case of Google making algorithm adjustments that possibly didn't have quite the effect they wanted. You have to remember that when sites, or big sites specifically, take a big dip in traffic after one of these updates, and the site hasn't had a penalty (which is normally the case; we've discussed the difference between a site having a penalty and being impacted by an algorithm update before), that's not because Google has targeted that site. It's just that the algorithm has adversely affected that particular site, which may not have been Google's intention. This is why we sometimes see major sites surprisingly take a big nosedive after an update but then recover: they are quality sites or popular sites, people want them in the SERP, and it just so happens that the way the algorithm turned out didn't quite work for them. So Google has obviously gone back and adjusted some levers, knobs and dials to bring those sites back.

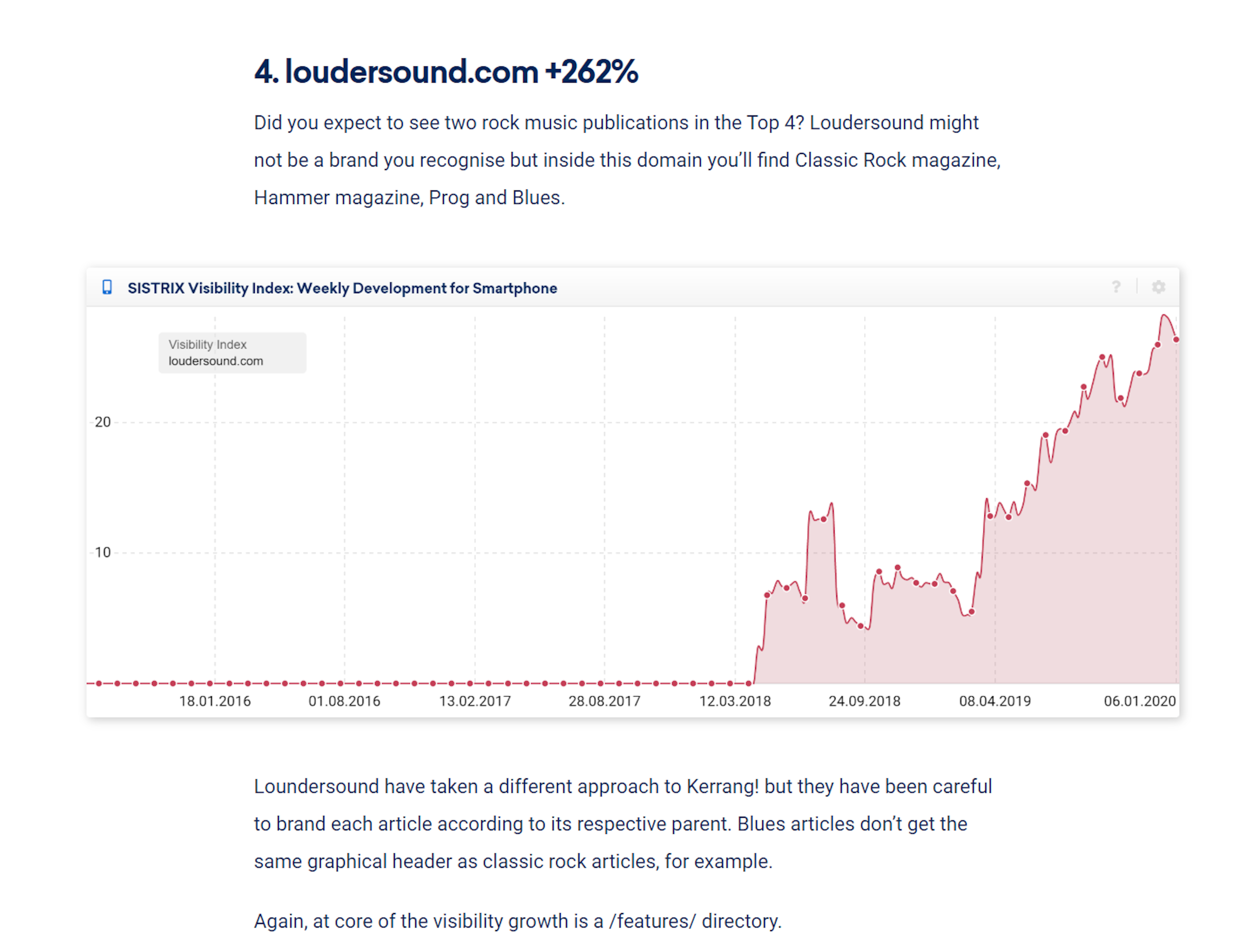

The other one that did interest me was Louder Sound, which had a 262% increase and was fourth on the SISTRIX list. In regard to Louder Sound, SISTRIX said you can also see an AMP directory ranking well in the graph in their post; it holds news items, which gives a clue as to how page speed and advertising are being managed across different types of content. Interestingly, it's the same approach that's being used on Kerrang.com, so whatever these two sites are doing, it's working well. So on my list of things to do at the beginning of this year is to take a closer look at some of these sites, like Kerrang and Louder Sound, that have been doing particularly well in search and just see what they're doing. Whatever it is, they're doing the same as each other, and what we can maybe conclude is that it's working particularly well.

SISTRIX also says: ‘Finally, it's worth taking another look at Purina, the pet food brand of Nestlé, that has built a significant visibility increase by offering useful content for the pet-owning community.’ That almost reads like a generic SEO strategy: we're going to increase our visibility by offering useful content for the pet-owning community, and wow, it's actually worked! The article goes on: ‘While some might label pet health information as a potential Your Money or Your Life topic, and therefore a risky area, this site is a shining example of success where consumer brands and SEO are concerned. An article on dog poop is currently the most visible in the /dog directory, and within the content there isn't a single mention of a Purina product.’ So that's worth reading, and that's worth thinking about.

Yes, ‘we just need to build some useful content’ has probably appeared in every single SEO strategy document over the last decade. The thing I think is interesting to take away from this is that this hasn't been some meteoric rise; they've had a steady march forward over the last four or five years while building out this content base. So they've had consistency. These aren't flash-in-the-pan PR stunts; it's well-written, well-structured content, and they are adding to it week on week, so they're building up a really useful base. It's cross-linked really well within the articles and, as SISTRIX have pointed out, they're not just mentioning their own products. It's not that kind of content where you read it and it's like, ‘Oh, have you got this problem? Well, it just so happens that our product is the perfect solution.’ They're actually writing the most valuable content for users, which is why they're getting links and why they're ranking.

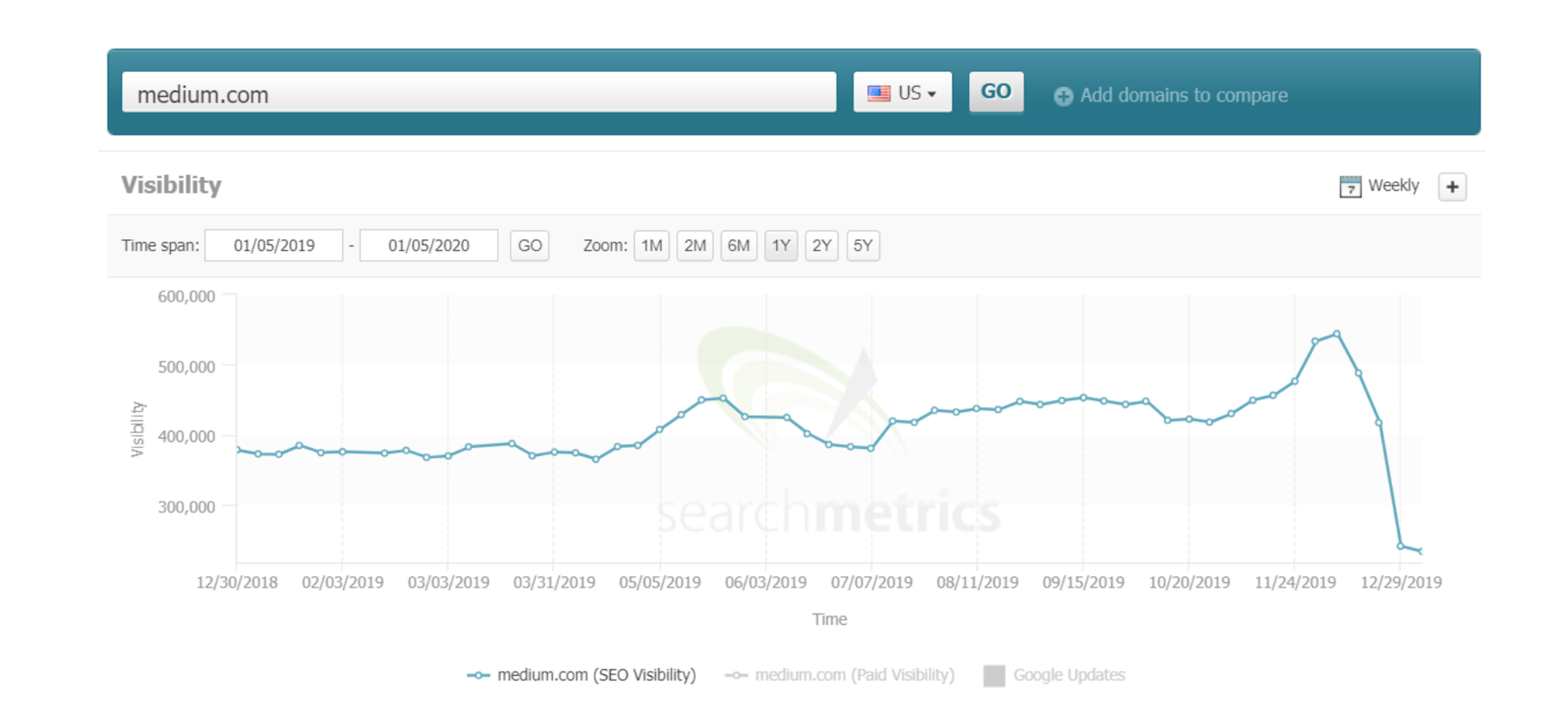

I imagine quite a few of you have heard of the platform Medium.com. Medium is a trusted user-generated content publisher, and it's a place where lots of people publish content, maybe if they don't have their own blog, or if they work for a company and just want to share a personal piece outside of that. It's a platform for lots and lots of people and it's seen regularly, and I saw a co-authored piece on Medium by Izzi Smith and Tomek Rudzki about why medium.com has lost almost half of its search visibility. Very recently, across both the SISTRIX and Searchmetrics tracking platforms, we can see that Medium's main domain saw a 40% drop in organic visibility on desktop and 50% on mobile, which is a huge amount of traffic. What Izzi and Tomek did was a bit of analysis on Medium, spelling out the SEO issues they think might have caused this drastic loss. Medium makes its money by paywalling some of its content and then paying its contributors, so its traffic is completely vital for the survival and revenue generation of the site.

I really liked this write-up. The first thing Izzi points out as a potential issue with Medium is a massive one: their home page is actually cloaked. If you as a user go to medium.com, you will very likely see what is primarily a sales page saying ‘get started here’, and it's all the top of a big funnel to get you to sign up. It'll show you some topic areas, but pretty much every call to action there leads you down a funnel to sign up. If you change your user agent to Googlebot and then visit the medium.com website, telling the website that you're Google rather than a regular user, you actually see very different content. Rather than seeing a form saying ‘hey, sign up’, you see links directly to all of the featured, very useful articles across Medium, and this is what cloaking is: showing one thing to users and something significantly different to search engines. It's listed in Google's webmaster guidelines as a black hat, no-no technique, because you're essentially saying to Google, ‘hey look, we show users all of these useful articles when they visit our website’, so Google thinks ‘brilliant, we'll send them there’, but what actually happens when users get there is that you're trying to get them to sign up for something, and Google isn't being shown that.
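One rough way to spot the kind of user-agent cloaking described here is to fetch the same URL once with a browser user agent and once with Googlebot's, then compare what comes back. The sketch below uses only Python's standard library and compares the sets of links in the two responses. The browser user-agent string is illustrative, `fetch` returns raw HTML without executing any JavaScript, and because some sites verify genuine Googlebot requests with a reverse DNS lookup, a spoofed user agent won't always see exactly what Google sees.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen

# The classic Googlebot user-agent string; the browser one is illustrative.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

class LinkCollector(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.add(href)

def extract_links(html: str) -> set:
    parser = LinkCollector()
    parser.feed(html)
    return parser.links

def fetch(url: str, user_agent: str) -> str:
    """Fetch raw HTML (no JavaScript execution) with a given User-Agent header."""
    req = Request(url, headers={"User-Agent": user_agent})
    with urlopen(req, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")

def compare_views(user_html: str, bot_html: str) -> dict:
    """Report links that only one of the two audiences is shown."""
    user_links, bot_links = extract_links(user_html), extract_links(bot_html)
    return {
        "only_for_users": user_links - bot_links,
        "only_for_bot": bot_links - user_links,
    }

# Usage against a live site (needs network access):
# diff = compare_views(fetch("https://example.com/", BROWSER_UA),
#                      fetch("https://example.com/", GOOGLEBOT_UA))
```

A large `only_for_bot` set on a home page is a prompt to look more closely, not proof of cloaking; personalisation and A/B tests can also produce differences.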

Izzi's research suggests there may be some A/B testing going on on the site; they've used the Wayback Machine and she's found quite a coincidence in when this happened. The huge visibility drop started on the 8th of December, and according to the Wayback Machine's archive, this home page variation was implemented in early November. So of the several things they've listed in their write-up (and again, I'll link to it in the show notes at search.withcandour.co.uk), I think this is by far the most likely cause of the drop. It's very blatantly something you shouldn't be doing, it's not particularly difficult for Google to detect if they want to, and, as Izzi says, the timing is very significant: within a few weeks of this change going live, or what looks like it going live, we've seen this drop. Between them, though, they have also pointed out a few other quite major SEO issues with the site. I don't think I've ever published anything myself on Medium; I've read quite a lot of content on there but never particularly looked at the site from an SEO point of view. They've got several things in their write-up, and I just wanted to bring up another one or two that Izzi and Tomek mentioned.

Izzi's second point was a major issue with low-relevance, indexable URLs. Basically, for each comment on an article on Medium, an individual URL is generated, meaning you can send someone a link to an individual comment so they can see it. That's fine, and it makes sense that you might want to do that, to say ‘hey, look at this comment here, it's ridiculous’, but what you don't particularly want to do is waste Google's time indexing individual URLs for every comment. Izzi found around 7.5 million URLs in Google's index that shouldn't really be indexed but are. So this is Google trying to click on all the links on the site to find good content, and just getting bogged down further and further in individual comment links, when some of these articles have hundreds of comments. This relates to a point Tomek raised: he took a random sample of just over a thousand article URLs, checked whether they were in Google's index at all, and found that only 16% were. That means 84% of these random articles have zero chance of appearing in Google, because Google hasn't even got to them and indexed them yet. That's very likely connected to this issue: Google has basically used up its crawl budget and got fed up of crawling the site before it actually reached the important content. So this highlights, especially on large sites, why it's important to have a strategy around how the site is crawled and how the site is indexed.
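Tomek's approach also shows why a random sample is enough to size an indexing problem. As a rough sketch, assuming a simple random sample of about 1,000 article URLs of which 16% were indexed (the exact counts aren't given, so 160 out of 1,000 below is an approximation), a Wilson score interval puts bounds on the indexed share for the whole population of articles. This is standard statistics added for illustration, not a method the write-up states it used.

```python
import math

def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 is roughly 95%)."""
    p_hat = successes / n
    denom = 1 + z**2 / n
    centre = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width

indexed, sampled = 160, 1000  # approximation of "16% of just over a thousand"
low, high = wilson_interval(indexed, sampled)
print(f"indexed share {indexed / sampled:.0%}, 95% interval {low:.1%} to {high:.1%}")
```

With those assumed counts the interval runs from roughly 14% to 18%, so even at the optimistic end fewer than one in five articles would be indexed.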

The other related point Tomek raises is that parts of the site are powered by JavaScript. That's something we've talked about before in quite a bit of detail: the first pass on a site by Google, and most of the high-speed crawling and indexing, is done without JavaScript, and it's only days or weeks later that JavaScript is processed, if at all. He found that the related-articles sections are all powered by JavaScript, which means there's a big chance that on these pages, which may only get crawled once or twice, or not at all, the JavaScript isn't getting executed. That means you're losing relevant internal links that go straight to other high-quality pages you want indexed.

So there's a whole list of things they put together, and there are some really odd things they're doing with sitemap generation as well, which don't seem to make a lot of sense. They're generating sitemaps that point Google towards thousands and thousands of pages that aren't particularly important, when on such a large user-generated content site they should be using sitemaps to point search engines towards the best content, the stuff they want indexed, not just every single author page and things like that.
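As a minimal sketch of the alternative, assuming you already have crawl data recording each URL's declared canonical and whether it's indexable, a sitemap can be built from only the self-canonical, indexable pages with Python's standard `xml.etree.ElementTree`. The `Page` record and the example.com URLs are hypothetical; they are not Medium's actual URL patterns.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

@dataclass
class Page:
    url: str
    canonical: str   # URL named in the page's rel="canonical"
    indexable: bool  # e.g. 200 status and no noindex (hypothetical crawl field)

def build_sitemap(pages: list[Page]) -> str:
    """Return sitemap XML listing only self-canonical, indexable URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        if page.indexable and page.canonical == page.url:
            url_el = ET.SubElement(urlset, "url")
            ET.SubElement(url_el, "loc").text = page.url
    return ET.tostring(urlset, encoding="unicode")

pages = [
    Page("https://example.com/p/great-article",
         "https://example.com/p/great-article", True),
    # Hypothetical per-comment URL kept out of the index.
    Page("https://example.com/p/great-article/comment-123",
         "https://example.com/p/great-article/comment-123", False),
    # Tracking-parameter variant that canonicalises to the article.
    Page("https://example.com/p/great-article?source=feed",
         "https://example.com/p/great-article", True),
]
print(build_sitemap(pages))
```

Only the article itself makes it into the output; the comment URL and the parameter variant are filtered out before Google is ever pointed at them.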

So have a read through that; it's an interesting little read. I always find it interesting when major players, major platforms and major sites have so many SEO issues and it does come back to bite them. It does make me wonder where Medium would be now if, instead of having a percentage of indexed content in the low double figures, 90, 95 or 100 percent of their articles were indexed.

We've got a little bit of time to do some Q&A, so again, if you have an SEO or PPC question, do send it to me. You can email me at [email protected], you can tweet us at @candouragency, or I'm @thetafferboy on Twitter; find me on LinkedIn, or any way you can find me on social. If you drop me an SEO or PPC question, I'm happy to discuss it or try to answer it, as long as you don't mind us talking about it publicly on the podcast. If you want to be anonymous as well, that's absolutely fine.

I've had four questions this time; I think we've got time to cover two in this episode and I'll try to get the others done in the next one. The first question is from Karl Kleinschmidt, who said: ‘Hey, I have a question for the podcast. What are the specific steps for resolving Google no longer respecting canonical links?’ I did follow this up with Karl, but he couldn't give me specific URLs, so I thought I would go through some general guidance that might help shed some light on the issue.

Canonical links, which we've spoken about a few times on the podcast, are used when we have very similar content on different URLs and we want to signal to search engines which page we would like to appear in search and which pages are just variations of it that can be ignored. For example, if you were selling trainers online and users could filter or sort them by price, that would probably generate a new URL, but you wouldn't want both of those URLs appearing in search because they're basically identical; the only difference is that the products are ordered slightly differently. So you would specify which page you want as the canonical version. The canonical tag would then consolidate the link signals of those pages, so rather than having two or more pages competing with each other (sometimes you get hundreds of pages competing for the same ranking), you get one page that ranks a lot better. So what should you do if Google is no longer respecting canonical links? Firstly, remember that canonicals are hints, not directives, which means Google gets the last say. We've heard many times from Google that they use multiple signals to determine which is the canonical version of a page. So it's not as if we say with our canonical tag, ‘hey look, page A is the canonical version of this set of pages’, and Google reads that and goes, ‘okay, that's fine, thank you for letting me know, we'll obey that’. It's one hint among the many hints and signals they pick up before making a decision.
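For reference, the canonical hint lives in the page's `<head>` as a `<link rel="canonical" href="...">` element. Here is a minimal Python sketch that reads it back out of a page's HTML, assuming the HTML has already been fetched; the trainers URL is a hypothetical example.

```python
from html.parser import HTMLParser
from typing import Optional

class CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> in a document."""

    def __init__(self):
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and self.canonical is None:
            self.canonical = attr.get("href")

def find_canonical(html: str) -> Optional[str]:
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical

# A price-sorted variant of a category page pointing at the unsorted version.
variant = """<html><head>
<link rel="canonical" href="https://example.com/trainers">
</head><body>...</body></html>"""
print(find_canonical(variant))  # https://example.com/trainers
```

Finding the tag only tells you what you've asked for; as above, Google weighs it alongside its other signals.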

So here are some things you can check. Top of the list, if you've got two pages and you've tried to use a canonical tag but it's not working and Google's still ranking and indexing both, I would first look at how similar the content actually is. In the fairly limited testing we've done (we did a specific test for this, and I'll link to it again in the show notes), we created two pages, each about a made-up, fictional soft drink, and we wrote similar content for each, mentioning the name of each of the brands we'd made up. We just wrote some generic content about how refreshing it is and how it can be used for this and that, so the pages basically meant the same thing; the main difference was the brand name. Both pages were indexed and both ranked; they were the only pages in Google that ranked for each made-up brand name, because the names were unique. Then we set one page's canonical to the other. The experiment was: could we get one of the pages to rank for both keywords, because we'd told Google the other was just a variation page? The result was that Google completely ignored the canonical tag, and because there were no external links or internal linking differences, it was a safe assumption that Google was ignoring the tag because it found the content too different.
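Before canonicalising one page to another, it can help to put a number on how similar the two really are. A common rough measure is Jaccard similarity over word shingles (overlapping runs of words). This is a generic near-duplicate technique, not Google's method, and since Google doesn't publish a similarity threshold, any cut-off you choose is your own assumption. The drink names below are made-up stand-ins, not the brands from the test.

```python
import re

def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles (overlapping word runs) in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}

def jaccard(a: str, b: str, k: int = 3) -> float:
    """Jaccard similarity of two texts' shingle sets (1.0 means identical sets)."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)

# Hypothetical pages that differ only in the (made-up) brand name.
page_a = "Fizzlo is a refreshing soft drink. Fizzlo is perfect after exercise."
page_b = "Bubblix is a refreshing soft drink. Bubblix is perfect after exercise."
print(f"{jaccard(page_a, page_b):.2f}")
```

Swapping only the brand name brings this pair down to about 0.38 on three-word shingles, because every shingle containing the name stops matching, which illustrates how small wording changes can make two pages look less like duplicates than they feel to a human reader.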

This is a really common thing we see. The most common example is people using the canonical tag on paginated page sets. This is where you might have all your blog posts or lists of products on page 1, 2, 3, 4 and so on, and someone has decided that if someone searches for this category of blog posts or products, they only ever want them to land on the first page. So they set all of the paginated pages to be canonicalised to the first one, and generally what happens is that this is completely ignored. You'll see that all of the paginated pages are still indexed and still rank on occasion, and that's because this isn't what the canonical tag is for. Each of those pages is different: they link to different articles, they're useful if someone wants to share a link to one of them, and they help you link internally. Now, pagination as an SEO issue is really complicated, and on big sites I've seen people use noindex, nofollow and all sorts to try to handle deep rabbit holes of pagination, and we've certainly got more options now that Googlebot can see lazy-loaded content. But these are the types of situations where you shouldn't really be using canonical tags, because the pages are not very similar: they share a page template, but the content on them is not similar. So my first check is always to look at the two pages, or the page set, you're trying to use the canonical tag for and make sure the content really is equivalent.

The other thing, and this sounds really basic, is to make sure the canonical tags you've set are actually correct. There are two very easy tools you can use: the first is Sitebulb and the other is ContentKing. Both are really good tools that will crawl your site and alert you if you have issues with your canonical tag specification. If you've said page A is canonicalised to page B, but then on page B you've said page B is canonicalised to page C, for instance, you're going to start to mess up your own signals to Google, and if you mess up your canonicalisation like that, Google is just going to start disregarding what you're telling them. This is another reason why they don't treat some of these things as directives: a lot of the time people can hurt themselves more than they help themselves when they use them incorrectly. So I would definitely use one of those tools to go through the whole site. It might flag up some false positives, but it will make sure your canonical tags are consistent in what you're saying and the hints you're giving Google.
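The inconsistency described here, a canonical pointing at a page that itself canonicalises somewhere else, is straightforward to check once you have crawl data. Here is a minimal Python sketch, assuming a hypothetical mapping of each crawled URL to the canonical URL it declares; tools like Sitebulb and ContentKing do this, and much more, for you.

```python
def resolve_canonical(url: str, declared: dict[str, str]) -> tuple[list[str], bool]:
    """Follow declared canonicals from url; return (path, has_loop).

    A URL with no entry, or one that points at itself, ends the chain.
    """
    path = [url]
    seen = {url}
    current = url
    while current in declared and declared[current] != current:
        current = declared[current]
        path.append(current)
        if current in seen:
            return path, True
        seen.add(current)
    return path, False

def find_problems(declared: dict[str, str]) -> list[str]:
    """Flag chains (A -> B -> C) and loops (A -> B -> A)."""
    problems = []
    for url in declared:
        path, has_loop = resolve_canonical(url, declared)
        if has_loop:
            problems.append("loop: " + " -> ".join(path))
        elif len(path) > 2:
            problems.append("chain: " + " -> ".join(path))
    return problems

# Hypothetical crawl data: URL -> canonical it declares.
declared = {
    "/a": "/b",  # page A says B is canonical...
    "/b": "/c",  # ...but B says C is canonical
    "/c": "/c",  # C is self-canonical
    "/x": "/y",
    "/y": "/x",  # X and Y point at each other
}
for problem in find_problems(declared):
    print(problem)
```

The fix for a chain is usually to point every variant straight at the final URL; a loop means the set has no consistent canonical at all.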

Linking is really important as well, and it's another thing I would look at. Say again you've got two variations of a page, page A and page B, and you decide page A is the canonical version, the one you want to rank, so you set your canonical tags to say ‘I would like page A to rank; this is the canonical version’. If within your menus and your content you're then linking to page B instead of page A, you're definitely going to send mixed signals to Google again. You're saying this is the authoritative, original, canonical page, but you're never linking to it; you're linking to one of the variations internally, which sends very mixed messages as to which is the most useful page for people to land on. The same actually applies to external links as well. I've seen cases where a specific page has been linked to a lot externally, and later on people have tried to implement canonicals and those canonical tags have been ignored, because there are really strong other signals telling search engines that this specific URL is really good. So these are the other types of signals you have to have a handle on, and internal linking is a really important one. Again, tools like Screaming Frog, Sitebulb and ContentKing can all look at how many times URLs are linked to.

Taking Screaming Frog as an example, you can take the page set that should be canonicalised, look at the variations of the URL and check their inlinks, meaning how many internal links exist to each page, and see whether those links should be pointing to the version you're specifying as the canonical one. The same applies to sitemaps: your sitemaps should only list the canonical versions of pages, not their variants. So check through all of those things, Karl, and I hope that helps. If not, feel free to send me another message and we can have a chat about it.
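Both of these checks can be sketched in a few lines of Python, assuming you've exported an internal link list as (source, target) pairs and each URL's declared canonical from a crawler. The data structures and URLs are hypothetical and are not any particular tool's export format.

```python
from collections import Counter

def links_to_variants(links: list[tuple[str, str]], canonical_of: dict[str, str]) -> Counter:
    """Count internal links whose target is a non-canonical variant.

    Keys are (variant, canonical) pairs, so you can see where each link should point.
    """
    counts = Counter()
    for _source, target in links:
        canonical = canonical_of.get(target, target)
        if canonical != target:
            counts[(target, canonical)] += 1
    return counts

def non_canonical_in_sitemap(sitemap_urls: list[str], canonical_of: dict[str, str]) -> list[str]:
    """Sitemap entries that declare a different URL as canonical."""
    return [u for u in sitemap_urls if canonical_of.get(u, u) != u]

# Hypothetical data: page B declares page A as canonical.
canonical_of = {"/page-b": "/page-a", "/page-a": "/page-a"}
links = [("/", "/page-b"), ("/blog/post", "/page-b"), ("/about", "/page-a")]
print(links_to_variants(links, canonical_of))
print(non_canonical_in_sitemap(["/page-a", "/page-b"], canonical_of))
```

Here two internal links, including one from the homepage, point at the variant rather than the canonical, and the sitemap lists the variant too, which is exactly the kind of mixed signal described above.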

Our second and last Q&A is from James Diamond, who says: ‘You were talking about backlinking the other day; is it a case of the more the better when people are linking to us, or one-and-done?’ I think this relates to the Twitter discussion (and there's a poll) going on around whether the second link from a site is worth less than the first one. So I think what you're asking here, James, and feel free to contact me again if I've got this wrong, is: once you've got a link from a website, are we done with that website, or should we try to get them to link to us more times? The short answer is that generally, when you're trying to get people to link to you, whatever strategy you're using, you would normally only expect the one link and then move on.

I do want to discuss this whole ‘is the second link from a site worth less’ question, because I think it's interesting. It's kind of a loaded question, but it's worth talking about. Purely from a PageRank point of view, to keep it super simple (the PageRank algorithm is published, and we've been told recently it's still used by Google), each link on a site should have its own independent value, which it passes to the page it's linking to. Naturally, if you get a link from a site, especially a regular content site such as a news site, the ‘value’ of that link would decay over time, because the page that link is on (and remember, search engines rank pages) will tend to sink down that site's hierarchy. For instance, if you're linked from an article that is itself linked from the site's homepage, that article will be more important within their site, because it's really high up in the hierarchy: it's one step away from all the links that go to the homepage, which is probably where most links are going to be. But in three years' time, that article probably isn't going to be on the homepage anymore; it's probably gone into an archive section, and to get to it you now have to click through archives, 2017, May, so it might be three or four clicks away, which, looking at it purely from a PageRank point of view, means it will be a less important page on that site.
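Because the original PageRank formula is public, the ‘sinking into the archive’ effect can be illustrated on a toy graph. Below is a minimal Python sketch of textbook PageRank by power iteration, using the commonly cited damping factor of 0.85. The site structure and the ten external pages linking to the homepage are made up, and this is the published algorithm, not a claim about how Google weights links today.

```python
def pagerank(graph: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 100) -> dict[str, float]:
    """Classic PageRank by power iteration.

    graph maps each page to the pages it links to; every link target must
    also be a key. Pages with no outlinks spread their rank evenly.
    """
    pages = list(graph)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, outlinks in graph.items():
            if outlinks:
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank

# Hypothetical news site: one article is linked from the homepage,
# an identical one is three clicks deep in the archive.
site = {
    "home": ["new-article", "archive", "about"],
    "new-article": ["home"],
    "about": ["home"],
    "archive": ["archive/2017"],
    "archive/2017": ["archive/2017/may"],
    "archive/2017/may": ["old-article"],
    "old-article": ["home"],
}
# Hypothetical external pages that link only to the homepage,
# which is where most of a real site's inbound links tend to land.
for i in range(10):
    site[f"external-{i}"] = ["home"]

ranks = pagerank(site)
for page in ["home", "new-article", "archive/2017/may", "old-article"]:
    print(f"{page:18} {ranks[page]:.3f}")
```

In this toy graph the article linked from the homepage ends up with more PageRank than the identical article reached through the archive, because every extra hop applies the damping factor again. The size of the gap depends entirely on the made-up structure, so treat it as an illustration of direction, not magnitude.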

Now, there have been other interesting studies about this. I've seen studies showing that if you get a link from a site and then the link completely goes, there still seems to be some lasting impact, and so on. But that decay is quite a natural process for a link to go through, so of course if you could continually get on the front page of a news website, for instance, every month, that would be great, and you would get more SEO benefit than if you just had one link that then disappeared off into the archives.

It was James Brockbank from Digitaloft who I saw comment on this thread, saying it might even be a weakness of many digital PR campaigns that they get the same links every time. One of the responses to that was essentially what I just said: that there is some benefit in getting new links higher up the hierarchy each time, showing that you're still relevant and still being talked about, which I completely agree with. But James said, and I'm going to have to paraphrase because I can't remember exactly what he wrote, that if he could choose between 10 links from one high-authority site or ten links from ten different sites, he would normally go for the 10 links from different sites. Again, I think that makes complete sense, and you can see the effect of this in lots of different ways.

So I think it's fair to say the individual SEO benefit of a specific link from a domain may be affected by how many links that domain already has to your site. To give you a basic example, what's in my mind here is site-wide links: site-wide links don't have the impact you would expect if every link on a site were given the same SEO importance. We're talking outside of PageRank here. If you get an authoritative site with, say, a hundred thousand pages to link to you, you would expect some good uplift from one link. If they gave you a site-wide link instead, then depending on whether it gets rated as spam (hopefully not, if you've got a good link profile), you might expect a bit more uplift, but you wouldn't expect the same as if each of those individual pages were passing on its full value.
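The difference between counting links and counting linking sites is easy to show. Here is a minimal Python sketch, assuming a hypothetical backlink export given as a list of source URLs. Grouping by hostname and stripping only a leading `www.` is a simplification (other subdomains count as separate hosts), and the counts say nothing about how Google actually weights each link.

```python
from collections import Counter
from urllib.parse import urlsplit

def links_per_domain(source_urls: list[str]) -> Counter:
    """Count backlinks per linking host, stripping a leading 'www.'."""
    counts = Counter()
    for url in source_urls:
        host = (urlsplit(url).hostname or "").removeprefix("www.")
        counts[host] += 1
    return counts

# Hypothetical profile: one site-wide footer link plus three editorial links.
backlinks = [f"https://www.bigsite.example/page-{i}" for i in range(5000)]
backlinks += [
    "https://news-one.example/story",
    "https://blog-two.example/review",
    "https://www.magazine-three.example/feature",
]
per_domain = links_per_domain(backlinks)
print(f"{len(backlinks)} links from {len(per_domain)} referring domains")
print(per_domain.most_common(2))
```

Here 5,003 links collapse to four referring domains, and 5,000 of them come from a single site, which is the site-wide link pattern described above.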

That's really common: we see sites with hundreds of thousands or sometimes millions of links, but not from that many domains, because they're getting site-wide links, and those links don't have a massive impact. The other thing to think about is that there are lots of interesting cases of parasite hosting, where people get pages to rank well on authoritative domains without any particular links to those pages from those domains. Those pages aren't linked internally on the domain, so they should have really low PageRank, yet they still manage to rank well because, through some algorithmic method, they're associated with that property, that website, that link graph, that entity, however you want to frame it. That's just a fact; we've seen it with Amazon-hosted pages and all sorts, spammy pages that pop into the top ten just because they're piggybacking. To me, this is all wrapped up with the same signal: the benefit of getting different sites to link to you. So I definitely think it's important, as well as doing digital PR and getting in the news regularly, to have a wide range of sites linking to you where it's relevant. I say where it's relevant because trying to plan this purely from an SEO point of view is really a tail-wagging-the-dog way to think about it. You don't actually need to know that much about algorithms; you just need to think: if you had the best content on the web in your niche, in your sector, where should you be getting links from, and what kind of diversity would you expect to see in those links? Because those are the links you need at the end of the day. You don't need to know loads about maths or algorithms to work that out; that's what Google's trying to model.

That also ties into something we've spoken about a few times before: Google having a lot of links they say they're on the fence about, which we've talked about in terms of using the disavow tool. If you've got a lot of links that Google is a bit ‘mmm’ about, they'll still be benefiting your site, and if you're also getting good links, from newspapers for example, those will keep the others in good stead and Google will give you the benefit of the doubt. If you don't have the good links and you're only getting these on-the-fence links, at some point the tower you're building could all collapse. It's not that you're getting a penalty; it's just that Google looks at your link profile and says, ‘okay, there are just too many on-the-fence links here for this to be natural, so we're going to start devaluing some of these, because there's a good chance they're not good’. I think that's what Google has been saying to us time and time again. So it's a really interesting question, and I'm sure I've said a bunch of stuff there that some people don't agree with; if you want to talk about it more, as I said, contact me by email or on social media and I'm happy to discuss it further.

Well, that's everything we've got time for in this episode. I really hope you've enjoyed it, and we're going to be back next Monday. This coming Wednesday is the next Search Norwich, with Aleyda Solis and Hannah Rampton, who'll be talking about effective site audits and using Google Sheets to build SEO tools. It's free, and you can find out more about it at searchnorwich.org. We're back on Monday the 20th of January, which is next Monday, and as usual you can get all the show notes and links from today's episode at search.withcandour.co.uk. That's everything for this episode; I hope you enjoyed it and have a great week.