06.08.2021

By Mark Williams-Cook in SEO

500+ unsolicited SEO tips

The Unsolicited #SEO tips series started on LinkedIn but unfortunately got too big for the LinkedIn article system, which is why we're now here!

If you haven't seen the unsolicited #SEO tips series before, I basically post a single SEO tip every day on my LinkedIn and then curate them into a new post for every 100 tips!

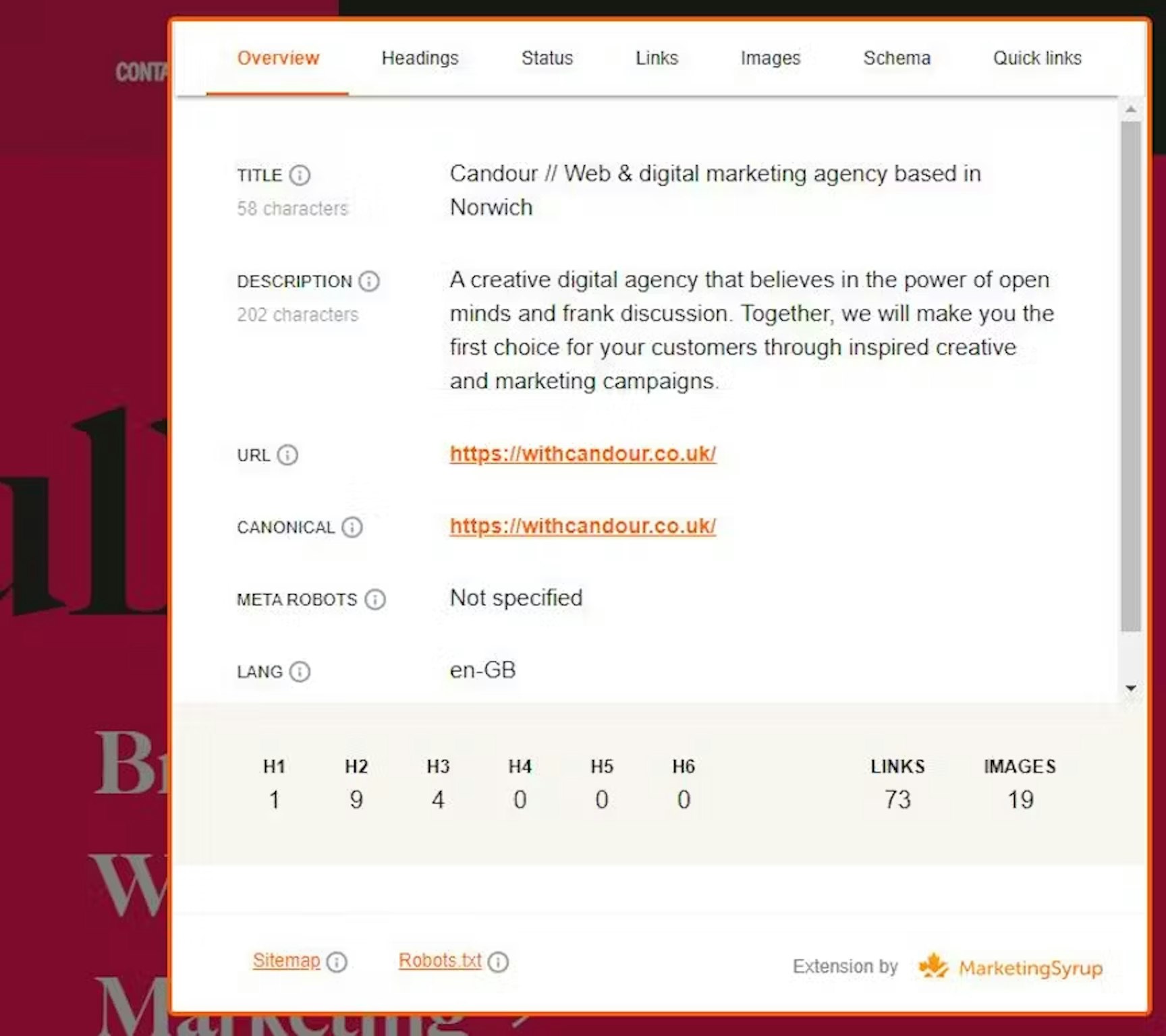

If you haven't met me before, then "hi!". My name's Mark Williams-Cook and I'm a director here at Candour, I run the free SearchNorwich meetup and the Search with Candour podcast.

I'm really proud to say we're up to more than 500 tips now: that's more advice than you would normally find in a whole book about SEO, right here, for free!

Enjoy!

2019 Unsolicited #SEO tips:

Tip #1:

"We'll produce 2 blog posts a week of 500 words". If your SEO strategy sounds similar to that, I can pretty much guarantee you are wasting your money.

Tip #2:

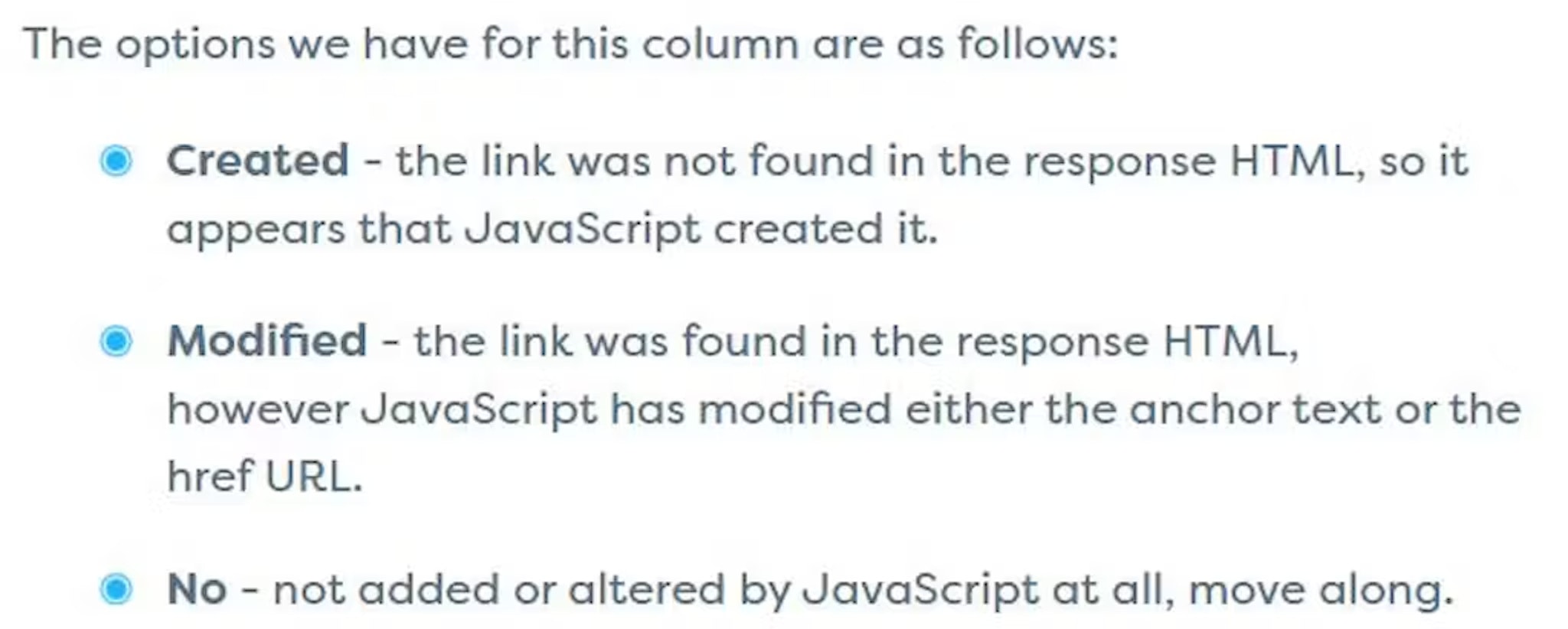

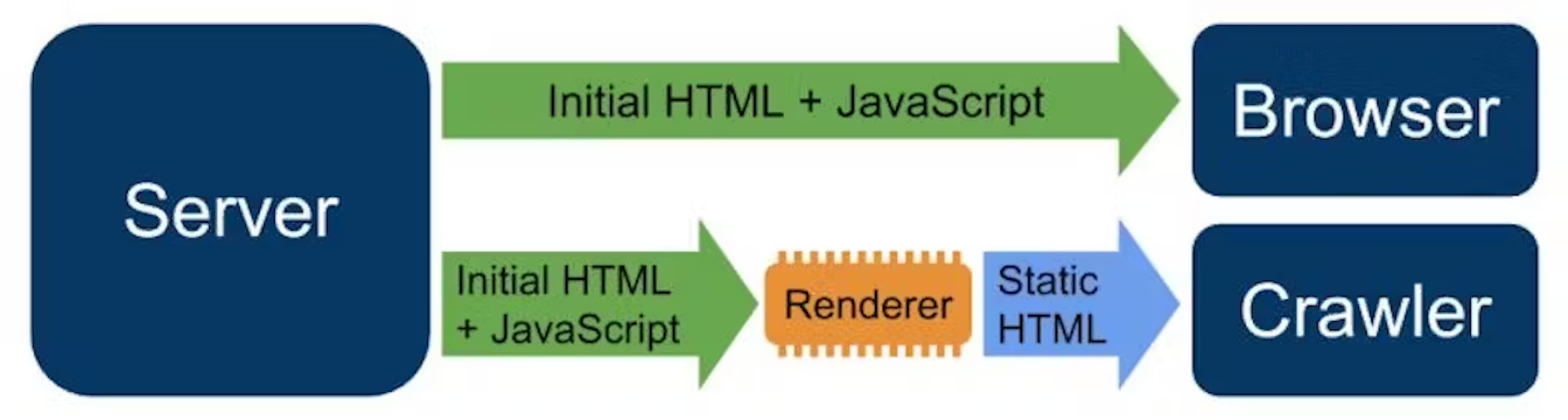

If you modify the contents of a page with JavaScript, you may find that the vanilla (non-modified) HTML is what appears in the search results for some time. While Google can process JavaScript (it does so in a median time of 5s), it can sometimes take a while for what is shown in the SERP to catch up! You can find out more about getting Google to work with JavaScript here.

Tip #3:

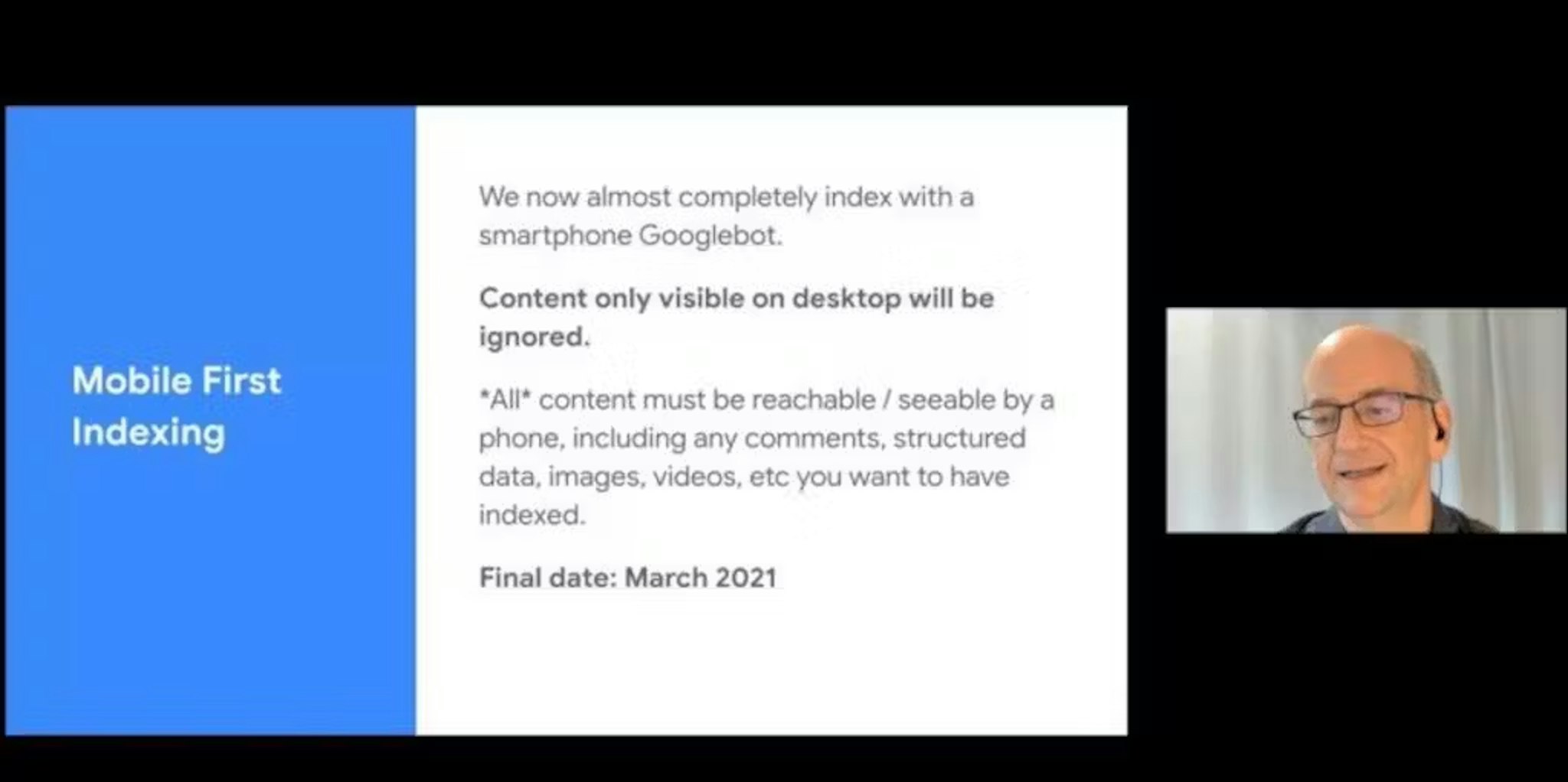

Google's "mobile-first" index means it looks at your site as if it were on a smartphone. So if you have a "mobile version" of your site with less content than your desktop version, the content missing from the mobile version is unlikely to be found. You can find out more about mobile-first indexing here.

Tip #4:

Never use automatic Geo-IP redirection to push users to different location versions of your website. It will confuse search engines, it's a bad user experience and it will actually be illegal under EU law from 2nd December! Here's some more info on internationalisation.

Tip #5:

Nobody can tell you the keyword a particular user searched for on Google and ended up on your site from organic search, despite what some tools claim to be able to do.

Tip #6:

The idea that you must keep adding new content to stay "fresh" is a myth: freshness does not apply universally. Some queries deserve freshness, others do not. Don't add new content just for the sake of it being new.

Tip #7:

If you want to outrank everyone for seasonal terms, something like "best christmas laptop deals 2018", then keep it on the same URL every year (e.g. best-christmas-laptop-deals) and just change the year in the content. If you want to keep the old content, move that to a new URL (e.g. best-christmas-laptop-deals-2017).

Tip #8:

The words you use on internal links (anchor text) are massively important. Link to internal pages with the terms you want them to rank for - it can be more important than the content on that page! The conclusion from a recent experiment: even a page where a keyword appears neither in the content nor in the meta title, but which is linked to with a researched anchor containing it, can easily rank higher in the search results than a page which contains the word but is not linked to with it. More info on that study can be found here.

Tip #9:

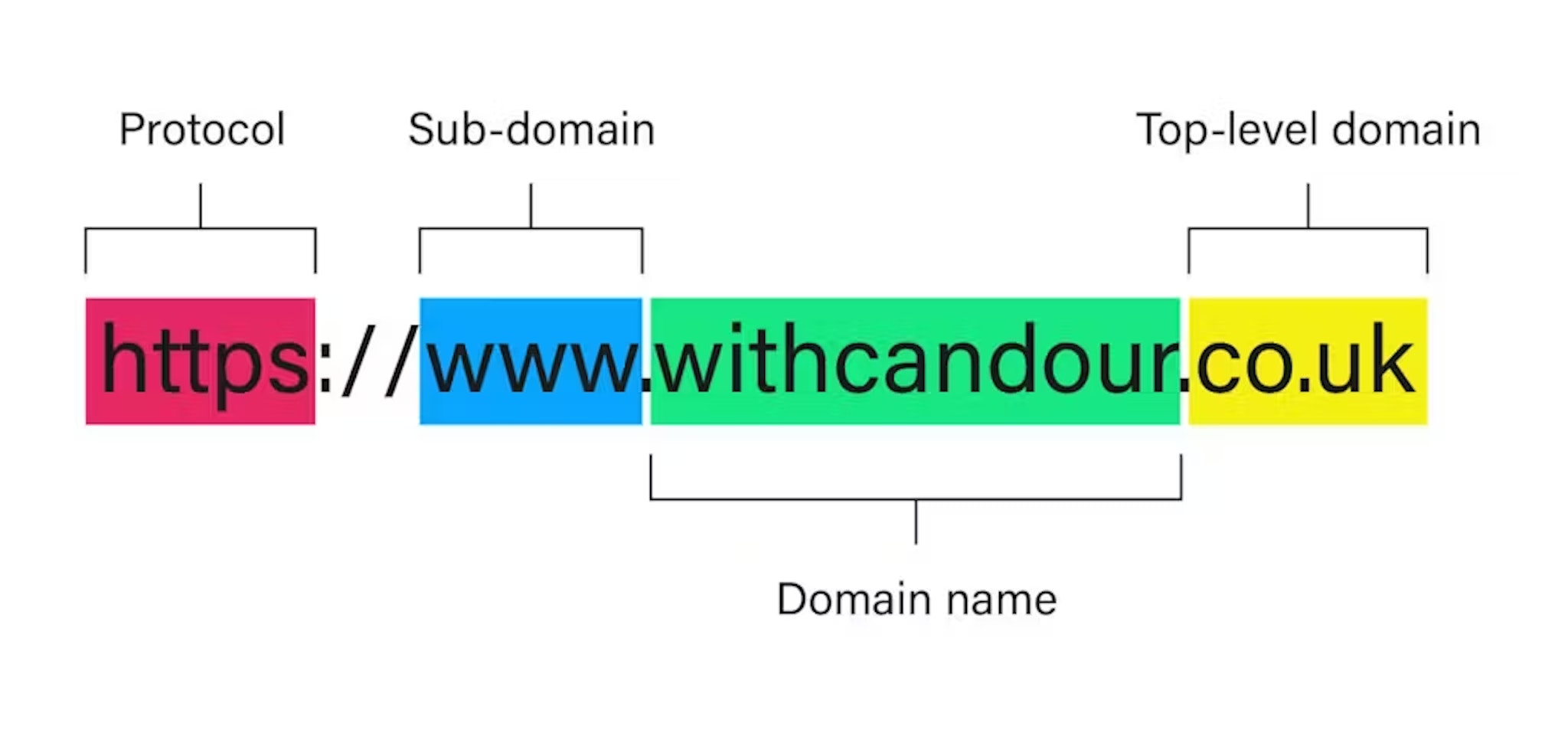

Any different URL counts as a different page to a search engine. For example, if both www and non-www versions are accessible, Google counts these as different pages. Where identical content is accessible through different URLs, you should use permanent 301 redirects to a single version.
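To make the redirect idea concrete, here is a minimal Python sketch of the mapping a 301 rule performs, assuming you have picked https on the non-www host as your preferred version. The function name is hypothetical, and in practice this rule would normally live in your web server or CDN configuration rather than in application code.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Assumption for illustration: the preferred version is https on the non-www host.
PREFERRED_SCHEME = "https"


def redirect_target(url: str) -> Optional[str]:
    """Return the URL to 301 redirect to, or None if `url` is already the preferred version."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[len("www."):]
    # Fragments are never sent to the server, so they are dropped here.
    preferred = urlunsplit((PREFERRED_SCHEME, host, parts.path or "/", parts.query, ""))
    return None if preferred == url else preferred


print(redirect_target("http://www.example.com/shoes/"))  # https://example.com/shoes/
print(redirect_target("https://example.com/shoes/"))     # None
```

Every variant (http/https, www/non-www) collapses to one URL, which is exactly what stops search engines seeing them as separate pages.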

Tip #10:

Even if you're not an e-commerce site or collecting information, all of your site should be https not http. It's good for protecting your users' privacy and as a thank you, Google counts it as a positive ranking signal :)

Tip #11:

Contrary to popular belief, adding pages to robots.txt does not stop them from being indexed. To stop a page being indexed, you need to use the noindex tag. You can find more info from Google on noindex here.

Tip #12:

Google completely ignores the meta keyword tag and has done for years. Don't waste your time writing lists of keywords in your CMS!

Tip #13:

Your meta description does not directly improve how well your page ranks in Google. It does, of course, influence how many people are attracted to click on your result, so focus on that.

Tip #14:

Google claim to treat subdomains and sub-folders the same in terms of ranking. There are some interesting challenges with subdomains, such as on sites like wordpress.com, where a subdomain can host content that the domain is "not responsible for" (in this case, random people's blogs). Some SEOs have cited examples where they believe subfolders have outperformed subdomains in terms of ranking, with other things apparently being equal. It's worth questioning whether your site really does need a subdomain and what the benefits are.

Tip #15:

Longer content does not necessarily rank better. Some studies may indicate this, but when you look at the source data, it's just because that content is so much better (and there is a higher probability longer content has had more effort invested). The web is not short of quantity of content - it's short of quality. Answer questions and intent as quickly as possible, then get into the detail if needed. More tips on what quality means this week :)

Tip #16:

Content does not just mean text! Sometimes a picture says 1,000 words and a video says even more. Google 'learns' what type of content best answers queries, and you can get great clues about what type of content to create by seeing what is already ranking. For example, the top results for "How to change a car battery" are all videos, after the short text answer! (See yesterday's tip on content length.)

Tip #17:

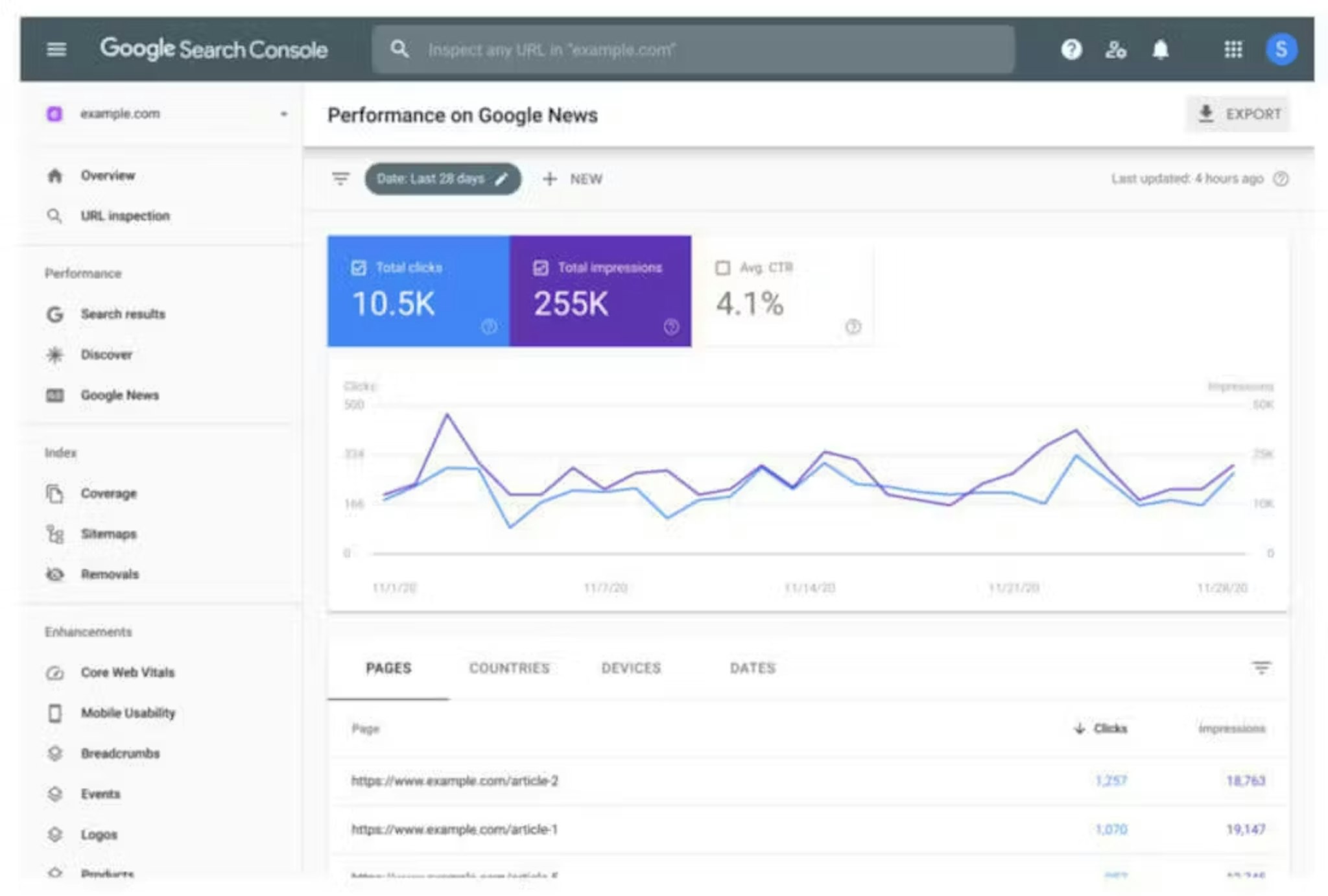

Back to basics. If you don't have one, a free Google Search Console (formerly Webmaster Tools) account will give you a wealth of diagnostic information directly from Google about your site, alert you to problems, penalties, hacks and give you average rankings and keywords your site is showing for. Here's some more info from an experiment of following a Googlebot for 3 months.

Tip #18:

You can download free browser extensions, such as User-Agent Switcher for Chrome, that will let you identify yourself as Googlebot to the websites you visit. It's really interesting seeing how some websites will deliver you a different experience when they think you're Google. It's also really useful for uncovering what is going on if you get a "This site may be hacked" warning. It's very common for hacked websites to appear "normal" to a regular user, but when they detect Google visiting the site, they'll show lots of hacked content and links to benefit the hacker's sites!

Tip #19:

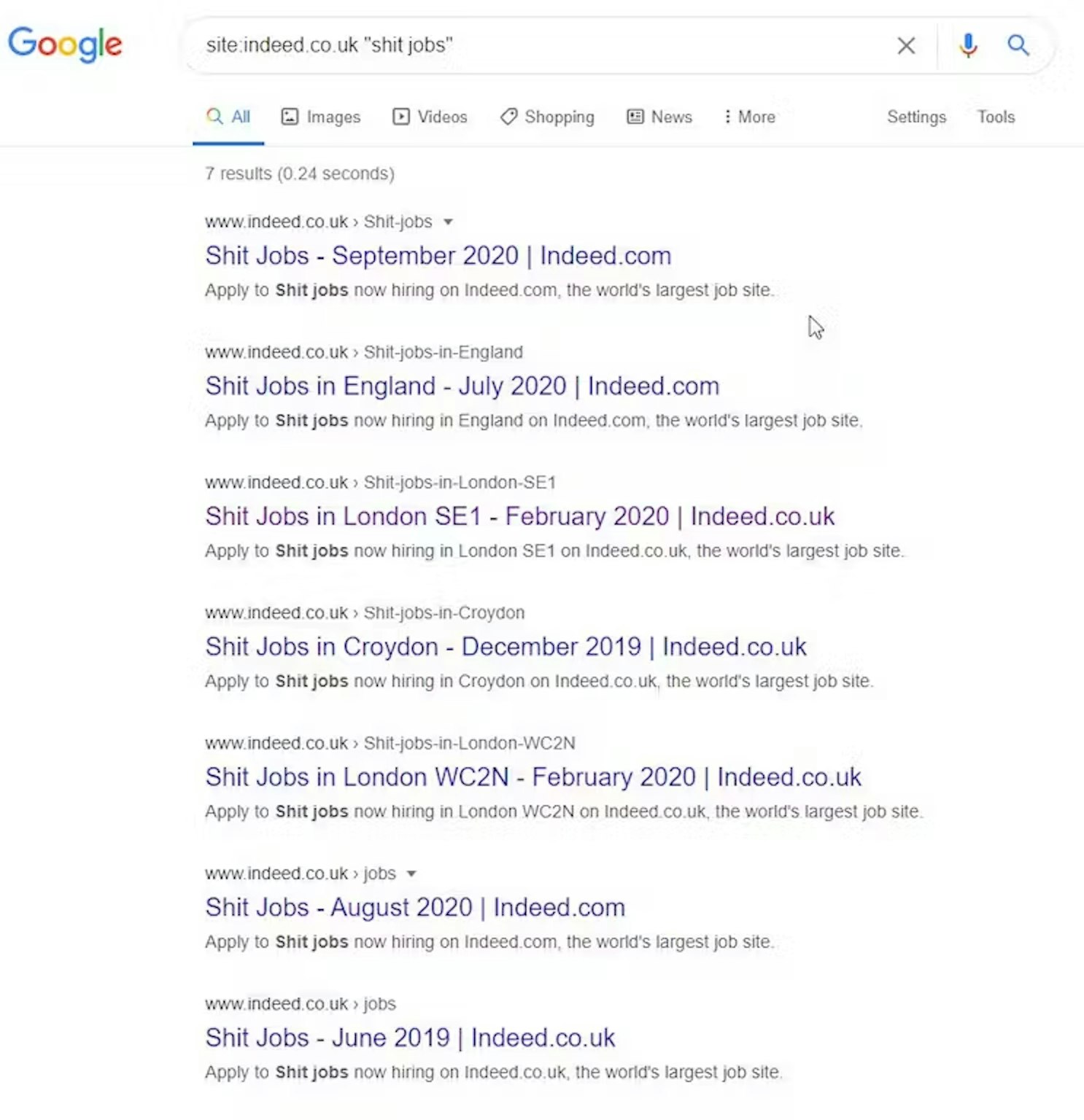

You can use search operators to get additional information about your site from Google. For instance, try a search for site:yourdomain.com and Google will show you how many results it has indexed* for your domain and will list them roughly in order of importance**.

You can also use this to see which specific page Google likes on your site for a specific keyword or keyphrase by conducting the search: site:yourdomain.com keyword

It's not 100% accurate; I've actually seen some wild variances. The only way to be sure is to check in Google Search Console, but this method works with competitors or sites whose Search Console you don't have access to.

** This obviously has no keyword context but may have some other context from your personalisation, search history, device etc. It's more a rough guide for interest and is usually expressive of your internal linking.

Tip #20:

There is almost no case in which you or your agency should be using the Google Disavow Tool. You'll probably do more harm than good. This tool is only for disavowing links when you've had a manual penalty, or when you specifically know blackhat/paid links have happened and you want to proactively remove them. In 99% of cases, merely 'spammy' links should be left alone - if Google thinks they're spammy, it will just ignore them. Focus your efforts on creating positive things instead.

Tip #21:

If you are moving your site (full or partial), DO NOT use the Remove URLs tool in GSC on the old site. It won't make the site move go any faster. It only impacts what's visible in Search so it could end up hurting you in the short-term.

Tip #22:

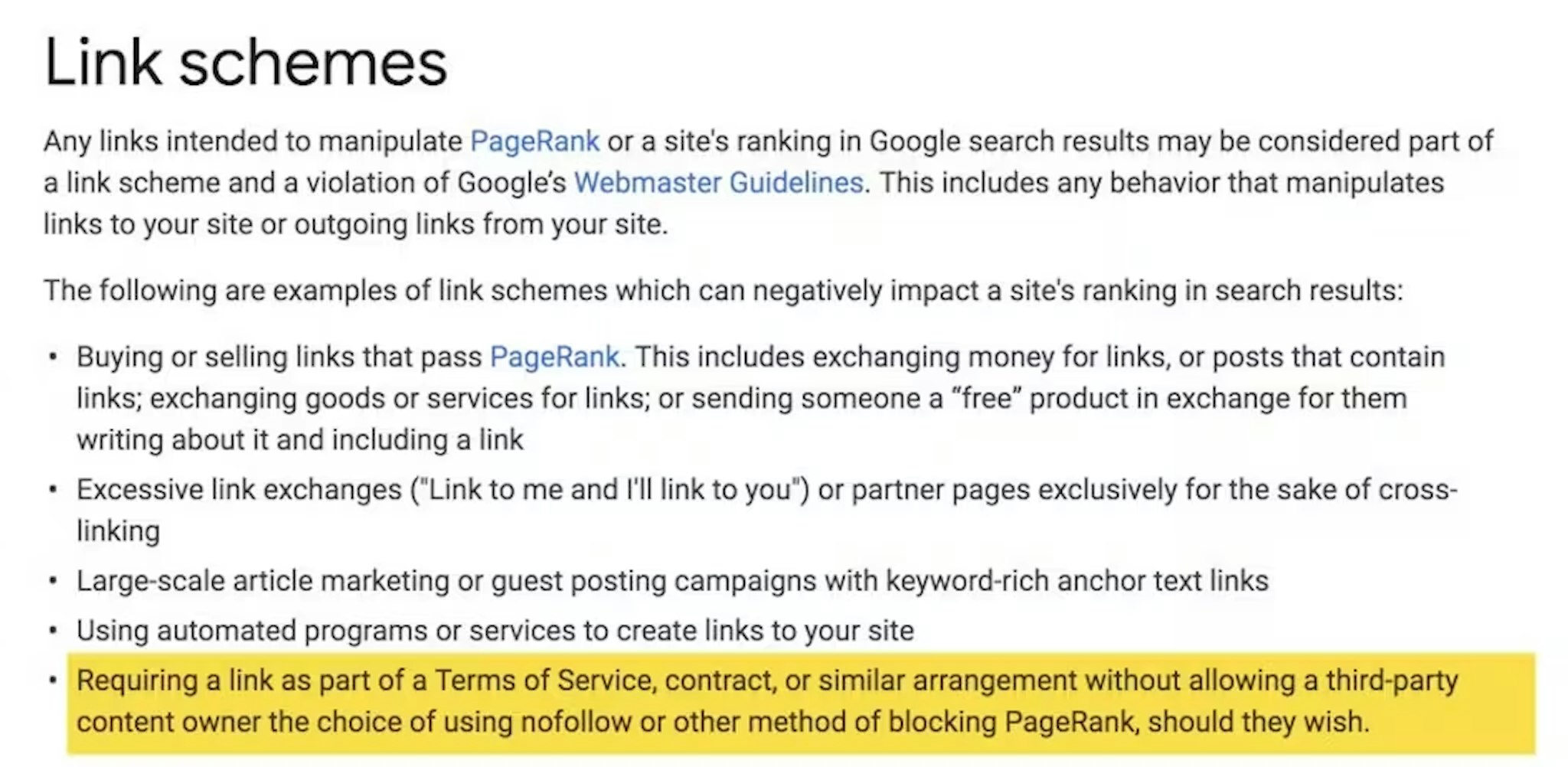

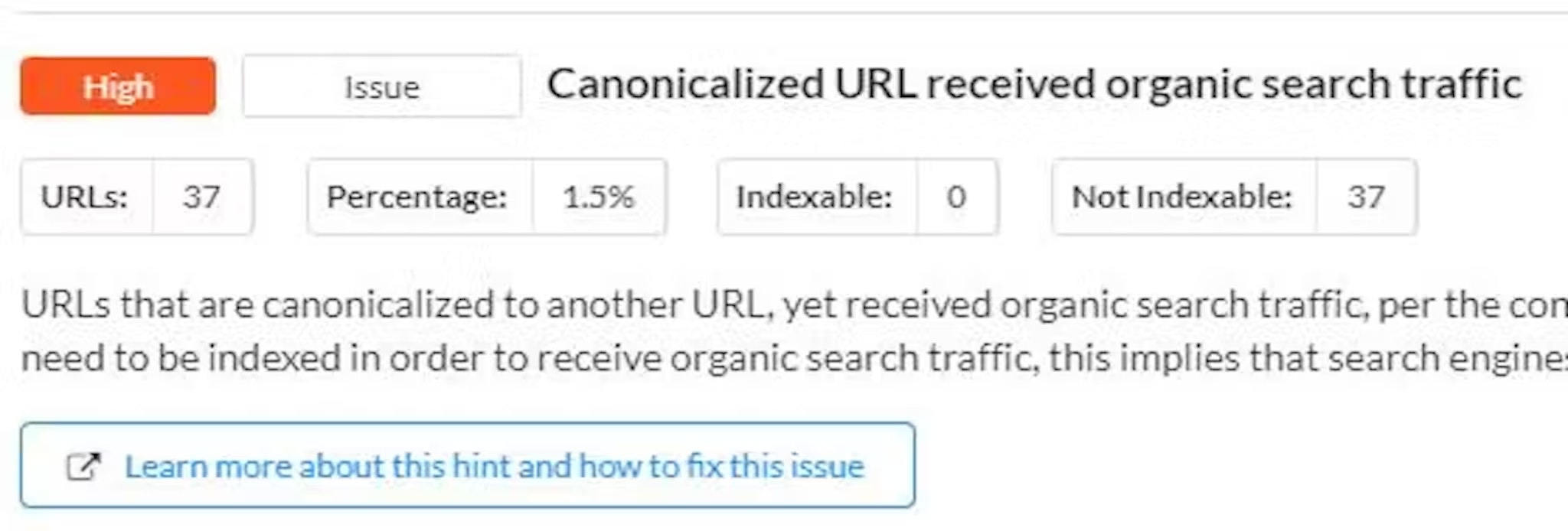

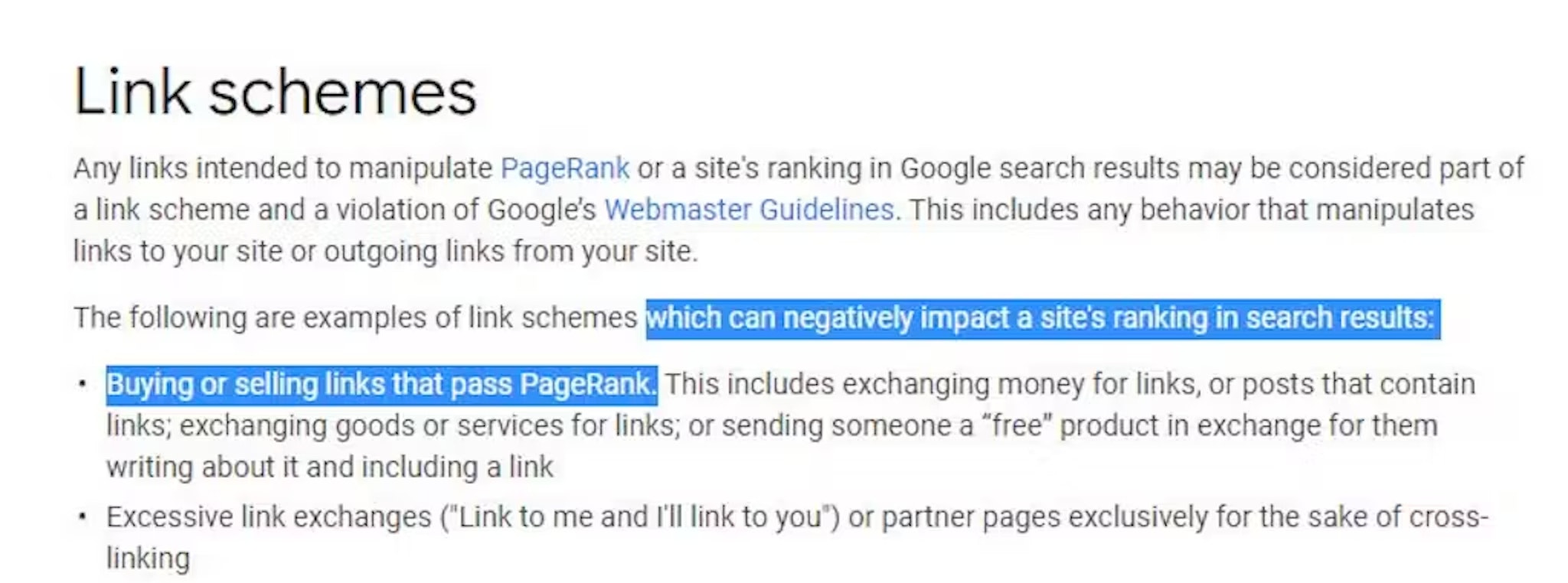

You can use a service such as Visual Ping to automatically monitor pages such as Google's Webmaster Guidelines and get an email when they have been updated. For instance, Google recently expanded its definition of 'Link schemes' to include terms of service and contractual arrangements, as shown in the screenshots above.

Tip #23:

First words are important words. If you're going to put your brand name in your page title, it usually should come after the description of the page content.

Tip #24:

If you're having to do redirects, don't do them at "database level" - e.g. in the backend of your CMS. Your site will be faster if you do the redirects "higher up", such as in the .htaccess file. Faster sites are good for users and rank better.

Tip #25:

It's a common mistake to use robots.txt to block access to the CSS / theme files of your site. You should let Google access these, so it can accurately render your site and have a better understanding of the content.

Tip #26:

"Keywords Everywhere" Chrome plugin is a nice, free way to get search volumes, search value and suggestions overlaid with every search you do. I have it on all of the time and over the months and years, you build a good 'feel' for search competitiveness and how other people search.

Tip #27:

Pop-ups and interstitials generally annoy users, you too, right? Since Jan 2017, Google has specifically stated that websites that obscure content with them and similar will likely not rank as well. Here are some examples from Google of things to avoid.

Tip #28:

Competitors copying your content? One of the many things you can do is file a DMCA notice directly with Google. This can remove your competitor's content from search results. Here is the link to file a DMCA notice. N.B. There are consequences for fake DMCA notices.

Tip #29:

If you're A/B testing different page designs on live URLs, make sure you use the canonical tag so you don't confuse search engines with duplicate content while the test is live.

Tip #30:

Ayima Redirect Path Chrome plugin allows you to live view what redirects (JS/301/302 etc) are happening and if you're getting chains of them.

Tip #31:

If your web page URLs work both with and without a trailing slash (/), search engines will think you have two identical copies of your website online. You should pick whether you want a trailing slash or not and set up permanent 301 redirects from the other version. Failing to do so can result in the 'two' pages competing against each other in the SERPs and ranking worse than a single one would.
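Here is a minimal Python sketch of the trailing-slash decision, assuming a site that has standardised on trailing slashes for pages and leaves file-like URLs (such as /logo.png) untouched. The function name and the "contains a dot means it's a file" heuristic are illustrative assumptions, not a rule from any particular server.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit


def trailing_slash_redirect(url: str) -> Optional[str]:
    """Return the trailing-slash version of `url` to 301 to, or None if no redirect is needed.

    Assumes the site has standardised on trailing slashes for pages.
    """
    parts = urlsplit(url)
    path = parts.path or "/"
    last_segment = path.rsplit("/", 1)[-1]
    # Leave paths that already end in "/" and file-like paths (e.g. /logo.png) alone.
    if path.endswith("/") or "." in last_segment:
        return None
    # Keep any query string so tracking or filter parameters survive the redirect.
    return urlunsplit((parts.scheme, parts.netloc, path + "/", parts.query, ""))


print(trailing_slash_redirect("https://example.com/about"))     # https://example.com/about/
print(trailing_slash_redirect("https://example.com/logo.png"))  # None
```

If you prefer no trailing slash, the same idea works in reverse: strip the slash and redirect, but pick one direction and apply it consistently.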

Tip #32:

[Deprecated!] No longer an indexing signal for Google, but is for other search engines

If you have paginated (page 1,2,3,4 etc) content, there is special "Prev" and "Next" markup you can use to help search engines understand what is going on, better. More info on prev and next pagination markup here.

Tip #33:

Google has always flatly denied, and there is no good evidence whatsoever, that social media posts on platforms like Facebook and their associated 'likes' and/or engagements directly impact your rankings in any way. If someone is insistent about this, look closely - you may be dealing with a clown! 🤡

Tip #34:

How 'old' a site is plays a part in how well the pages on it can rank - this means all on-site and off-site factors, such as how long links have been present. Older is better.

Tip #35:

Want to know where you rank? Googling it will just frustrate you. Even with incognito mode, you're not going to get a fair representation of the rankings. Google Search Console will give you some average rankings - but only for terms they choose. I'd recommend SEMRush. It's super cheap and will give you loads of keyword ranking data for your site, your competition and specific terms you want to track. You can get a trial here.

Tip #36:

Add a self referential canonical tag to all canonical pages. This means if someone scrapes your content or links to it with query strings, it's still clear to Google which version to give credit to. More info about self referential canonicals here.
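A self-referencing canonical can be generated from the requested URL. This minimal Python sketch assumes the site is served over https and that query strings (such as utm_ tracking parameters) never change the page content, so they are stripped; the function name is hypothetical.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def self_canonical_tag(request_url: str) -> str:
    """Build a self-referencing canonical tag for the page at `request_url`.

    Assumes https and that query strings never change page content, so they are dropped.
    """
    parts = urlsplit(request_url)
    canonical = urlunsplit(("https", parts.netloc.lower(), parts.path or "/", "", ""))
    return f'<link rel="canonical" href="{escape(canonical, quote=True)}">'


print(self_canonical_tag("https://example.com/blog/post?utm_source=newsletter"))
# <link rel="canonical" href="https://example.com/blog/post">
```

If some parameters genuinely change the content (pagination or filters, say), you would keep an allow-list of those rather than stripping every query string.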

Tip #37:

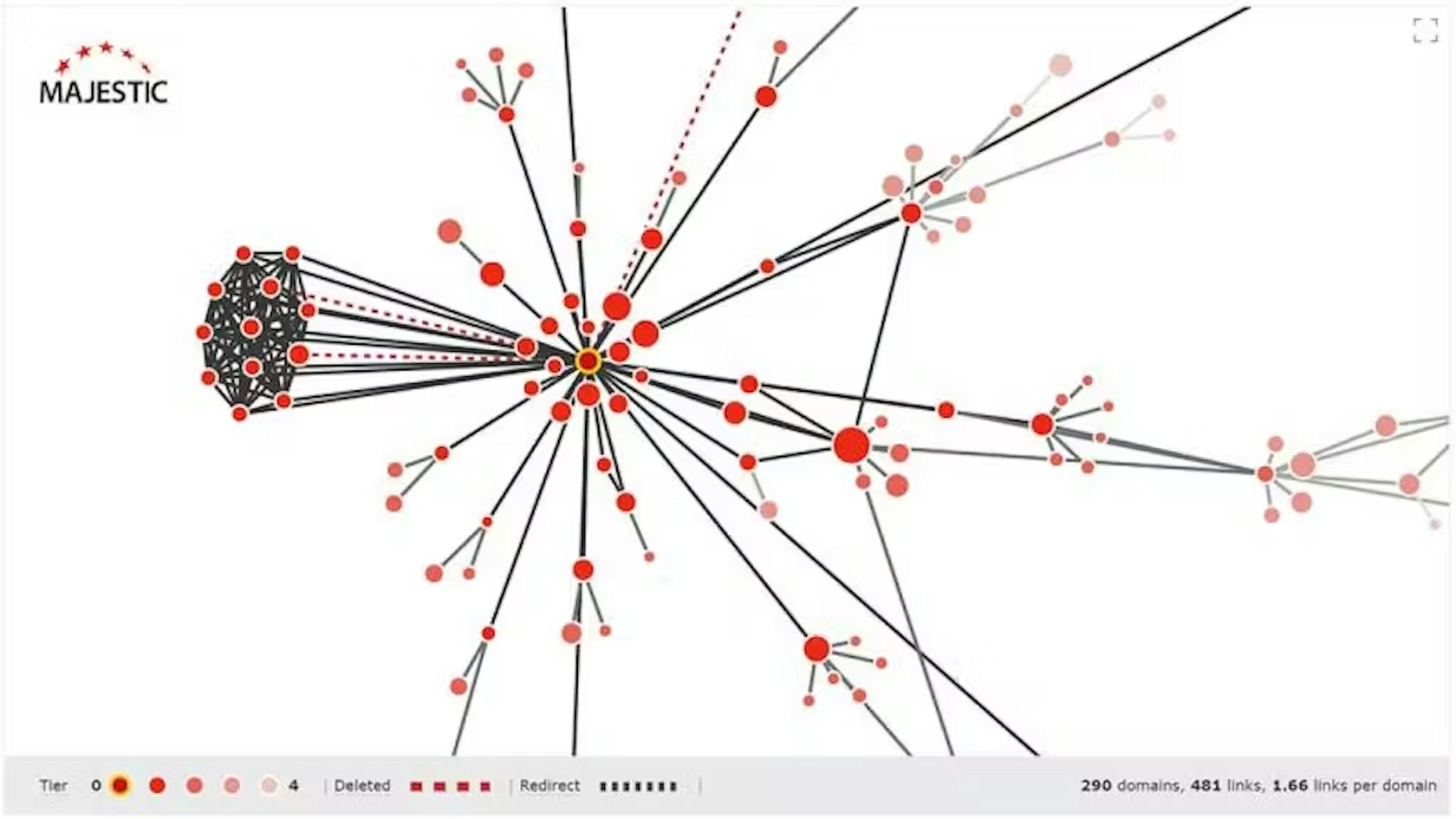

You can find easy link opportunities by using tools such as Majestic's Clique Hunter. Specify a few competitor sites and you'll get a list of links that all or most of your competitors have that you do not. This helps close the gap on where all your competitors are being talked about and you're not. More info from Majestic directly here.

Tip #38:

Watch this brilliant video from Lukasz Zelezny on SEO tips you can implement tonight! /watch?v=dXdqmVnP5pg

Tip #39:

Using a VPN is a good idea in general, but it's also really helpful for SEO. With a service like ProtonVPN, you can click to change cities or countries and see what different search results look like.

Tip #40:

Google can index PDF documents just fine and it actually renders them as HTML. This means links in PDF documents count as normal weblinks - PDFs are pretty easy to share, too....

Tip #41:

This week, I saw a company that had been told by an agency their site was slow. It really wasn't (consistent ~3.0s TTI). You can test site speed (and more) yourself using Google's PageSpeed / Lighthouse audit tools. I'll do some tips the next few days about these tools, as they are commonly misused and misinterpreted. Here is Google's PageSpeed Insights. Here is Lighthouse.

Tip #42:

If you use the page speed tools from yesterday, keep in mind that results can vary every test you run, depending on all kinds of factors. If you are going to use these tools, run multiple tests on multiple pages and get some averages.
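To illustrate "multiple tests, multiple pages, averages", here is a small Python sketch using the standard `statistics` module. The scores are hypothetical Lighthouse performance scores made up for the example, not real measurements; the median and spread are included because a single outlier run can drag the mean around.

```python
from statistics import mean, median

# Hypothetical Lighthouse performance scores from five runs per page (illustrative, not real data).
runs = {
    "/": [72, 81, 68, 77, 75],
    "/products/": [55, 63, 58, 61, 60],
}


def summarise(scores_by_page):
    """Summarise repeated test runs per page so no single run is over-interpreted."""
    return {
        page: {
            "mean": round(mean(scores), 1),
            "median": median(scores),
            "spread": max(scores) - min(scores),  # how much the runs disagreed
        }
        for page, scores in scores_by_page.items()
    }


for page, stats in summarise(runs).items():
    print(page, stats)
# / {'mean': 74.6, 'median': 75, 'spread': 13}
# /products/ {'mean': 59.4, 'median': 60, 'spread': 8}
```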

Tip #43:

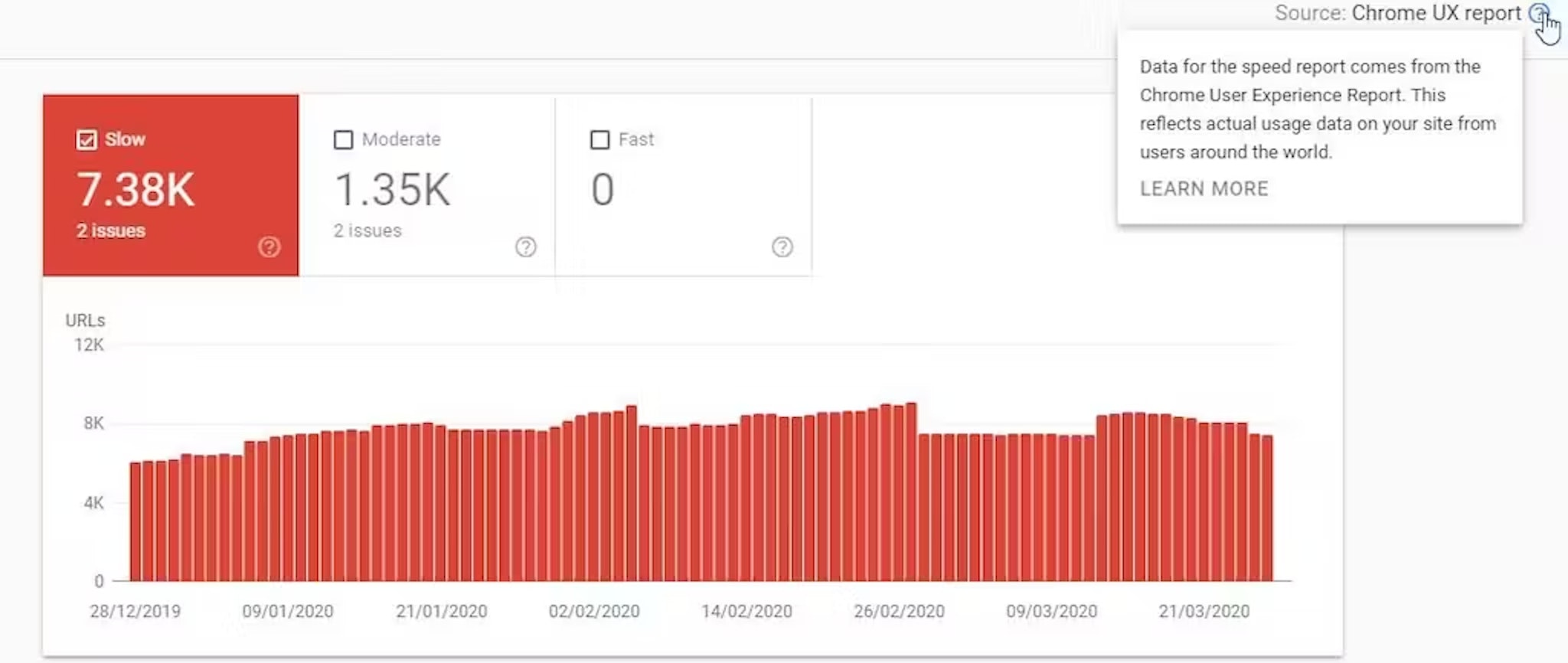

If you have enough traffic, Google's PageSpeed Insights tool will give you "Field data" - this is an amazingly useful average speed, taken directly from your users' browsers. It will give you a much better idea of how your site is performing outside of the 'spot checks' we spoke about in previous tips. Google's PageSpeed Insights tool is here.

Tip #44:

Have you heard that 50% of searches by 2020 will be voice searches? They won't, it's complete rubbish.

Tip #45:

1 in 5 searches that happen on Google are unique and have never happened before. The vast majority of searches conducted are for terms that have fewer than 10 searches per month. If you're just picking key phrases based on volume from "keyword research", you're missing the lion's share of traffic and making life hard for yourself, as lots of other people are doing the same.

Tip #46:

Check the last 12 months in Google Analytics, if you've got content pages with no traffic - it's maybe time to consider consolidating, redoing or removing those pages.

Tip #47:

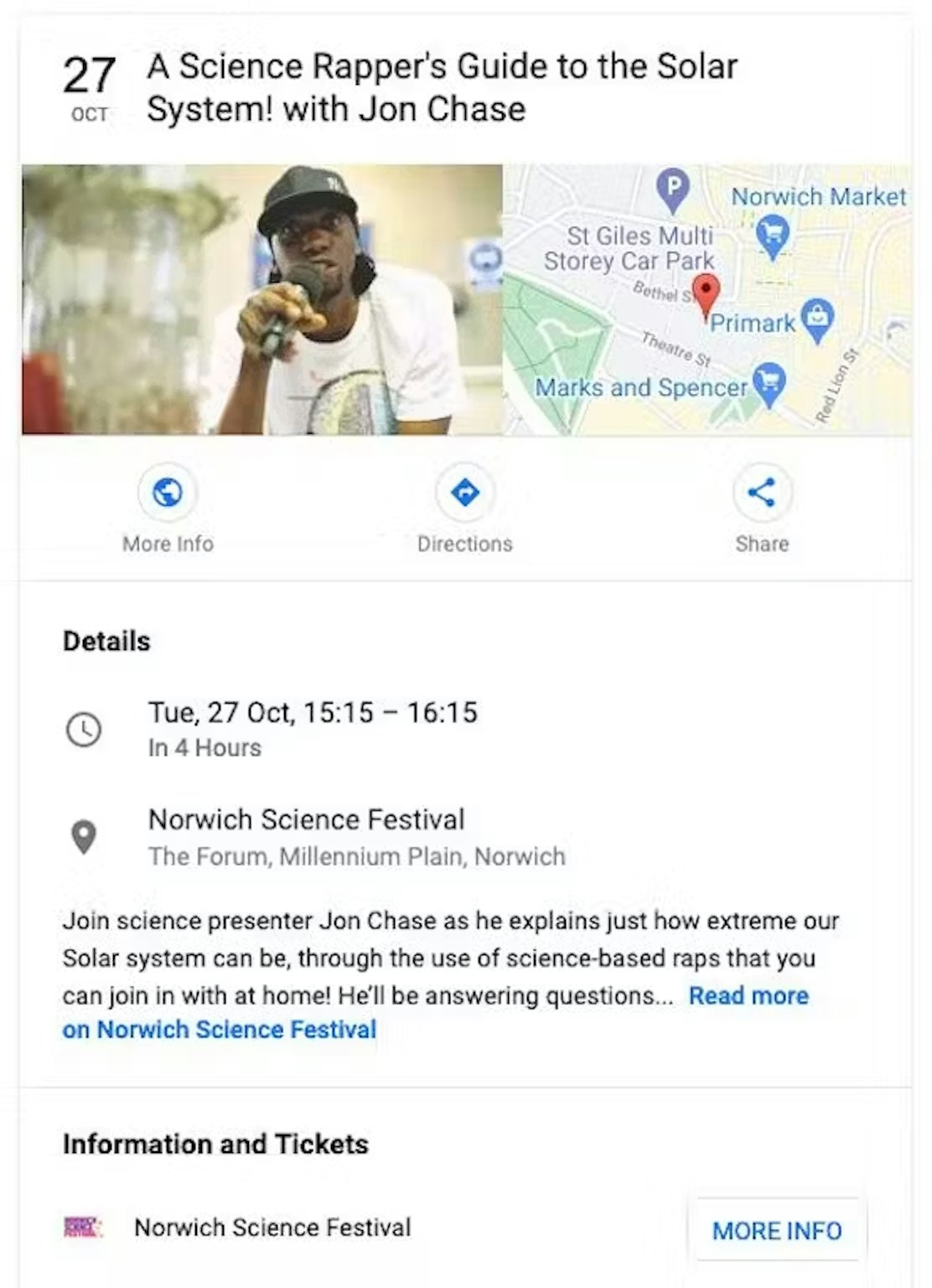

Key phrases mentioned in the reviews written about you on Google My Business help the visibility of your company for those terms.

Tip #48:

Google do not use UX engagement metrics directly as part of their core algorithm (CTR, dwell time etc). They have said this consistently for years and last week, Gary Illyes from Google referred to such theories as "made up crap" in a Reddit SEO AMA. However, there is the "Twiddler framework" that sits on top of Google's core algorithm, which is lots of smaller algorithms that do impact the end SERP. We have definitely seen SERPs change temporarily when CTR jumps, which is no doubt Google's way of trying to match intent from news stories etc.

Tip #49:

As a last resort, when your dev queue is stalled and you're drowning in technical debt, it is possible to modify things such as page titles or canonical tags via javascript with Google Tag Manager. It can take weeks for these changes to be indexed, but it does work.

Tip #50:

If you're serving multiple countries on one website, it is almost always better to do this with sub-folders rather than sub-domains or separate TLDs. This means mywebsite.com/en-gb/ and mywebsite.com/fr-fr/ are almost always preferable to en-gb.mywebsite.com and fr-fr.mywebsite.com.

Tip #51:

You need other websites to link to your website pages if you want to rank well in Google. This means if you consider SEO to be a one-off, checkbox task of completing items on an audit, you are unlikely to see success. Technical SEO gives you the foundation to build on, not the finished article. #backtobasics

Tip #52:

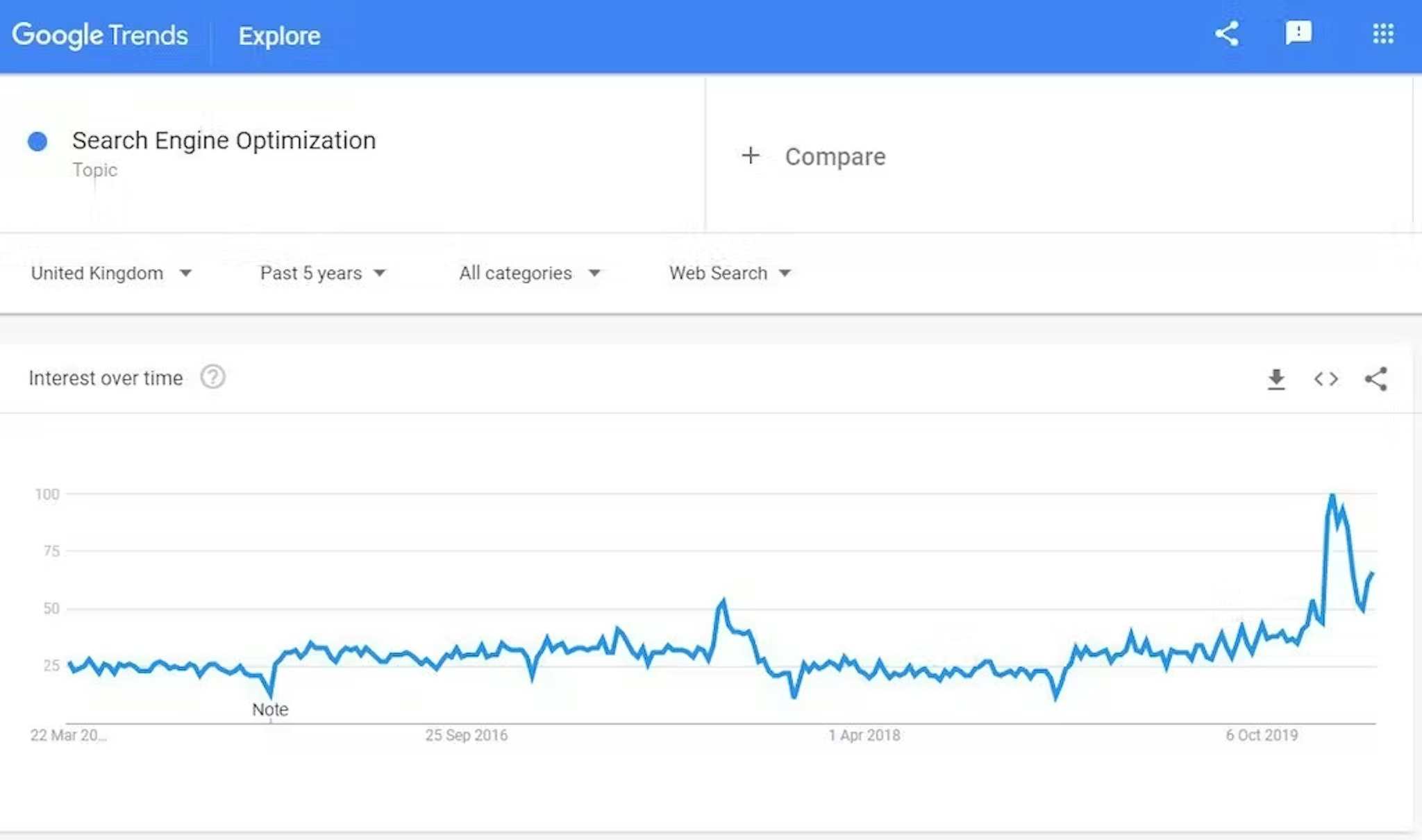

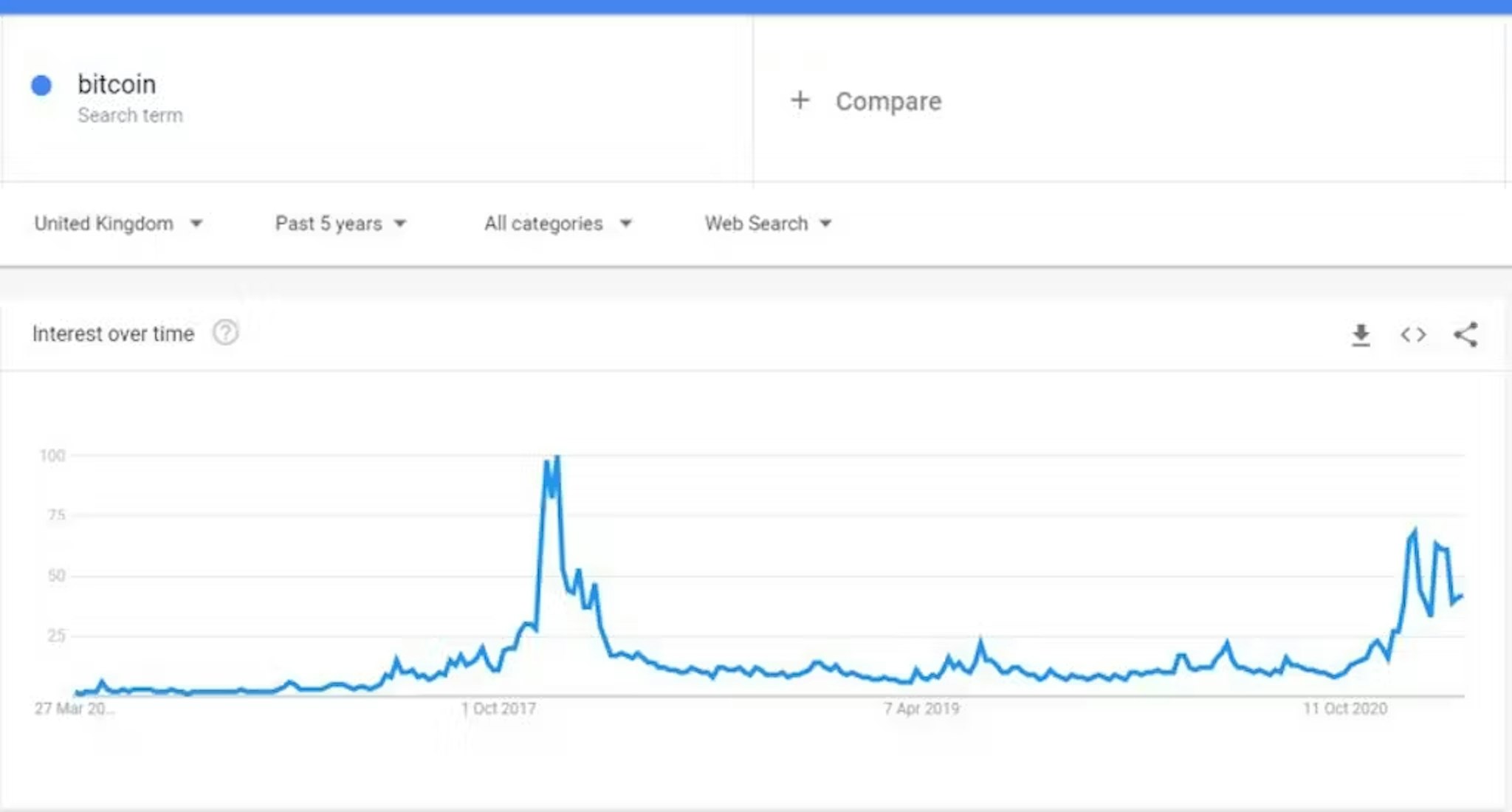

Have a play with Google Trends! It is useful to see trends in searches, when they happen every week, month or year. How much do they vary or are they trending up or down? Here's a funny trend for two searches (different Y axis) for searches around 'solar eclipse' and 'my eyes hurt' :)

Tip #53:

You can do some basic brand monitoring for free with Google Alerts. This gives you the opportunity to do 'link reclamation' - when websites are mentioning your brand or website and not giving you that link. Strike up a friendly conversation, offer them some more value, detail, insight and get that request in to get the link :-)

Tip #54:

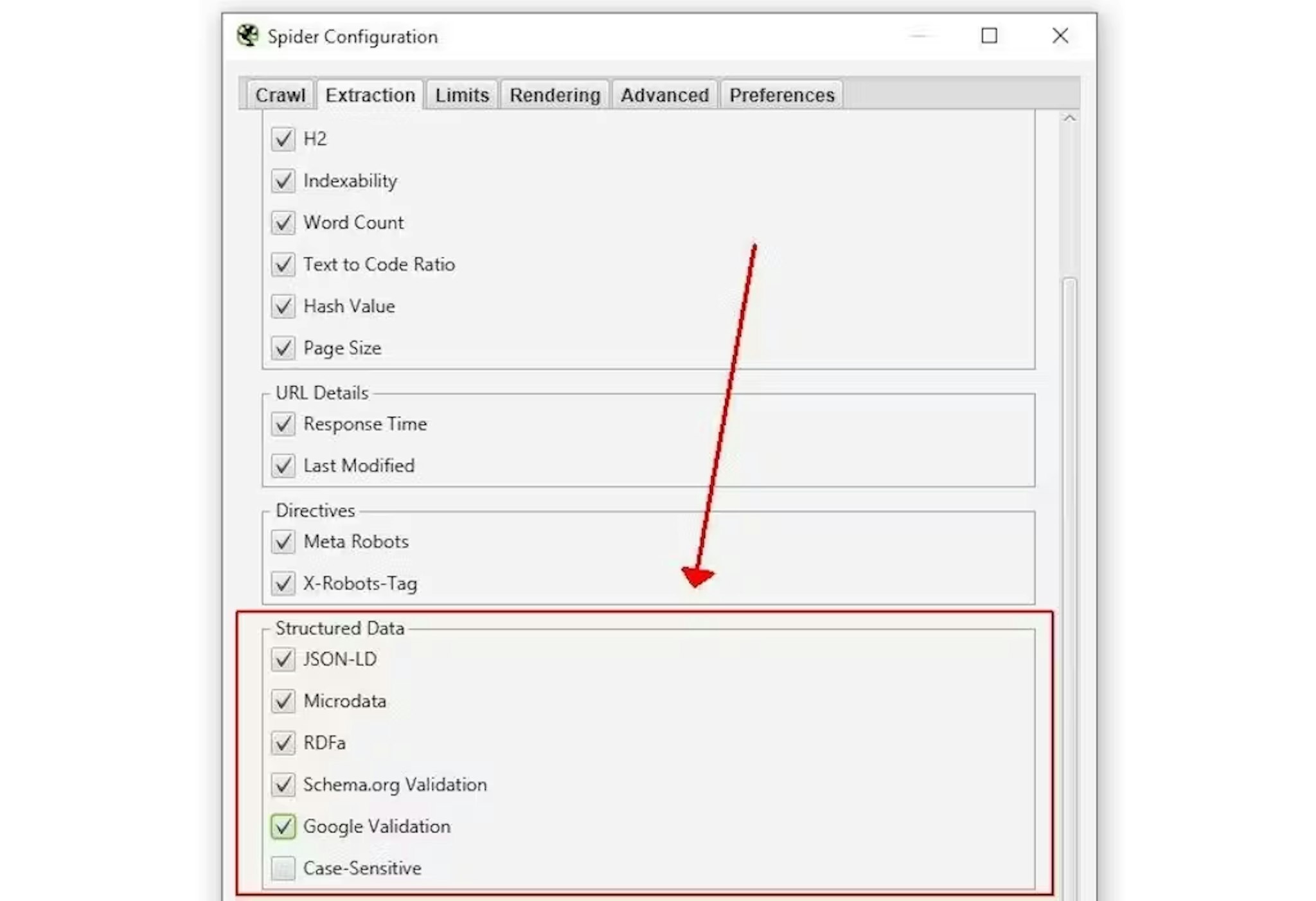

Use schema. It's important. Here's a short video about it. /watch?v=xYHK-laEhk4

Tip #55:

Registering for Google My Business, which is free, is how you can start ranking in the local map box results.

Tip #56:

While you can modify page content with javascript, such as with Google Tag Manager, this should be an absolute last resort. In this experiment I did, it took Google 24 days to render the javascript version of a page!

Tip #57:

Stuck for good content ideas? Put a broad subject (like 'digital marketing') into AnswerThePublic and you'll get a list of the types of questions people are asking in Google!

Tip #58:

Screaming Frog is a tool with a free, limited version that allows you to quickly 'crawl' your pages like a search engine would, to see issues such as 404s or duplicate page titles.

Tip #59:

Video is often overlooked: YouTube is the second largest search engine in the UK. There is more to SEO than just Google search!

Tip #60:

Want a better chance that your videos will appear in search results? Then create video sitemaps! Video sitemaps give additional information to search engines about videos hosted on your pages and help them rank.
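A video sitemap is just XML, so it is easy to generate. This Python sketch uses the standard `xml.etree.ElementTree` module and assumes one video per page; the four video child tags used here are, as far as I know, the core ones Google documents, but do check Google's video sitemap documentation for the full and current list. The page and video URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
VIDEO_NS = "http://www.google.com/schemas/sitemap-video/1.1"
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("video", VIDEO_NS)


def build_video_sitemap(entries):
    """Return a video sitemap as a string; each entry describes one page with one video."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for entry in entries:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = entry["page"]
        video = ET.SubElement(url, f"{{{VIDEO_NS}}}video")
        for tag in ("thumbnail_loc", "title", "description", "content_loc"):
            ET.SubElement(video, f"{{{VIDEO_NS}}}{tag}").text = entry[tag]
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical page and video URLs, for illustration only.
print(build_video_sitemap([{
    "page": "https://example.com/guides/change-a-car-battery/",
    "thumbnail_loc": "https://example.com/thumbs/car-battery.jpg",
    "title": "How to change a car battery",
    "description": "A step-by-step video guide to changing a car battery.",
    "content_loc": "https://example.com/videos/car-battery.mp4",
}]))
```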

Tip #61:

Don't stress about linking to other websites where it's relevant and useful to the user. That's how the web works and is absolutely fine!

Tip #62:

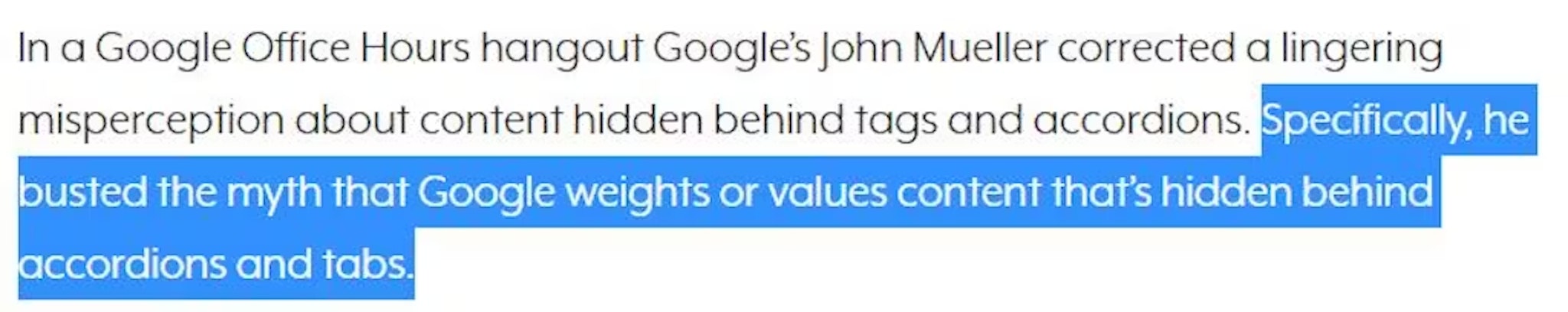

Did you know that sending someone a free product to review and get a link is against Google's guidelines and comes under 'link schemes' that could land you with a penalty?

Tip #63:

The factors to rank in the local map pack results are different to 'normal' rankings (but there is overlap).

Tip #64:

How much is your organic traffic worth? One way to get a good estimate is to find out how much it would cost to buy that search traffic through paid search. The cost per click (CPC) of a keyword is set by market demand and can be used as a barometer for the value of your rankings. Tools like SEMrush can do this for you automatically. In this example, the estimated monthly value of the organic traffic is £5,700.
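The arithmetic behind this estimate is simply estimated organic clicks multiplied by CPC, summed over your keywords. Here is a minimal Python sketch with made-up numbers; real tools estimate clicks from rankings and search volume in their own ways, so treat this as the basic idea rather than any tool's actual formula.

```python
# Hypothetical keyword data: (keyword, estimated monthly organic clicks, CPC in £).
# Illustrative numbers only, not taken from any real tool or site.
keywords = [
    ("example keyword one", 1200, 1.50),
    ("example keyword two", 800, 2.25),
    ("example keyword three", 300, 0.80),
]


def organic_traffic_value(rows):
    """Estimate what the organic clicks would cost if bought through paid search."""
    return sum(clicks * cpc for _keyword, clicks, cpc in rows)


print(f"Estimated monthly value: £{organic_traffic_value(keywords):,.2f}")
# Estimated monthly value: £3,840.00
```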

Tip #65:

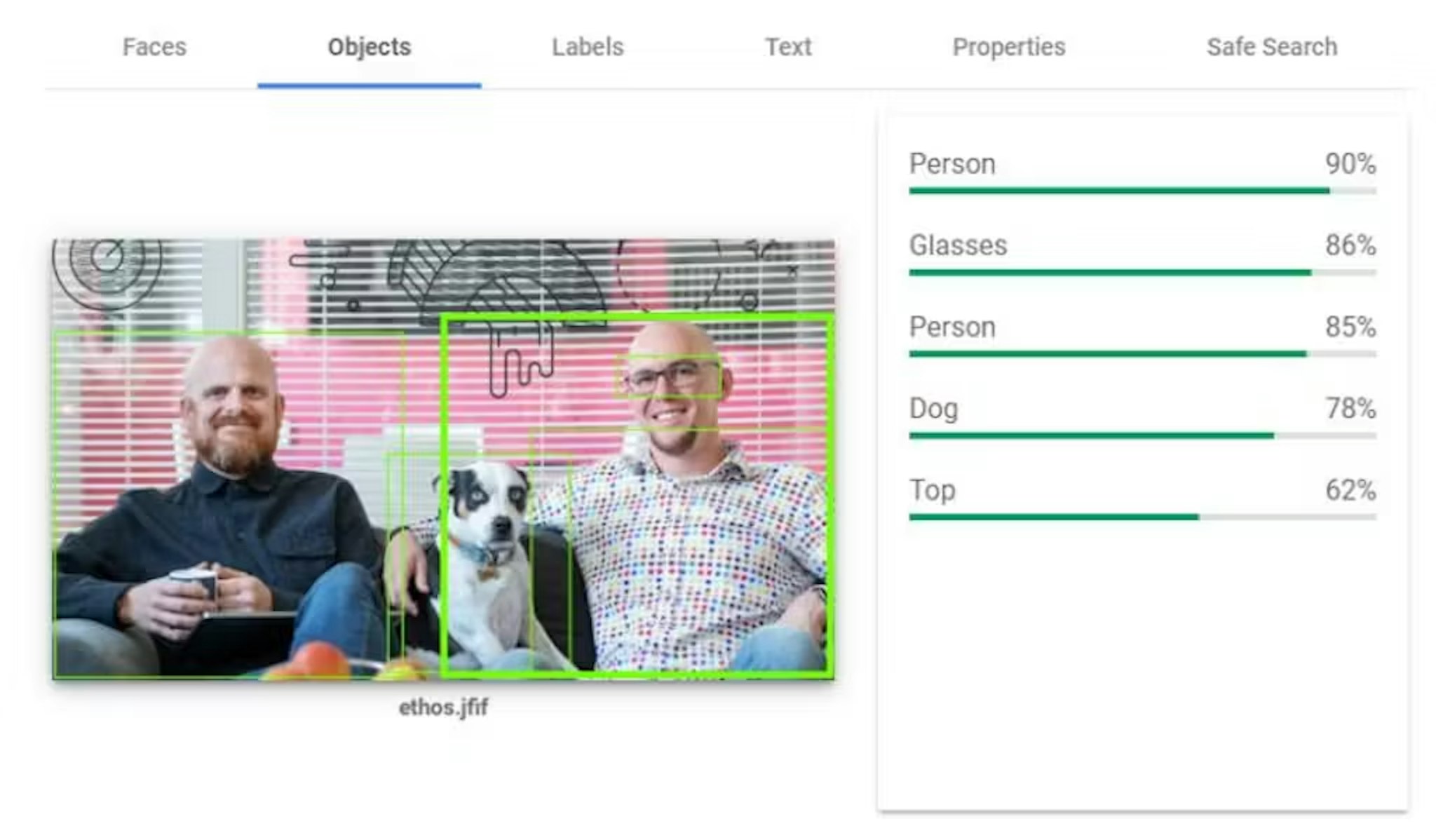

If you want your images indexed, you need an img tag within the HTML.

Tip #66:

Google Trends has a commonly-overlooked ability to trend YouTube searches.

Tip #67:

Domain age, or at least the component parts of it such as how long links have existed to it, play a part in ranking. It is almost impossible to rank a brand new domain for any competitive term.

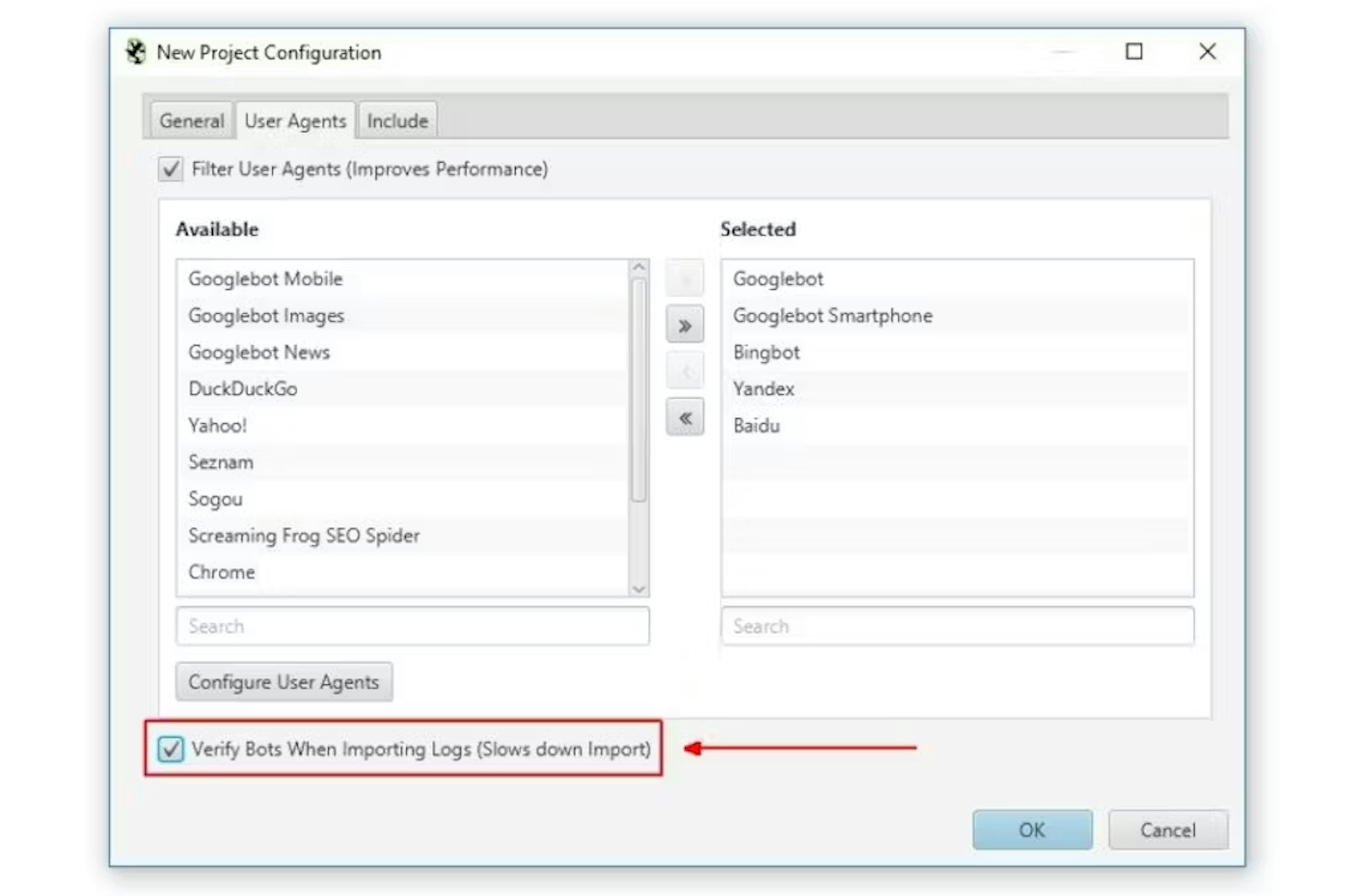

Tip #68:

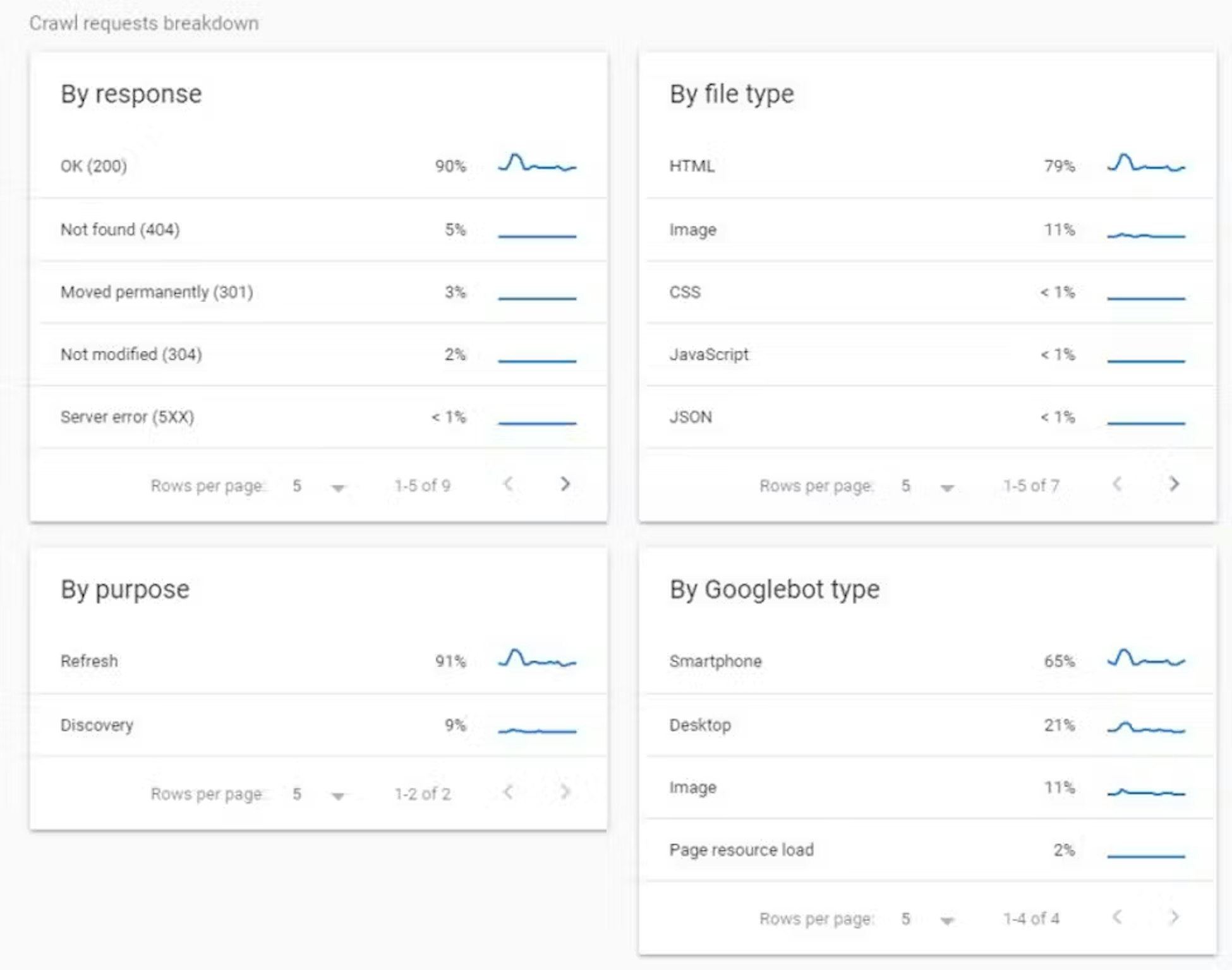

Examining your raw server log files can be a worthwhile exercise. You can see directly how Googlebot is interacting with your site and if it's getting stuck somewhere or getting error responses.
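As a starting point, here is a Python sketch that pulls Googlebot requests out of a log in the common Apache/Nginx "combined" format and counts them by path and status code, which quickly surfaces things like Googlebot repeatedly hitting 404s. It assumes that log format; yours may differ. Also note the user-agent can be spoofed, so for anything important you would verify the requests really come from Google (Google documents a reverse DNS check for this).

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format (an assumption - check your server's config):
# ip ident user [time] "METHOD path PROTOCOL" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count (path, status) pairs for requests whose user-agent claims to be Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[(match.group("path"), match.group("status"))] += 1
    return hits


# Hypothetical log lines for illustration.
sample = [
    '66.249.66.1 - - [10/Jun/2021:10:00:00 +0000] "GET /old-page HTTP/1.1" 404 512 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Jun/2021:10:06:00 +0000] "GET / HTTP/1.1" 200 1024 "-" '
    '"Mozilla/5.0 (Windows NT 10.0)"',
]
print(googlebot_hits(sample).most_common(10))
```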

Tip #69:

When doing a site migration, don't forget to migrate URLs not within your site's internal link structure. This could include links to pages with marketing parameters, for instance. These 'hidden' URLs contribute to your ranking, commonly get over-looked and result in permanent ranking drops after migration.

Tip #70:

If you're trying to do a crawl of your site with a tool like Screaming Frog and getting 403 errors, this can be because many Web Application Firewalls or services like Cloudflare will default to blocking crawlers imitating Googlebot. Get around this by setting your crawl user-agent to Screaming Frog SEO Spider or another non-search engine one (or get yourself whitelisted by IT!)

Tip #71:

Don't focus on the specifics of algorithm updates; if you're having to do that, your underlying SEO strategy probably isn't right. Algorithm updates primarily represent Google overcoming technical hurdles while still driving toward the same end goal.

Tip #72:

Golden rule of SEO - there is absolutely no 'SEO change' you should do on your site that will make the user experience worse. None. No exceptions.

Tip #73:

Ideally, you want just one h1 on the page and it should describe the page content for the user. Naturally, your page title and h1 will normally be similar.
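A quick way to audit this is to list every h1 on a page. This Python sketch uses the standard library's `html.parser`; the class and function names are hypothetical, and it only reads the HTML you give it (so it won't see h1s added later by JavaScript).

```python
from html.parser import HTMLParser


class H1Collector(HTMLParser):
    """Collects the text of every <h1> element in a page."""

    def __init__(self):
        super().__init__()
        self.h1_texts = []
        self._inside_h1 = False

    def handle_starttag(self, tag, attrs):
        if tag == "h1":  # HTMLParser lower-cases tag names for us
            self._inside_h1 = True
            self.h1_texts.append("")

    def handle_endtag(self, tag):
        if tag == "h1":
            self._inside_h1 = False

    def handle_data(self, data):
        if self._inside_h1:
            self.h1_texts[-1] += data


def audit_h1(html):
    """Return the whitespace-normalised text of each h1; none, or more than one, is worth a look."""
    collector = H1Collector()
    collector.feed(html)
    collector.close()
    return [" ".join(text.split()) for text in collector.h1_texts]


print(audit_h1("<h1>Red Running Shoes</h1><p>...</p><h1>Sale!</h1>"))
# ['Red Running Shoes', 'Sale!']
```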

Tip #74:

The main functionality of your site, such as all the important pages, should be accessible without JavaScript. Disable JavaScript and have a click around your site. If things are broken or parts are missing, this can cause big problems for Googlebot!

Tip #75:

Canonical tags are not a directive. Do not try and use them on pages that are not similar - Google will just ignore them. I recently confirmed this with an SEO test too.

Tip #76:

Struggling to get interesting data to make a narrative to get links? Did you know Google has a Dataset Search? You can search for publicly available datasets to get inspiration and save huge amounts of time.

Tip #77:

With a reasonable number of results, a 'view all page' is optimal over paginated content. Research shows 'view all' pages are also preferred by users. Google says: "To improve the user experience, when we detect that a content series (e.g. page-1.html, page-2.html, etc.) also contains a single-page version (e.g. page-all.html), we’re now making a larger effort to return the single-page version in search results."

Tip #78:

If you're trying to stop content getting indexed, remember not to have it in robots.txt - or the crawler will never get to the page to see your noindex tag!
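You can see the problem with Python's standard `urllib.robotparser`: a URL that robots.txt disallows can't be fetched, so the noindex tag on it is never read. The robots.txt content and URLs here are hypothetical, and `urllib.robotparser` is a simplified parser whose matching rules don't exactly mirror Google's, so treat it as an illustration of the principle.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks a section you also want de-indexed.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())


def noindex_is_visible(url, user_agent="Googlebot"):
    """A crawler can only see a page's noindex tag if robots.txt lets it fetch the page."""
    return robots.can_fetch(user_agent, url)


print(noindex_is_visible("https://example.com/private/old-report"))  # False: noindex never seen
print(noindex_is_visible("https://example.com/blog/old-post"))       # True: noindex can be read
```

So the fix is to remove the Disallow rule for pages you want de-indexed, let them be crawled with noindex on them, and only block them later if you really need to.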

Tip #79:

Cannibalisation is when you have more than one URL targeting the same intent / key phrase. It is one of the main problems that causes otherwise technically optimised sites with decent content to rank very poorly.
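One rough way to find candidates is to take (query, page) pairs, for example from a Search Console performance export, and flag queries where more than one URL is getting impressions. This Python sketch uses hypothetical data, and it only flags candidates: two URLs showing for one query is sometimes fine, so each case still needs a human judgement about intent.

```python
from collections import defaultdict

# Hypothetical (query, landing page) pairs, e.g. from a Search Console performance export.
rows = [
    ("red running shoes", "https://example.com/shoes/red/"),
    ("red running shoes", "https://example.com/blog/best-red-running-shoes/"),
    ("trail shoes", "https://example.com/shoes/trail/"),
]


def cannibalisation_candidates(query_page_pairs, min_pages=2):
    """Return queries for which `min_pages` or more different URLs receive impressions."""
    pages_by_query = defaultdict(set)
    for query, page in query_page_pairs:
        pages_by_query[query].add(page)
    return {
        query: sorted(pages)
        for query, pages in pages_by_query.items()
        if len(pages) >= min_pages
    }


print(cannibalisation_candidates(rows))
# {'red running shoes': ['https://example.com/blog/best-red-running-shoes/', 'https://example.com/shoes/red/']}
```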

Tip #80:

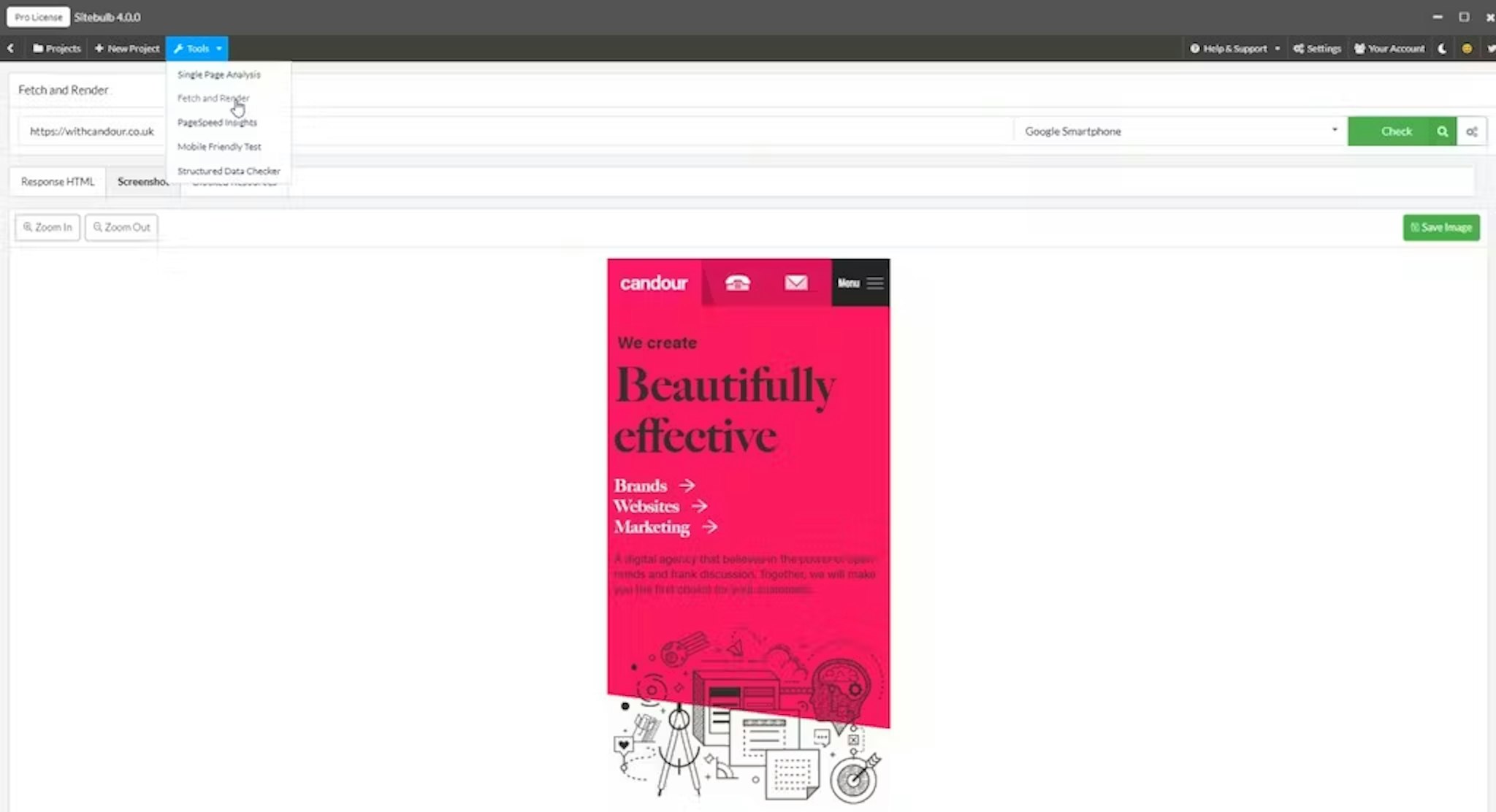

Making a visual crawl map is a fast way to get a bird's eye view of your content structure and see if you have any problems. One of my favourite tools to do this is Sitebulb. Neither Sitebulb nor Patrick Hathaway compensated me for this post. They just made a really good tool. There's a trial version to check out.

Tip #81:

If you want content to rank well over months/years, you need to design your site to link to it from 'high up' in your site hierarchy. It's generally a mistake to post evergreen content in a chronological blog, as it will slowly disappear deeper into your site, more clicks away. If it's evergreen and always relevant, it should always be prominent.

Tip #82:

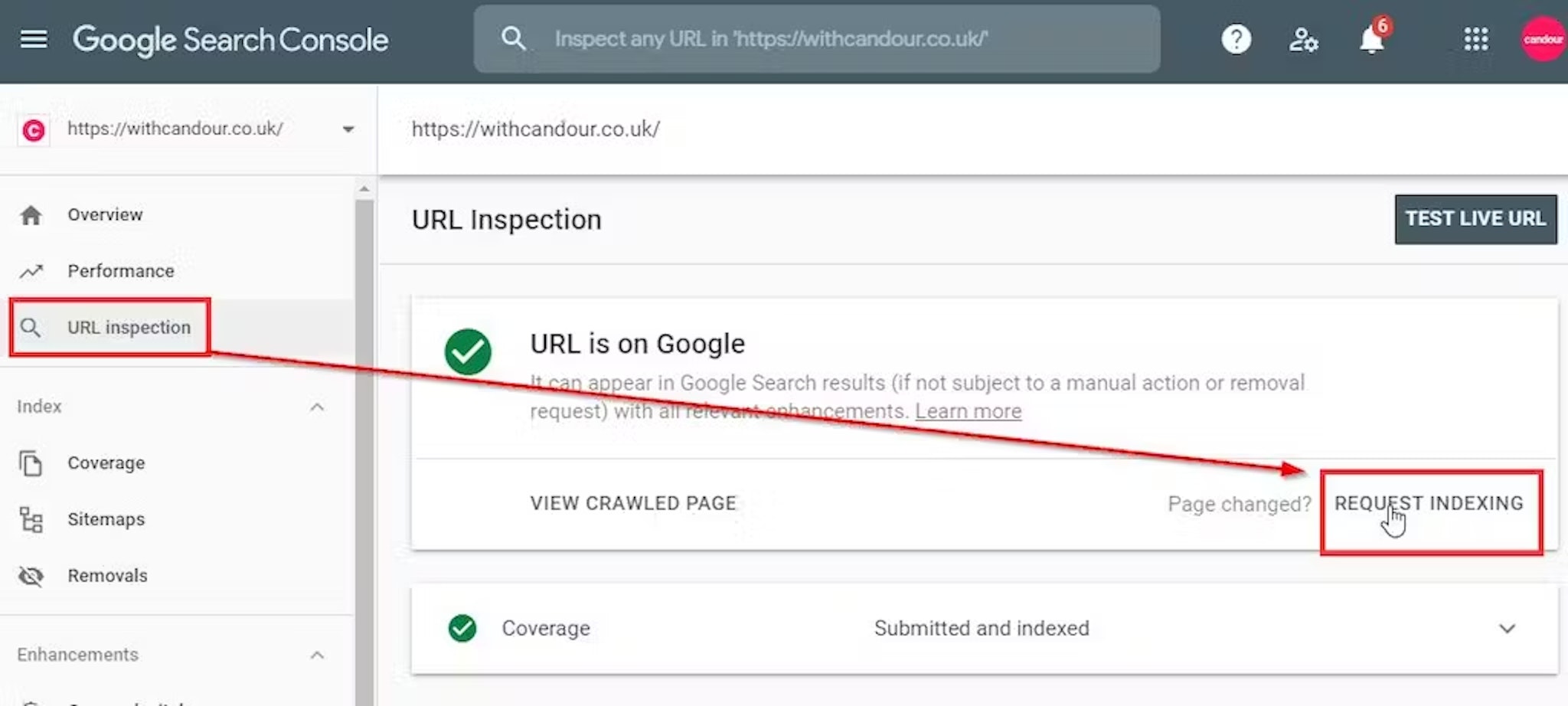

Google had a bug last week that caused millions of pages to become de-indexed seemingly at random - sometimes even big companies' home pages. This bug is now fixed - so if it affected you, there is no need to panic; these URLs should resolve automatically. If you're in a rush (who isn't?), you can speed up re-indexing by submitting the de-indexed URL via your Google Search Console account.

Tip #83:

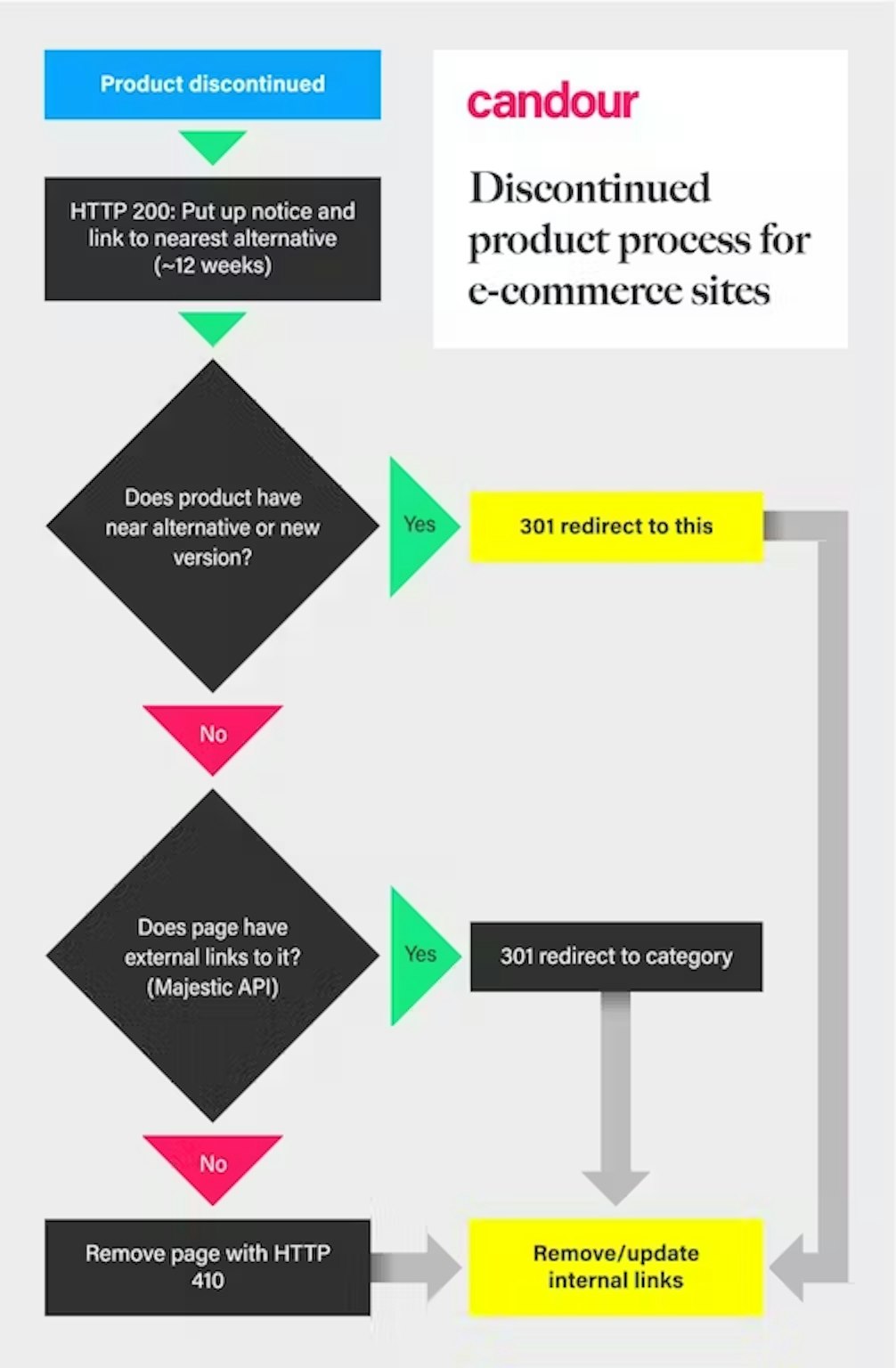

If you discontinue a popular model/product on your e-commerce site, rather than delete the page, update it to explain the product is discontinued and link to the nearest alternative products. This is more helpful to the user and prevents the loss of organic traffic.

Tip #84:

A specific 'keyword density' is not a thing, so don't waste your time on it. Apart from the fact text analysis goes far beyond this and tf-idf, it means you're writing for robots and not for humans - and therefore missing the point. The algorithm is only ever trying to describe what is best for humans, so start from there.

Tip #85:

www or non-www, pick one! Then redirect (301) one to the other. Did you know that Google and other search engines count URLs with and without www as different (and therefore duplicate) pages?

Tip #86:

Despite what they profess, the 'build your own site' platforms like SquareSpace and Wix are not optimal for SEO. While they can be great start points, it's unlikely you'll get great rankings with those sites. Even bigger platforms such as Shopify don't allow you to edit your robots.txt file! Edit: June 2021 - They do now!

Tip #87:

Do broken link reclamation. Check server logs or use a tool like Majestic to identify sites that are linking to malformed URLs. Set up 301 redirects for these to reclaim the links and get the extra traffic.
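Once you have a list of malformed URLs that people link to, you still need to decide where each one should redirect. This Python sketch uses the standard library's `difflib.get_close_matches` to suggest the closest live URL for each broken one; the paths and the 0.8 similarity cutoff are assumptions for illustration, and the suggestions should be reviewed by a human before any redirects go live.

```python
from difflib import get_close_matches


def suggest_redirects(broken_paths, live_paths, cutoff=0.8):
    """Suggest a 301 target for each broken path that closely resembles a live one.

    Paths with no live URL scoring at least `cutoff` (0-1 similarity) are left out
    so they can be handled by hand.
    """
    suggestions = {}
    for path in broken_paths:
        best = get_close_matches(path, live_paths, n=1, cutoff=cutoff)
        if best:
            suggestions[path] = best[0]
    return suggestions


# Hypothetical paths: broken ones from your logs/link tool, live ones from a site crawl.
live = ["/products/red-shoes/", "/products/blue-shoes/", "/about/"]
broken = ["/prodcuts/red-shoes/", "/totally-unrelated-page"]
print(suggest_redirects(broken, live))
# {'/prodcuts/red-shoes/': '/products/red-shoes/'}
```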

Tip #88:

Do not underestimate the power of ranking in Google Images. A huge amount of searches are visual, so it is worth making sure your image assets are properly marked up and optimised.

Tip #89:

The site: operator in Google is useful to see if you have major indexing problems, for instance if you have a 20 page site but find 5,000 indexed pages - or vice-versa - you have a 5,000 page site but only 20 are in the index. However! It will not give you an accurate count of the number of pages included in the index, so don't use it to try and measure index coverage!

Tip #90:

If you're using schema, don't use fragmented snippets; tie them together with @id - e.g. this Article belongs to this WebPage, written by this Author, who belongs to this Organisation, which owns this Website - build the graph!
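Here is a minimal Python sketch of what "building the graph" can look like in JSON-LD: each node gets an @id, and the other nodes point at it with `{"@id": ...}` instead of repeating it. The domain, organisation name and the `#organization` / `#website` style of @id values are hypothetical conventions chosen for the example; any stable, unique URI works.

```python
import json

SITE = "https://example.com"  # hypothetical domain
ORG_ID = f"{SITE}/#organization"
WEBSITE_ID = f"{SITE}/#website"


def build_article_graph(page_url, headline, author_name):
    """Return JSON-LD where each node points at the others by @id instead of repeating them."""
    webpage_id = f"{page_url}#webpage"
    author_id = f"{SITE}/#/person/{author_name.lower().replace(' ', '-')}"
    graph = [
        {"@type": "Organization", "@id": ORG_ID, "name": "Example Ltd", "url": SITE},
        {"@type": "WebSite", "@id": WEBSITE_ID, "url": SITE, "publisher": {"@id": ORG_ID}},
        {"@type": "WebPage", "@id": webpage_id, "url": page_url, "isPartOf": {"@id": WEBSITE_ID}},
        {"@type": "Person", "@id": author_id, "name": author_name, "worksFor": {"@id": ORG_ID}},
        {
            "@type": "Article",
            "@id": f"{page_url}#article",
            "headline": headline,
            "isPartOf": {"@id": webpage_id},  # this Article belongs to this WebPage
            "author": {"@id": author_id},     # written by this Author
            "publisher": {"@id": ORG_ID},
        },
    ]
    return json.dumps({"@context": "https://schema.org", "@graph": graph}, indent=2)


print(build_article_graph("https://example.com/blog/post/", "Example headline", "Jane Doe"))
```

The output goes inside a `<script type="application/ld+json">` tag on the page, as with any other JSON-LD.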

Tip #91:

When doing a site migration, try and change as few things at once as possible. E.g. if you can do the move from http to https first, do that on its own. It will make it easier to diagnose and fix the root cause of any issues.

Tip #92:

If you don't have a strategy to get people to link to you, it's going to be almost impossible to obtain competitive rankings. Links are still the life blood of rankings. Here is a recent test example. The site does not rank for years. It gets an influx of links (top graph) and the search visibility shoots up (bottom graph). The site loses links (orange, top graph) and search visibility falls (bottom graph).

Tip #93:

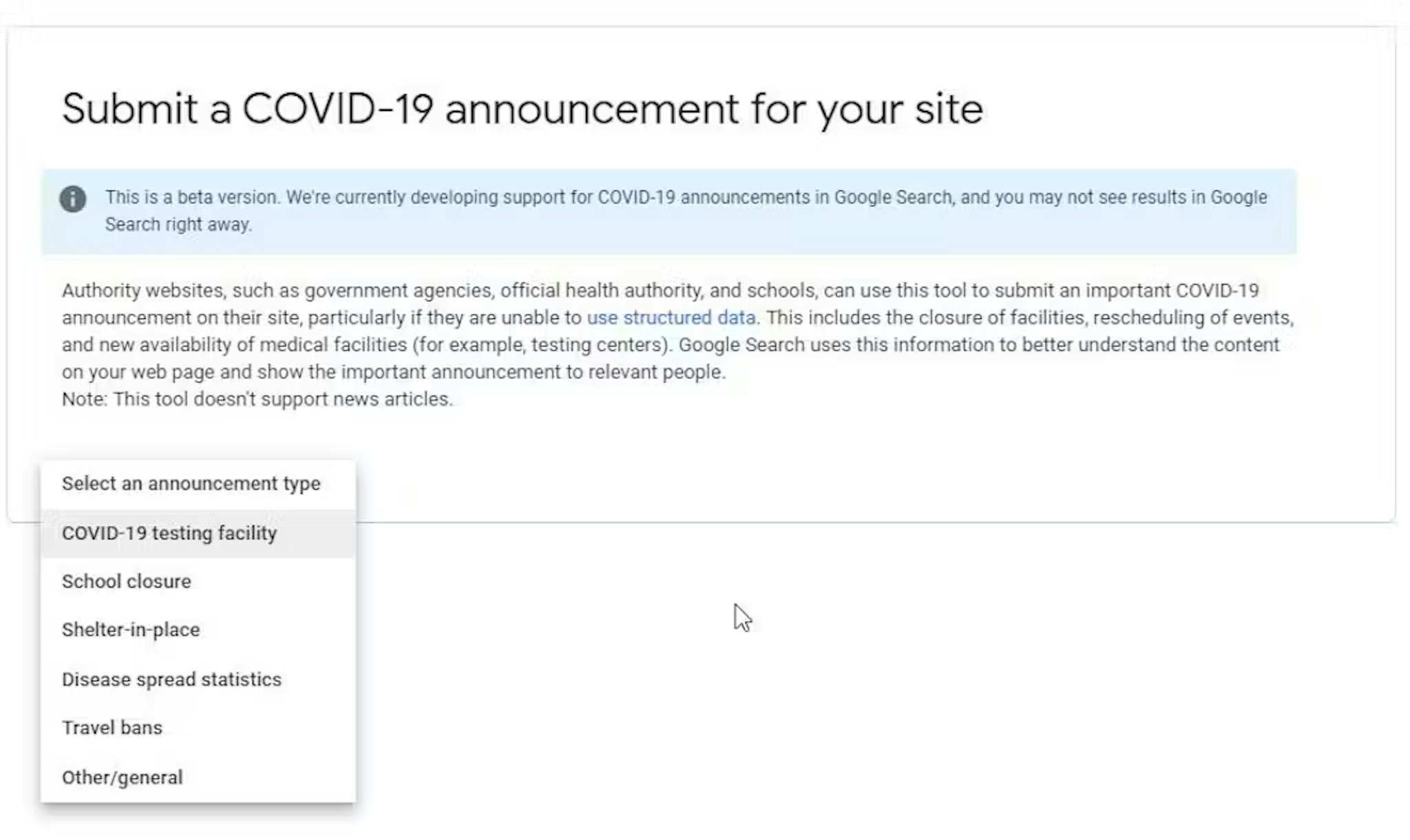

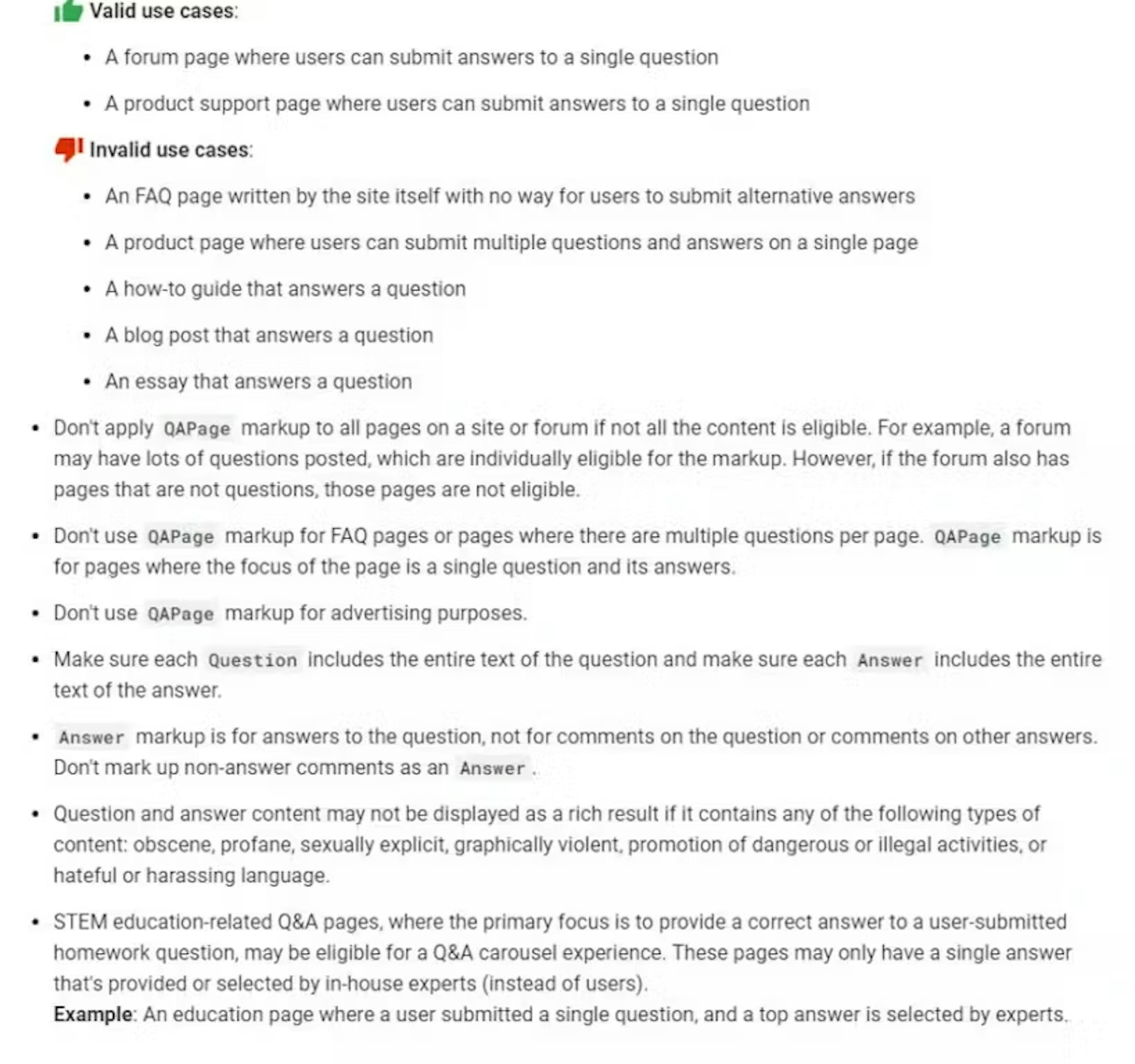

Google has just announced both Search and Assistant support for FAQ and How-to structured data. (h/t Andrew Martin). Find out more about Google's announcement here.

Tip #94:

"The content comes before the format, you don't 'need an infographic', you don't 'need a video'. Come up with the content idea, then decide how to frame it" - Brilliant advice (think I got the quote right) from Stacey MacNaught last night at #SearchNorwich.

Tip #95:

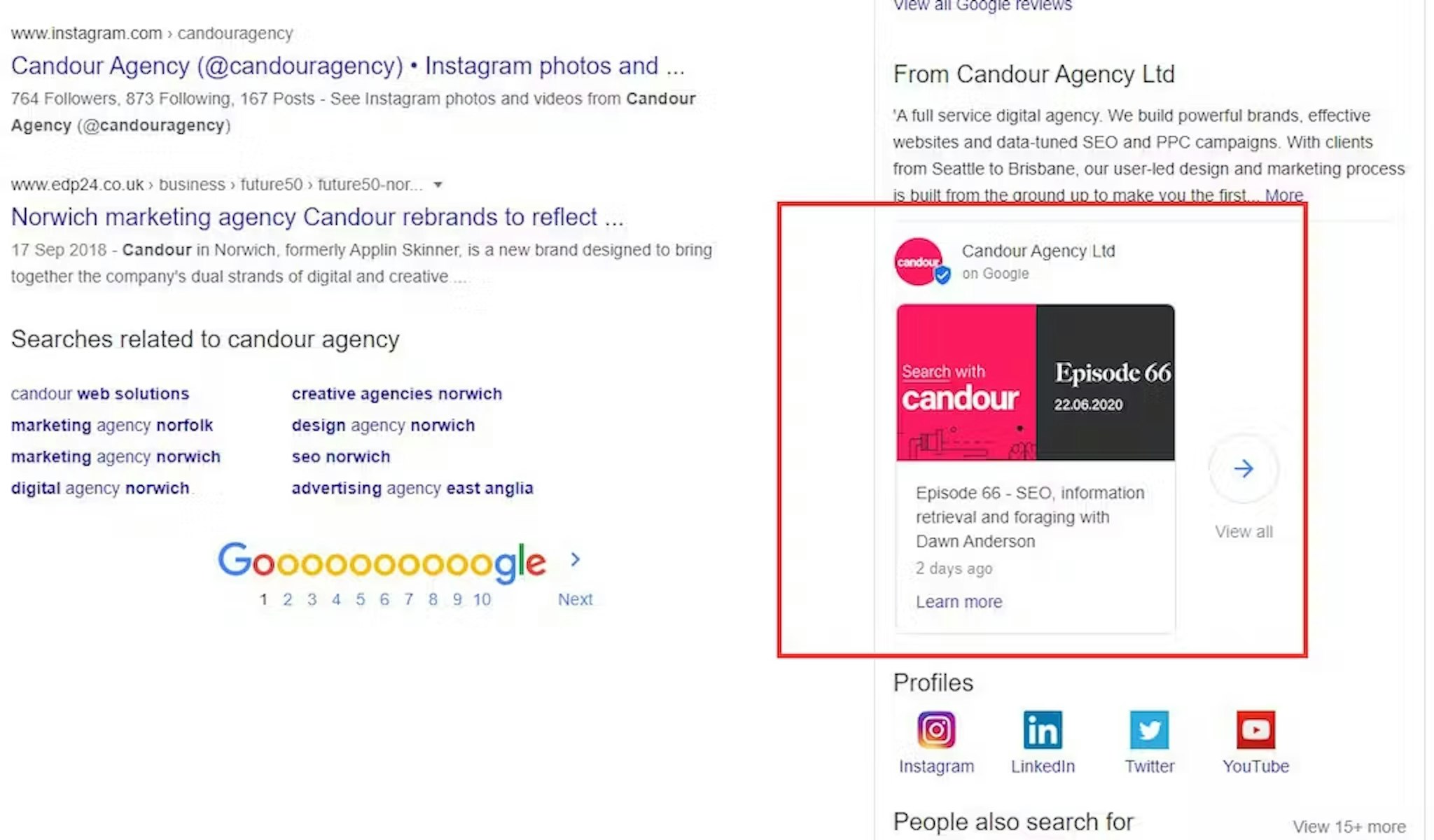

Dominating Google is about getting your information in multiple places not just your own sites. Or just making Google think you have 512 arms :-)

Tip #96:

Part of being 'the best' result comes with format. Google is bringing AR directly to search results. Your product, in the consumer's home. Doesn't get much more powerful than that! Find out more in our podcast.

Tip #97:

While it was never officially supported, Google has stopped obeying noindex commands within robots.txt. If you're using it to noindex pages, those pages will now become indexed! You'll need to declare noindex either on-page or via the X-Robots-Tag HTTP header.

Tip #98:

Got a showroom? It's not expensive to get a 360 photo done for your Google My Business and it will help you attract more in-store visitors.

Tip #99:

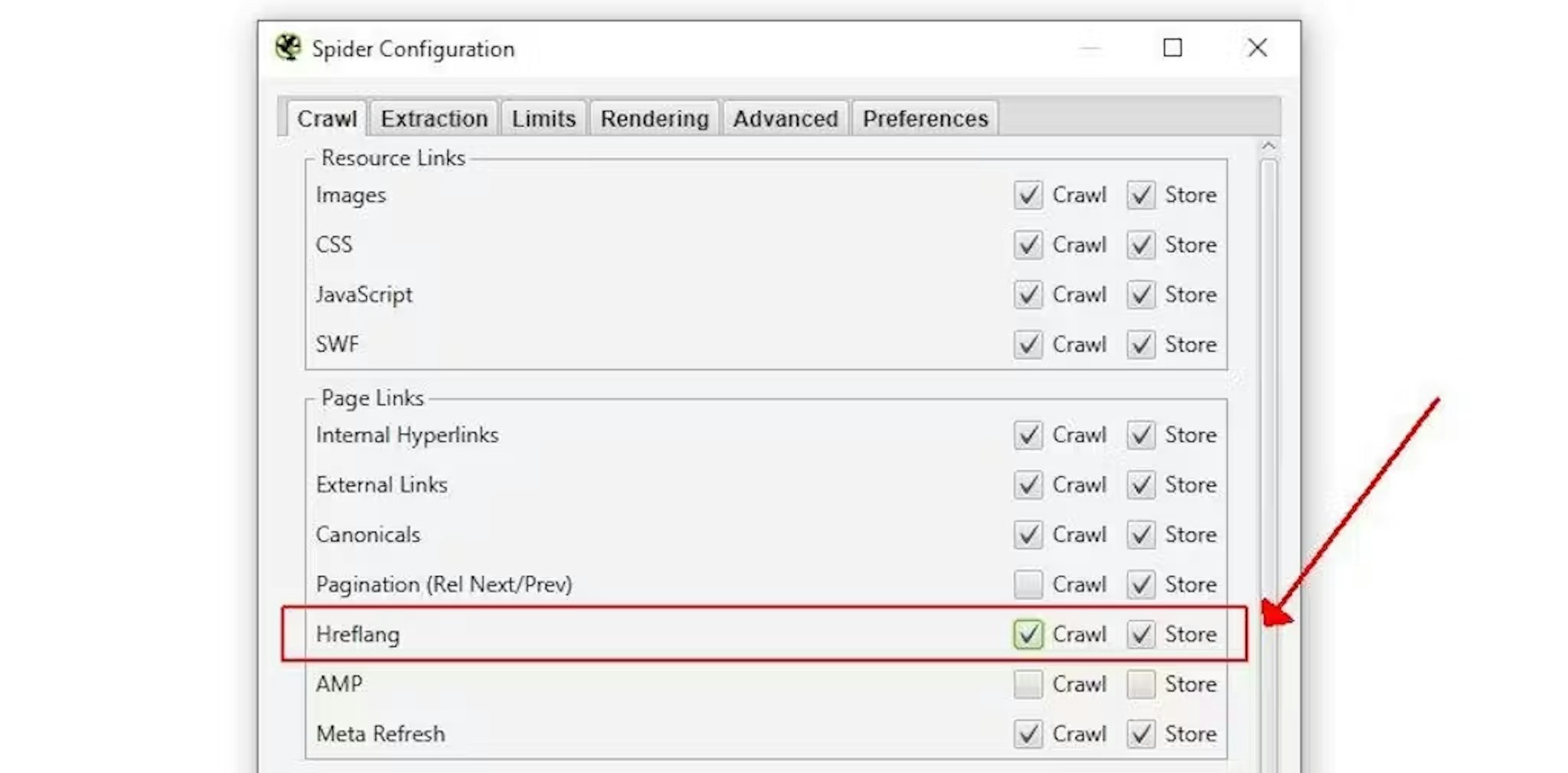

Avoid a common mistake if you're targeting multiple countries/languages by making sure you use the 'x-default hreflang' on your region/language selector page.
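Here is a small Python sketch that generates the hreflang link tags for a set of language/region versions, with the x-default pointing at the region/language selector page. The URLs and hreflang codes are hypothetical; the usual expectation is that every language version carries the same full set of tags, including a tag pointing at itself.

```python
from html import escape


def hreflang_tags(alternates, x_default_url):
    """Build hreflang link tags for every language/region version plus an x-default.

    `alternates` maps hreflang codes (e.g. "en-gb") to URLs; `x_default_url` is the
    page for users who match none of them, such as a region/language selector.
    """
    lines = [
        f'<link rel="alternate" hreflang="{code}" href="{escape(url)}">'
        for code, url in sorted(alternates.items())
    ]
    lines.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default_url)}">')
    return "\n".join(lines)


print(hreflang_tags(
    {"en-gb": "https://example.com/en-gb/", "fr-fr": "https://example.com/fr-fr/"},
    "https://example.com/",  # hypothetical region/language selector page
))
```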

Tip #100:

If you're using Google Search Console and it looks like data is missing or you are getting "not part of property" errors, be aware - Google classes http, https, www and non-www versions of your site as different properties! Therefore, you need them all added to your Google Search Console and make sure you have the right one (the one your site uses / redirects to) when making changes!

Tip #101:

Bounce rate is not a ranking factor. A high bounce rate can be good in some cases, it needs to be taken in context with searcher intent.

Tip #102:

You cannot "optimise for RankBrain" - 'RankBrain' is the name of one component of Google search that uses AI to try and understand the intent of queries Google has not seen before. RankBrain deals with approximately 15% of queries (around 3,000 a second).

Tip #103:

"Google has 200 different ranking factors, each with 50 different variables". Have you heard this? That's what we were told almost 10 years ago by Matt Cutts from Google. This is not reflective of how Google works in 2019 and someone saying this to you should raise a red flag - it's super out of date information!

Tip #104:

Make sure you're only specifying hreflang with one method (on-page, sitemap, headers). I've seen numerous problems caused with conflicting tags - so check you're only using the one method!

Tip #105:

Having an empty 'voucher code' box as the last step of your checkout can kill your conversion rate as you send people off on a wild goose chase to find one! It's always worth having a "[brand name] vouchers, offers and coupons" page - it will always rank first and if you have no offers on, you can let people know so they don't feel they are missing out!

Tip #106:

Correctly categorising your business with "Google My Business" is vital to appear for generic map-based searches.

Tip #107:

It is worth looking at the last 12 months Analytics data and seeing what pages you have that get no traffic and asking why. It's a great way to see what your content weak spots are, what needs improving, rewriting or sometimes - just deleting.

Tip #108:

Don't add keywords in your Google My Business name, it can get you penalised.

Tip #109:

The cache of your page (before JS is rendered) is based on the First Meaningful Paint. This means pages with loading screens/elements that last too long may be caching and Googlebot won't understand what is on your page.

Tip #110:

I've mentioned cannibalisation before (many pages trying to rank for the same keywords) and how this can have a drastic impact on a site's ranking. Well, thanks to Hannah Rampton, you now have a free tool that you can use to check your site for cannibalisation.

Tip #111:

The recent Google 'diversity' update that limited how many organic results one site can have, usually to 2, does not include 'special' results such as rich snippets or Google news etc. This means it's worth considering what other angles you can use to dominate SERP real estate!

Tip #112:

Not sure where to focus first? There are rarely 'quick wins' within SEO, but focusing on your content that ranks in positions 3-10 can be the fastest way to get traffic, as most of it is locked up in those top 3 positions on a regular SERP. You can pull a report like this quickly with a tool like SEMrush (aff).

Tip #113:

If you're really thinking about your audience, their intent and getting people that know the subject to write your content - you don't really need to worry about what TF-IDF is, or how it works.

Tip #114:

Having a fully secure site (https, not http) using SSL/TLS is a great idea for many reasons - it is also a ranking factor in Google! It's a lightweight signal, but Google recently said it can be used to settle 'on the fence' rankings where most other things are equal.

Tip #115:

Sometimes blindly following Google's advice is not in your best interest (in the short term, at least). Here is Lily Ray demonstrating traffic loss after implementing FAQ schema markup.

Tip #116:

When calculating the organic traffic at risk during a website migration, remember to calculate only from unbranded traffic - it is highly unlikely you'll lose traffic on brand terms during a migration. In some cases branded searches make up a significant share of traffic, so including them can badly skew your forecasts.
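One way to strip branded queries before forecasting is a simple regular expression over your query data (for example, an export of Search Console's Performance report). A sketch, assuming a dict of query to clicks and a hypothetical brand called 'Acme' with one common misspelling:

```python
import re

# Hypothetical brand pattern: add your brand name and its common misspellings.
BRAND_PATTERN = re.compile(r"\b(acme|akme)\b", re.IGNORECASE)

def unbranded_clicks(query_clicks):
    """Sum clicks for queries that do not match the brand pattern."""
    return sum(
        clicks
        for query, clicks in query_clicks.items()
        if not BRAND_PATTERN.search(query)
    )

# Illustrative numbers only.
queries = {
    "acme widgets": 500,
    "widgets norwich": 120,
    "akme login": 80,
    "blue widget repair": 60,
}
print(unbranded_clicks(queries))  # 180
```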

Tip #117:

If you don't have GA access, or you inherit a new GA account with limited historical data, you can find a site's historical URLs by replacing 'example.co.uk' with the domain you want in this link: /cdx/search/cdx?url=example.co.uk&matchType=domain&fl=original&collapse=urlkey&limit=500000
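If you do this for several domains, it's easier to build the query string than to edit it by hand. This sketch only rebuilds the path and parameters shown above; the host is left out here, just as in the tip, so prepend whichever CDX server you are querying:

```python
from urllib.parse import urlencode

def cdx_query(domain, limit=500000):
    """Build the CDX path and query string from the tip for `domain`."""
    params = {
        "url": domain,
        "matchType": "domain",   # include subdomains of the domain
        "fl": "original",        # return only the original URL field
        "collapse": "urlkey",    # one row per unique URL
        "limit": limit,
    }
    return "/cdx/search/cdx?" + urlencode(params)

print(cdx_query("example.co.uk"))
```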

Tip #118:

"Those aren't my competitors!" - You have both business competitors, who you are likely aware of - and you have search competitors - the ones that rank above you for the keywords you want. These are the people that you'll be competing with in SEO and you can use a tool like SEMrush to quickly identify which websites overlap with you on how many keywords and which ones. (aff)

Tip #119:

Name, Address, Phone (NAP) citations are important for local SEO and ranking in the map box. This means having your main business address listed as your accountant's address (common practice in the UK) can be very detrimental to your SEO!

Tip #120:

There is no such thing as a 'duplicate content penalty'. Unless your site is pure spam, you're not going to be harmed if someone copies a page of yours or if you have some copied content. It may get filtered out of a search result, but you're not going to get your site penalised.

Tip #121:

URL paths are case sensitive. This means search engines will consider mysite.com/pageone and mysite.com/PageOne to be different pages! Stick to lowercase where possible for your main, navigable and indexable URLs to make sharing and ranking easier!
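A quick way to spot case-variant duplicates is to group a crawl export by lowercased host and path. A minimal sketch, assuming you have a plain list of crawled URLs:

```python
from collections import defaultdict
from urllib.parse import urlsplit

def case_variants(urls):
    """Group URLs whose host and path differ only by letter case."""
    groups = defaultdict(set)
    for url in urls:
        parts = urlsplit(url)
        # Host names are case-insensitive; paths are not, so lowercasing
        # both reveals URLs a search engine may treat as separate pages.
        key = (parts.netloc.lower(), parts.path.lower(), parts.query)
        groups[key].add(url)
    return [sorted(group) for group in groups.values() if len(group) > 1]

crawl = [
    "https://mysite.com/pageone",
    "https://mysite.com/PageOne",
    "https://mysite.com/pagetwo",
]
print(case_variants(crawl))
```

Any group returned is a candidate for a 301 to the lowercase version and for updating internal links.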

Tip #122:

You should not be hiring generalist copywriters to write your content. Competition is fierce and your users (and Google) are looking for genuine expertise and insight - not a rehashed article made from reading 10 others that already exist. Not convinced? It's spelled out for you in Google's webmaster advice.

Tip #123:

There is a difference between an algorithm update and a penalty. If you lose a lot of traffic or rankings because of an algorithm update, this is not a penalty and there may not be anything you can "fix". Google is simply evaluating in a slightly new way, to more closely match their goals. We've done a deep dive into Google penalties on this week's Search with Candour podcast that you can listen to here.

Tip #124:

Links to your site from posts on platforms like Facebook and LinkedIn do not help your ranking in Google.

Tip #125:

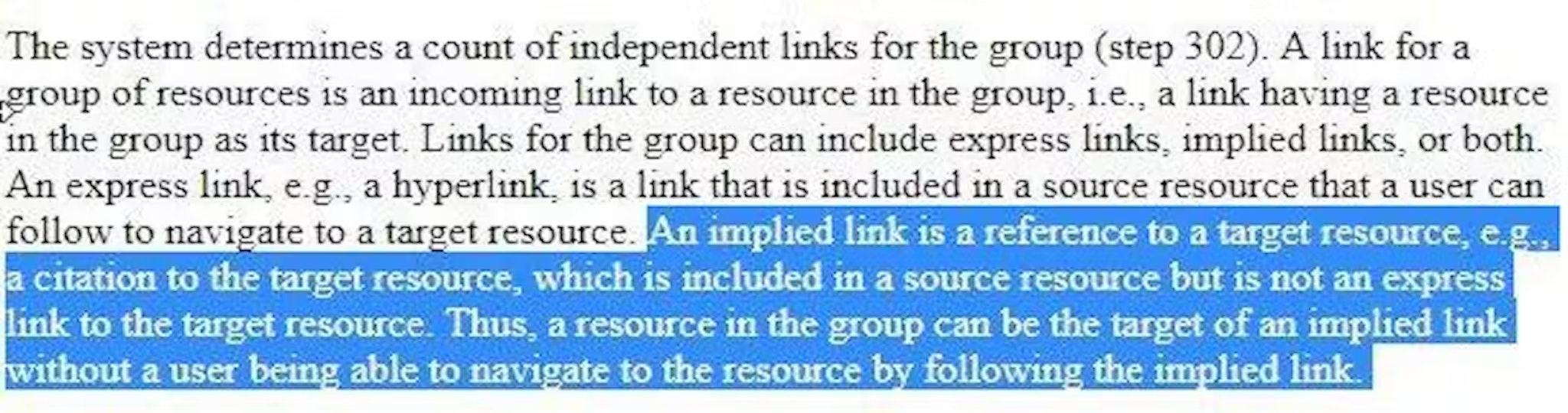

Patents are a good way to get an idea of what might be happening behind the scenes when you are observing results. It is worth keeping in mind that just because Google has a patent for something, it's not necessarily used in ranking - but it gives you a good idea of what is coming. For instance, here is a patent that builds on Google's Knowledge Graph / entity model and tries to use internet sentiment about an entity as a ranking factor. In essence, it would start promoting web results for businesses that people have had good experiences with. Full patent for the geeky.

Tip #126:

Paying for Google Ads does not improve your organic Google ranking. I had someone tell me it does yesterday. It doesn't. It really, really doesn't.

Tip #127:

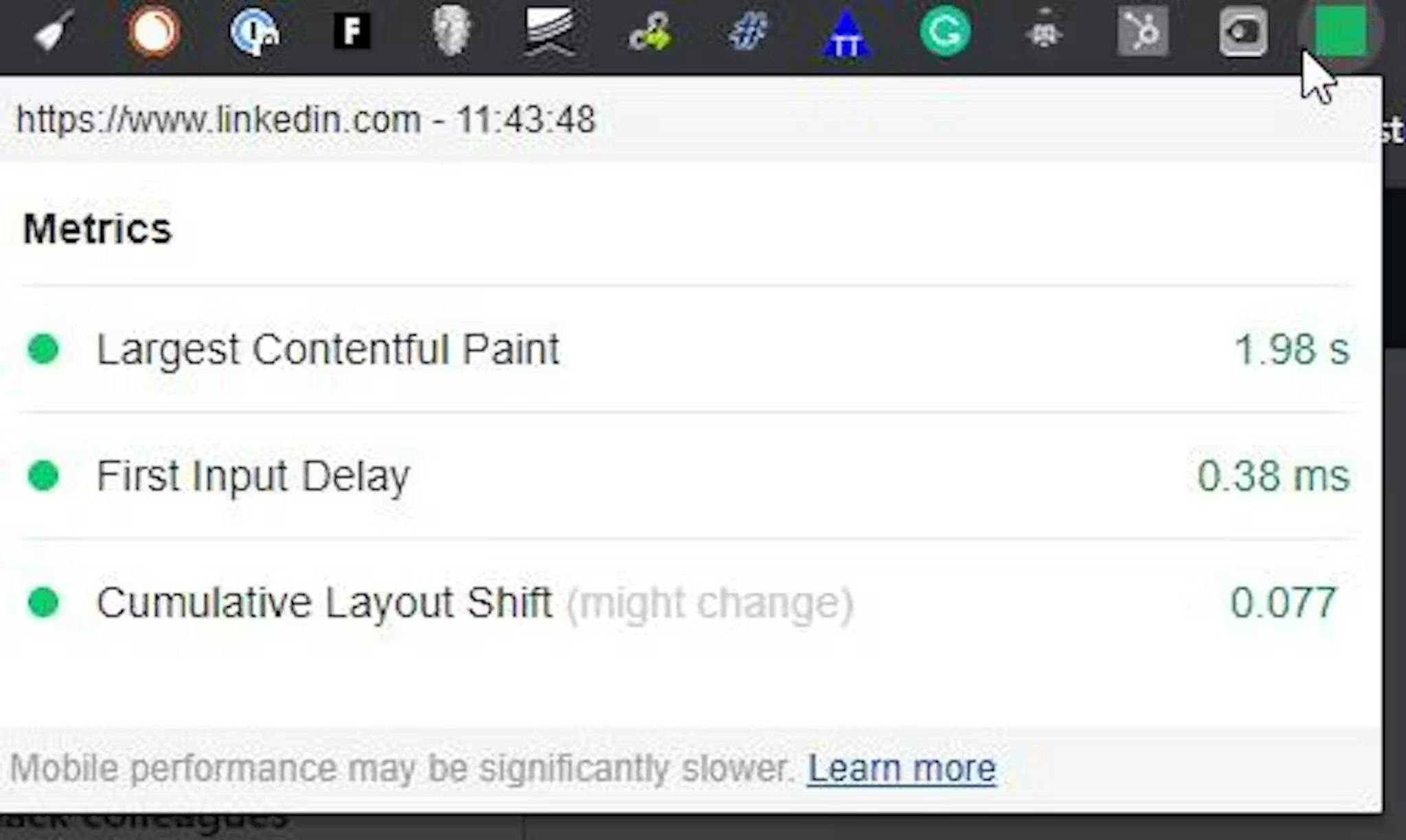

If you're using the Lighthouse Chrome extension to audit a site, make sure you run it in an incognito window, as other extensions can affect the results. To do this, turn on the 'Allow in incognito' switch on the extension's details page in Chrome.

Tip #128:

Do not claim your Google My Business short URL for the moment! Google is currently experiencing an issue that, in many cases, causes Google My Business pages to vanish as if suspended when these URLs are claimed. (I think you were also affected, Taylor Gathercole?) Technically, it's not a suspension - it is due to CID syncing - but it has the same end effect for the user!

Tip #129:

If you're building a new site, SEO considerations need to happen right at the start. How will you handle the migration? What schema are you using? Which content is evergreen and which is chronological? How are you going to avoid cannibalisation? Thinking you can "do the SEO" after the site is built is not a plan.

Tip #130:

Quick and dirty keyword cannibalisation check: use this search in Google: site:yoursite.com intitle:"key phrase to rank for". This will only return results from your website that have the key phrase you want to rank for in the title. If this returns multiple pages, you may be confusing search engines as to which page you want to rank for this term. Consider consolidating, redirecting or canonicalising as appropriate.

Tip #131:

Technical one, so hold your breath! If you see a Google SERP experiment (new type of result), it is possible to share these with other people by finding and manually setting the NID cookie. Here is a tutorial on how to do this.

Tip #132:

Straight from Google, "Pages blocked by robots.txt cannot be crawled by Googlebot. However, if a disallowed page has links pointing to it, Google can determine that it is worth being indexed despite not being able to crawl the page."

Tip #133:

If you're running A/B tests, do not use the noindex tag on your variant pages. As I put in unsolicited #SEO tip number 29, use a rel=canonical tag. This also means you'll pick up the benefit of any links your variant pages get. This is 100% what Google advises too.

Tip #134:

Almost 25% of all SERPs have a featured snippet; if you're not tracking them, what are you doing? You can use tools such as SEMrush to keep tabs on the types of SERP features that are appearing in your niche.

Tip #135:

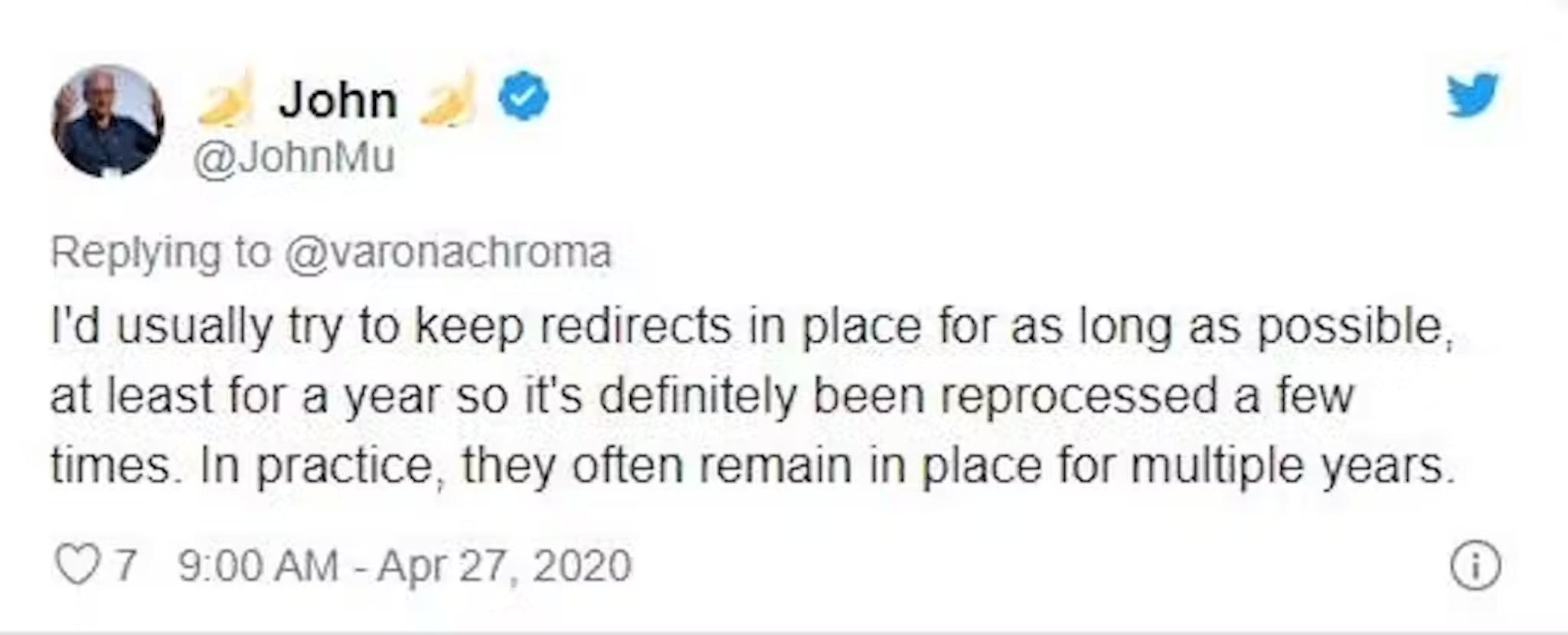

Moving pages or migrating domains? The best way to handle redirects in 2019 is on the 'Edge'. This means setting the redirects through a service like Cloudflare.

Tip #138:

It is possible to update page contents, such as the page title, with Javascript. You will have to accept that, initially, Google will index the non-Javascript page title and it may take a few weeks before it processes and indexes the Javascript version. This is worth keeping in mind if you have any intention of getting a site indexed and ranking quickly.

Tip #139:

Less is more when it comes to Local SEO and Google My Business categories. Fewer, more specific business categorisations will get you better results than trying to cover everything.

Tip #140:

30% increase in organic traffic after one new piece of 'pillar' content attracted links. The internet is not short on quantity of content; it's short on quality. My first ever unsolicited #SEO tip was about how the churn of '500 word blog posts' is normally a waste of time. For a great first win with a new SEO client, we got them to invest far more in one piece of content, rather than spread the budget out. The result: links, coverage and, after a year of churning out content and seeing no real results, a 30% uplift in organic traffic. Baby steps that will lead to sustained growth now we have buy-in! If you're on a tight content budget - do less, but do it better!

Tip #141:

Remember to noindex your dev and staging environments so they aren't exposed in search results and don't mess up your live site's rankings. (Bonus tip: remember to make the site indexable when it goes live, otherwise it's just embarrassing!)
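It's worth checking this automatically rather than trusting it was done. A minimal sketch, using only Python's standard library, of a check for `noindex` in either an `X-Robots-Tag` response header or a robots meta tag; the function name is hypothetical and how you fetch the headers and HTML is up to you:

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect the content of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            self.directives.append((attrs.get("content") or "").lower())

def is_noindexed(headers, html):
    """True if an X-Robots-Tag header or robots meta tag contains noindex."""
    header_values = " ".join(
        value.lower() for name, value in headers.items()
        if name.lower() == "x-robots-tag"
    )
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in header_values or any(
        "noindex" in d for d in parser.directives
    )

print(is_noindexed({}, '<head><meta name="robots" content="noindex, nofollow"></head>'))
```

Run it against staging (expect `True`) and live (expect `False`) after every deployment.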

Tip #142:

The hreflang tag can be used cross-domain! So if you've got your .co.uk, your .com.au or even another language site, you can help Google understand the relationship and help them rank better! 🌍
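Each version of the page should list every alternate, including itself, and the annotations need to be reciprocal across domains. A sketch that generates the same `<link>` set for every version, using hypothetical example domains:

```python
def hreflang_tags(alternates, x_default=None):
    """Build the full set of hreflang <link> tags for one page.

    `alternates` maps a language-region code to that version's URL.
    Every version should output this same set, including a link to itself.
    """
    tags = [
        f'<link rel="alternate" hreflang="{code}" href="{url}" />'
        for code, url in sorted(alternates.items())
    ]
    if x_default:
        tags.append(
            f'<link rel="alternate" hreflang="x-default" href="{x_default}" />'
        )
    return "\n".join(tags)

# Hypothetical domains for illustration.
versions = {
    "en-gb": "https://www.example.co.uk/widgets/",
    "en-au": "https://www.example.com.au/widgets/",
    "en-us": "https://www.example.com/widgets/",
}
print(hreflang_tags(versions, x_default="https://www.example.com/widgets/"))
```

Generating the tags from one shared mapping is a simple way to keep them reciprocal, because every domain prints the same list.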

Tip #143:

Google uses contained programs in a framework called 'Twiddlers', which re-rank results. Twiddlers focus on an individual metric, work in isolation from each other and try to improve SERP quality. This means a factor might not be in the 'core algorithm', but it might be affected by a conditionally run Twiddler that alters the result. For example, there is a Twiddler that runs on YouTube queries that will improve the ranking of a matching video if that channel has many videos that are also a close match to the search (implying the channel is specialist).

Tip #144:

If you're planning on doing a site migration, don't fall for setting an arbitrary deadline or timeframe. Take a look at your Analytics data and plan it so you can launch in your quietest period. This will help minimise any traffic losses you are likely to take.
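To find that quietest period, you can slide a fixed-length window over weekly sessions and pick the lowest total. A sketch, assuming a list of weekly session counts exported from Analytics (ideally checked against more than one year):

```python
def quietest_window(weekly_sessions, weeks=2):
    """Return (start_index, total) for the run of `weeks` consecutive
    weeks with the lowest combined sessions."""
    if len(weekly_sessions) < weeks:
        raise ValueError("not enough data for the requested window")
    best_start = 0
    best_total = total = sum(weekly_sessions[:weeks])
    for start in range(1, len(weekly_sessions) - weeks + 1):
        # Slide the window one week: add the new week, drop the oldest.
        total += weekly_sessions[start + weeks - 1] - weekly_sessions[start - 1]
        if total < best_total:
            best_start, best_total = start, total
    return best_start, best_total

# Illustrative weekly sessions.
sessions = [5200, 4900, 5100, 3800, 3600, 4700, 6100, 6400]
print(quietest_window(sessions))  # (3, 7400)
```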

Tip #145:

Getting a domain with a backlink history can really help give you a kick start. I hear Rob Kerry is launching a tool soon that will make this a lot easier.

Tip #146:

Server-side rendering (SSR) is important, you should not be leaving it to Google to try and process critical Javascript. This is a great example from Pedro Dias - "This is not a penalty. This is a website that migrated to a client-side rendered JS framework. Even with JS rendering on, most tools fail to crawl past 100 URLs."

Tip #147:

It's really great to learn about information retrieval and how search works, but TF-IDF and 'LSI keywords' are not strategies. You can't "use TF-IDF to rank better". If you're focusing on these things, you're missing the bigger picture and there will be better areas to put your time into. That, and people from Google might have a laugh at your expense :-)

Tip #148:

If you know you have backlinks that break Google's Webmaster Guidelines (or you have received a manual penalty) you can submit a disavow file, listing the links and domains you would like to tell Google to ignore. We covered this previously in a podcast episode.
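The disavow file is a plain text file with one entry per line: a bare URL to disavow a single page, `domain:` followed by a domain to disavow a whole site, and `#` for comments. A sketch that assembles one, with hypothetical example domains:

```python
def build_disavow(domains, urls, note=None):
    """Return disavow file text: `domain:` lines for whole domains,
    bare URLs for individual pages, and an optional `#` comment line."""
    lines = []
    if note:
        lines.append(f"# {note}")
    lines += [f"domain:{d}" for d in sorted(set(domains))]
    lines += sorted(set(urls))
    return "\n".join(lines) + "\n"

# Hypothetical domains and URL for illustration.
text = build_disavow(
    domains=["spammy-links.example", "link-farm.example"],
    urls=["https://blog.example/paid-post.html"],
    note="Paid links found in link audit",
)
print(text)
```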

Tip #149:

Links submitted to a disavow file are, according to Google, only disavowed while listed. If you make a mistake, you can remove them from the disavow file and resubmit it.

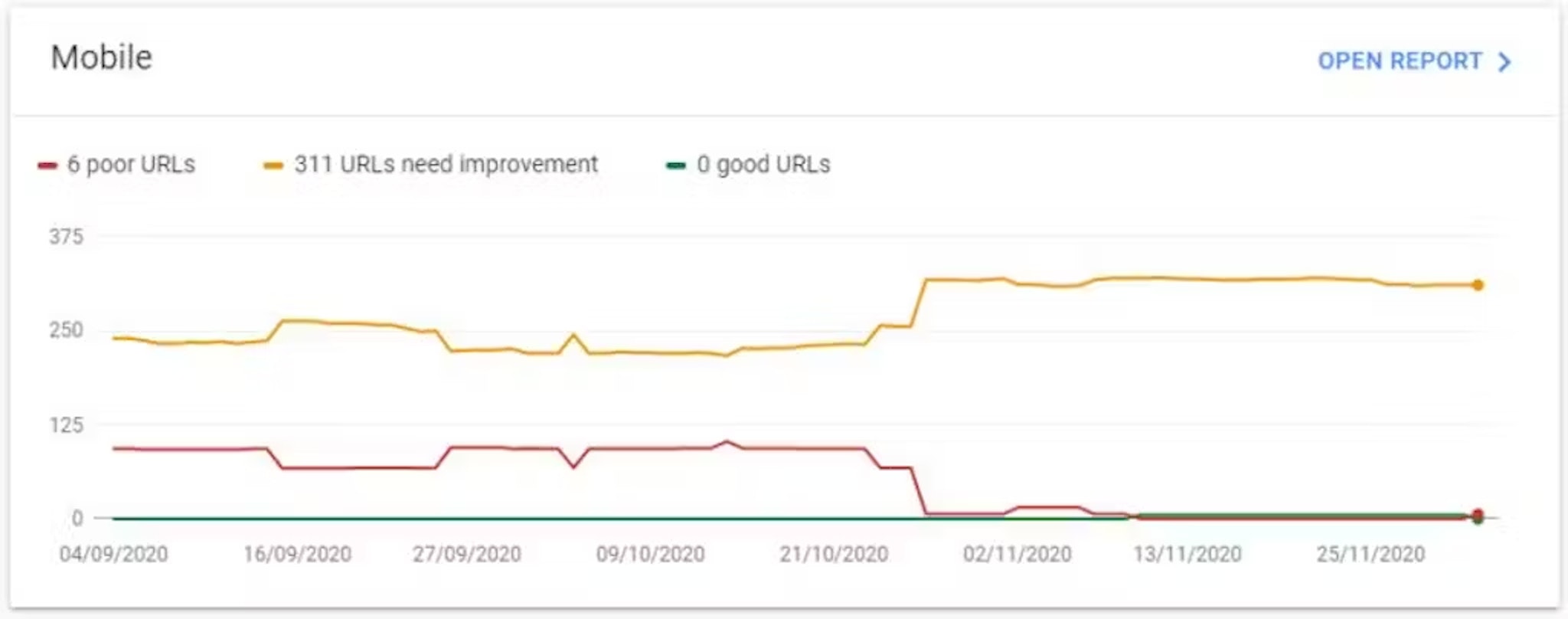

Tip #150:

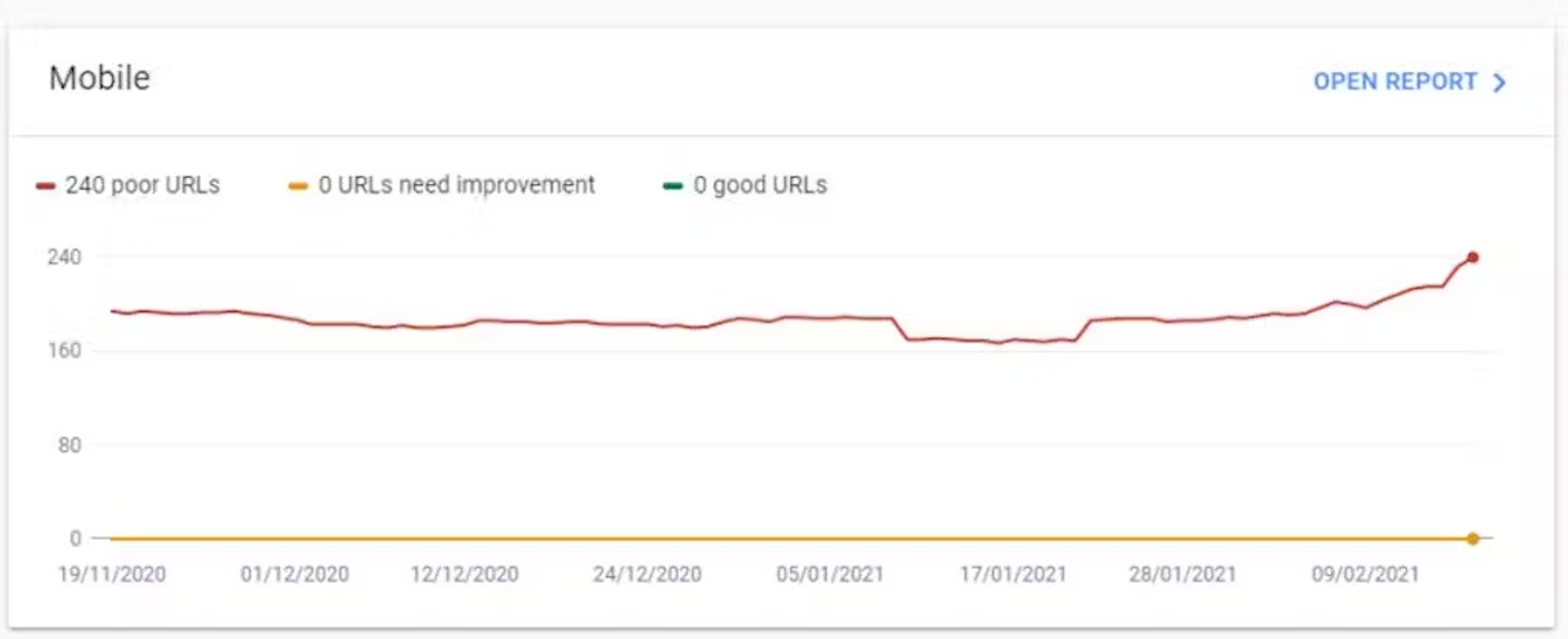

It's common knowledge that Google now looks at UX components (site speed, mobile friendliness, no popups etc) for ranking. My tip here is: do not approach UX by starting with these isolated, individual metrics, as you'll miss not only the bigger picture - but the bigger benefit. I got the chance to sit down last week with UX expert Tom Haczewski from The User Story to discuss how to approach user experience in an SEO context for episode 26 of the Search with Candour podcast.

Tip #151:

Google has today announced support for two additional attributes to identify the types of link in their link graph: "sponsored" for paid links or adverts and "ugc" for user-generated content links.
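When auditing outbound links, note that `rel` can hold several space-separated values, such as `rel="ugc nofollow"`. A minimal sketch, using Python's standard-library HTML parser, that records each link with its set of rel values:

```python
from html.parser import HTMLParser

class LinkRelParser(HTMLParser):
    """Record each <a href> together with its rel values."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if href:
            # rel may combine values, e.g. "ugc nofollow".
            rel = set((attrs.get("rel") or "").lower().split())
            self.links.append((href, rel))

html = """
<a href="https://partner.example/">Partner</a>
<a href="https://advertiser.example/" rel="sponsored">Advert</a>
<a href="https://forum-user.example/" rel="ugc nofollow">Comment link</a>
"""
parser = LinkRelParser()
parser.feed(html)
for href, rel in parser.links:
    print(href, sorted(rel))
```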

Tip #152:

For a couple of years, I've noticed that some nofollow links seem to provide a consistent ranking improvement (especially in local packs). Google's recent announcement that it may use nofollow as a ranking hint is a much surer sign this is true. In short: don't avoid nofollow links.

Tip #153:

You can provide Google with 'directives' and 'hints'. A directive is something that Google will always obey, such as a 'noindex' tag. A hint is something Google will take under consideration, but may choose to ignore, such as a 'canonical' tag. Google's documentation will specify which tags it takes as hints and which as directives. Here is an experiment that shows how Google can ignore a 'hint' if it believes you are being deceptive.

Tip #154:

The Google Quality Rater Guidelines is a 167 page document that specifies how the 10,000 Google manual reviewers rate websites. It is worth noting that the actions of manual reviewers do not directly impact rankings - they are used to test how Google's algorithm is performing. It's a really worthwhile read if you work in SEO, as it lets you know what Google is aiming to achieve.

Tip #155:

A homepage is not 'special': in isolation, it has no more power to rank well than any other page on your site. Homepages just tend to pick up the majority of links, so they can rank easily - that's all!

Tip #156:

If you're a small business, it is likely that an SEO audit will have almost no measurable value for you unless:
a) You have the resources to deploy the recommended changes
b) You are going to invest in a sustained SEO effort
Generally, a technical audit will only have an immediate impact if the site is deploying its ranking potential inefficiently, i.e. the site already has a decent backlink profile. For many small businesses with almost no links, making technical changes will have limited impact in isolation. Think of it as tuning an engine that has no fuel.

Tip #157:

Intent trumps length with content. Content length is not a ranking factor. Yes, there are some correlations, largely because longer content normally has a lot more effort put into it, earns more links and gives you a bigger net to catch longtail searches with - but please, don't make it longer for the sake of it!

Tip #158:

This may not be a popular one, and it's just from experience (though it got a few dozen answers from SEO experts on my Twitter): if a company is offering a Gold, Silver, Bronze package-type approach to SEO, it's likely going to be hot trash. There are some great comments in the Twitter thread with others' thoughts on this - a few detractors, but generally strong agreement. It's definitely become a red flag to me over the years.

Tip #159:

Because so many people have not heard of it, I want to give a shout out to the AnswerThePublic[dot]com research tool. Whack in a subject and it will show you the common questions that are being searched for in Google about that topic. It's a brilliant way to start building topic lists for your content plan.

Tip #160:

If you haven't already, I do believe it is time to double down on making the best of your Google My Business reviews. Google has now killed the ability to show in-search stars for reviews of your own company that you control. The only options left are Google My Business and third-party websites. If you want to learn more, I spoke about this briefly in episode 28 of the Search with Candour podcast.

Tip #161:

Google can sometimes ignore your meta description and use any on-page content it finds that it believes is more relevant for the user. This is usually a good thing - dynamic meta descriptions can in some cases give better CTR.

Tip #162:

Google has launched some new options that allow you to control how your website snippets are displayed in search. The changes go live in October and full details are here.

Tip #163:

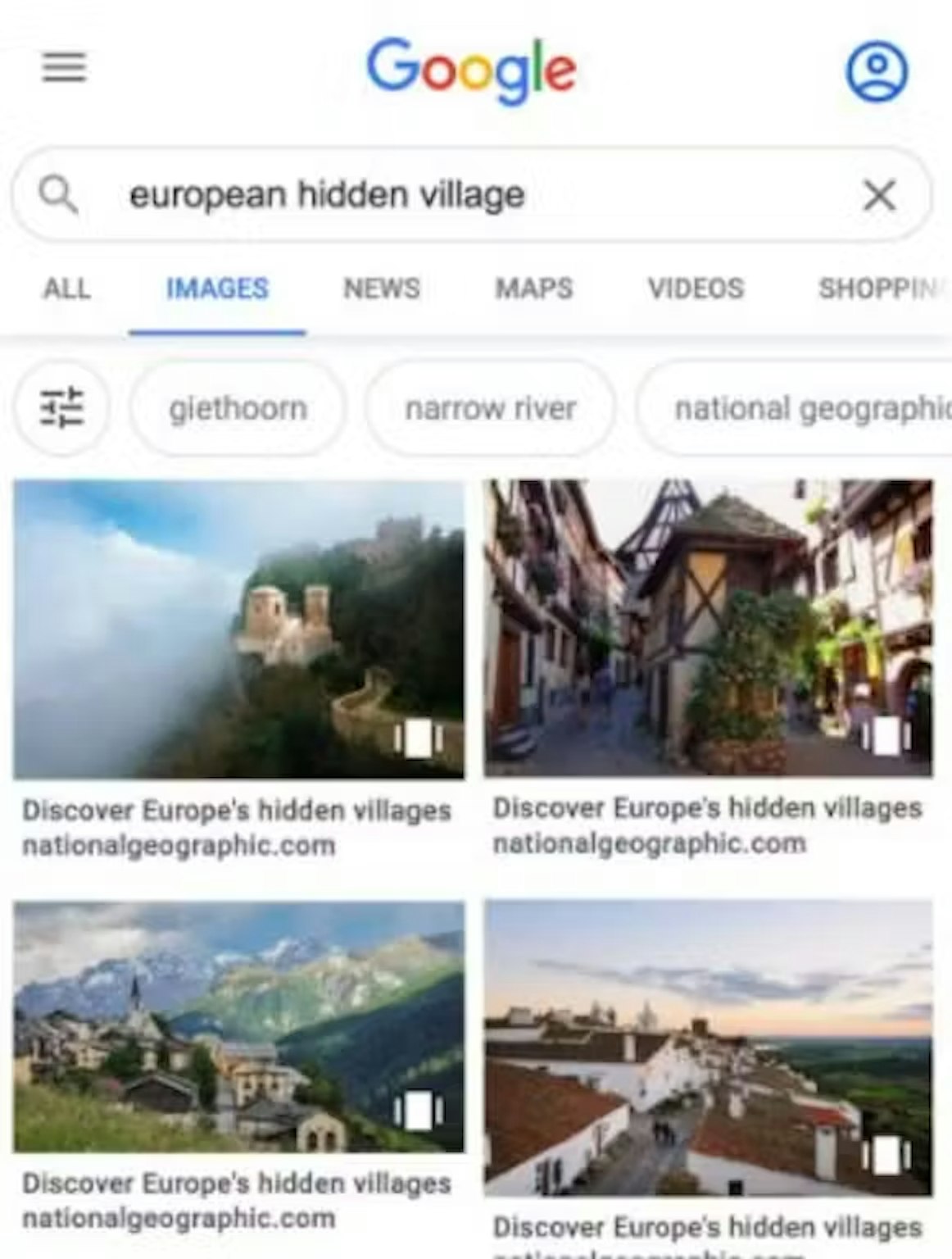

You can see how much search traffic you get from Google Images by going into Google Search Console, selecting Performance -> Search Results and changing the 'Search Type' filter to 'Images'. There's a huge amount of traffic potential locked up in Google images!

Tip #164:

The 'user end' of indexing, caching, ranking, site:, cache: and Google Search Console are all separate, independent systems. For instance:
- It is possible for a URL to rank for a keyword even though a site: search says it does not exist
- It is possible for a URL to be present in cache: while Google Search Console says it is not indexed
- It is possible for a URL to rank for a keyword but not for a search for its own URL
This means: don't jump to worrying if you see something that looks off. Google's infrastructure is huge and its different systems cannot always be in sync.

Tip #165:

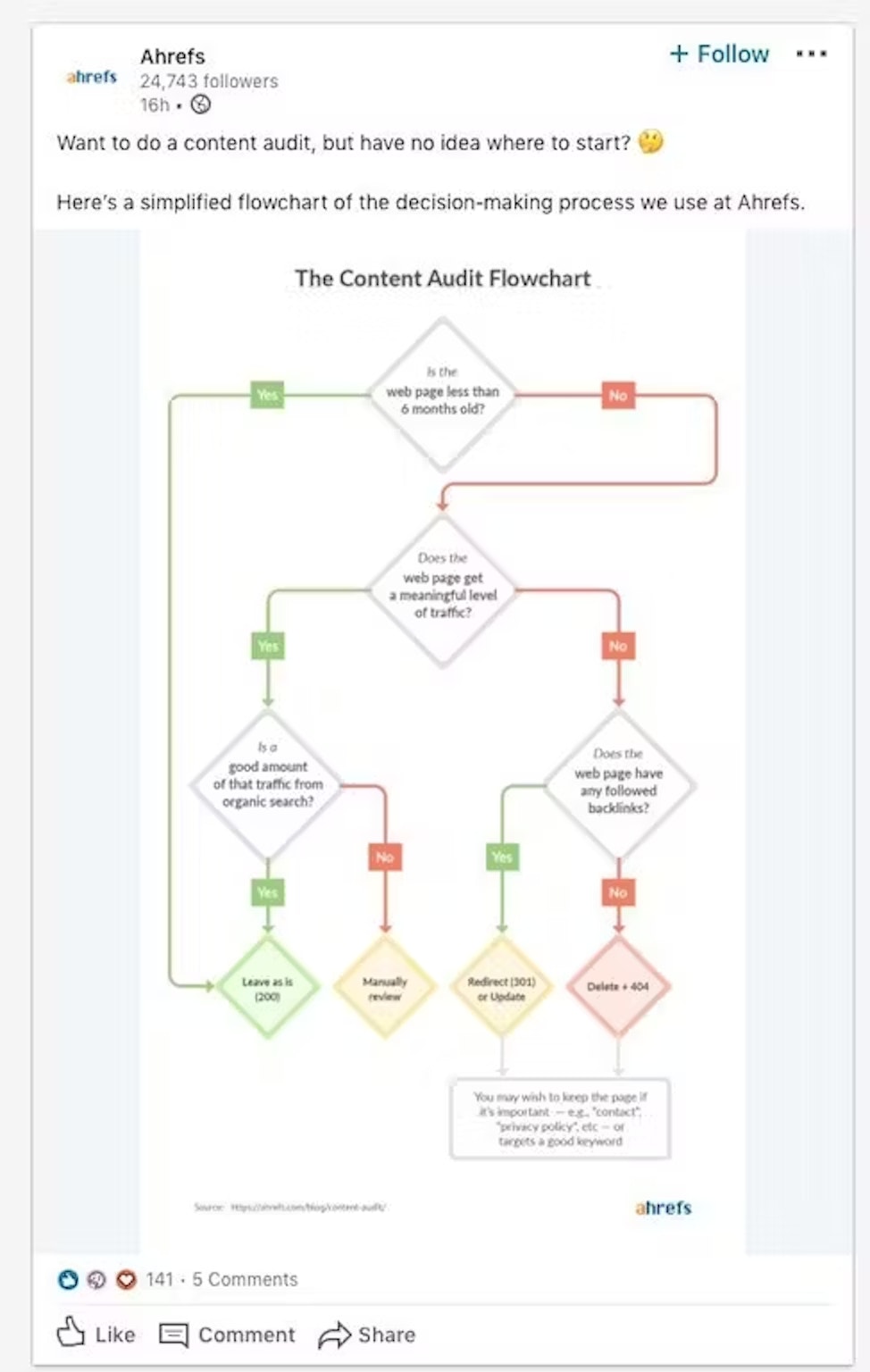

'Content pruning' seems to be a new fad in SEO: the logic of improving 'overall site quality' by deleting pages that aren't being visited. This is a gross over-simplification and usually isn't the right approach. Look at your content in the context of competitors, intent, searches, time of year etc. There are lots of alternative and sometimes more productive routes, such as:
- Merging a page with another similar page where the topics are so closely related it makes sense
- Rewriting/improving the page
- Checking you are targeting the right words, matching user intent for that content
- Showing the answer in another way, e.g. image or video - whatever is best for the user
All things I've covered in previous tips!

Tip #166:

Google has confirmed internal rel="nofollow" links will always be treated as nofollow, in reference to their recent update where they said rel="nofollow" may be treated as a hint. This means they can still be useful in some situations, such as handling internal faceted navigation.

Tip #167:

This tip is directly from Google for helping you choose an SEO or an SEO agency: "If they guarantee you that their changes will give you first place in search results, find someone else." Monitoring individual keywords isn't really the best measure of success, nobody can account for future algorithm changes or what your competitors will do if you start to climb. Like with many things in business and life, if it sounds too good to be true, it probably is.

Tip #168:

Whether or not you provide Google with 'featured snippet' (position 0) results does not impact your 'normal' 1-10 rankings.

Tip #169:

Google is not king everywhere! If you're targeting Russian speaking countries, you will need to rank in Yandex, which can be quite different from ranking in Google!

Tip #170:

If you have a site that is easily accessible to search engines, well thought out in terms of navigation and internal links, there really is no need for an internal HTML sitemap. I discussed this in more detail in episode 30 of the Search with Candour podcast.

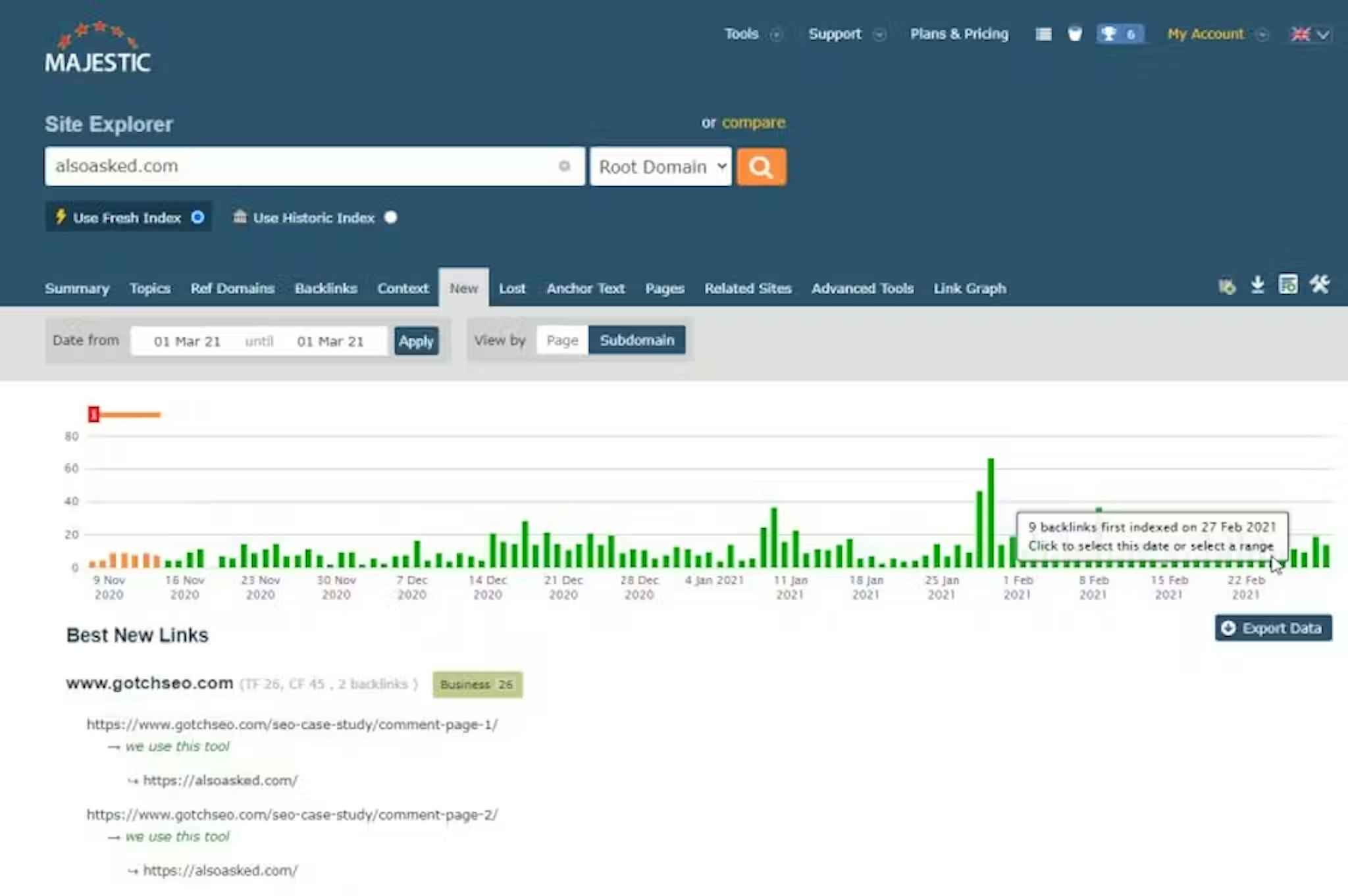

Tip #171:

When doing backlink analysis, it's good to use a selection of tools to get the best picture of your profile. My favourite is Majestic, but I often combine it with data from Google Search Console, Moz, SEMrush and Ahrefs.

Tip #172:

Google ignores anything after a hash (#) in your URLs. This means you should not use # in URLs to load new content (jumping to anchor points is fine).
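You can see the effect with Python's `urllib.parse.urldefrag`, which splits off the fragment. URLs that differ only after the `#` collapse to a single address, while query-string variants stay distinct:

```python
from urllib.parse import urldefrag

urls = [
    "https://example.com/products#red",
    "https://example.com/products#blue",
    "https://example.com/products?colour=red",
]
# Drop the fragment from each URL, as search engines effectively do.
distinct = {urldefrag(u).url for u in urls}
print(sorted(distinct))
```

If content behind `#red` and `#blue` is different, only one URL exists for it to be indexed under.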

Tip #173:

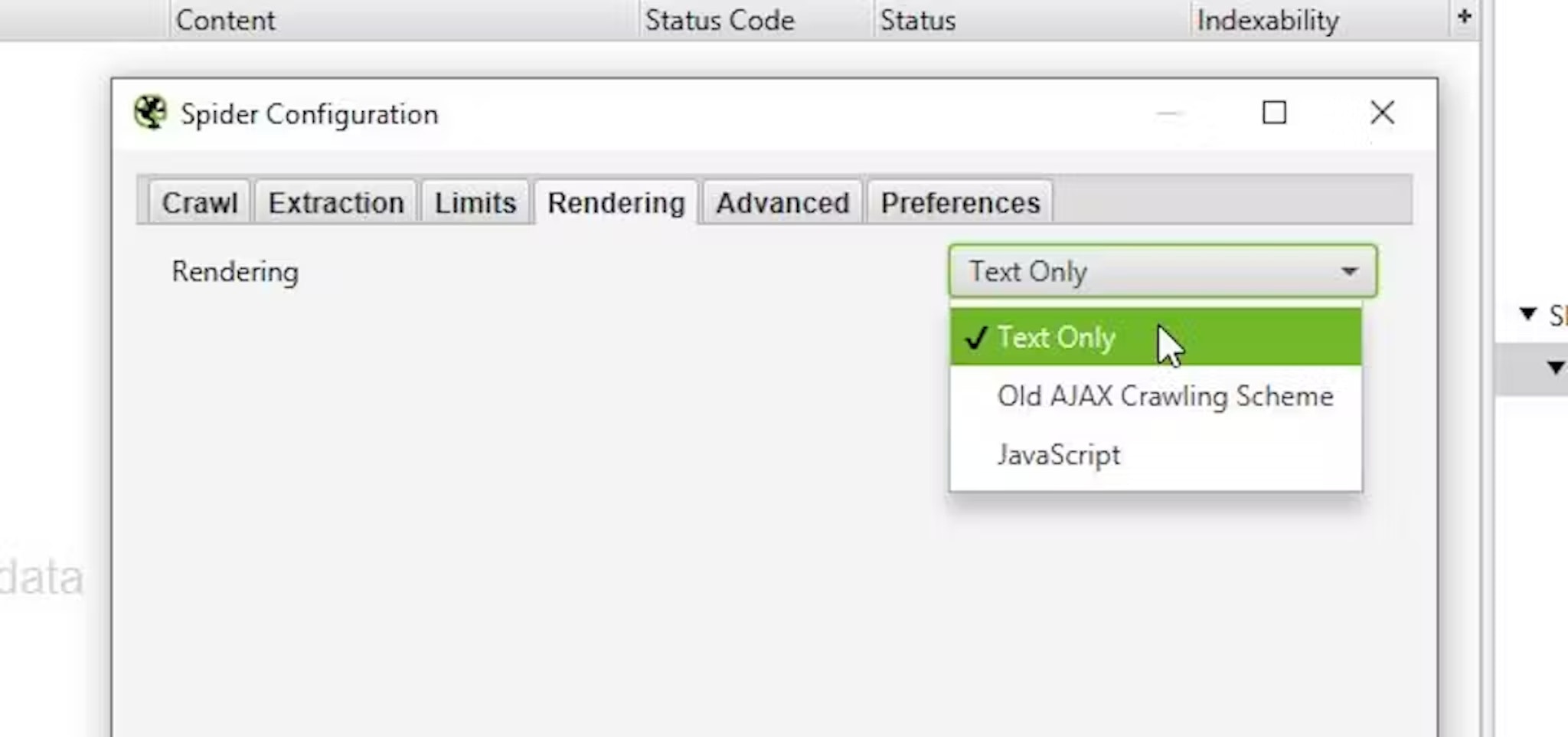

If you're auditing a site, you need to make sure you crawl it both with and without Javascript and with different user-agents and compare differences. I've seen too many site audits done assuming there was no user-agent sniffing or that there was a JS fallback in place!

Tip #174:

There is a danger of applying thinking from Google's Quality Rater Guidelines literally to 'how the algorithm works'. Example: the QRG asks the rater to check for Wikipedia articles on an entity, as it is "a good source of information about companies and organisations." This does not mean this is how the algorithm works. It means that, for humans, checking Wikipedia is a reasonable litmus test for authority. It would not be reasonable to give humans a printout of the finely weighted, thousands of variables a machine would look at. Humans can do things machines can't in many ways, so it's a check and balance for an algorithm.

Tip #175:

Make sure redirects go to the canonical version of a page. A common mistake is a redirect (a directive) pointing to a page which then has a canonical tag (a hint) telling Google that another page is the canonical version.

Tip #176:

You should generally use the noindex tag on your internal search pages. It's a poor UX for a user to go from a search engine's results page to another page of search results.

Tip #177:

Simple one, but came in very handy for someone on my SEO course today! Try using the site: operator in Google to see what pages you have indexed. You do this just by doing a search for site:yoursite.com. Sometimes you'll be surprised by what is (or not!) indexed!

Tip #178:

A blog is normally a terrible place to host 'evergreen' content such as how to guides. If your blog/news section is chronological, the content will slowly 'sink' down your site's hierarchy. It becomes more clicks away, harder to find for users and will become seen as a less important page for search engines.

Tip #179:

If you're doing outreach, trying to get links from newspapers, and don't get a response, you don't have to give up there. Most newspapers will have multiple journalists covering similar topics, so it's always worth trying another contact - it certainly beats endless follow-ups to one person, which just annoy them!

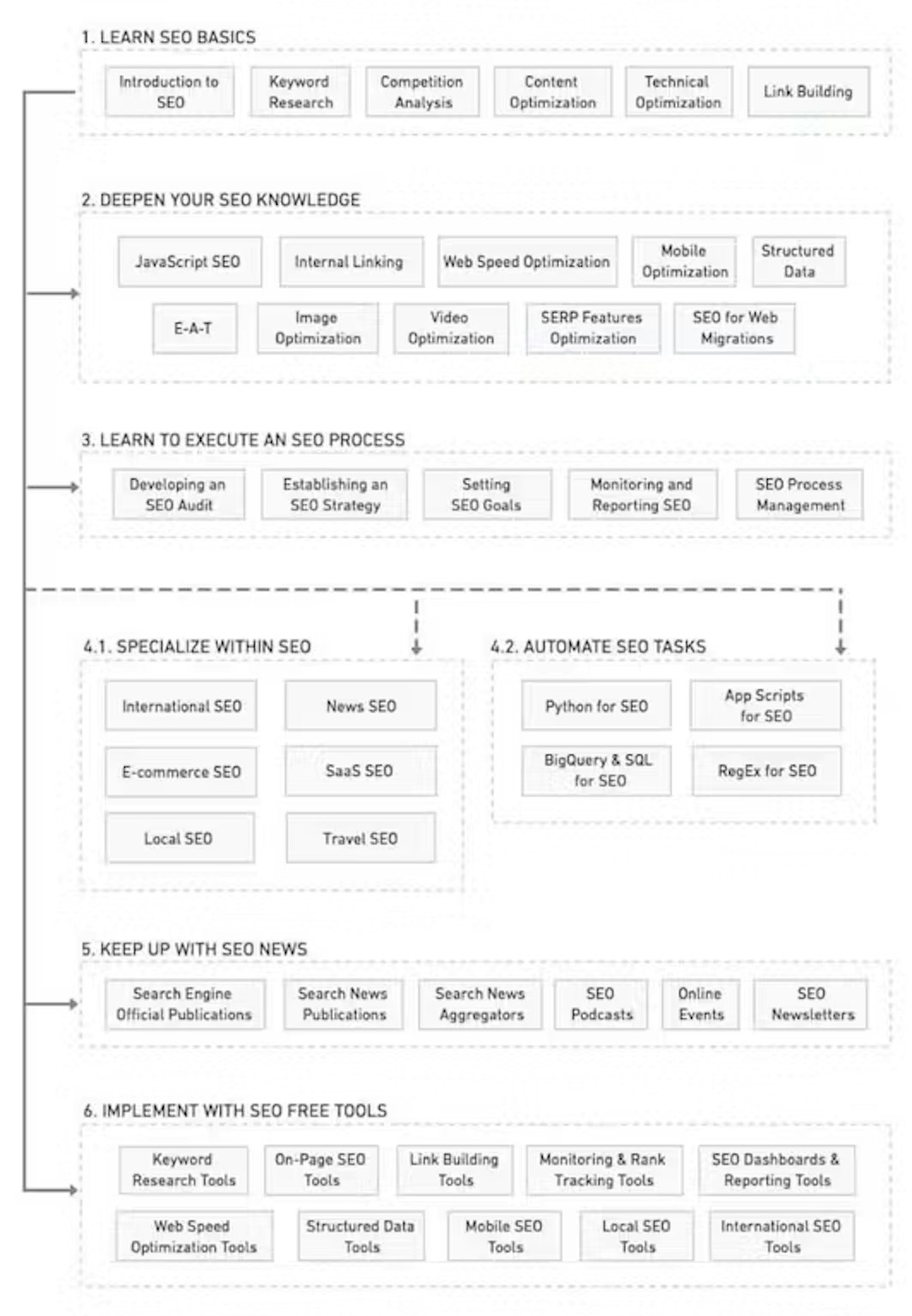

Tip #180:

If you're starting out in SEO, I would invest more time learning about how search engines work and what they are trying to achieve, rather than specific "SEO tactics". Learning the foundations will give you a solid framework to make much better strategic decisions. This means reading less "10 ways to.." and more on subjects like Information Retrieval (IR).

Tip #181:

Did a competitor copy your content and Google screwed up and is ranking it and not yours? There is a simple DMCA form you can complete that notifies Google. I've had very good/fast success - it will remove the offender's result and get you back to where you deserve. We discussed this in episode 30 of the Search With Candour podcast.

Tip #182:

It's a long one, so buckle in! With product sites, it's almost always beneficial to users and search engines to have a "view all" page. There is almost no reason why you wouldn't. The most common excuse is "we have too many products". That's not a good reason. Firstly, there are easy tech solutions to this, such as lazy loading. Secondly, you're just side-stepping two other, bigger issues:
1) The combined latency of clicking through multiple pages is greater than that of a single page, so you're actually providing a slower overall experience.
2) Do your users actually want/need to look through 7,349 products? Is that useful? Is there not some initial filtering you can do? Do many people really click through to page 56 of your paginated results?
There's lots of research showing users prefer "view all", and it's better for our robot friends.

Tip #183:

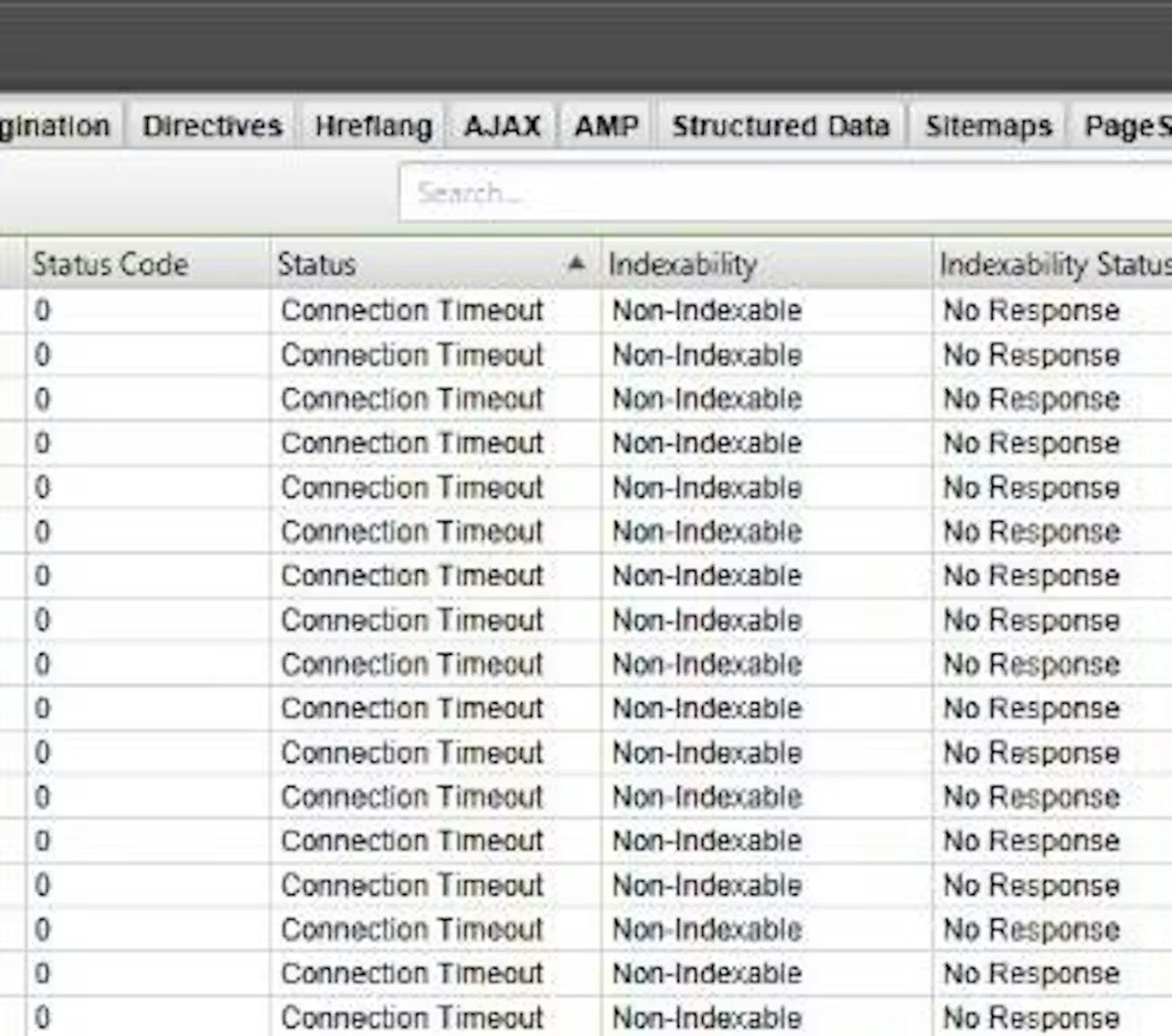

If you're crawling your site and getting errors like HTTP 504 (timeout), then your site is probably also timing out for Google - that's bad. In 2019 there is no reason your site should buckle under the (very low) load of a crawl, and no reason most sites shouldn't be run through a service like Cloudflare.

Tip #184:

There's a Google algorithm update called 'BERT' that you're about to be flooded with 'ultimate guides' to. Let me save you some time: with this kind of update, there is nothing you can do to "optimise for it", in the same way you cannot "optimise for RankBrain". Write better content for your users, focus on satisfying intent and maximising user experience. There is no 'SEO copywriting', there is just good content, bad content and the stuff in-between.

Tip #185:

Make sure your broken pages actually return a 404: Not Found status code. I've seen many sites display "404: Not Found" on the page yet return a "200 OK" status. This is known as a 'soft 404'. "Returning a success code, rather than 404/410 (not found), is a bad practice. A success code tells search engines that there’s a real page at that URL. As a result, the page may be listed in search results, and search engines will continue trying to crawl that non-existent URL instead of spending time crawling your real pages."
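When reviewing crawl data, a rough heuristic is to flag pages that return a 2xx status but contain "not found" wording. A sketch; the phrase list is a hypothetical starting point, and a bare "404" can false-positive on things like product codes, so check flagged URLs by hand:

```python
# Hypothetical phrases; adjust to match your site's error template.
NOT_FOUND_PHRASES = ("page not found", "404", "no longer available")

def looks_like_soft_404(status_code, body):
    """Flag pages that say 'not found' but return a success status."""
    text = body.lower()
    return 200 <= status_code < 300 and any(p in text for p in NOT_FOUND_PHRASES)

print(looks_like_soft_404(200, "<h1>404: Page Not Found</h1>"))  # True
print(looks_like_soft_404(404, "<h1>404: Page Not Found</h1>"))  # False
```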

Tip #186:

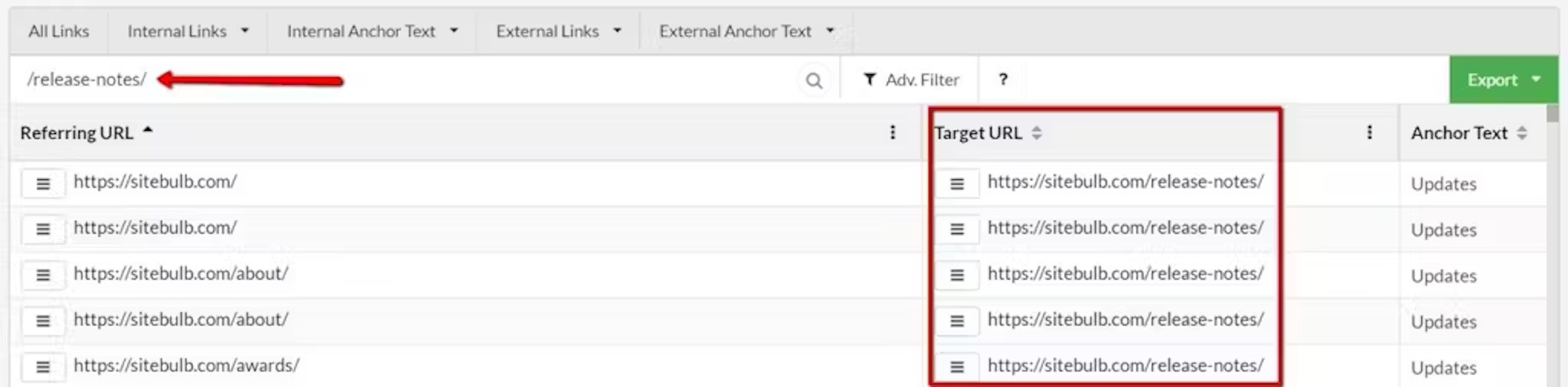

Redirect chains can cause issues. There is no reason why internal links on your site should be redirecting. If an internal link redirects, simply update the link to point to the end location.
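If you keep a redirect map (source to target), you can resolve every chain to its final destination and use that to update internal links. A sketch, assuming the map is a plain dict of paths:

```python
def resolve_redirects(redirects, max_hops=10):
    """Map each source URL to its final destination, following chains.

    `redirects` maps source -> target. Raises ValueError on loops or
    chains longer than `max_hops`.
    """
    final = {}
    for source in redirects:
        seen = [source]
        target = redirects[source]
        while target in redirects:
            if target in seen or len(seen) > max_hops:
                raise ValueError(f"redirect loop or long chain from {source}")
            seen.append(target)
            target = redirects[target]
        final[source] = target
    return final

redirect_map = {
    "/old-page": "/newer-page",
    "/newer-page": "/newest-page",
    "/legacy": "/newest-page",
}
print(resolve_redirects(redirect_map))
```

Every internal link to a key in this map can then point straight at its resolved value.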

Tip #187:

It's generally accepted sub-folders perform better than sub-domains, sharing in the main domain's equity. If you're told "you need" to put part of your site on a subdomain, it is possible to display it to the user as a subfolder using a reverse-proxy. Easy way around a lot of technical issues and clears up SEO ones at the same time!

Tip #188:

Google's use of BERT in its algorithm is currently only affecting US results, so if you have seen any recent changes, it is likely not that. An interesting side note: Bing has been using BERT (Transformers) for ages now!

Tip #189:

All other things being equal, two links from two different domains are worth more than two links from the same domain.

Tip #190:

Really want to call yourself an 'SEO copywriter'? Did you know it's possible to query Google's Natural Language API with your (and your competitors') content to see how Google is understanding entities and topics? It can be a great way to compare and highlight missed opportunities. Here is a great guide by JR Oakes.

Tip #191:

Using anchor jump points within your content gives users a great way to quickly find the information they are after - and you can also get additional links within the SERP, which can help clickthrough rate.
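Jump links need a stable `id` on each heading. A sketch that derives ids from heading text and builds a matching table of contents; the slug rules are an assumption, and duplicate headings would need a suffix, which this does not handle:

```python
import html
import re

def slugify(heading):
    """Turn a heading into a URL-friendly id, e.g. 'How Much?' -> 'how-much'."""
    slug = re.sub(r"[^a-z0-9]+", "-", heading.lower())
    return slug.strip("-")

def table_of_contents(headings):
    """Return (toc_html, heading_html_list) with matching jump-link ids."""
    items, sections = [], []
    for heading in headings:
        anchor = slugify(heading)
        text = html.escape(heading)
        items.append(f'<li><a href="#{anchor}">{text}</a></li>')
        sections.append(f'<h2 id="{anchor}">{text}</h2>')
    return "<ul>" + "".join(items) + "</ul>", sections

toc, sections = table_of_contents(["Delivery & Returns", "How Much Does It Cost?"])
print(toc)
```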

Tip #192:

SEO is not just about Google! Bing gets some great traffic for B2B searches, especially for organisations where the IT is locked down and they may be forced into using older browsers that default to Bing.

Tip #193:

It's here! You can now try our free new keyword research tool AlsoAsked.com. This tool will mine Google in real-time for "people also asked" questions and show you how to group these into topics. We built this tool with Go after seeing the brilliant Python CLI version made by Alessio Nittoli, but realising most people are not comfortable running command line tools and installing packages. Enjoy!

Tip #194:

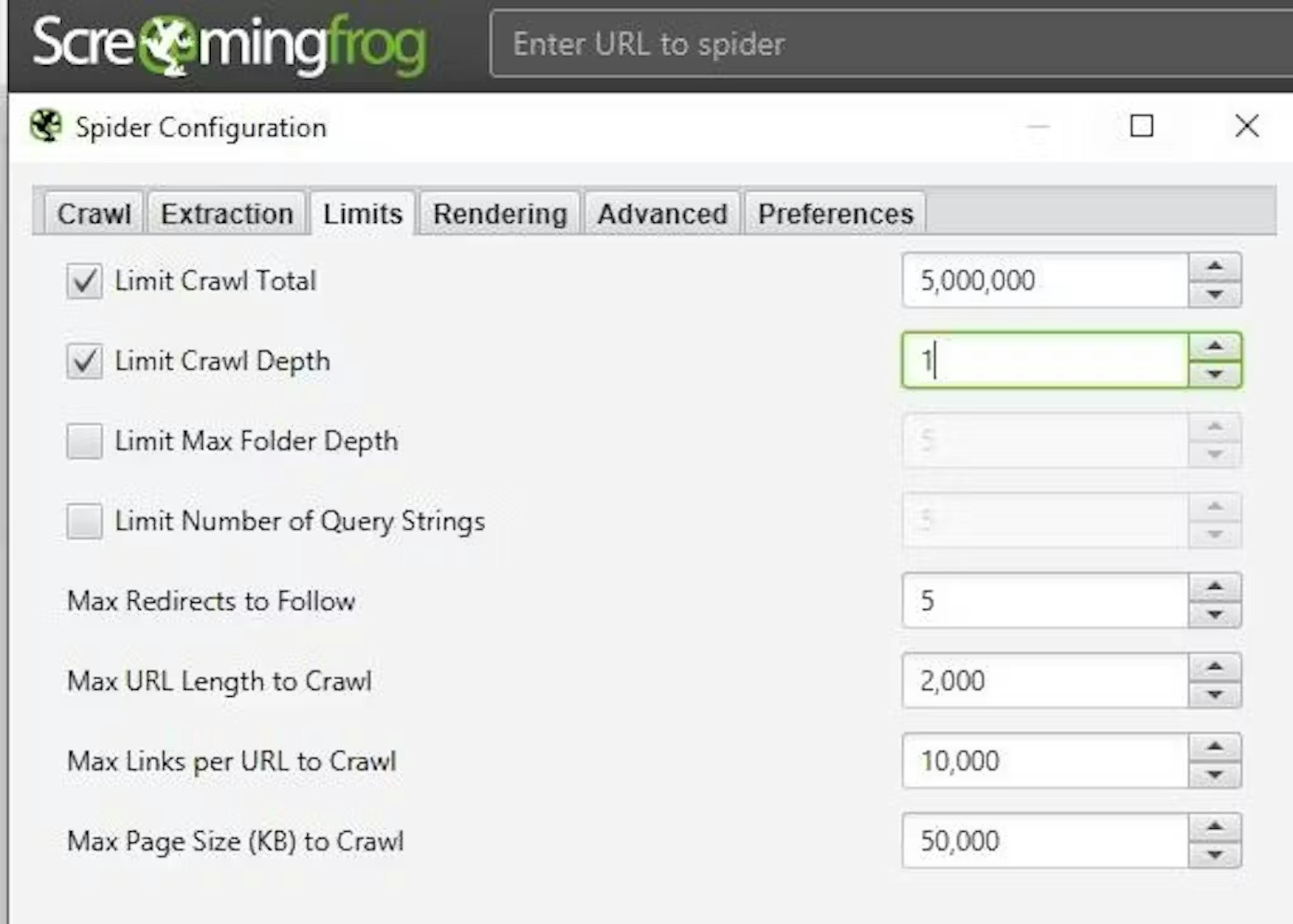

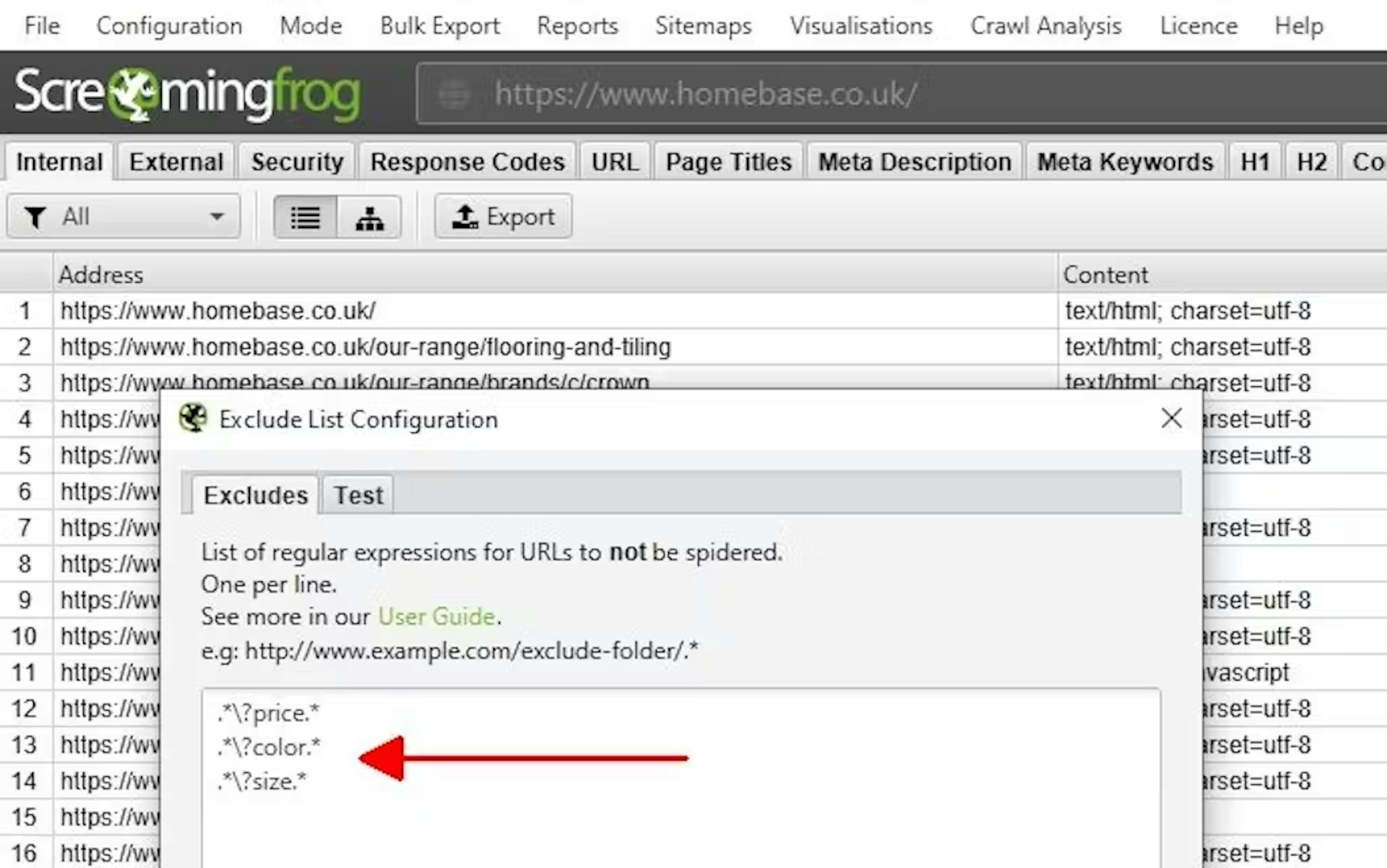

If you are trying to use a desktop tool such as Screaming Frog on large sites, remember it is not always necessary to crawl every single page to find the main technical issues. Problems usually exist over templates, so auditing a sample will give you insight into what needs to be fixed quickly.
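One way to build that sample is to group URLs by their first path segment, as a rough proxy for page template, and take a few from each group to crawl as a URL list. A sketch under that assumption; sites whose URL structure doesn't reflect templates would need a different grouping:

```python
import random
from collections import defaultdict
from urllib.parse import urlsplit

def sample_by_template(urls, per_group=50, seed=0):
    """Group URLs by first path segment (a rough proxy for page template)
    and take up to `per_group` random URLs from each group."""
    groups = defaultdict(list)
    for url in urls:
        segments = [s for s in urlsplit(url).path.split("/") if s]
        groups[segments[0] if segments else "(home)"].append(url)
    rng = random.Random(seed)  # fixed seed so samples are repeatable
    return {
        name: rng.sample(members, min(per_group, len(members)))
        for name, members in groups.items()
    }

# Illustrative site: many product pages, fewer categories, one homepage.
urls = (
    [f"https://shop.example/product/item-{i}" for i in range(1000)]
    + [f"https://shop.example/category/cat-{i}" for i in range(40)]
    + ["https://shop.example/"]
)
sample = sample_by_template(urls, per_group=20)
print({name: len(members) for name, members in sample.items()})
```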

Tip #195:

Technical SEO audit actions need to be prioritised. One of the factors you should consider is the difficulty and cost of implementing a change. It's a waste of everyone's time to try and push for a change with negligible impact if it means battling significant technical debt to achieve it, there will be other things you can focus on.

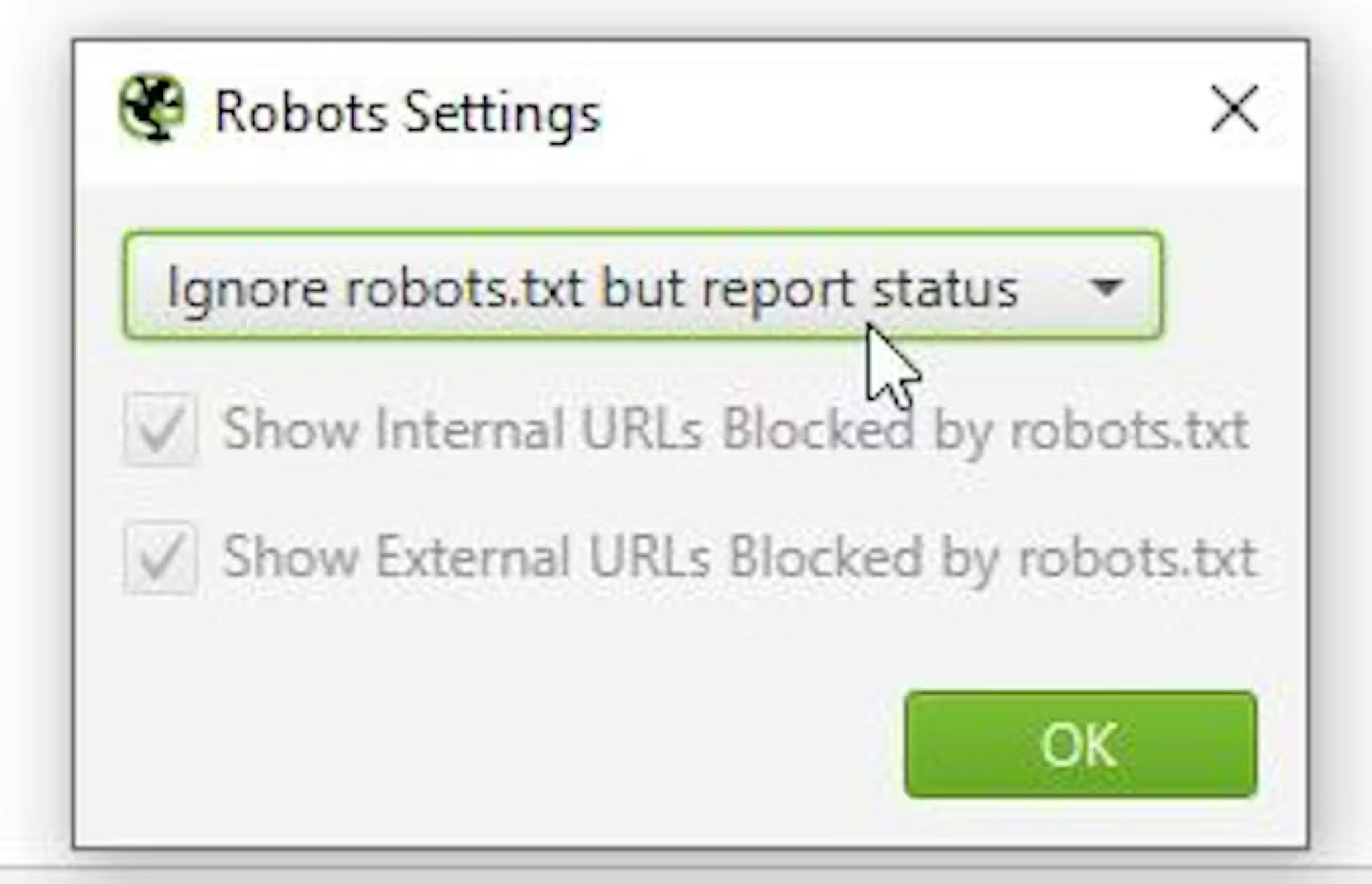

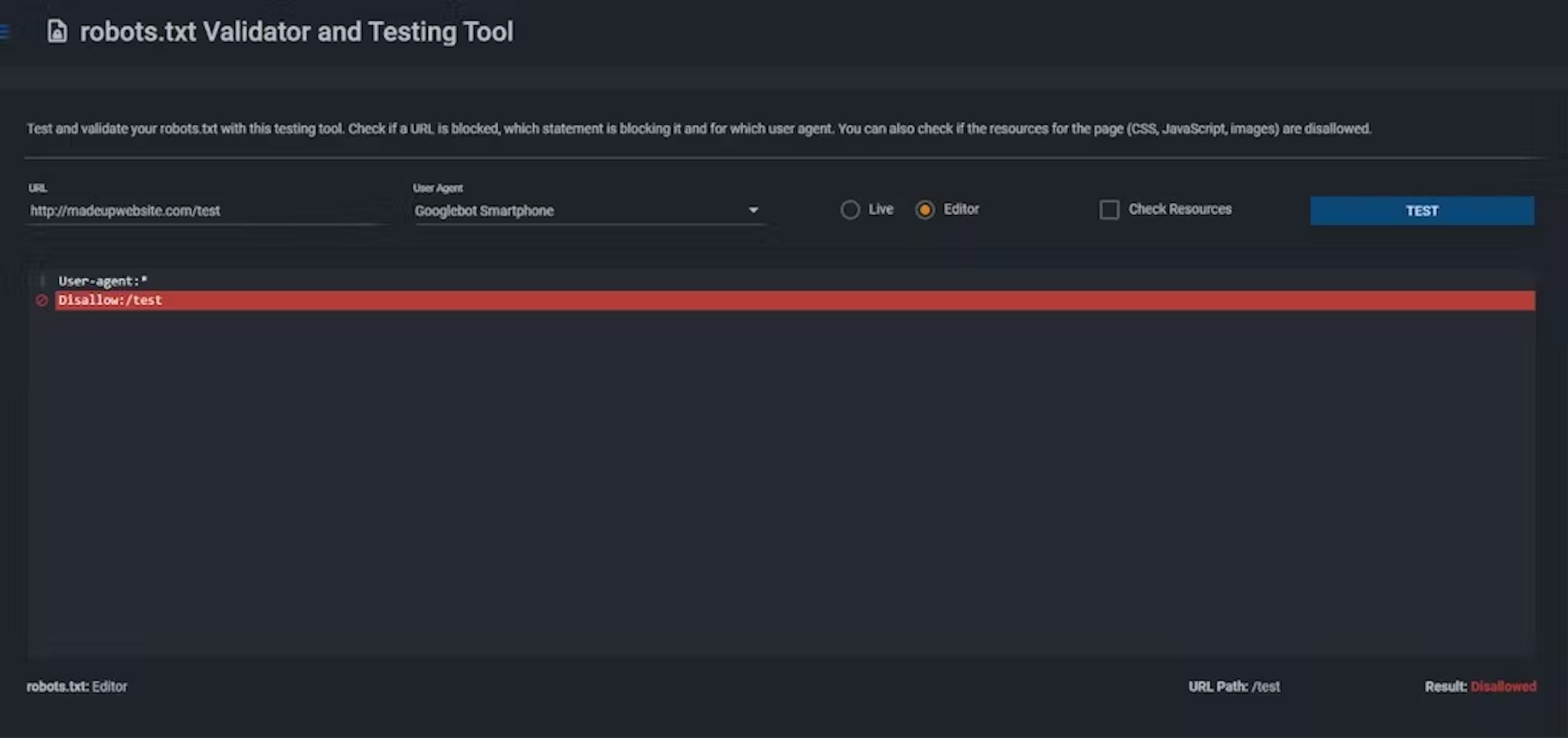

Tip #196:

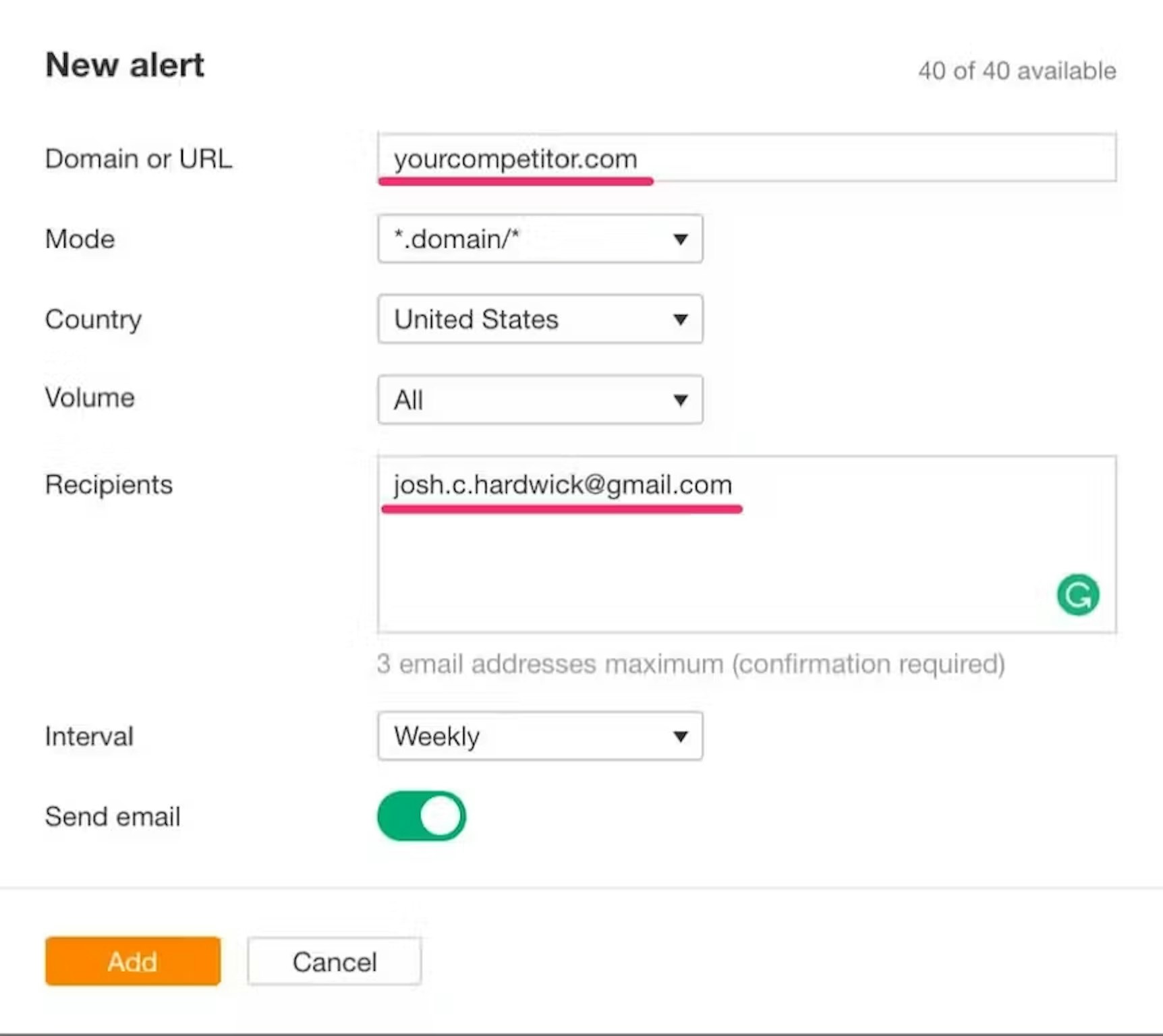

Things like robots.txt, noindex, redirects and server response codes can usually be changed by many parties and can quickly change how you're ranking. If you're working in a larger company, with dev partners or content teams, it is always a good idea as an SEO to use a tool to monitor for these types of changes so you can step in and save the day, rather than pick up the pieces!
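Dedicated monitoring tools do this for you, but the core idea is comparing two snapshots of the same URLs. A sketch, assuming each snapshot maps URL to a dict with hypothetical `status`, `noindex` and `canonical` keys taken from your crawler; robots.txt could be compared the same way by storing its text:

```python
def diff_snapshots(before, after):
    """Compare two crawl snapshots and report SEO-critical changes."""
    changes = []
    for url in sorted(before.keys() | after.keys()):
        old, new = before.get(url), after.get(url)
        if old is None or new is None:
            changes.append((url, "added" if old is None else "removed"))
            continue
        for field in ("status", "noindex", "canonical"):
            if old.get(field) != new.get(field):
                changes.append(
                    (url, f"{field}: {old.get(field)!r} -> {new.get(field)!r}")
                )
    return changes

before = {
    "/": {"status": 200, "noindex": False, "canonical": "/"},
    "/shop": {"status": 200, "noindex": False, "canonical": "/shop"},
}
after = {
    "/": {"status": 200, "noindex": True, "canonical": "/"},
    "/shop": {"status": 404, "noindex": False, "canonical": "/shop"},
}
for change in diff_snapshots(before, after):
    print(change)
```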

Tip #197:

If you're working with an SEO or an agency, their on-going focus should be on actions that drive results. A 'monthly audit' is not a thing. Audits are a great place to start - they identify issues, gaps, opportunities, help define strategy and roadmaps, but within a reasonable timeframe they are finite - and of course, as the last tip highlighted will be constrained by what is possible. Ongoing monitoring, especially on large sites is important - but it can be automated. Reporting and benchmarking is important, but again, it can mostly be automated. My opinion is, if someone is trying to sell you a 'monthly audit', there is a good chance it will not represent good value.

Tip #198:

There are lots of reasons one person may get a different search result to another, but the impact of personalisation is generally overstated. While things like geography and device may alter a search result, there is actually very little personalisation. Personalisation of search results normally happens in clusters, such as when Google works out you're doing a few related searches in a row. Apart from that, there is actually very little in the organic results that is "personal" to you.

Tip #199:

Don't let a 'zero' monthly search volume put you off producing content. Over 90% of the key phrases in Ahrefs' database have <20 searches per month; this is what the longtail is. The important bit is this, though: you're only getting volume for that exact phrase. If you write the content well, there are a few hundred variations on most phrases that suddenly make it a lot more appealing - none of which will initially look appealing through volume data.

Tip #200:

Clicking 'view source' won't actually show you what's going on with the page if Javascript is used to render the DOM. There's a great Chrome extension called 'view rendered source' which allows you to compare the rendered and non-rendered source. Really handy for making quick progress on technical SEO audits!

Tip #201:

The quality of links is far more important than the volume. This is why setting targets on volumes of links doesn't tend to work so well and can be a very outdated approach to SEO.

Tip #202:

"So what?". It's a great test for when you're producing content in an effort to get coverage and links. As ideas are formed and developed, it can be easy to get off-track and sold on your own idea. When you've got your story, data, headline - ask yourself, "So what?". Why would other people care? If you've got an answer to that, can you move onto the next stage.

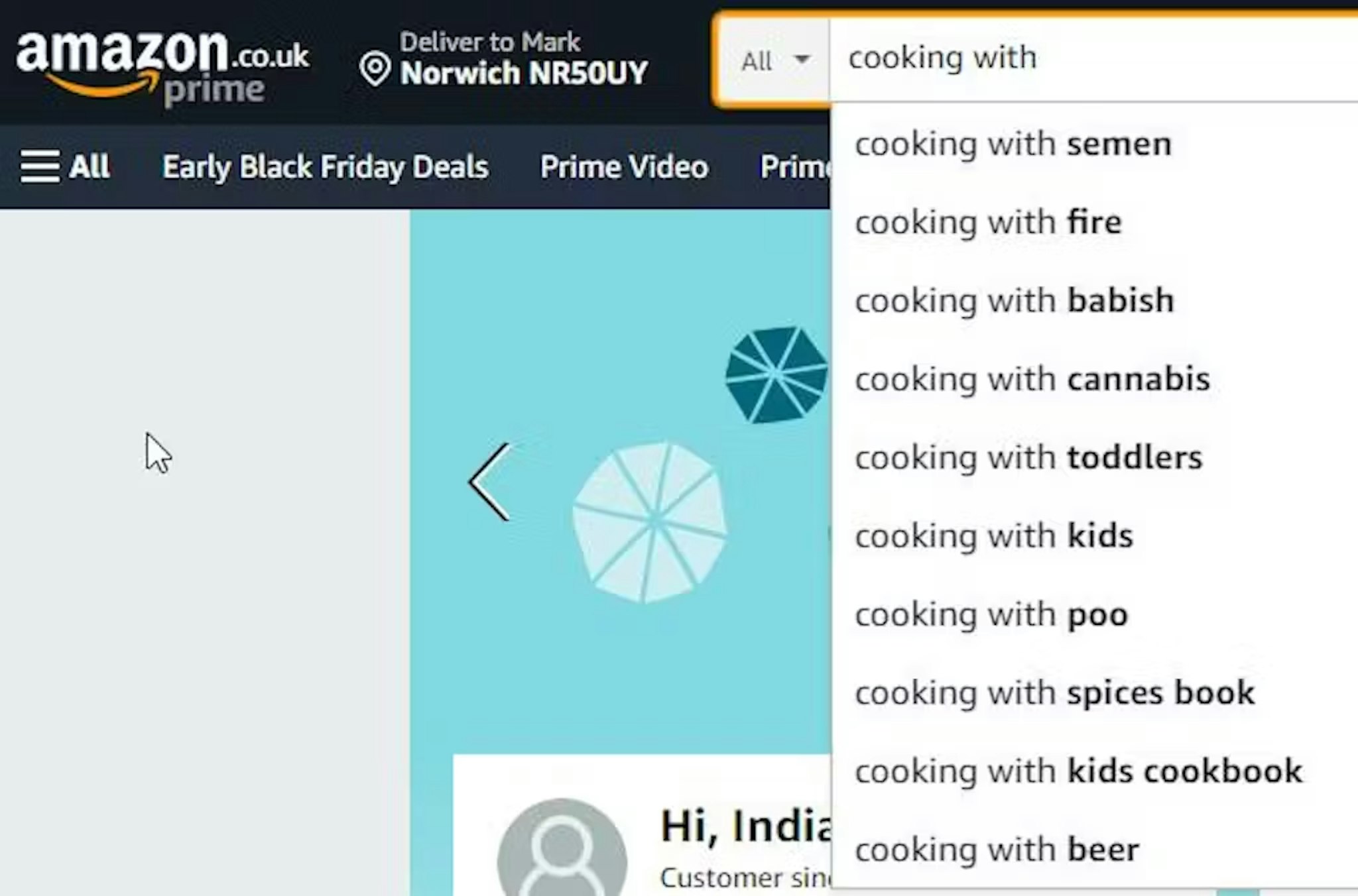

Tip #203:

Google Suggest data, such as that mined by AnswerThePublic, can give you incredible insights into the intent behind a search. For instance, take the screenshot below for a few suggestions on "Shard height" as a search term and consider what we can learn, even from this tiny slice of information:
> People are searching in feet and with the spelling 'meters', which gives a strong hint that many searchers may be American.
> Shard vs Eiffel Tower: perhaps metres/feet don't mean a lot to some people. This tells us a picture comparison may be the perfect way to answer this query, allowing people to compare the height with something they know.
> Together, these two bits of information suggest that a visual comparison to well-known American and other European buildings would be a good area to explore.
Three really important insights from just two of hundreds of data points!

Tip #204:

Little tech tip not many people seem to know. If you have an ecommerce site and you want rich results but can't get schema on your site, you can actually achieve this through submitting a product data feed in Google Merchant Centre. You don't actually need to spend any money on ads and Google can use that structured data to enhance your search result.

Tip #205:

Google ignores crawl-delay specified in robots.txt, but you can change this within Google Search Console. Keep in mind, if you're having to do this - it means you've probably got bigger infrastructure problems: There shouldn't be a case where Googlebot is causing your site to creak in 2019!
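Other crawlers do read the directive, though. Python's standard-library `urllib.robotparser` reports it via `crawl_delay()`, which is a handy way to check what a Crawl-delay-aware bot would see. A sketch with an illustrative robots.txt:

```python
from urllib.robotparser import RobotFileParser

robots_txt = """\
User-agent: *
Crawl-delay: 10
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# The parser reports the delay for any agent matching "*", but Googlebot
# ignores Crawl-delay, so this value has no effect on Google's crawling.
print(parser.crawl_delay("bingbot"))  # 10
print(parser.can_fetch("bingbot", "/search?q=widgets"))  # False
```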

Tip #206:

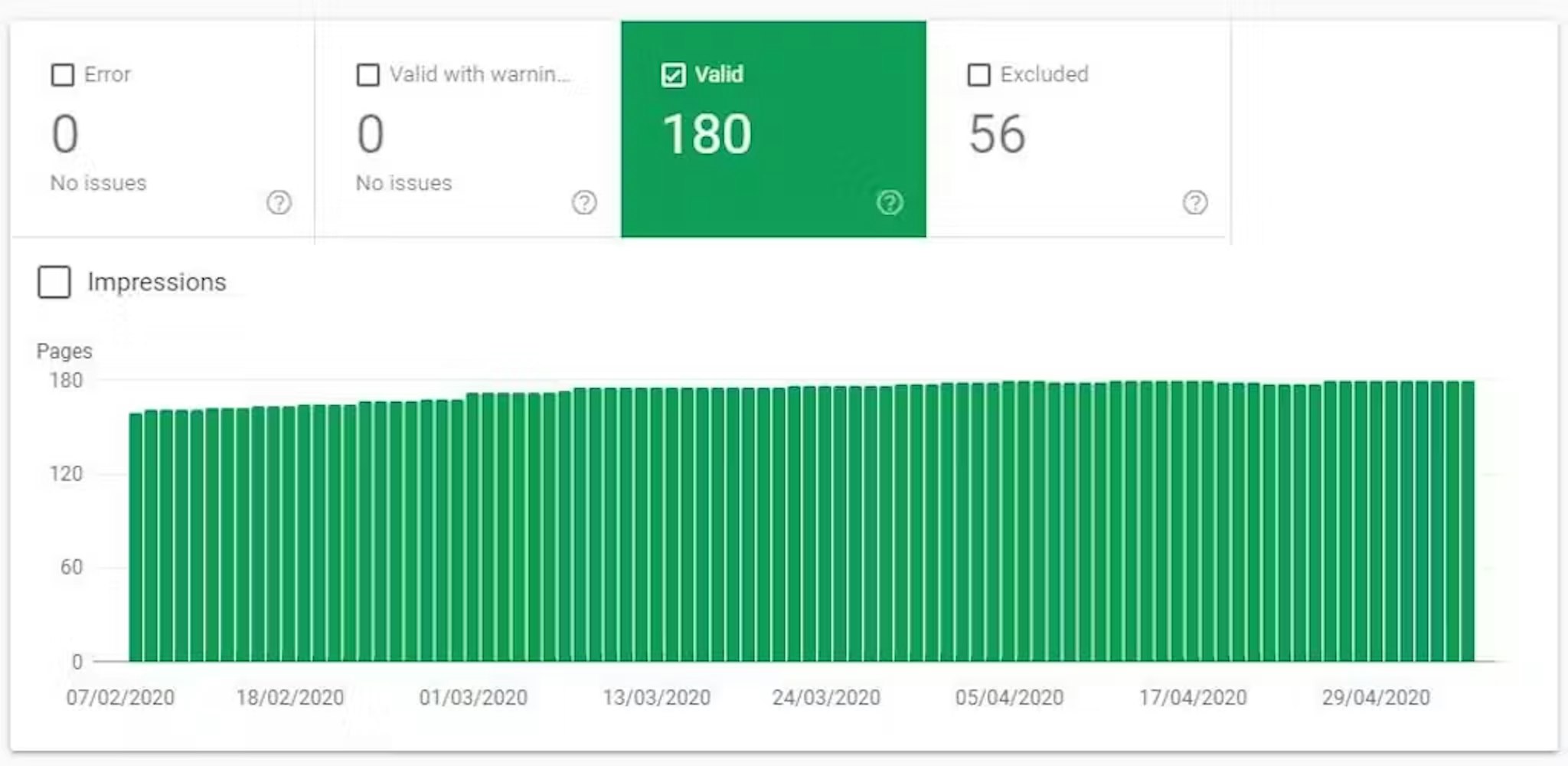

Edit: As of Jan 2021, crawl anomalies have been removed from GSC. Make sure you check the 'crawl anomalies' report in Google Search Console under 'Coverage'. While GSC will quickly report 404 and 5xx errors, crawl anomalies often get overlooked but can expose serious issues, such as frequent timeouts that are preventing indexing and ranking.

Tip #207:

Canonical tags must be declared in the head; putting them in the body means they will be ignored. This can be very bad.
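A quick check is to parse the HTML and record whether each canonical link appears inside `<head>`. A sketch using Python's standard-library parser. One caveat: browsers and Google close `<head>` early if they meet body-only elements such as `<img>` or `<div>` there, and this simple tracker does not model that, so a canonical placed after such an element would still show as "in head" here:

```python
from html.parser import HTMLParser

class CanonicalParser(HTMLParser):
    """Record canonical links and whether each appeared inside <head>."""

    def __init__(self):
        super().__init__()
        self.in_head = False
        self.canonicals = []  # list of (href, in_head)

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "body":
            self.in_head = False
        elif tag == "link":
            attrs = dict(attrs)
            rels = (attrs.get("rel") or "").lower().split()
            if "canonical" in rels:
                self.canonicals.append((attrs.get("href"), self.in_head))

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False

page = (
    '<html><head><link rel="canonical" href="https://example.com/a" /></head>'
    '<body><link rel="canonical" href="https://example.com/b"></body></html>'
)
p = CanonicalParser()
p.feed(page)
print(p.canonicals)
```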

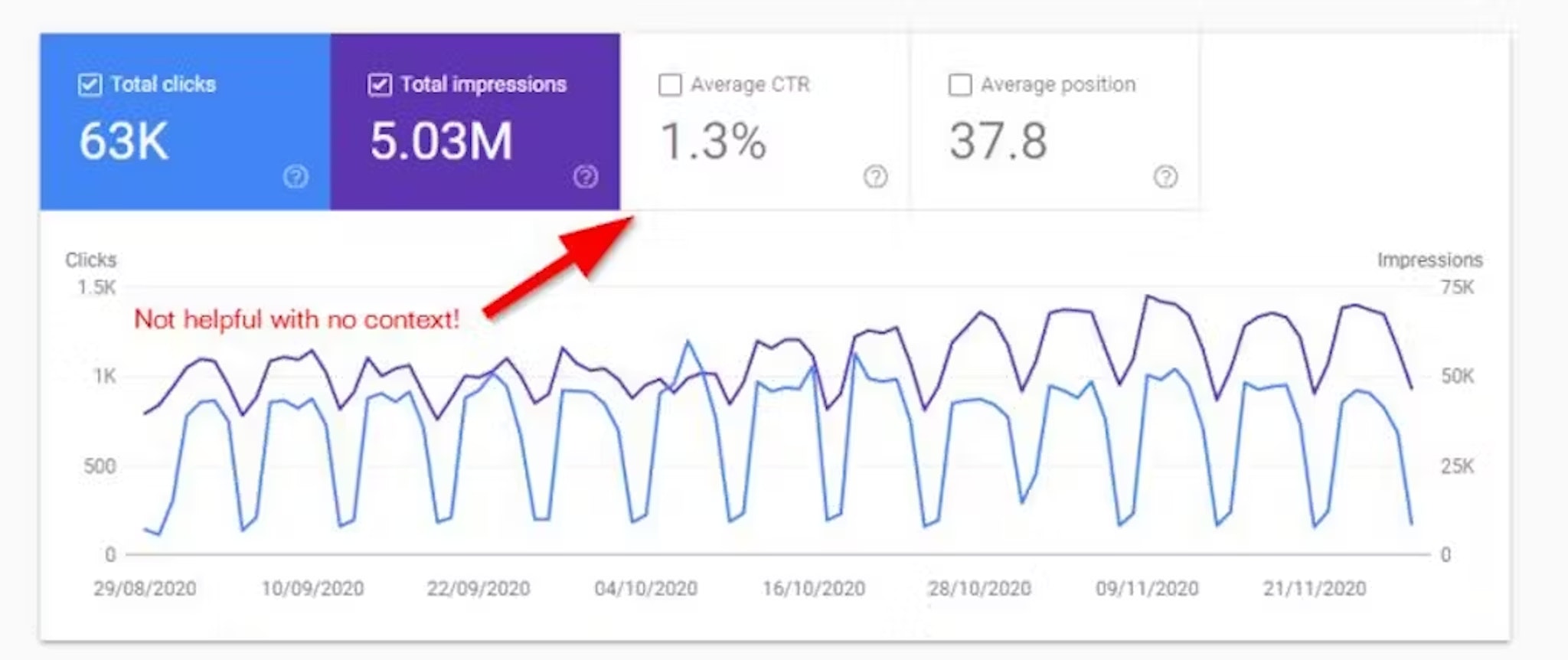

Tip #208:

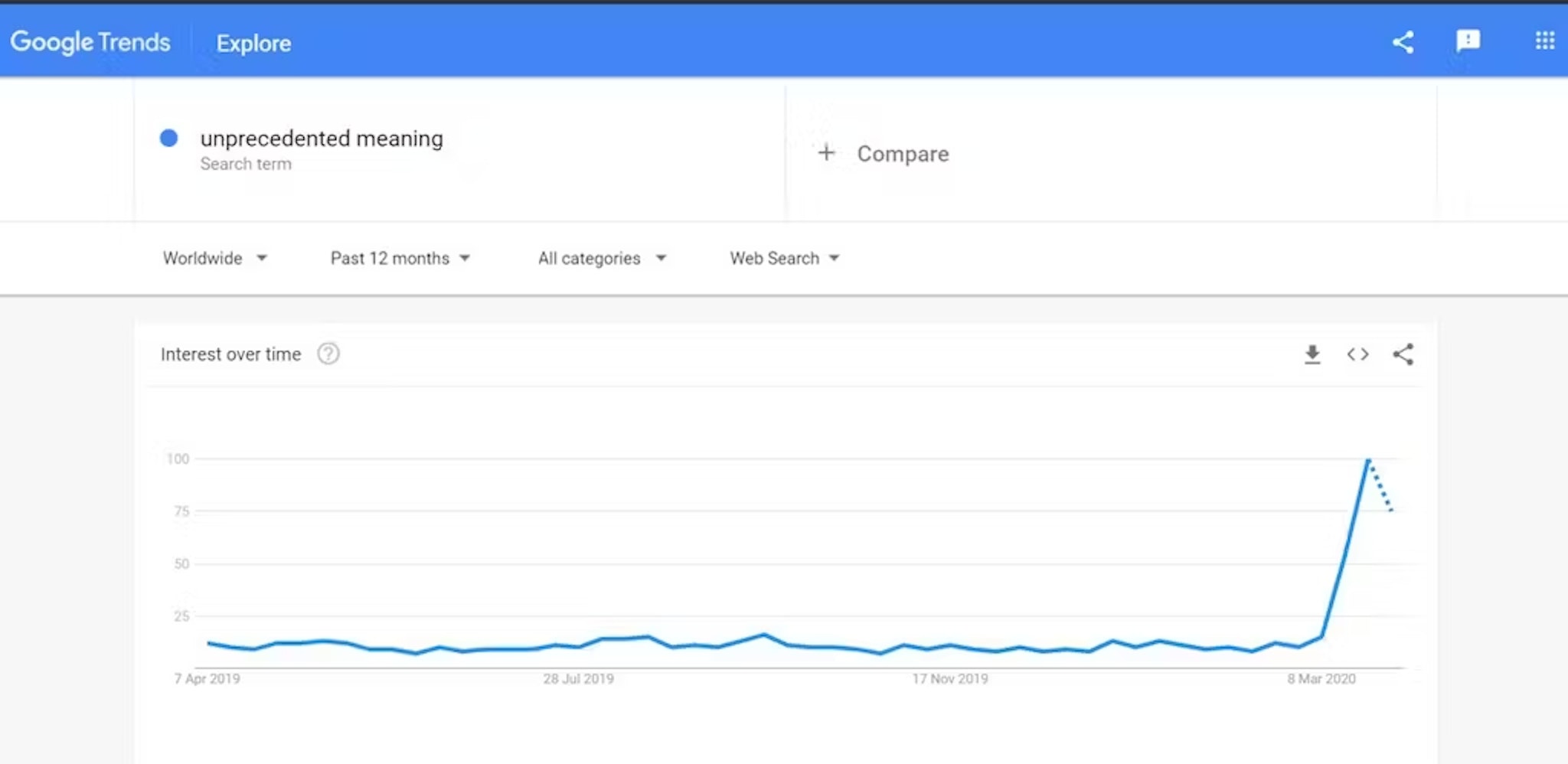

If you're trying to measure organic performance, especially at this time of year, you need to look at Year on Year (YoY) figures. If you're running an e-commerce site and you've seen an organic uplift in clicks/impressions over the last 30-90 days compared to the previous 30-90 days, this should not come as a surprise - it's coming to Black Friday, Cyber Monday and Christmas shopping season! If you really need to do this type of analysis, use Google Trends data to normalise your traffic to see if there is uplift after adjustment.
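The normalisation can be as simple as scaling the earlier period by the change in Google Trends interest and comparing against that expected figure. A sketch, assuming the Trends index for your main term is a fair proxy for seasonal demand; the numbers are illustrative:

```python
def trend_adjusted_change(clicks_before, clicks_after, trend_before, trend_after):
    """Percentage change in clicks after removing the seasonal shift
    shown by Google Trends interest for the same two periods."""
    expected = clicks_before * (trend_after / trend_before)
    return (clicks_after - expected) / expected * 100

# Clicks rose 30%, but Trends interest rose 20% over the same periods.
print(round(trend_adjusted_change(10000, 13000, 50, 60), 1))  # 8.3
```

Here a raw 30% uplift shrinks to roughly 8% once seasonal demand is accounted for.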

Tip #209:

It's that time of year when you'll be running sales, so if you've got products or categories in special /sale/ URLs, make sure you have canonical tags pointing to the original pages, and 301 redirect the sale URLs once the sale is over instead of killing the pages - sales attract links!
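
Keeping the mapping from sale URLs back to the originals in one place makes both jobs easier, because the same list gives you the canonical targets during the sale and the 301 rules afterwards. This sketch assumes, hypothetically, that each sale URL is just the original path with a `/sale/` prefix; your URL structure may well differ, and the "source target 301" output format is a placeholder to adapt to your server or CMS.

```python
def sale_redirects(sale_urls, prefix="/sale/"):
    """Map each sale URL to the original page it should canonicalise/301 to.

    Hypothetical URL scheme: /sale/shoes/trainers/ -> /shoes/trainers/
    URLs that don't start with the prefix are skipped.
    """
    redirects = {}
    for url in sale_urls:
        if url.startswith(prefix):
            redirects[url] = "/" + url[len(prefix):]
    return redirects

for source, target in sale_redirects(["/sale/shoes/trainers/", "/sale/bags/"]).items():
    print(f"{source} {target} 301")
```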

Tip #210:

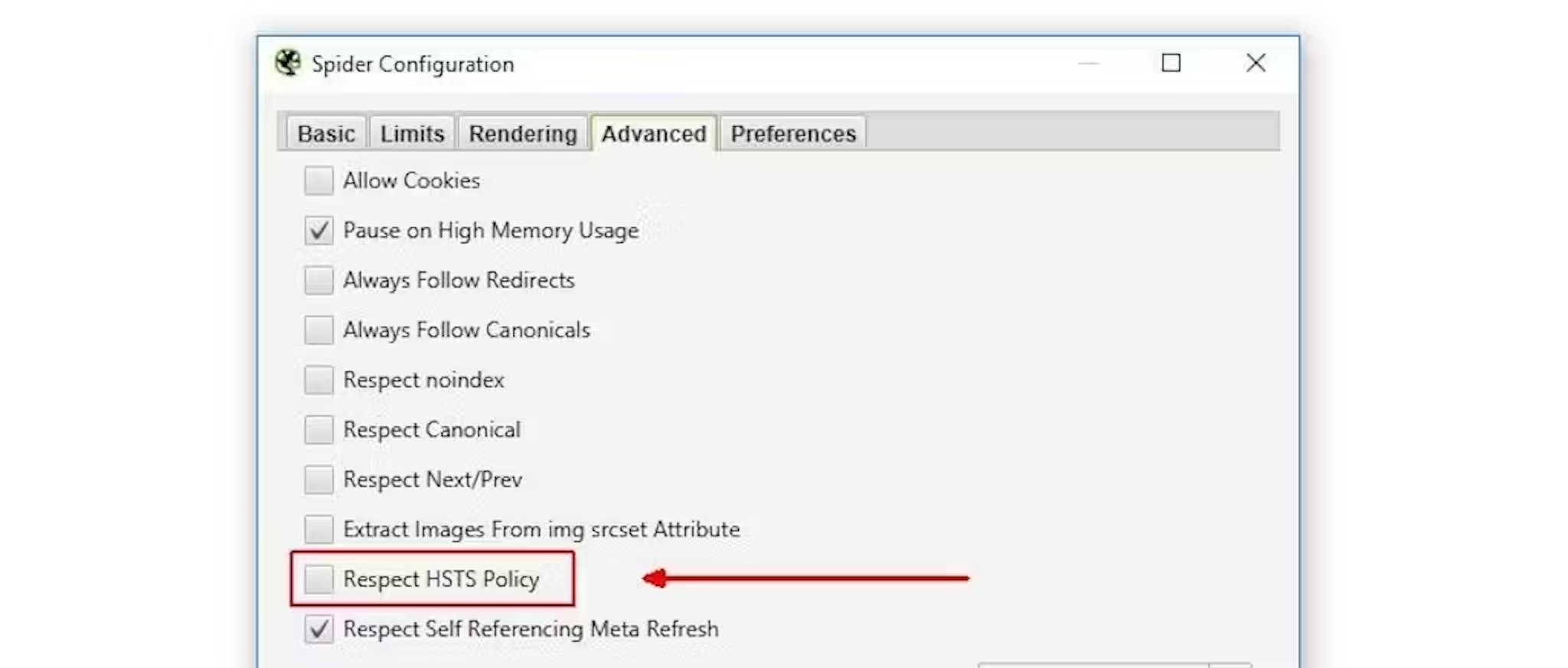

It's a good idea to implement http -> https 301 redirects before HSTS to make sure everything is working. You can leave the 301s in place as a fallback: although Googlebot will treat HSTS redirects as 301s, not all search engines are the same.
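
Once both are in place, it's worth checking the responses directly. This minimal sketch takes the status code and `Location` header from the plain `http://` request, plus the headers from the `https://` response, and lists anything that looks wrong. Fetching those values (with a client that does not follow redirects automatically) is left to whatever tool you already use; the function only checks them.

```python
def https_problems(http_status, http_location, https_headers):
    """List issues with an http -> https setup.

    http_status, http_location: status code and Location header returned for
    the plain http:// URL, fetched without following redirects.
    https_headers: dict of response headers from the https:// URL.
    """
    problems = []
    if http_status != 301:
        problems.append(f"http responded {http_status}, expected 301")
    if not (http_location or "").startswith("https://"):
        problems.append("http redirect does not point to https")
    # Header names are case-insensitive, so compare in lower case.
    header_names = {name.lower() for name in https_headers}
    if "strict-transport-security" not in header_names:
        problems.append("https response has no Strict-Transport-Security header")
    return problems

print(https_problems(301, "https://www.example.com/", {"Strict-Transport-Security": "max-age=31536000"}))
print(https_problems(302, "https://www.example.com/", {}))
```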

Tip #211:

Sometimes your target key phrases can be close to impenetrable. Rather than waste resources trying to get positions with no return, it can be worth considering alternate (normally lower search volume) phrases for the same intent. A smaller slice is better than no slice at all! You can make this judgement using Google Trends, search volume and cost-per-click data.

Tip #212:

Search intent shift is when, at certain times of the year, the majority of the intent behind an individual search changes. A good example is 'Halloween', which switches from an informational to a commercial term near Halloween. As this happens, Google can change its rankings drastically to adapt to this intent. If you do see fluctuations in your rankings around specific events or times of year like this, it may well be that there is nothing "wrong" with your site; it's just not the best fit for that intent, at that time.

Tip #213:

If you're dealing with a site that has input from multiple teams (content people, product teams, external devs), I would strongly recommend some kind of ongoing cloud monitoring/crawling of the site - it can really save your skin. Yesterday, we detected a problem that got through a dev agency's QA and made the whole site appear blank to Google. On a site doing over £1M in revenue a month, you can imagine the fallout this would have caused if it hadn't been spotted before it could do damage!
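
Commercial monitoring tools do far more than this, but as a rough illustration of one check that would catch a "blank page" problem, here's a sketch that counts the words of visible text in a page's HTML and flags it if there are suspiciously few. The 50-word threshold is an arbitrary assumption, and it only looks at raw HTML; on a JavaScript-rendered site you'd need to feed it the rendered HTML (for example from a headless browser) for the result to mean anything.

```python
from html.parser import HTMLParser

class VisibleText(HTMLParser):
    """Collects text that sits outside script, style, noscript and template tags."""

    SKIP = {"script", "style", "noscript", "template"}

    def __init__(self):
        super().__init__()
        self.skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth and data.strip():
            self.chunks.append(data.strip())

def looks_blank(html, min_words=50):
    """True if the HTML contains fewer than `min_words` words of visible text."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    word_count = len(" ".join(parser.chunks).split())
    return word_count < min_words

empty_shell = "<html><body><div id='app'></div><script>var x = 1;</script></body></html>"
print(looks_blank(empty_shell))
```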

Tip #214:

When setting up keyword tracking, it is useful to use multiple tags on keywords. This allows you to view how well you rank for a specific topic, product or service. This information can help form your SEO strategy, determining what you need to do around specific topics to get rankings. For instance, if Google 'likes' your site for a specific topic, just building new content means you'll likely rank for it off the bat. If you're struggling on a topic, you'll need to gain more authority, which a lot of the time comes down to getting links.
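
As a rough sketch of what tagging buys you, here's how you might roll up tracked positions by tag in Python. The keywords, positions and tags are made up, and a plain average is a blunt measure (you'd also need to decide how to count keywords you don't rank for at all), but it shows how topic-level strengths and weaknesses start to stand out.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical tracked keywords: (keyword, current position, tags)
rankings = [
    ("running shoes", 4, ["footwear", "running"]),
    ("trail running shoes", 12, ["footwear", "running", "trail"]),
    ("hiking boots", 25, ["footwear", "hiking"]),
    ("running socks", 3, ["running", "accessories"]),
]

def average_position_by_tag(rows):
    """Average ranking position for every tag, sorted alphabetically by tag."""
    positions = defaultdict(list)
    for _keyword, position, tags in rows:
        for tag in tags:
            positions[tag].append(position)
    # A lower average suggests Google already "likes" the site for that topic.
    return {tag: mean(values) for tag, values in sorted(positions.items())}

for tag, avg in average_position_by_tag(rankings).items():
    print(f"{tag:12} {avg:5.1f}")
```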

Tip #215:

Consider whether ranking factors are query-dependent or query-independent when making strategic SEO decisions. For example, PageRank is a query-independent ranking factor: it applies in the same way to all sites and queries. Content freshness is a query-dependent ranking factor: some queries deserve freshness, while for others it does not matter. This means internal linking is important on all sites, whereas content freshness may not be.
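
To see why PageRank doesn't care about the query, it helps to look at a simplified version of the original algorithm: the scores come purely from the link graph. Below is a textbook power-iteration sketch over a hypothetical five-page internal link graph, using the commonly cited damping factor of 0.85. Google's production systems are far more complex than this, so it's only an illustration of how internal links spread importance between pages.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank via power iteration over an internal link graph.

    `links` maps each page to the pages it links to. A page with no
    outgoing links shares its score evenly across every page.
    """
    pages = set(links) | {target for targets in links.values() for target in targets}
    n = len(pages)
    scores = {page: 1 / n for page in pages}
    for _ in range(iterations):
        # Every page gets the "random jump" share, then link equity is added.
        new_scores = {page: (1 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * scores[page] / len(targets)
                for target in targets:
                    new_scores[target] += share
            else:
                for target in pages:
                    new_scores[target] += damping * scores[page] / n
        scores = new_scores
    return scores

# Hypothetical internal link graph for a small shop.
site = {
    "/": ["/shoes/", "/bags/", "/about/"],
    "/shoes/": ["/", "/shoes/trainers/"],
    "/bags/": ["/"],
    "/about/": ["/"],
    "/shoes/trainers/": ["/shoes/"],
}
scores = pagerank(site)
for page, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{score:.3f} {page}")
```

There's no query anywhere in that calculation; what moves the scores is which pages link to which.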

Tip #216:

Buyer beware: Despite some spurious claims to the contrary, there is no tool on the market that can tell you specifically what organic key phrase an individual user searched for and then clicked on. Only Google has that data and it doesn't give it out.

Tip #217:

As tempting as it may be, I would avoid altering page titles during a site migration if possible. It's helpful to change as few things as possible so you get a clearer view of whether there are issues. There will likely be opportunities you can take advantage of, but tackling them one at a time will usually provide the clearest route to success.
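
If you do keep titles unchanged, it's easy to verify that after the migration. This sketch assumes you have a redirect map (old URL to new URL) and title exports from a crawl of the old site and of the new or staging site; the function lists any mapped URLs whose titles differ, including pages where the new title is missing entirely.

```python
def changed_titles(redirect_map, old_titles, new_titles):
    """List migrated URLs whose <title> changed.

    redirect_map: old URL -> new URL.
    old_titles / new_titles: URL -> title, e.g. exported from crawls of the
    old site and the new (or staging) site. A missing title counts as a change.
    """
    changes = []
    for old_url, new_url in redirect_map.items():
        before = old_titles.get(old_url)
        after = new_titles.get(new_url)
        if before != after:
            changes.append((old_url, new_url, before, after))
    return changes

redirects = {"/old-a": "/a/", "/old-b": "/b/"}
old = {"/old-a": "Blue Widgets | Shop", "/old-b": "Red Widgets | Shop"}
new = {"/a/": "Blue Widgets | Shop", "/b/": "Red Widgets"}
print(changed_titles(redirects, old, new))
```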

Tip #218:

Don't be afraid to link out to other good sites when it is helpful, but linking out does not directly help boost your ranking.

Tip #219:

I find it shocking they still exist (though I guess that's because I am in the SEO bubble), but there are still large companies out there offering 'SEO ad platforms', which are basically adverts or advertorials that 'pass SEO benefit'. Avoid, avoid, avoid. They will put your website at substantial risk of getting a penalty. While it's up to you whether you follow Google's rules, if you're going to break them - at least do it well!

2020 Unsolicited #SEO tips:

Tip #220:

If you really want to show users something and not search engines, it's worth keeping in mind that Google ignores everything after a '#' in a URL... Has some "interesting" uses :-)
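
You can see the split for yourself: everything before the `#` is the URL, and the fragment after it is handled by the browser (it isn't even sent to the server in an HTTP request). Python's `urldefrag` is used here purely to illustrate where that split happens; it isn't a model of how Google processes URLs.

```python
from urllib.parse import urldefrag

url = "https://www.example.com/offers/?colour=blue#members-only"
page_url, fragment = urldefrag(url)
print(page_url)  # https://www.example.com/offers/?colour=blue
print(fragment)  # members-only
```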

Tip #221:

It can be hard to extract insights from Google Search Console. Luckily, Hannah Rampton (Butler) made a free tool that generates insights from it quickly.

Tip #222:

If you're unsure of where to start with link building, take one of your competitors that ranks #1 and put their website into the Majestic link tool and click 'backlinks'. This will give you a list of all of their best links and you'll be able to see what strategies they have been using to acquire them. Great and vital bit of initial research to carry out.

Tip #223:

It's a good move to start using cloud-based tools rather than relying on desktop-based tools for spot checks. For instance, with a cloud-based tool it's very easy to monitor your site for things like broken links or accidental updates to robots.txt. It's far too common that on a first audit of a client site, I'll find dozens, sometimes hundreds, of broken links. Unhappy users, unhappy bots.
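
As a minimal sketch of the robots.txt side of this kind of monitoring, assuming you store the last copy of the file you fetched, you can compare it against the current version and surface any new `Disallow` lines. It deliberately ignores which user-agent group each rule belongs to, so treat it as an alert trigger rather than a full robots.txt parser.

```python
import urllib.request

def fetch_robots(site):
    """Download a site's robots.txt (needs network access)."""
    url = site.rstrip("/") + "/robots.txt"
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")

def disallow_rules(text):
    """All Disallow lines in a robots.txt, ignoring which user-agent group they sit in."""
    return {
        line.strip()
        for line in text.splitlines()
        if line.strip().lower().startswith("disallow:")
    }

def newly_blocked(previous_text, current_text):
    """Disallow lines present now that were not in the previously stored copy."""
    return sorted(disallow_rules(current_text) - disallow_rules(previous_text))

previous = "User-agent: *\nDisallow: /checkout/\n"
current = "User-agent: *\nDisallow: /checkout/\nDisallow: /\n"
print(newly_blocked(previous, current))  # ['Disallow: /']
```

In practice you'd call `fetch_robots` on a schedule, compare against the stored copy and alert on anything new, which is exactly the sort of job cloud tools run for you.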

Tip #224:

You can get more mileage and links from your web content with collation and curation. If you're using a tool like AlsoAsked to answer questions about your products or product categories, you could look at combining all that information into a single guide page that can be used in outreach efforts - it makes it a lot easier to build resources that attract links.

Tip #225:

The pages that you want to rank well for higher volume terms should be linked "high up" within your site's hierarchy, such as the main menu or homepage. If you have a page that you expect to rank well that's 4-5 clicks away and only linked to internally from a couple of pages, you're likely to be disappointed!
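
Click depth is straightforward to measure if you have a crawl's internal link data: a breadth-first search from the homepage gives the minimum number of clicks to each page. The link graph below is hypothetical; in practice you'd build it from a crawler export. Any page that never appears in the result isn't reachable from the homepage at all.

```python
from collections import deque

def click_depth(links, start="/"):
    """Minimum clicks from `start` to each reachable page (breadth-first search)."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth

# Hypothetical site: the waterproof trail shoes page is 4 clicks deep.
site = {
    "/": ["/shoes/", "/about/"],
    "/shoes/": ["/shoes/running/"],
    "/shoes/running/": ["/shoes/running/trail/"],
    "/shoes/running/trail/": ["/shoes/running/trail/waterproof/"],
}
for page, clicks in click_depth(site).items():
    print(clicks, page)
```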

Tip #226:

An automated SEO audit report done by an online tool has almost zero value unless it's put in context to your business by someone that understands both it and SEO. These tools will rarely give you a good action plan and will almost always provide false positives.

Tip #227:

The Lighthouse 'SEO audit' score means pretty much nothing. You can score 100 and still have massive technical problems on your site. The Lighthouse audit will only look at that individual page and it does so with no context to what you want to rank for or your business.

Tip #228:

Google is ending support for data-vocabulary on April 6th, 2020. You have until then to switch to schema.org or you will lose rich snippets. Here is Google's blog post about data vocabulary and switching to schema.
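
Breadcrumbs were one of the data-vocabulary types (`Breadcrumb`), so they make a handy example of the switch. This sketch builds the schema.org `BreadcrumbList` equivalent as JSON-LD, one of the formats Google supports for structured data; the names and URLs are placeholders.

```python
import json

def breadcrumb_jsonld(crumbs):
    """Build schema.org BreadcrumbList JSON-LD from (name, url) pairs, top level first."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": index, "name": name, "item": url}
            for index, (name, url) in enumerate(crumbs, start=1)
        ],
    }

markup = breadcrumb_jsonld([
    ("Home", "https://www.example.com/"),
    ("Shoes", "https://www.example.com/shoes/"),
    ("Trainers", "https://www.example.com/shoes/trainers/"),
])
# The output goes in the page inside <script type="application/ld+json">...</script>
print(json.dumps(markup, indent=2))
```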

Tip #229:

Massive announcement by Google today. If you have a featured snippet ('position 0' result), that page will no longer rank on page 1 of the search results. So if you see some big shifts/drops, this could be why!

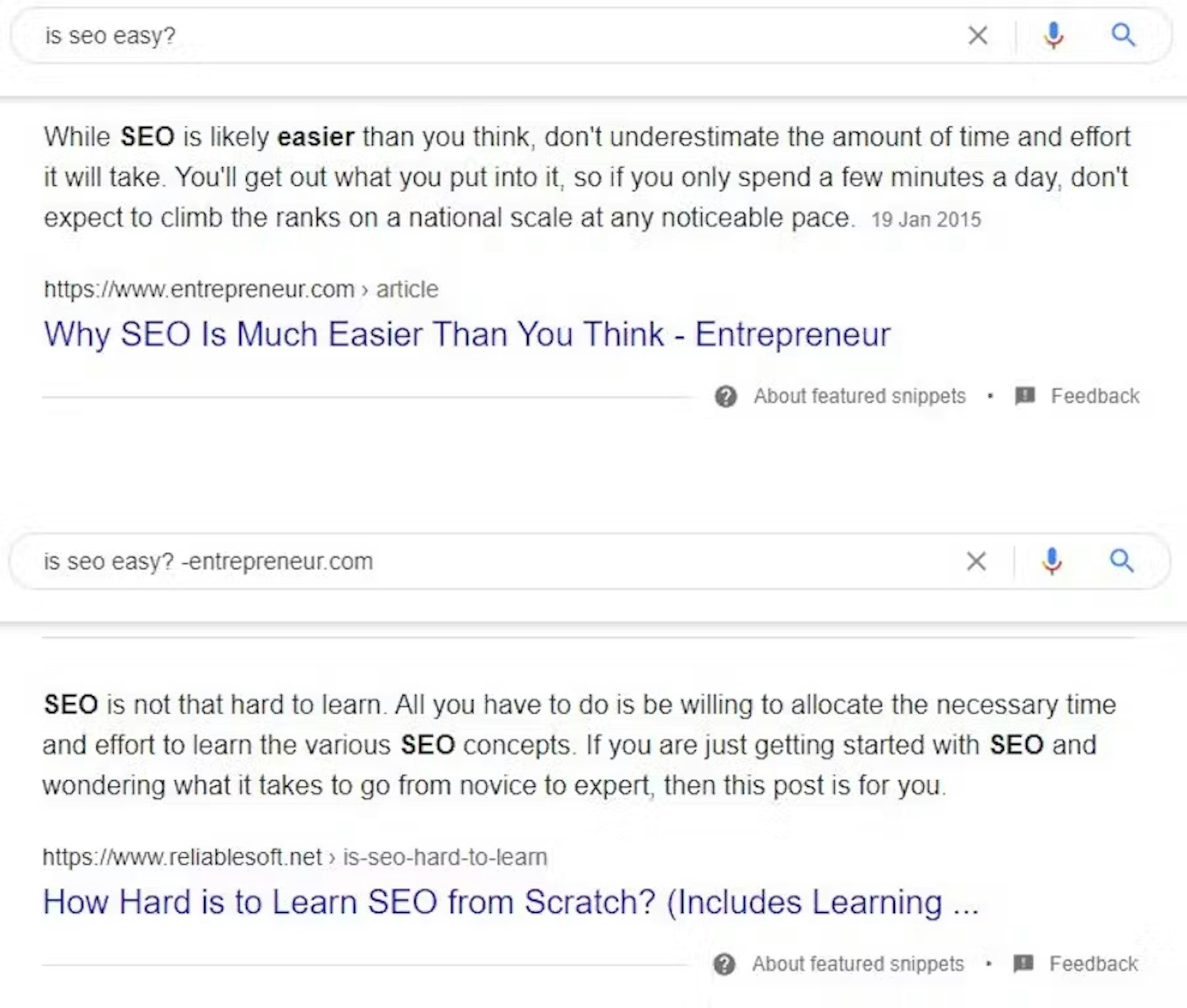

Tip #230:

Want to get rid of your featured snippet so you can appear in the 'normal' results, but worried about where you might rank if you remove the snippet? You can find out! If you're ranking for a snippet, you can add the &num=9 parameter to the end of your Google search URL to remove it and show where you would rank without it. If you're ranking well, you can then use the nosnippet tag on the page to stop Google showing a featured snippet.

Tip #231:

Google does not "favour" long content. To be a little bit more specific: long content has a higher probability of being better content and ranking better, but the actual word count does not matter. This means that targets like "this page must be 500 words" or "this article needs to be 1,000 words" are utterly meaningless. There is no logical reason why word count would be a ranking factor. Google has told us on multiple occasions, specifically, that word count is not a ranking factor - any decent SEO should tell you the same. If your SEO tells you word count is important, make sure you sack them with at least a 500 word email.

Tip #232:

Answering people's questions can be key to creating good content. I mentioned in the last tip that content length is not important, but is usually correlated with better content. That means understanding intent and approaching it from a topic, rather than a keyword, point of view. To this end, we made AlsoAsked - it allows you to explore the 'People Also Asked' questions that Google generates and understand how they are grouped.

Tip #233:

As I've mentioned in other tips, automated 'free' SEO audits are free exactly because they offer very, very little value. It's like an architect showing you the plans for a building: all the information is there, but you're not going to try to build your own house with that knowledge, are you? I'm so confident in this that I'm quite happy to run a free (automated) SEO audit for anyone who leaves their web address in the comments if you want one. What gets results is having an SEO expert look at your site, in combination with tools like this, in the context of your business, with your validation and a plan to implement!

Tip #234:

Google has launched a new removals tool within Google Search Console. This tool does three things: (1) lets you temporarily hide URLs from showing in Google search, (2) shows you which content is not in Google because it is “outdated content” and (3) shows you which of your URLs were filtered by Google’s SafeSearch adult filter.

Tip #235:

Google uses upheld DMCA actions in its ranking algorithm. One more reason to report competitors that are copying/scraping you. If you need to report a competitor, you can do so here.

Tip #236:

Google is working hard to understand "what" things are: companies, people, brands and work out how these "entities" are linked in their Knowledge Graph. It's useful to know how you sit in this and Carl Hendy has made a neat tool that requires no programming knowledge to explore the Knowledge Graph.

Tip #237:

There is absolutely loads you can be doing with Google Sheets to help your SEO efforts from pulling out insights from the rather basic Google Search Console, to tools that help you detect cannibalisation. I don't know anyone better at Google Sheets than Hannah Rampton (Butler) and she was kind enough to come to #SearchNorwich and talk everyone through the QUERY function, which is the basics of how her tools are made.
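
Hannah's tools are built in Google Sheets. Purely to illustrate the idea behind a basic cannibalisation check in code (this is not how her tools work), here's a rough Python sketch that takes rows of query, page and clicks, such as a Search Console performance export broken down by both, and lists queries where more than one page is picking up clicks. Two pages sharing a query isn't automatically a problem, so treat the output as a list to review.

```python
from collections import defaultdict

def cannibalisation_candidates(rows, min_clicks=1):
    """Queries where more than one page gets clicks, pages sorted by clicks.

    rows: (query, page, clicks) tuples, e.g. from a Search Console
    performance export broken down by query and page.
    """
    pages_by_query = defaultdict(dict)
    for query, page, clicks in rows:
        if clicks >= min_clicks:
            pages_by_query[query][page] = pages_by_query[query].get(page, 0) + clicks
    return {
        query: dict(sorted(pages.items(), key=lambda item: item[1], reverse=True))
        for query, pages in pages_by_query.items()
        if len(pages) > 1
    }

rows = [
    ("blue widgets", "/widgets/blue/", 120),
    ("blue widgets", "/blog/best-blue-widgets/", 45),
    ("red widgets", "/widgets/red/", 80),
]
print(cannibalisation_candidates(rows))
```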

Tip #238:

The URL Removal Tool within Google Search Console does not do what it says on the tin: it only hides results from Google search temporarily. This means if you want to permanently remove a result from Google, you need to mark that page as "noindex". Remember: robots.txt does not stop pages getting indexed! More info on Google removals in episode 47 of the Search With Candour podcast.
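
If you want to confirm a page really is marked noindex, check both the robots meta tag and the `X-Robots-Tag` HTTP header. This is a minimal sketch: it reads `robots` and `googlebot` meta tags plus any `X-Robots-Tag` headers, and it ignores user-agent-specific header values such as `googlebot: noindex`. Also keep in mind Google has to be able to crawl the page to see the noindex, so don't block the same URL in robots.txt.

```python
from html.parser import HTMLParser

class RobotsMetaReader(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() in {"robots", "googlebot"}:
            content = attributes.get("content") or ""
            self.directives += [d.strip().lower() for d in content.split(",")]

def is_noindexed(html, headers):
    """True if the meta tags or X-Robots-Tag headers contain noindex (or none)."""
    reader = RobotsMetaReader()
    reader.feed(html)
    reader.close()
    for name, value in headers.items():
        # Simplification: "googlebot: noindex" style values are not parsed.
        if name.lower() == "x-robots-tag":
            reader.directives += [d.strip().lower() for d in value.split(",")]
    return "noindex" in reader.directives or "none" in reader.directives

print(is_noindexed('<meta name="robots" content="noindex, follow">', {}))
print(is_noindexed("<p>Hello</p>", {"X-Robots-Tag": "noindex"}))
```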

Tip #239:

Some sites will serve a different experience or content depending on your user agent. You can get a Chrome extension that allows you to switch to any user agent, including Googlebot. This is an incredibly useful diagnostic tool when you're dealing with sites that need things like server-side rendering, to audit what is being served to Google.
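
If you'd rather script the check than use an extension, you can request a page with a Googlebot user-agent and compare it with what a browser-style request gets. This sketch uses Python's standard `urllib.request` and one of Googlebot's published user-agent strings. It doesn't render JavaScript, and sites that verify Googlebot (for example via reverse DNS) may treat a spoofed request differently, so it's a rough diagnostic only.

```python
import urllib.request

# One of Googlebot's published user-agent strings.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

def build_request(url, user_agent):
    return urllib.request.Request(url, headers={"User-Agent": user_agent})

def fetch_as(url, user_agent, timeout=10):
    """Return the HTML served to the given user agent (needs network access)."""
    with urllib.request.urlopen(build_request(url, user_agent), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")

def compare_user_agents(url):
    """Print a crude size comparison of browser-style vs Googlebot responses."""
    browser_html = fetch_as(url, "Mozilla/5.0")
    googlebot_html = fetch_as(url, GOOGLEBOT_UA)
    print(f"browser UA: {len(browser_html)} chars, Googlebot UA: {len(googlebot_html)} chars")
```

A big difference in size is a prompt to diff the two responses properly, not proof of a problem on its own.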

Tip #240:

Google will tend to "like" your site for specific topics. For this reason, when you set up keyword tracking, it is a good idea to use the tagging system available on most of these platforms as it will allow you to slice ranking data by said topics and monitor performance for opportunities.

Tip #241:

A simple SEO test for you that a lot of businesses can't pass: choose a key phrase you wish to rank for and then simply answer: which one page do I want to send search engine users to when they type this, and does that page reflect the query or intent? "Homepage" is normally not the answer, and if the answer is "it could be this page or this one", then you have some problems to solve!

Tip #242:

If you want a rough understanding of how Google currently views other sites' content, you can use the cache: command when searching. Try cache:domain.com/your-page-url/ and you'll see a snapshot of the page as Google has processed it. If you see huge chunks of content or navigation missing, the site may well have an issue! Note: Google has specific tools for this within GSC for your own site.

Tip #243:

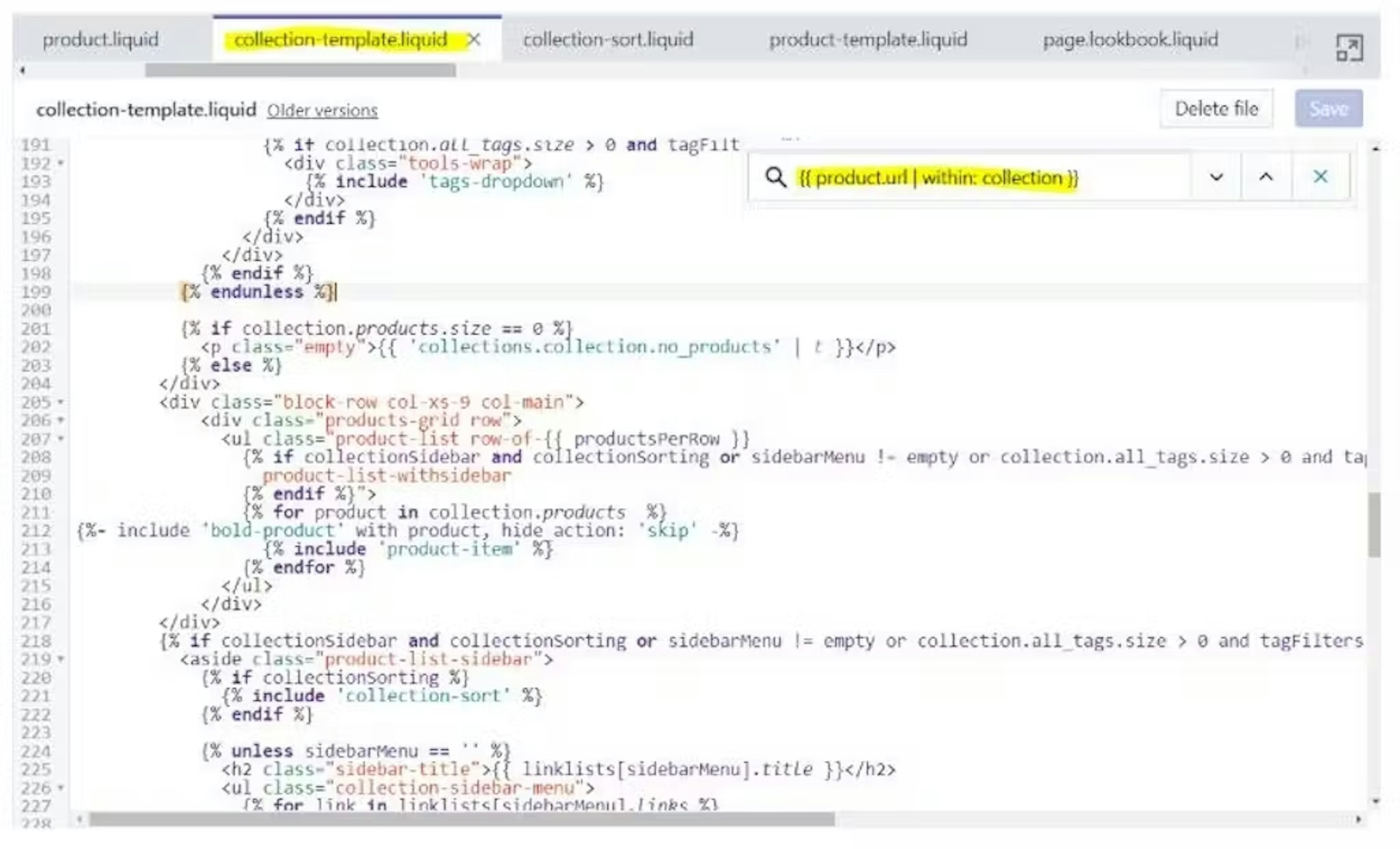

A #Shopify hack: the Shopify platform won't let you edit the robots.txt file (Edit: June 2021 - they do now!); however, you can create a 301 redirect from /robots.txt to a file you control elsewhere. Google will still pick this up and it will function as a robots.txt!

Tip #244:

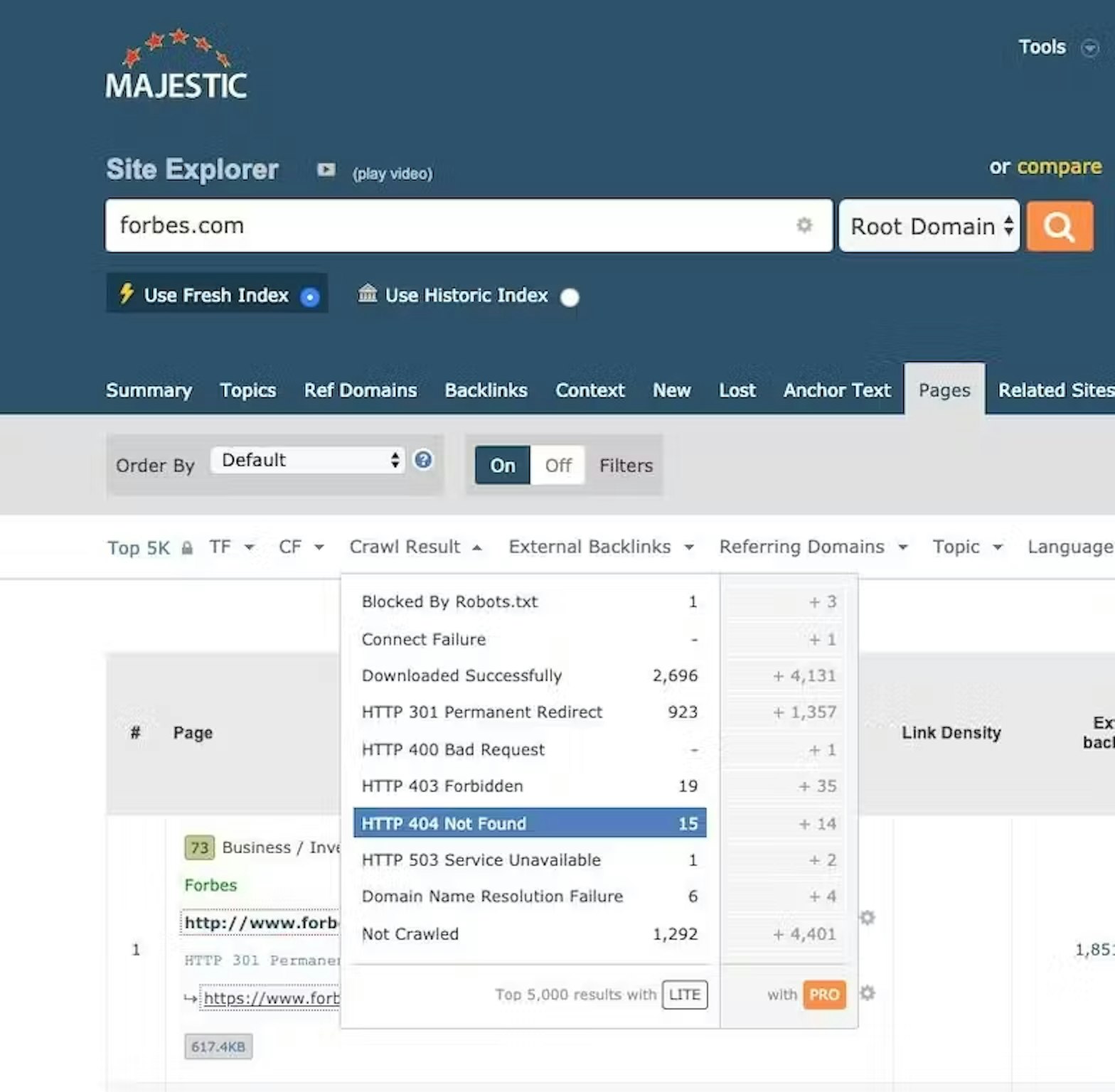

Links are important, and you can get easy links by using a tool like Majestic to find broken incoming links: that is, sites that link to you but currently point at broken pages. You can either get them to update the link (to where it is meant to go) or simply set up a 301 redirect at your end. To do this, log in to Majestic, enter your domain, then go to Pages > Crawl Status > 404 and you'll get a full list of broken incoming links! Easy!
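
For broken URLs where the right destination isn't obvious, a quick string-similarity pass can suggest a starting point. This sketch uses Python's `difflib.get_close_matches` to pair each broken path (for example, from that 404 list) with the most similar live path. The 0.6 cutoff is `difflib`'s default rather than anything tuned, and every suggestion still needs a human sanity check before it becomes a 301.

```python
import difflib

def suggest_redirects(broken_paths, live_paths, cutoff=0.6):
    """Suggest the most similar live path for each broken path (None if nothing is close).

    Plain string similarity only: it knows nothing about page content.
    """
    suggestions = {}
    for path in broken_paths:
        matches = difflib.get_close_matches(path, live_paths, n=1, cutoff=cutoff)
        suggestions[path] = matches[0] if matches else None
    return suggestions

broken = ["/prodcts/blue-widget/", "/old-campaign-2015/"]
live = ["/products/blue-widget/", "/products/red-widget/", "/about/"]
print(suggest_redirects(broken, live))
```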

Tip #245:

The advantage of 302 (temporary) redirects is that they will preserve the redirected URL in Google's index. This can make things a lot easier if you plan to change it again soon and avoid having to set up multiple redirects.

Tip #246:

If your e-commerce site has a faceted navigation/filter that gets you to a product sub-category that has search volume, it is good practice to make sure this page is accessible by standard links (i.e. not checkboxes) and has its own URL so it can rank!
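
One way to give each worthwhile filter combination its own crawlable URL is to build the path deterministically from the selected facets and output a normal link to it. The URL scheme here (`/category/value/value/`) is hypothetical, and you'd only want to do this for combinations that actually have search volume, otherwise you can create an enormous crawl space.

```python
import html
from urllib.parse import quote

def facet_url(category, facets):
    """Build one stable path for a filter combination.

    Values are ordered by facet name, so the same selection always gives the
    same URL whatever order the filters were clicked in.
    Hypothetical scheme: /<category>/<value>/<value>/
    """
    parts = [category] + [facets[name] for name in sorted(facets)]
    return "/" + "/".join(quote(part.lower().replace(" ", "-"), safe="") for part in parts) + "/"

def facet_link(category, facets, label):
    """A plain <a href> that crawlers can follow, unlike a JavaScript-only checkbox."""
    return f'<a href="{facet_url(category, facets)}">{html.escape(label)}</a>'

print(facet_link("Shoes", {"gender": "Mens", "activity": "Running"}, "Running shoes for men"))
```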

Tip #247:

If you do use an automated audit, be aware they don't account for the size of your site, which will often dictate the magnitude of the problem. As an analogy, if you have a leaky tap in your bathroom, this isn't a huge problem - but if your house has 500,000 bathrooms and there is a leaky tap in every one of them, it's a big problem!

Tip #248:

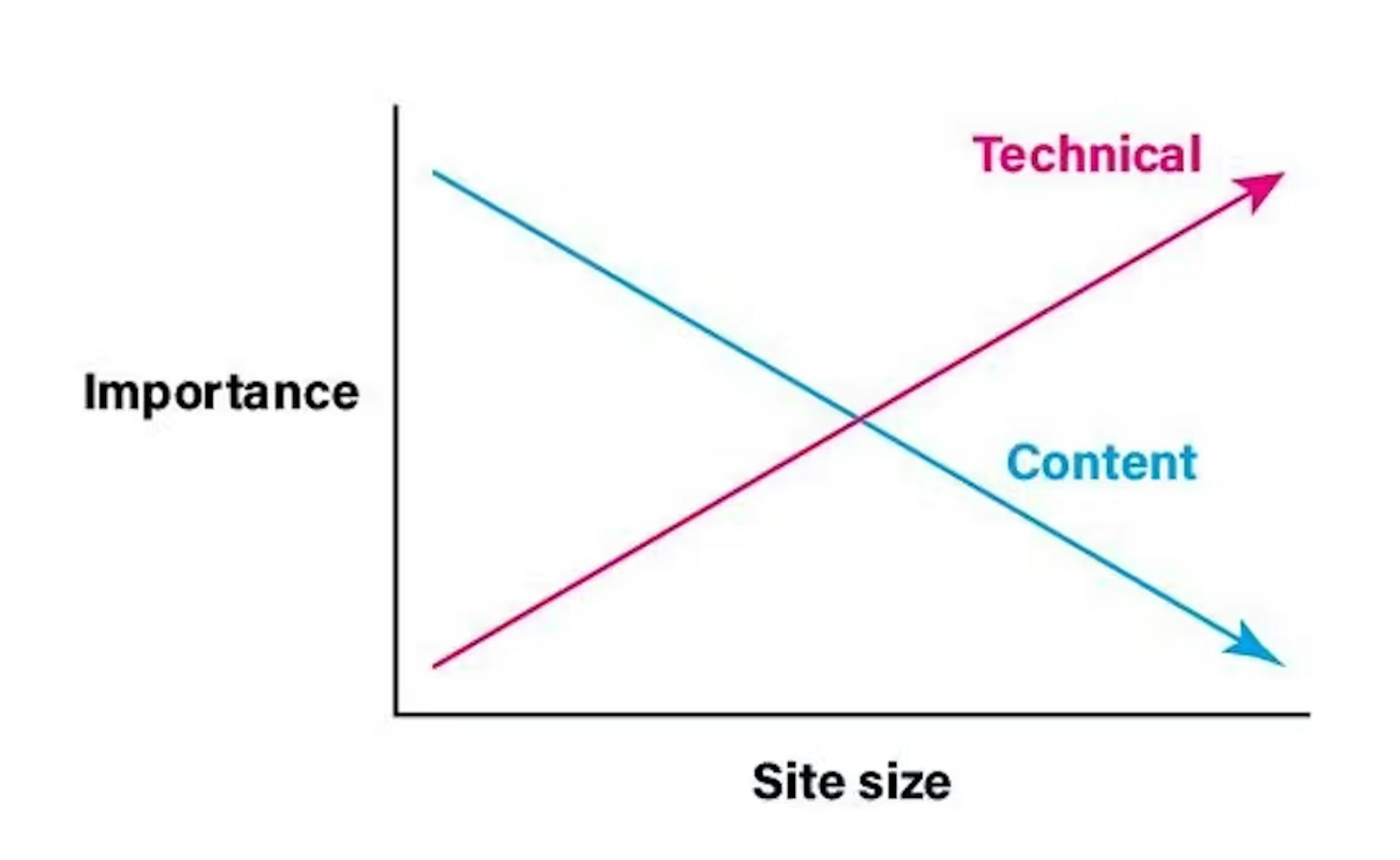

When you're getting an SEO site audit, generally the larger the site is, the more value technical fixes will hold - and the smaller the site is, generally content suggestions will carry more value. Here is a beautiful graph to demonstrate this.

Tip #249:

It is absolutely possible to break Google's webmaster guidelines and trick it into ranking your site better than it deserves. However, all blackhat SEO is temporary. You're playing in the gap between the algorithm's ideal and the technological capability to enforce it. That gap is always shrinking and you'll eventually get caught, so plan for that day!

Tip #250: